Pontryagin's Minimum Principle

Pontryagin's maximum principle is used in optimal control theory to find the best possible control for taking a dynamical system from one state to another, especially in the presence of constraints on the state or input controls. It states that any optimal control, together with the optimal state trajectory, must solve the so-called Hamiltonian system, which is a two-point boundary value problem, and must also satisfy a maximum condition on the control Hamiltonian. These necessary conditions become sufficient under certain convexity conditions on the objective and constraint functions. The maximum principle was formulated in 1956 by the Russian mathematician Lev Pontryagin and his students, and its initial application was to the maximization of the terminal speed of a rocket. The result was derived using ideas from the classical calculus of variations. After a slight perturbation of the optimal control, one considers the first-order term of a Taylor expansion with respect to the pert ...
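
The maximum condition can be illustrated with a minimal sketch. It assumes a hypothetical Hamiltonian H(x, u, λ) = λu − u²/2 and a bounded control set [−1, 1] approximated by a finite grid; neither comes from the excerpt above, and the function names are illustrative only. At each time instant the candidate optimal control is the admissible value that maximizes the Hamiltonian.

```python
def argmax_hamiltonian(hamiltonian, x, lam, controls):
    """Return the admissible control that maximizes H(x, u, lam) on a finite grid."""
    return max(controls, key=lambda u: hamiltonian(x, u, lam))


def example_hamiltonian(x, u, lam):
    # Hypothetical Hamiltonian for dynamics x' = u with running cost u^2 / 2
    # (maximum convention: H = lam * f - cost). It does not depend on x here.
    return lam * u - 0.5 * u * u


# Admissible controls: a grid on [-1, 1] (an assumption for illustration).
controls = [i / 100.0 - 1.0 for i in range(201)]

u_interior = argmax_hamiltonian(example_hamiltonian, 0.0, 0.3, controls)
u_saturated = argmax_hamiltonian(example_hamiltonian, 0.0, 5.0, controls)
print(u_interior, u_saturated)
```

For this particular Hamiltonian the maximizer is the costate value clipped to the control bounds, so a large costate saturates the control at the boundary of the admissible set.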

Optimal Control

Optimal control theory is a branch of mathematical optimization that deals with finding a control for a dynamical system over a period of time such that an objective function is optimized. It has numerous applications in science, engineering, and operations research. For example, the dynamical system might be a spacecraft with controls corresponding to rocket thrusters, and the objective might be to reach the moon with minimum fuel expenditure. Alternatively, the dynamical system could be a nation's economy, with the objective of minimizing unemployment; the controls in this case could be fiscal and monetary policy. A dynamical system may also be introduced to embed operations research problems within the framework of optimal control theory. Optimal control is an extension of the calculus of variations, and is a mathematical optimization method for deriving control policies. The method is largely due to the work of Lev Pontryagin and Richard Bellman in the 1950s, after contributions to calc ...

Pointwise

In mathematics, the qualifier pointwise is used to indicate that a certain property is defined by considering each value f(x) of some function f. An important class of pointwise concepts is the ''pointwise operations'', that is, operations defined on functions by applying the operations to function values separately for each point in the domain of definition. Important relations can also be defined pointwise.

Formal definition: a binary operation o : Y \times Y \to Y on a set Y can be lifted pointwise to an operation O : (X \to Y) \times (X \to Y) \to (X \to Y) on the set X \to Y of all functions from X to Y as follows. Given two functions f_1 : X \to Y and f_2 : X \to Y, define the function O(f_1, f_2) : X \to Y by

:(O(f_1, f_2))(x) = o(f_1(x), f_2(x)) for all x \in X.

Commonly, ''o'' and ''O'' are denoted by the same symbol. A similar definition is used for unary operations ''o'', and for operations of other arity.

Examples:

:\begin{align} (f+g)(x) & = f(x)+g(x) & \text{(pointwise addition)} \\ (f\cdot g)(x) & = f(x) \cdot g(x) & \text{(pointwise multiplication)} \\ (\lambda \cdot f)(x) & = \lambda \cdot f(x) & \text{(pointwise multiplication by a scalar)} \end{align}

where f, g : X \to R. See also pointwise product, and scalar. ...
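
The lifting of a binary operation to functions can be sketched in a few lines of Python. The helper name `lift_pointwise` and the sample functions are illustrative assumptions, not part of the excerpt.

```python
import operator


def lift_pointwise(op):
    """Lift a binary operation on values to a binary operation on functions."""
    def lifted(f, g):
        # The lifted operation applies op to the two function values at each point x.
        return lambda x: op(f(x), g(x))
    return lifted


pointwise_add = lift_pointwise(operator.add)
pointwise_mul = lift_pointwise(operator.mul)


def f(x):
    return x + 1


def g(x):
    return 2 * x


h = pointwise_add(f, g)
print(h(3))                    # (3 + 1) + (2 * 3) = 10
print(pointwise_mul(f, g)(3))  # (3 + 1) * (2 * 3) = 24
```

The lifted functions are ordinary callables, so they can themselves be combined again with the same lifted operations.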

Principles

A principle is a proposition or value that is a guide for behavior or evaluation. In law, it is a rule that has to be or usually is to be followed. It can be desirably followed, or it can be an inevitable consequence of something, such as the laws observed in nature or the way that a system is constructed. The principles of such a system are understood by its users as the essential characteristics of the system, or as reflecting the system's designed purpose, and the effective operation or use of the system would be impossible if any one of the principles were ignored. A system may be explicitly based on and implemented from a document of principles, as was done in IBM's 360/370 ''Principles of Operation''. Examples of principles are entropy in a number of fields, least action in physics, those in descriptive comprehensive and fundamental law: doctrines or assumptions forming normative rules of conduct, separation of church and state in statecraft, the central dogma of molecular biolo ...

Lagrange Multipliers On Banach Spaces

In the field of calculus of variations in mathematics, the method of Lagrange multipliers on Banach spaces can be used to solve certain infinite-dimensional constrained optimization problems. The method is a generalization of the classical method of Lagrange multipliers as used to find extrema of a function of finitely many variables. The Lagrange multiplier theorem for Banach spaces: let ''X'' and ''Y'' be real Banach spaces. Let ''U'' be an open subset of ''X'' and let ''f'' : ''U'' → R be a continuously differentiable function. Let ''g'' : ''U'' → ''Y'' be another continuously differentiable function, the ''constraint'': the objective is to find the extremal points (maxima or minima) of ''f'' subject to the constraint that ''g'' is zero. Suppose that ''u''₀ is a ''constrained extremum'' of ''f'', i.e. an extremum of ''f'' on :g^{-1}(0) = \{ x \in U \mid g(x) = 0 \in Y \} \subseteq U. Suppose also that the Fréchet derivative D''g''(''u''₀) : ''X'' → ''Y'' of ''g'' at ''u''₀ is a surjective linear ma ...

Costate Equation

The costate equation is related to the state equation used in optimal control. It is also referred to as the auxiliary, adjoint, influence, or multiplier equation. It is stated as a vector of first-order differential equations :\dot{\lambda}^{\mathsf{T}}(t) = -\frac{\partial H}{\partial x} where the right-hand side is the vector of partial derivatives of the negative of the Hamiltonian with respect to the state variables. Interpretation: the costate variables \lambda(t) can be interpreted as Lagrange multipliers associated with the state equations. The state equations represent constraints of the minimization problem, and the costate variables represent the marginal cost of violating those constraints; in economic terms the costate variables are the shadow prices. Solution: the state equation is subject to an initial condition and is solved forwards in time. The costate equation must satisfy a transversality condition and is solved backwards in time, from the final time towards the beginning. For more details see Pontryagin ...
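
The forward and backward solution pattern can be sketched on a toy problem. Everything specific here is an assumption chosen for illustration: the scalar dynamics x' = u with x(0) = 1, the cost ∫ (x² + u²)/2 dt on [0, 1] with a free terminal state, explicit Euler steps, and a simple relaxed fixed-point iteration (often called a forward-backward sweep). With H = (x² + u²)/2 + λu the costate equation reads λ' = −x with transversality condition λ(T) = 0, and stationarity in u gives u = −λ.

```python
import math


def forward_backward_sweep(T=1.0, n=1000, x0=1.0, iters=100, relax=0.5):
    """Iterate: state forward, costate backward, then update the control."""
    dt = T / n
    u = [0.0] * (n + 1)  # initial guess for the control
    for _ in range(iters):
        # State equation x' = u, solved forwards from x(0) = x0.
        x = [x0] * (n + 1)
        for k in range(n):
            x[k + 1] = x[k] + dt * u[k]
        # Costate equation lam' = -dH/dx = -x, solved backwards from lam(T) = 0.
        lam = [0.0] * (n + 1)
        for k in range(n, 0, -1):
            lam[k - 1] = lam[k] + dt * x[k]
        # Stationarity dH/du = u + lam = 0, applied with relaxation for stability.
        u = [(1.0 - relax) * uk + relax * (-lk) for uk, lk in zip(u, lam)]
    return x, lam, u


x, lam, u = forward_backward_sweep()
# For this toy problem the exact initial costate is tanh(T).
print(lam[0], math.tanh(1.0))
```

The printed values should agree up to the discretization error of the Euler scheme. For stiffer or longer-horizon problems this naive iteration may fail to converge, and a dedicated boundary value problem solver is usually a better choice.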

Lagrange Multipliers

In mathematical optimization, the method of Lagrange multipliers is a strategy for finding the local maxima and minima of a function subject to equality constraints (i.e., subject to the condition that one or more equations have to be satisfied exactly by the chosen values of the variables). It is named after the mathematician Joseph-Louis Lagrange. The basic idea is to convert a constrained problem into a form such that the derivative test of an unconstrained problem can still be applied. The relationship between the gradient of the function and the gradients of the constraints leads naturally to a reformulation of the original problem, known as the Lagrangian function. The method can be summarized as follows: in order to find the maximum or minimum of a function f(x) subject to the equality constraint g(x) = 0, form the Lagrangian function :\mathcal{L}(x, \lambda) = f(x) + \lambda g(x) and find the stationary points of \mathcal{L} considered as a function of x and the Lagrange mu ...
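
As a small worked instance, consider the hypothetical problem of maximizing f(x, y) = xy subject to g(x, y) = x + y − 10 = 0 (this problem is an assumption for illustration, not taken from the excerpt). With the Lagrangian L = xy + λ(x + y − 10), setting all partial derivatives to zero gives a linear system in (x, y, λ), which the sketch below solves with a small hand-written Gaussian elimination.

```python
def solve_linear(a, b):
    """Solve a * z = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    z = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(m[r][c] * z[c] for c in range(r + 1, n))
        z[r] = (m[r][n] - s) / m[r][r]
    return z


# Stationarity of L(x, y, lam) = x*y + lam*(x + y - 10):
#   dL/dx   = y + lam    = 0
#   dL/dy   = x + lam    = 0
#   dL/dlam = x + y - 10 = 0
a = [[0.0, 1.0, 1.0],
     [1.0, 0.0, 1.0],
     [1.0, 1.0, 0.0]]
b = [0.0, 0.0, 10.0]
x, y, lam = solve_linear(a, b)
print(x, y, lam)  # stationary point x = y = 5 with multiplier lam = -5
```

The stationarity conditions are linear only because f is quadratic and g is linear in this example; in general they form a nonlinear system.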

Lagrangian Mechanics

In physics, Lagrangian mechanics is a formulation of classical mechanics founded on the stationary-action principle (also known as the principle of least action). It was introduced by the Italian-French mathematician and astronomer Joseph-Louis Lagrange in his 1788 work, ''Mécanique analytique''. Lagrangian mechanics describes a mechanical system as a pair (M,L) consisting of a configuration space M and a smooth function L within that space called a ''Lagrangian''. By convention, L = T - V, where T and V are the kinetic and potential energy of the system, respectively. The stationary action principle requires that the action functional of the system derived from L must remain at a stationary point (a maximum, minimum, or saddle) throughout the time evolution of the system. This constraint allows the calculation of the equations of motion of the system using Lagrange's equations. Introduction: suppose there exists a bead sliding around on a wire, or a swinging simple p ...

Hamilton–Jacobi–Bellman Equation

In optimal control theory, the Hamilton–Jacobi–Bellman (HJB) equation gives a necessary and sufficient condition for optimality of a control with respect to a loss function. It is, in general, a nonlinear partial differential equation in the value function, which means its solution is the value function itself. Once this solution is known, it can be used to obtain the optimal control by taking the maximizer (or minimizer) of the Hamiltonian involved in the HJB equation. The equation is a result of the theory of dynamic programming, which was pioneered in the 1950s by Richard Bellman and coworkers. The connection to the Hamilton–Jacobi equation from classical physics was first drawn by Rudolf Kálmán. In discrete-time problems, the corresponding difference equation is usually referred to as the Bellman equation. While classical variational problems, such as the brachistochrone problem, can be solved using the Hamilton–Jacobi–Bellman equation, the method can be applied to a ...
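
A case where the HJB equation can be solved in closed form is the scalar, infinite-horizon linear-quadratic problem. The setup below is an assumption for illustration: dynamics x' = ax + bu and running cost qx² + ru² with q, r > 0 and b ≠ 0. Trying the value function V(x) = px², the stationary HJB equation 0 = min over u of [qx² + ru² + V'(x)(ax + bu)] has minimizer u = −(bp/r)x and reduces to the quadratic q + 2ap − b²p²/r = 0.

```python
import math


def value_coefficient(a, b, q, r):
    """Positive root p of q + 2*a*p - (b**2 / r) * p**2 = 0, so that V(x) = p * x**2."""
    return r * (a + math.sqrt(a * a + b * b * q / r)) / (b * b)


def optimal_control(p, b, r, x):
    """Minimizer of the Hamiltonian q*x^2 + r*u^2 + V'(x)*(a*x + b*u) over u."""
    return -(b * p / r) * x


def hjb_residual(p, a, b, q, r, x):
    """Left-hand side of the stationary HJB equation evaluated at the minimizing u."""
    u = optimal_control(p, b, r, x)
    dv_dx = 2.0 * p * x
    return q * x * x + r * u * u + dv_dx * (a * x + b * u)


p = value_coefficient(a=1.0, b=2.0, q=3.0, r=0.5)
print(p, hjb_residual(p, 1.0, 2.0, 3.0, 0.5, x=2.0))
```

A residual of zero (up to rounding) at every state x indicates that the candidate value function satisfies the HJB equation for this toy problem.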

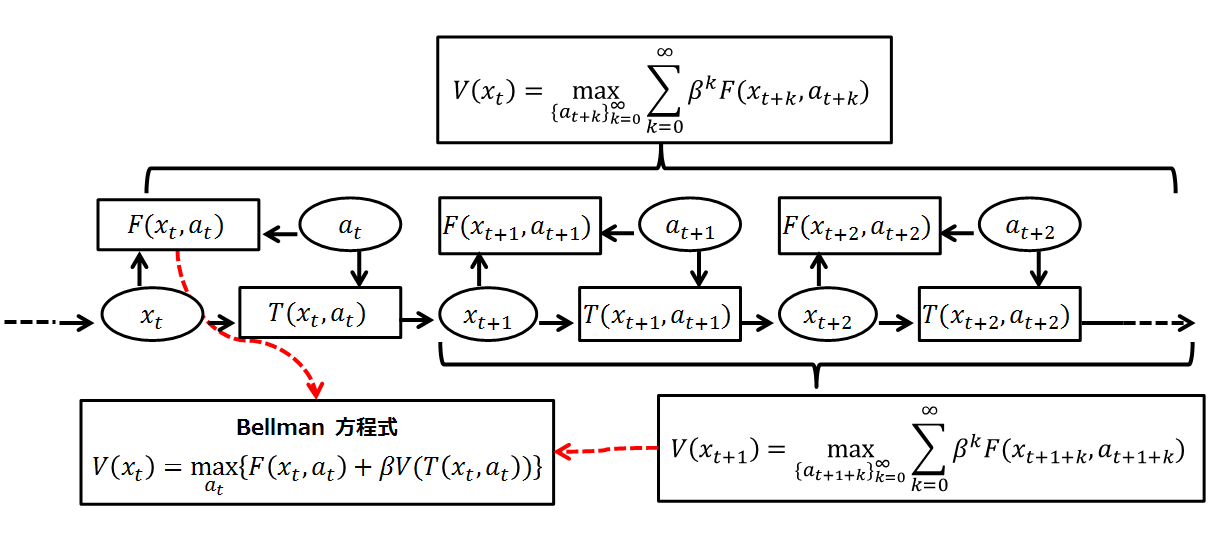

Bellman's Principle Of Optimality

A Bellman equation, named after Richard E. Bellman, is a necessary condition for optimality associated with the mathematical optimization method known as dynamic programming. It writes the "value" of a decision problem at a certain point in time in terms of the payoff from some initial choices and the "value" of the remaining decision problem that results from those initial choices. This breaks a dynamic optimization problem into a sequence of simpler subproblems, as Bellman's "principle of optimality" prescribes. The equation applies to algebraic structures with a total ordering; for algebraic structures with a partial ordering, the generic Bellman's equation can be used. The Bellman equation was first applied to engineering control theory and to other topics in applied mathematics, and subsequently became an important tool in economic theory, though the basic concepts of dynamic programming are prefigured in John von Neumann and Oskar Morgenstern's ''Theory of Games and Eco ...
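
The recursive structure can be sketched on a tiny shortest-path problem. The graph, its edge costs, and the function names are hypothetical, chosen only for illustration. The value of a node is the minimum over outgoing edges of the immediate cost plus the value of the node reached, which is the Bellman equation for this problem.

```python
from functools import lru_cache

# Hypothetical directed acyclic graph: node -> {successor: edge cost}.
COSTS = {
    "A": {"B": 1, "C": 4},
    "B": {"C": 2, "D": 6},
    "C": {"D": 3},
    "D": {},
}
GOAL = "D"


@lru_cache(maxsize=None)
def value(node):
    """Minimum total cost from node to GOAL via the Bellman recursion."""
    if node == GOAL:
        return 0
    # Immediate cost of each choice plus the value of the remaining problem.
    return min(cost + value(nxt) for nxt, cost in COSTS[node].items())


def best_successor(node):
    """Greedy choice with respect to the value function (an optimal policy)."""
    return min(COSTS[node], key=lambda nxt: COSTS[node][nxt] + value(nxt))


print(value("A"), best_successor("A"))  # 6 B
```

Memoization with `lru_cache` ensures each subproblem is solved once, which is what makes the decomposition into subproblems pay off.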

Function Space

In mathematics, a function space is a set of functions between two fixed sets. Often, the domain and/or codomain will have additional structure which is inherited by the function space. For example, the set of functions from any set into a vector space has a natural vector space structure given by pointwise addition and scalar multiplication. In other scenarios, the function space might inherit a topological or metric structure, hence the name function ''space''. In linear algebra: let V be a vector space over a field F and let X be any set. The functions X → V can be given the structure of a vector space over F where the operations are defined pointwise, that is, for any f, g : X → V, any x in X, and any c in F, define \begin{align} (f+g)(x) &= f(x)+g(x) \\ (c\cdot f)(x) &= c\cdot f(x) \end{align} When the domain X has additional structure, one might consider instead the subset (or subspace) of all such functions which respect that structure. For example, if X is also a vector space over F, the ...

Dynamical System

In mathematics, a dynamical system is a system in which a function describes the time dependence of a point in an ambient space. Examples include the mathematical models that describe the swinging of a clock pendulum, the flow of water in a pipe, the random motion of particles in the air, and the number of fish each springtime in a lake. The most general definition unifies several concepts in mathematics such as ordinary differential equations and ergodic theory by allowing different choices of the space and how time is measured. Time can be measured by integers, by real or complex numbers or can be a more general algebraic object, losing the memory of its physical origin, and the space may be a manifold or simply a set, without the need of a smooth space-time structure defined on it. At any given time, a dynamical system has a State ...
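
A discrete-time example, with time measured by integers, can be sketched as repeated application of an evolution rule to a state. The logistic rule and its parameter below are assumptions chosen for illustration, in the spirit of the fish population example; they are not taken from the excerpt.

```python
def iterate(rule, state, steps):
    """Apply the evolution rule repeatedly and record the trajectory."""
    trajectory = [state]
    for _ in range(steps):
        state = rule(state)
        trajectory.append(state)
    return trajectory


def logistic(x, r=2.0):
    # Hypothetical population model: x is the population as a fraction of
    # the carrying capacity, r is the growth parameter.
    return r * x * (1.0 - x)


trajectory = iterate(logistic, 0.1, 50)
print(trajectory[:4], trajectory[-1])
```

With r = 2 the trajectory settles at the fixed point 0.5; other parameter values can produce oscillating or chaotic trajectories from the same rule.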