Perceptual-based 3D Sound Localization

Perceptual-based 3D sound localization is the application of knowledge of the human auditory system to develop 3D sound localization technology.

Motivation and applications

Human listeners combine information from two ears to localize and separate sound sources originating in different locations in a process called binaural hearing. The powerful signal processing methods found in the neural systems and brains of humans and other animals are flexible, environmentally adaptable, and take place rapidly and seemingly without effort. Emulating the mechanisms of binaural hearing can improve recognition accuracy and signal separation in DSP algorithms, especially in noisy environments. Furthermore, by understanding and exploiting biological mechanisms of sound localization, virtual sound scenes may be rendered with more perceptually relevant methods, allowing listeners to accurately perceive the locations of auditory events. One way to obtain the perceptual-based sound localization is ...

Human Auditory System

The auditory system is the sensory system for the sense of hearing. It includes both the sensory organs (the ears) and the auditory parts of the sensory system.

System overview

The outer ear funnels sound vibrations to the eardrum, increasing the sound pressure in the middle frequency range. The middle-ear ossicles further amplify the vibration pressure roughly 20 times. The base of the stapes couples vibrations into the cochlea via the oval window, which vibrates the perilymph liquid (present throughout the inner ear) and causes the round window to bulge out as the oval window bulges in. The vestibular and tympanic ducts are filled with perilymph, and the smaller cochlear duct between them is filled with endolymph, a fluid with a very different ion concentration and voltage. Vestibular duct perilymph vibrations bend organ of Corti outer cells (4 lines), causing prestin to be released in cell tips. This causes the cells to be chemically elongated and shrunk (somatic motor), and ...

3D Sound Localization

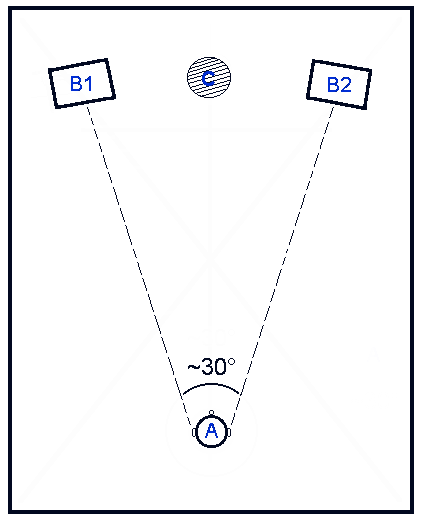

3D sound localization refers to an acoustic technology that is used to locate the source of a sound in three-dimensional space. The source location is usually determined by the direction of the incoming sound waves (horizontal and vertical angles) and the distance between the source and the sensors. It involves both the structural arrangement of the sensors and signal processing techniques. Most mammals (including humans) use binaural hearing to localize sound, comparing the information received from each ear in a complex process that involves a significant amount of synthesis. It is difficult to localize using monaural hearing, especially in 3D space.

Technology

Sound localization technology is used in some audio and acoustics fields, such as hearing aids, surveillance and navigation. Existing real-time passive sound localization systems are mainly based on the time-difference-of-arrival (TDOA) approach, limiting sound localization to two-di ...
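As a rough illustration of the TDOA idea for a single sensor pair, the sketch below estimates the delay between two signals by brute-force cross-correlation and converts it to an arrival angle. The function names, the far-field (plane-wave) assumption, and the 343 m/s speed of sound are assumptions made for this example, not details taken from the entry above.

```python
import math

def cross_correlation_lag(x, y, max_lag):
    """Return the lag (in samples) at which y best matches x.

    A positive lag means y is a delayed copy of x.
    """
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        score = 0.0
        for i in range(len(x)):
            j = i + lag
            if 0 <= j < len(y):
                score += x[i] * y[j]
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag

def far_field_angle_deg(tdoa_s, sensor_spacing_m, speed_of_sound=343.0):
    """Arrival angle relative to broadside for a plane wave (assumed model)."""
    s = speed_of_sound * tdoa_s / sensor_spacing_m
    s = max(-1.0, min(1.0, s))  # guard against rounding outside [-1, 1]
    return math.degrees(math.asin(s))

# Toy signals: the second sensor hears the same pulse two samples later.
sensor_a = [0, 0, 1, 2, 1, 0, 0, 0, 0, 0]
sensor_b = [0, 0, 0, 0, 1, 2, 1, 0, 0, 0]
lag = cross_correlation_lag(sensor_a, sensor_b, max_lag=4)
```

With a known sample rate, `lag / sample_rate` gives the TDOA in seconds that `far_field_angle_deg` expects. A single pair only yields one angle, so practical systems combine several sensor pairs.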

Digital Signal Processing

Digital signal processing (DSP) is the use of digital processing, such as by computers or more specialized digital signal processors, to perform a wide variety of signal processing operations. The digital signals processed in this manner are a sequence of numbers that represent samples of a continuous variable in a domain such as time, space, or frequency. In digital electronics, a digital signal is represented as a pulse train, which is typically generated by the switching of a transistor. Digital signal processing and analog signal processing are subfields of signal processing. DSP applications include audio and speech processing, sonar, radar and other sensor array processing, spectral density estimation, statistical signal processing, digital image processing, data compression, video coding, audio coding, image compression, signal processing for telecommunications, control systems, biomedical engineering, and seismology ...
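To make "a sequence of numbers that represent samples of a continuous variable" concrete, here is a minimal sketch that samples a sine wave at a fixed rate. The function name and parameters are illustrative choices for this example only.

```python
import math

def sample_sine(freq_hz, sample_rate_hz, num_samples, amplitude=1.0):
    """Evaluate a continuous sine wave at the instants n / sample_rate_hz."""
    return [
        amplitude * math.sin(2.0 * math.pi * freq_hz * n / sample_rate_hz)
        for n in range(num_samples)
    ]

# One cycle of a 1 Hz tone sampled four times per second.
samples = sample_sine(freq_hz=1.0, sample_rate_hz=4.0, num_samples=4)
```

The resulting list is the digital signal; every later DSP operation (filtering, correlation, spectral estimation) works on such sequences rather than on the original waveform.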

Psychoacoustics

Psychoacoustics is the branch of psychophysics involving the scientific study of the perception of sound by the human auditory system. It is the branch of science studying the psychological responses associated with sound including noise, speech, and music. Psychoacoustics is an interdisciplinary field including psychology, acoustics, electronic engineering, physics, biology, physiology, and computer science.

Background

Hearing is not a purely mechanical phenomenon of wave propagation, but is also a sensory and perceptual event. When a person hears something, that something arrives at the ear as a mechanical sound wave traveling through the air, but within the ear it is transformed into neural action potentials. These nerve pulses then travel to the brain where they are perceived. Hence, in many problems in acoustics, such as audio processing, it is advantageous to take into account not just the mechanics of the environment, but also the fact that both the ear ...

Interaural Time Difference

The interaural time difference (ITD), in humans or animals, is the difference in arrival time of a sound between the two ears. It is important in the localization of sounds, as it provides a cue to the direction or angle of the sound source from the head. If a signal arrives at the head from one side, the signal has further to travel to reach the far ear than the near ear. This path-length difference results in a time difference between the sound's arrivals at the ears, which is detected and aids the process of identifying the direction of the sound source. When a signal is produced in the horizontal plane, its angle in relation to the head is referred to as its azimuth, with 0 degrees (0°) azimuth being directly in front of the listener, 90° to the right, and 180° being directly behind.

Different methods for measuring ITDs

* For an abrupt stimulus such as a click, onset ITDs are measured. An onset ITD is the time difference between the onset of the s ...
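The path-length argument above can be turned into numbers with Woodworth's spherical-head approximation, ITD = (a / c)(θ + sin θ). That model is brought in here for illustration and is not part of the entry itself. The head radius of 8.75 cm and the 343 m/s speed of sound are assumed typical values, and the function name is hypothetical.

```python
import math

def woodworth_itd(azimuth_deg, head_radius_m=0.0875, speed_of_sound=343.0):
    """Approximate ITD in seconds for a distant source and a spherical head.

    azimuth_deg: 0 = straight ahead, +90 = directly right, -90 = directly left.
    A positive result means the sound reaches the right ear first.
    """
    if not -90.0 <= azimuth_deg <= 90.0:
        raise ValueError("this sketch only covers the frontal half-plane")
    theta = math.radians(abs(azimuth_deg))
    itd = (head_radius_m / speed_of_sound) * (theta + math.sin(theta))
    return itd if azimuth_deg >= 0 else -itd

itd_front = woodworth_itd(0.0)   # no path-length difference
itd_right = woodworth_itd(90.0)  # largest difference, roughly 0.66 ms
```

Under these assumptions the ITD grows from zero straight ahead to roughly two thirds of a millisecond for a source directly to one side.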

Interaural Intensity Difference

Sound localization is a listener's ability to identify the location or origin of a detected sound in direction and distance. The sound localization mechanisms of the mammalian auditory system have been extensively studied. The auditory system uses several cues for sound source localization, including time difference and level difference (or intensity difference) between the ears, and spectral information. Other animals, such as birds and reptiles, also use them but they may use them differently, and some also have localization cues which are absent in the human auditory system, such as the effects of ear movements. Animals with the ability to localize sound have a clear evolutionary advantage.

How sound reaches the brain

Sound is the perceptual result of mechanical vibrations traveling through a medium such as air or water. Through the mechanisms of compression and rarefaction, sound waves travel through the air, bounce off the pinna and concha of the exterior ear, and ent ...

Pinna (anatomy)

The auricle or auricula is the visible part of the ear that is outside the head. It is also called the pinna (Latin for 'wing' or 'fin'; plural: pinnae), a term that is used more in zoology.

Structure

The diagram shows the shape and location of most of these components:

* Antihelix forms a 'Y' shape where the upper parts are:
** Superior crus (to the left of the fossa triangularis in the diagram)
** Inferior crus (to the right of the fossa triangularis in the diagram)
* Antitragus is below the tragus
* Aperture is the entrance to the ear canal
* Auricular sulcus is the depression behind the ear next to the head
* Concha is the hollow next to the ear canal
* Conchal angle is the angle that the back of the concha makes with the side of the head
* Crus of the helix is just above the tragus
* Cymba conchae is the narrowest end of the concha
* External auditory meatus is the ear canal
* Fossa triangularis is the depression ...

Precedence Effect

The precedence effect or law of the first wavefront is a binaural psychoacoustical effect concerning sound reflection and the perception of echoes. When two versions of the same sound are presented with a sufficiently short time delay between them (below the listener's echo threshold), listeners perceive a single auditory event; its perceived spatial location is dominated by the location of the first-arriving sound (the first wavefront). The lagging sound also affects the perceived location; however, its effect is mostly suppressed by the first-arriving sound. The Haas effect was described in 1949 by Helmut Haas in his Ph.D. thesis. The term "Haas effect" is often loosely taken to include the precedence effect which underlies it.

History

Joseph Henry published "On The Limit of Perceptibility of a Direct and Reflected Sound" in 1851. The "law of the first wavefront" was described and named in 1948 by Lothar Cremer. The "precedence effect" was described and named in 1949 b ...

HRTF

A head-related transfer function (HRTF) is a response that characterizes how an ear receives a sound from a point in space. As sound strikes the listener, the size and shape of the head, ears, ear canal, density of the head, size and shape of nasal and oral cavities, all transform the sound and affect how it is perceived, boosting some frequencies and attenuating others. Generally speaking, the HRTF boosts frequencies from 2–5 kHz with a primary resonance of +17 dB at 2,700 Hz. But the response curve is more complex than a single bump, affects a broad frequency spectrum, and varies significantly from person to person. A pair of HRTFs for two ears can be used to synthesize a binaural sound that seems to come from a particular point in space. It is a transfer function, describing how a sound from a specific point will arrive at the ear (generally at the outer end of the auditory canal). Some consumer home entertainment products designed to reproduce surround soun ...
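Binaural synthesis with a pair of HRTFs is commonly carried out in the time domain by convolving a mono signal with the corresponding head-related impulse responses (HRIRs, the impulse-response counterparts of the HRTFs). The sketch below shows that step only. The two short HRIRs are made-up placeholder values, not measured data, and the function names are hypothetical.

```python
def convolve(signal, impulse_response):
    """Direct-form linear convolution of two sequences."""
    out = [0.0] * (len(signal) + len(impulse_response) - 1)
    for i, s in enumerate(signal):
        for j, h in enumerate(impulse_response):
            out[i + j] += s * h
    return out

def render_binaural(mono, hrir_left, hrir_right):
    """Filter one mono signal through a left/right HRIR pair."""
    return convolve(mono, hrir_left), convolve(mono, hrir_right)

# Placeholder HRIRs (assumed values): the right ear gets the sound
# two samples later and at half the amplitude, as if the source were on the left.
toy_hrir_left = [1.0]
toy_hrir_right = [0.0, 0.0, 0.5]

left, right = render_binaural([1.0, 2.0, 3.0], toy_hrir_left, toy_hrir_right)
```

Real HRIRs are hundreds of samples long and differ per direction and per listener, so practical renderers usually switch to FFT-based convolution and interpolate between measured directions.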

Dummy Head Recording

Binaural recording is a method of recording sound that uses two microphones, arranged with the intent to create a 3D stereo sound sensation for the listener of actually being in the room with the performers or instruments. This effect is often created using a technique known as dummy head recording, wherein a mannequin head is fitted with a microphone in each ear. Binaural recording is intended for replay using headphones and will not translate properly over stereo speakers. This idea of a three-dimensional or "internal" form of sound has also translated into useful advancement of technology in many things such as stethoscopes creating "in-head" acoustics and IMAX movies being able to create a three-dimensional acoustic experience. The term "binaural" has frequently been confused as a synonym for the word "stereo", due in part to systematic use in the mid-1950s by the recording industry, as a marketing buzzword. Conventional stereo recordings do not factor in natural ear sp ...

Interaural Level Difference

Sound localization is a listener's ability to identify the location or origin of a detected sound in direction and distance. The sound localization mechanisms of the mammalian auditory system have been extensively studied. The auditory system uses several cues for sound source localization, including time difference and level difference (or intensity difference) between the ears, and spectral information. Other animals, such as birds and reptiles, also use them but they may use them differently, and some also have localization cues which are absent in the human auditory system, such as the effects of ear movements. Animals with the ability to localize sound have a clear evolutionary advantage.

How sound reaches the brain

Sound is the perceptual result of mechanical vibrations traveling through a medium such as air or water. Through the mechanisms of compression and rarefaction, sound waves travel through the air, bounce off the pinna and concha of the exterior ear, and ent ...
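The level-difference cue mentioned above is usually expressed in decibels. As a minimal sketch, assuming the two ear signals are available as lists of samples, the level difference can be computed from their RMS amplitudes. The function names are illustrative.

```python
import math

def rms(samples):
    """Root-mean-square amplitude of a sequence of samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))

def level_difference_db(left, right):
    """Left-minus-right level difference in dB (positive: left ear is louder)."""
    return 20.0 * math.log10(rms(left) / rms(right))

# Toy ear signals: the left ear receives twice the amplitude of the right.
right_ear = [0.1, -0.2, 0.3, -0.1]
left_ear = [2.0 * s for s in right_ear]
ild = level_difference_db(left_ear, right_ear)
```

Doubling the amplitude at one ear corresponds to a difference of about 6 dB.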

Azimuth

An azimuth is the horizontal angle from a cardinal direction, most commonly north, in a local or observer-centric spherical coordinate system. Mathematically, the relative position vector from an observer (origin) to a point of interest is projected perpendicularly onto a reference plane (the horizontal plane); the angle between the projected vector and a reference vector on the reference plane is called the azimuth. When used as a celestial coordinate, the azimuth is the horizontal direction of a star or other astronomical object in the sky. The star is the point of interest, the reference plane is the local area (e.g. a circular area with a 5 km radius at sea level) around an observer on Earth's surface, and the reference vector points to true north. The azimuth is the angle between the north vector and the star's vector on the horizontal plane. Azimuth is usually measured in degrees (°), in the positive range 0° to 360° or in the signed ...
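The projection described above can be sketched in a few lines. The example assumes a local east/north/up coordinate frame and the common navigation convention of measuring clockwise from north. Both are assumptions of this sketch, as is the function name.

```python
import math

def azimuth_deg(east, north, up=0.0):
    """Azimuth of a relative position vector, in degrees within [0, 360).

    The `up` component is discarded: dropping it is the perpendicular
    projection onto the horizontal reference plane.
    """
    if east == 0 and north == 0:
        raise ValueError("azimuth is undefined for a vertical vector")
    return math.degrees(math.atan2(east, north)) % 360.0

due_east = azimuth_deg(1.0, 0.0)         # 90 degrees
overhead_north = azimuth_deg(0.0, 1.0, 5.0)  # height does not matter: 0 degrees
```

Using `atan2(east, north)` rather than `atan2(north, east)` is what makes north the zero direction and makes the angle increase toward east.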