|

Parallel Slowdown

Parallel slowdown is a phenomenon in parallel computing where parallelization of a parallel algorithm In computer science, a parallel algorithm, as opposed to a traditional serial algorithm, is an algorithm which can do multiple operations in a given time. It has been a tradition of computer science to describe serial algorithms in abstract mach ... beyond a certain point causes the program to run slower (take more time to run to completion). Parallel slowdown is typically the result of a communications bottleneck. As more processor nodes are added, each processing node spends progressively more time doing communication than useful processing. At some point, the communications overhead created by adding another processing node surpasses the increased processing power that node provides, and parallel slowdown occurs. Parallel slowdown occurs when the algorithm requires significant communication, particularly of intermediate results. Some problems, known as embarrassingly p ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Parallelization Diagram

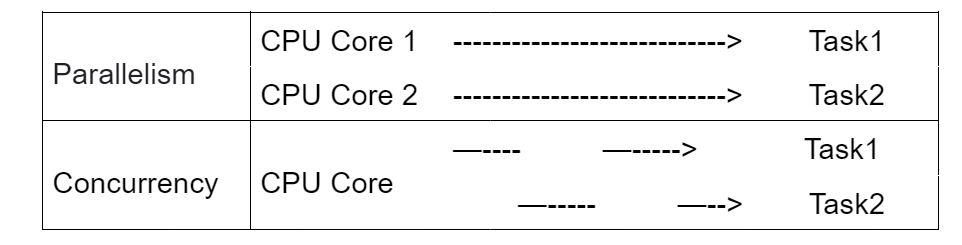

Parallel computing is a type of computation in which many calculations or processes are carried out simultaneously. Large problems can often be divided into smaller ones, which can then be solved at the same time. There are several different forms of parallel computing: bit-level, instruction-level, data, and task parallelism. Parallelism has long been employed in high-performance computing, but has gained broader interest due to the physical constraints preventing frequency scaling.S.V. Adve ''et al.'' (November 2008)"Parallel Computing Research at Illinois: The UPCRC Agenda" (PDF). Parallel@Illinois, University of Illinois at Urbana-Champaign. "The main techniques for these performance benefits—increased clock frequency and smarter but increasingly complex architectures—are now hitting the so-called power wall. The computer industry has accepted that future performance increases must largely come from increasing the number of processors (or cores) on a die, rather than mak ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Parallel Computing

Parallel computing is a type of computing, computation in which many calculations or Process (computing), processes are carried out simultaneously. Large problems can often be divided into smaller ones, which can then be solved at the same time. There are several different forms of parallel computing: Bit-level parallelism, bit-level, Instruction-level parallelism, instruction-level, Data parallelism, data, and task parallelism. Parallelism has long been employed in high-performance computing, but has gained broader interest due to the physical constraints preventing frequency scaling.S.V. Adve ''et al.'' (November 2008)"Parallel Computing Research at Illinois: The UPCRC Agenda" (PDF). Parallel@Illinois, University of Illinois at Urbana-Champaign. "The main techniques for these performance benefits—increased clock frequency and smarter but increasingly complex architectures—are now hitting the so-called power wall. The computer industry has accepted that future performance inc ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Parallel Algorithm

In computer science, a parallel algorithm, as opposed to a traditional serial algorithm, is an algorithm which can do multiple operations in a given time. It has been a tradition of computer science to describe serial algorithms in abstract machine models, often the one known as random-access machine. Similarly, many computer science researchers have used a so-called parallel random-access machine (PRAM) as a parallel abstract machine (shared-memory). Many parallel algorithms are executed concurrently – though in general concurrent algorithms are a distinct concept – and thus these concepts are often conflated, with which aspect of an algorithm is parallel and which is concurrent not being clearly distinguished. Further, non-parallel, non-concurrent algorithms are often referred to as " sequential algorithms", by contrast with concurrent algorithms. Parallelizability Algorithms vary significantly in how parallelizable they are, ranging from easily parallelizable to compl ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Processor Node

This glossary of computer hardware terms is a list of definitions of terms and concepts related to computer hardware, i.e. the physical and structural components of computers, architectural issues, and peripheral devices. A B C D E F G H I J K L M N O P R S T U V W Z See also *List of computer term etymologies *Glossary of backup terms *Glossary of computer graphics This is a glossary of terms relating to computer graphics. For more general c ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Embarrassingly Parallel Problem

In parallel computing, an embarrassingly parallel workload or problem (also called embarrassingly parallelizable, perfectly parallel, delightfully parallel or pleasingly parallel) is one where little or no effort is needed to split the problem into a number of parallel tasks. This is due to minimal or no dependency upon communication between the parallel tasks, or for results between them.Section 1.4.4 of: These differ from distributed computing problems, which need communication between tasks, especially communication of intermediate results. They are easier to perform on server farms which lack the special infrastructure used in a true supercomputer cluster. They are well-suited to large, Internet-based volunteer computing platforms such as BOINC, and suffer less from parallel slowdown. The opposite of embarrassingly parallel problems are inherently serial problems, which cannot be parallelized at all. A common example of an embarrassingly parallel problem is 3D video rendering ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Mythical Man Month

''The Mythical Man-Month: Essays on Software Engineering'' is a book on software engineering and project management by Fred Brooks first published in 1975, with subsequent editions in 1982 and 1995. Its central theme is that adding manpower to a software project that is behind schedule delays it even longer. This idea is known as Brooks's law, and is presented along with the second-system effect and advocacy of prototyping. Brooks's observations are based on his experiences at IBM while managing the development of OS/360. He had added more programmers to a project falling behind schedule, a decision that he would later conclude had, counter-intuitively, delayed the project even further. He also made the mistake of asserting that one project—involved in writing an ALGOL compiler—would require six months, regardless of the number of workers involved (it required longer). The tendency for managers to repeat such errors in project development led Brooks to quip that his book is cal ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |