
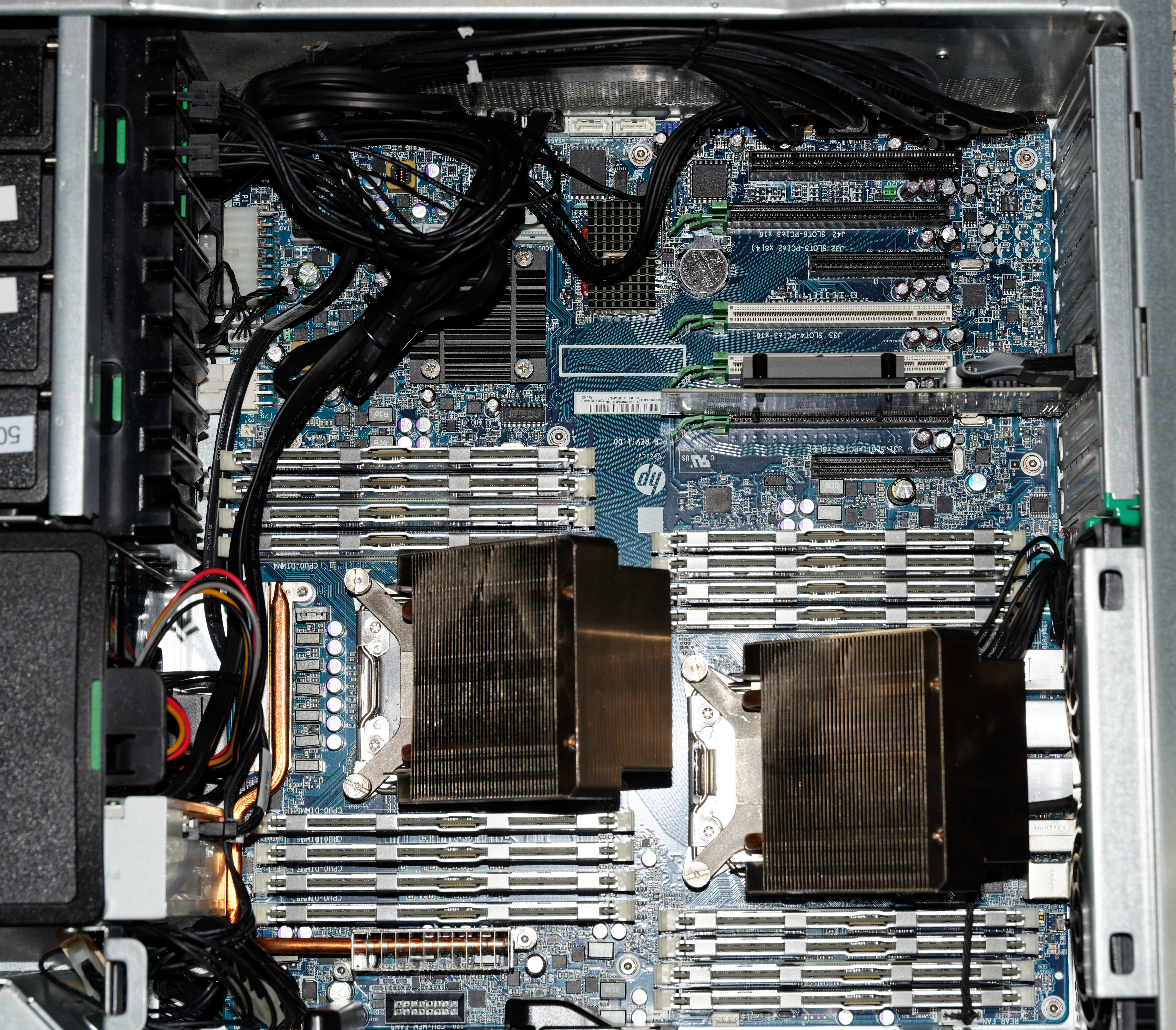

Parallel Database

A parallel database system seeks to improve performance through parallelization of various operations, such as loading data, building indexes and evaluating queries. Although data may be stored in a distributed fashion, the distribution is governed solely by performance considerations. Parallel databases improve processing and input/output speeds by using multiple CPUs and disks in parallel. Centralized and client–server database systems are not powerful enough to handle such applications. In parallel processing, many operations are performed simultaneously, as opposed to serial processing, in which the computational steps are performed sequentially. Parallel databases can be roughly divided into two groups. The first group is the multiprocessor architecture, the alternatives of which are the following. Shared-memory architecture: multiple processors share the main memory (RAM) space but each processor has its own disk (HDD). If many processes run simultan ...
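The idea of evaluating a query over several disks at once can be sketched as follows. This is a minimal illustration, not how any particular database engine works: the round-robin partitioning, the `orders` rows, and the function names are all assumptions made for the example, and Python threads stand in for the separate CPUs and disks of a real system.

```python
from concurrent.futures import ThreadPoolExecutor


def partition_round_robin(rows, n_partitions):
    """Spread rows across n_partitions, as if each partition lived on its own disk."""
    partitions = [[] for _ in range(n_partitions)]
    for i, row in enumerate(rows):
        partitions[i % n_partitions].append(row)
    return partitions


def scan_partition(partition, min_amount):
    """Apply the filter and compute a partial (sum, count) on one partition."""
    matching = [row["amount"] for row in partition if row["amount"] >= min_amount]
    return sum(matching), len(matching)


def parallel_total(rows, min_amount, n_partitions=4):
    """Scan every partition concurrently, then merge the partial aggregates."""
    partitions = partition_round_robin(rows, n_partitions)
    with ThreadPoolExecutor(max_workers=n_partitions) as pool:
        partials = list(pool.map(lambda p: scan_partition(p, min_amount), partitions))
    total = sum(s for s, _ in partials)
    count = sum(c for _, c in partials)
    return total, count


# Hypothetical table: ten orders with amounts 10, 20, ..., 100.
orders = [{"id": i, "amount": i * 10} for i in range(1, 11)]
result = parallel_total(orders, min_amount=50)
```

Each worker touches only its own partition, and only the small partial results are merged at the end. The merged answer does not depend on how many partitions are used.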

Database

In computing, a database is an organized collection of data or a type of data store based on the use of a database management system (DBMS), the software that interacts with end users, applications, and the database itself to capture and analyze the data. The DBMS additionally encompasses the core facilities provided to administer the database. The sum total of the database, the DBMS and the associated applications can be referred to as a database system. Often the term "database" is also used loosely to refer to any of the DBMS, the database system or an application associated with the database. Before digital storage and retrieval of data became widespread, index cards were used for data storage in a wide range of applications and environments: in the home to record and store recipes, shopping lists, contact information and other organizational data; in business to record presentation notes, project research and notes, and contact information; in schools as flash c ...

Parallelization

Parallel computing is a type of computation in which many calculations or processes are carried out simultaneously. Large problems can often be divided into smaller ones, which can then be solved at the same time. There are several different forms of parallel computing: bit-level, instruction-level, data, and task parallelism. Parallelism has long been employed in high-performance computing, but has gained broader interest due to the physical constraints preventing frequency scaling. (S. V. Adve et al., "Parallel Computing Research at Illinois: The UPCRC Agenda" (PDF), Parallel@Illinois, University of Illinois at Urbana-Champaign, November 2008: "The main techniques for these performance benefits (increased clock frequency and smarter but increasingly complex architectures) are now hitting the so-called power wall. The computer industry has accepted that future performance increases must largely come from increasing the number of processors (or cores) on a die, rather than m ...

Input/output

In computing, input/output (I/O, i/o, or informally io or IO) is the communication between an information processing system, such as a computer, and the outside world, such as another computer system, peripherals, or a human operator. Inputs are the signals or data received by the system and outputs are the signals or data sent from it. The term can also be used as part of an action; to "perform I/O" is to perform an input or output operation. I/O devices are the pieces of hardware used by a human (or other system) to communicate with a computer. For instance, a keyboard or computer mouse is an input device for a computer, while monitors and printers are output devices. Devices for communication between computers, such as modems and network cards, typically perform both input and output operations. Any interaction with the system by an interactor is an input, and the system's reaction is called the output. The designation of a device as either input or output depend ...

Central Processing Unit

A central processing unit (CPU), also called a central processor, main processor, or just processor, is the primary processor in a given computer. Its electronic circuitry executes instructions of a computer program, such as arithmetic, logic, controlling, and input/output (I/O) operations. This role contrasts with that of external components, such as main memory and I/O circuitry, and specialized coprocessors such as graphics processing units (GPUs). The form, design, and implementation of CPUs have changed over time, but their fundamental operation remains almost unchanged. Principal components of a CPU include the arithmetic–logic unit (ALU) that performs arithmetic and logic operations, processor registers that supply operands to the ALU and store the results of ALU operations, and a control unit that orchestrates the fetching (from memory), decoding and ...

Main Memory

Computer data storage or digital data storage is a technology consisting of computer components and recording media that are used to retain digital data. It is a core function and fundamental component of computers. The central processing unit (CPU) of a computer is what manipulates data by performing computations. In practice, almost all computers use a storage hierarchy, which puts fast but expensive and small storage options close to the CPU and slower but less expensive and larger options further away. Generally, the fast technologies are referred to as "memory", while slower persistent technologies are referred to as "storage". Even the first computer designs, Charles Babbage's Analytical Engine and Percy Ludgate's Analytical Machine, clearly distinguished between processing and memory (Babbage stored numbers as rotations of gears, while Ludgate stored numbers as displacements of rods in shuttles). This distinction was extended in the Von Neumann architecture, wh ...

Shared-disk Architecture

A shared-disk architecture (SD) is a distributed computing architecture in which the nodes share the same disk devices but each node has its own private memory. The disks have active nodes which all share memory in case of any failures. In this architecture, the disks are accessible from all the cluster nodes. This architecture adapts quickly to changing workloads. It uses robust optimization techniques. Multiple processors can access all disks directly via an intercommunication network, and every processor has local memory. It contrasts with shared-nothing architecture, in which all nodes have sole access to distinct disks, and with shared-memory, in which they also share memory. Shared-disk has two advantages over Shared-mem ...

Storage Area Network

A storage area network (SAN) or storage network is a computer network which provides access to consolidated, block-level data storage. SANs are primarily used to access data storage devices, such as disk arrays and tape libraries, from servers so that the devices appear to the operating system as direct-attached storage. A SAN typically is a dedicated network of storage devices not accessible through the local area network (LAN). Although a SAN provides only block-level access, file systems built on top of SANs do provide file-level access and are known as shared-disk file systems. Newer SAN configurations enable hybrid SAN, which offers traditional block storage that appears as local storage as well as object storage for web services through APIs. Storage area networks (SANs) are sometimes referred to as the "network behind the servers" and historically developed out of a centralized data storage mode ...

Shared-nothing Architecture

A shared-nothing architecture (SN) is a distributed computing architecture in which each update request is satisfied by a single node (processor/memory/storage unit) in a computer cluster. The intent is to eliminate contention among nodes. Nodes do not share (independently access) the same memory or storage. One alternative architecture is shared everything, in which requests are satisfied by arbitrary combinations of nodes. This may introduce contention, as multiple nodes may seek to update the same data at the same time. It also contrasts with shared-disk and shared-memory architectures. SN eliminates single points of failure, allowing the overall system to continue operating despite failures in individual nodes and allowing individual nodes to upgrade hardware or software without a system-wide shutdown. An SN system can scale simply by adding nodes, since no central resource bottlenecks the system. In databases, a term for the part of a database on a single node is a sha ...
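The rule that each update is satisfied by exactly one node can be sketched with a small key-value store. This is only an illustration under stated assumptions: the `Node` and `ShardedStore` classes, the node names, and the hash-modulo placement rule are hypothetical and are not taken from the excerpt.

```python
import hashlib


class Node:
    """One shared-nothing node: its storage is private to the node."""

    def __init__(self, name):
        self.name = name
        self.store = {}


class ShardedStore:
    """Routes every key to exactly one owning node (assumed hash-modulo placement)."""

    def __init__(self, node_names):
        self.nodes = [Node(name) for name in node_names]

    def _owner(self, key):
        # A stable hash, so the same key always maps to the same node.
        digest = hashlib.sha256(key.encode("utf-8")).digest()
        index = int.from_bytes(digest[:8], "big") % len(self.nodes)
        return self.nodes[index]

    def put(self, key, value):
        # The update touches a single node; no other node is consulted.
        self._owner(key).store[key] = value

    def get(self, key):
        return self._owner(key).store.get(key)


cluster = ShardedStore(["node-a", "node-b", "node-c"])
for user in ["alice", "bob", "carol", "dave"]:
    cluster.put(user, {"name": user})
```

Because a key lives on one node only, two nodes never contend for the same record. One caveat of this simple placement rule is that changing the number of nodes remaps most keys; schemes such as consistent hashing are a common way to limit that movement.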

Non-uniform Memory Access

Non-uniform memory access (NUMA) is a computer memory design used in multiprocessing, where the memory access time depends on the memory location relative to the processor. Under NUMA, a processor can access its own local memory faster than non-local memory (memory local to another processor or memory shared between processors). NUMA is beneficial for workloads with high memory locality of reference and low lock contention, because a processor may operate on a subset of memory mostly or entirely within its own cache node, reducing traffic on the memory bus. NUMA architectures logically follow in scaling from symmetric multiprocessing (SMP) architectures. They were developed commercially during the 1990s by Unisys, Convex Computer (later Hewlett-Packard), Honeywell Information Systems Italy (HISI) (later Groupe Bull), Silicon Graphics (later Silicon Graphics International), Sequent Computer Systems (later IBM), Data General (later EMC, now Dell Te ...
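Why locality matters under NUMA can be shown with a simple weighted-average model. The latency figures below are illustrative assumptions, not measurements of any real machine, and the function name is hypothetical.

```python
def average_access_ns(local_fraction, local_ns=80.0, remote_ns=140.0):
    """Expected memory access time when a given fraction of accesses is local.

    local_ns and remote_ns are assumed, illustrative latencies.
    """
    if not 0.0 <= local_fraction <= 1.0:
        raise ValueError("local_fraction must be between 0 and 1")
    return local_fraction * local_ns + (1.0 - local_fraction) * remote_ns


# A workload with high locality versus one spread evenly across two nodes.
mostly_local = average_access_ns(0.9)
evenly_split = average_access_ns(0.5)
```

Under these assumed numbers, the average cost falls linearly as the local fraction rises, which is the effect the excerpt attributes to workloads with high locality of reference.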

Network Switch

A network switch (also called switching hub, bridging hub, Ethernet switch, and, by the IEEE, MAC bridge) is networking hardware that connects devices on a computer network by using packet switching to receive and forward data to the destination device. A network switch is a multiport network bridge that uses MAC addresses to forward data at the data link layer (layer 2) of the OSI model. Some switches can also forward data at the network layer (layer 3) by additionally incorporating routing functionality. Such switches are commonly known as layer-3 switches or multilayer switches. Switches for Ethernet are the most common form of network switch. The first MAC bridge was invented in 1983 by Mark Kempf, an engineer in the Networking Advanced Development group of Digital Equipment Corporation. The first two-port bridge product (LANBridge 100) was introduced by that company shortly after. The company subsequently produced multi-port switches for both Ethernet and FDDI such as ...
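Forwarding by MAC address at layer 2 can be sketched with a toy learning switch. The excerpt does not describe how the address table is built, so the learn-then-forward-or-flood behaviour here is the commonly described bridge behaviour and is an assumption of this example, as are the class name and the shortened MAC strings.

```python
BROADCAST = "ff:ff:ff:ff:ff:ff"


class LearningSwitch:
    """Toy layer-2 switch: learns source addresses, forwards by destination."""

    def __init__(self, n_ports):
        self.ports = list(range(n_ports))
        self.mac_table = {}  # MAC address -> port it was last seen on

    def handle_frame(self, in_port, src_mac, dst_mac):
        """Return the list of ports the frame is sent out of."""
        # Learn (or refresh) where the sender lives.
        self.mac_table[src_mac] = in_port

        out_port = self.mac_table.get(dst_mac)
        if dst_mac == BROADCAST or out_port is None:
            # Unknown or broadcast destination: flood to all other ports.
            return [p for p in self.ports if p != in_port]
        if out_port == in_port:
            # Destination is on the segment the frame came from: drop it.
            return []
        return [out_port]


switch = LearningSwitch(n_ports=4)
first = switch.handle_frame(0, "aa:aa", "bb:bb")   # bb:bb unknown, so flood
reply = switch.handle_frame(2, "bb:bb", "aa:aa")   # aa:aa was learned on port 0
second = switch.handle_frame(0, "aa:aa", "bb:bb")  # bb:bb now known on port 2
```

After the first exchange, traffic between the two hosts goes only to the port where the destination was learned, rather than to every port.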