|

Multi-task Learning

Multi-task learning (MTL) is a subfield of machine learning in which multiple learning tasks are solved at the same time, while exploiting commonalities and differences across tasks. This can result in improved learning efficiency and prediction accuracy for the task-specific models, when compared to training the models separately. Inherently, Multi-task learning is a multi-objective optimization problem having trade-offs between different tasks. Early versions of MTL were called "hints". In a widely cited 1997 paper, Rich Caruana gave the following characterization:Multitask Learning is an approach to inductive transfer that improves generalization by using the domain information contained in the training signals of related tasks as an inductive bias. It does this by learning tasks in parallel while using a shared representation; what is learned for each task can help other tasks be learned better. In the classification context, MTL aims to improve the performance of multiple ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Machine Learning

Machine learning (ML) is a field of study in artificial intelligence concerned with the development and study of Computational statistics, statistical algorithms that can learn from data and generalise to unseen data, and thus perform Task (computing), tasks without explicit Machine code, instructions. Within a subdiscipline in machine learning, advances in the field of deep learning have allowed Neural network (machine learning), neural networks, a class of statistical algorithms, to surpass many previous machine learning approaches in performance. ML finds application in many fields, including natural language processing, computer vision, speech recognition, email filtering, agriculture, and medicine. The application of ML to business problems is known as predictive analytics. Statistics and mathematical optimisation (mathematical programming) methods comprise the foundations of machine learning. Data mining is a related field of study, focusing on exploratory data analysi ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

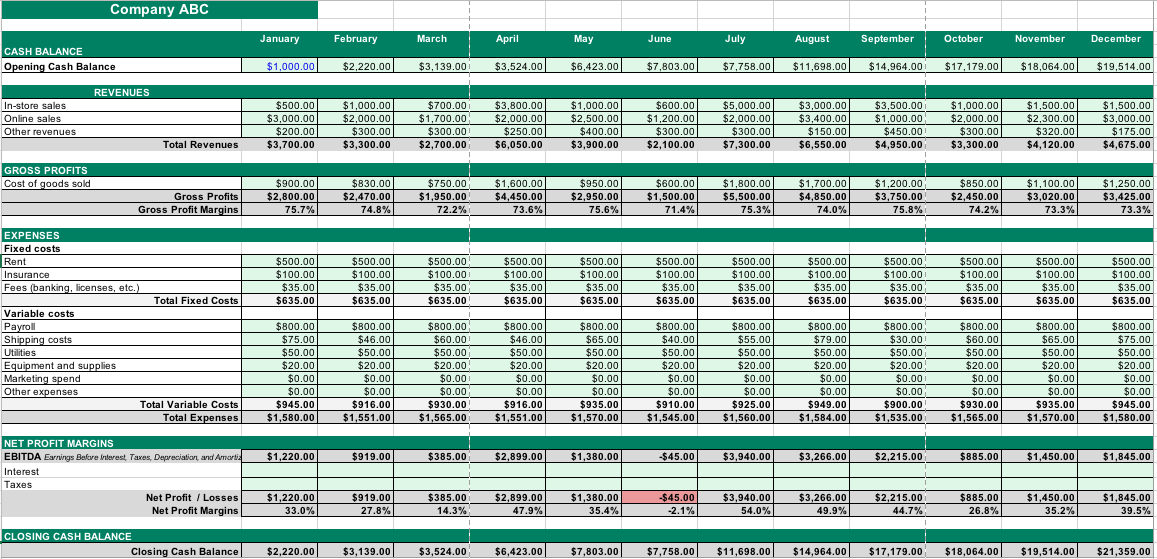

Financial Modeling

Financial modeling is the task of building an abstract representation (a model) of a real world financial situation. This is a mathematical model designed to represent (a simplified version of) the performance of a financial asset or portfolio of a business, project, or any other investment. Typically, then, financial modeling is understood to mean an exercise in either asset pricing or corporate finance, of a quantitative nature. It is about translating a set of hypotheses about the behavior of markets or agents into numerical predictions. At the same time, "financial modeling" is a general term that means different things to different users; the reference usually relates either to accounting and corporate finance applications or to quantitative finance applications. Accounting In corporate finance and the accounting profession, ''financial modeling'' typically entails financial statement forecasting; usually the preparation of detailed company-specific models used for deci ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Ensemble Learning

In statistics and machine learning, ensemble methods use multiple learning algorithms to obtain better predictive performance than could be obtained from any of the constituent learning algorithms alone. Unlike a statistical ensemble in statistical mechanics, which is usually infinite, a machine learning ensemble consists of only a concrete finite set of alternative models, but typically allows for much more flexible structure to exist among those alternatives. Overview Supervised learning algorithms search through a hypothesis space to find a suitable hypothesis that will make good predictions with a particular problem. Even if this space contains hypotheses that are very well-suited for a particular problem, it may be very difficult to find a good one. Ensembles combine multiple hypotheses to form one which should be theoretically better. ''Ensemble learning'' trains two or more machine learning algorithms on a specific classification or regression task. The algorithms wi ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Cooperative Bargaining

Cooperative bargaining is a process in which two people decide how to share a surplus that they can jointly generate. In many cases, the surplus created by the two players can be shared in many ways, forcing the players to negotiate which division of payoffs to choose. Such surplus-sharing problems (also called bargaining problem) are faced by management and labor in the division of a firm's profit, by trade partners in the specification of the terms of trade, and more. The present article focuses on the ''normative'' approach to bargaining. It studies how the surplus ''should'' be shared, by formulating appealing axioms that the solution to a bargaining problem should satisfy. It is useful when both parties are willing to cooperate in implementing the fair solution. Such solutions, particularly the Nash solution, were used to solve concrete economic problems, such as management–labor conflicts, on numerous occasions. An alternative approach to bargaining is the ''positive'' appr ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Deep Neural Networks

Deep learning is a subset of machine learning that focuses on utilizing multilayered neural networks to perform tasks such as classification, regression, and representation learning. The field takes inspiration from biological neuroscience and is centered around stacking artificial neurons into layers and "training" them to process data. The adjective "deep" refers to the use of multiple layers (ranging from three to several hundred or thousands) in the network. Methods used can be either supervised, semi-supervised or unsupervised. Some common deep learning network architectures include fully connected networks, deep belief networks, recurrent neural networks, convolutional neural networks, generative adversarial networks, transformers, and neural radiance fields. These architectures have been applied to fields including computer vision, speech recognition, natural language processing, machine translation, bioinformatics, drug design, medical image analysis, climat ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Gradient Descent

Gradient descent is a method for unconstrained mathematical optimization. It is a first-order iterative algorithm for minimizing a differentiable multivariate function. The idea is to take repeated steps in the opposite direction of the gradient (or approximate gradient) of the function at the current point, because this is the direction of steepest descent. Conversely, stepping in the direction of the gradient will lead to a trajectory that maximizes that function; the procedure is then known as ''gradient ascent''. It is particularly useful in machine learning for minimizing the cost or loss function. Gradient descent should not be confused with local search algorithms, although both are iterative methods for optimization. Gradient descent is generally attributed to Augustin-Louis Cauchy, who first suggested it in 1847. Jacques Hadamard independently proposed a similar method in 1907. Its convergence properties for non-linear optimization problems were first studied by Has ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Crossover (genetic Algorithm)

Crossover in evolutionary algorithms and evolutionary computation, also called recombination, is a genetic operator used to combine the chromosome (genetic algorithm), genetic information of two parents to generate new offspring. It is one way to stochastic, stochastically generate new candidate solution, solutions from an existing population, and is analogous to the chromosomal crossover, crossover that happens during sexual reproduction in biology. New solutions can also be generated by cloning (programming), cloning an existing solution, which is analogous to asexual reproduction. Newly generated solutions may be mutation (genetic algorithm), mutated before being added to the population. The aim of recombination is to transfer good characteristics from two different parents to one child. Different algorithms in evolutionary computation may use different data structures to store genetic information, and each genetic representation can be recombined with different crossover operat ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Implicit Parallelism

In computer science, implicit parallelism is a characteristic of a programming language that allows a compiler or interpreter to automatically exploit the parallelism inherent to the computations expressed by some of the language's constructs. A pure implicitly parallel language does not need special directives, operators or functions to enable parallel execution, as opposed to explicit parallelism. Programming languages with implicit parallelism include Axum, BMDFM, HPF, Id, LabVIEW, MATLAB M-code, NESL, SaC, SISAL, ZPL, and pH. Example If a particular problem involves performing the same operation on a group of numbers (such as taking the sine or logarithm of each in turn), a language that provides implicit parallelism might allow the programmer to write the instruction thus: numbers = 1 2 3 4 5 6 7 result = sin(numbers); The compiler or interpreter can calculate the sine of each element independently, spreading the effort across multiple processors if availa ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Gaussian Process

In probability theory and statistics, a Gaussian process is a stochastic process (a collection of random variables indexed by time or space), such that every finite collection of those random variables has a multivariate normal distribution. The distribution of a Gaussian process is the joint distribution of all those (infinitely many) random variables, and as such, it is a distribution over functions with a continuous domain, e.g. time or space. The concept of Gaussian processes is named after Carl Friedrich Gauss because it is based on the notion of the Gaussian distribution (normal distribution). Gaussian processes can be seen as an infinite-dimensional generalization of multivariate normal distributions. Gaussian processes are useful in statistical modelling, benefiting from properties inherited from the normal distribution. For example, if a random process is modelled as a Gaussian process, the distributions of various derived quantities can be obtained explicitly. Such quanti ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Hyperparameter Optimization

In machine learning, hyperparameter optimization or tuning is the problem of choosing a set of optimal hyperparameters for a learning algorithm. A hyperparameter is a parameter whose value is used to control the learning process, which must be configured before the process starts. Hyperparameter optimization determines the set of hyperparameters that yields an optimal model which minimizes a predefined loss function on a given data set. The objective function takes a set of hyperparameters and returns the associated loss. Cross-validation is often used to estimate this generalization performance, and therefore choose the set of values for hyperparameters that maximize it. Approaches Grid search The traditional method for hyperparameter optimization has been ''grid search'', or a ''parameter sweep'', which is simply an exhaustive searching through a manually specified subset of the hyperparameter space of a learning algorithm. A grid search algorithm must be guided by so ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Game Theory

Game theory is the study of mathematical models of strategic interactions. It has applications in many fields of social science, and is used extensively in economics, logic, systems science and computer science. Initially, game theory addressed two-person zero-sum games, in which a participant's gains or losses are exactly balanced by the losses and gains of the other participant. In the 1950s, it was extended to the study of non zero-sum games, and was eventually applied to a wide range of Human behavior, behavioral relations. It is now an umbrella term for the science of rational Decision-making, decision making in humans, animals, and computers. Modern game theory began with the idea of mixed-strategy equilibria in two-person zero-sum games and its proof by John von Neumann. Von Neumann's original proof used the Brouwer fixed-point theorem on continuous mappings into compact convex sets, which became a standard method in game theory and mathematical economics. His paper was f ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Evolutionary Computation

Evolutionary computation from computer science is a family of algorithms for global optimization inspired by biological evolution, and the subfield of artificial intelligence and soft computing studying these algorithms. In technical terms, they are a family of population-based trial and error problem solvers with a metaheuristic or stochastic optimization character. In evolutionary computation, an initial set of candidate solutions is generated and iteratively updated. Each new generation is produced by stochastically removing less desired solutions, and introducing small random changes as well as, depending on the method, mixing parental information. In biological terminology, a population of solutions is subjected to natural selection (or artificial selection), mutation and possibly recombination. As a result, the population will gradually evolve to increase in fitness, in this case the chosen fitness function of the algorithm. Evolutionary computation techni ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |