
Mallows's Cp

In statistics, Mallows's Cp, named for Colin Lingwood Mallows, is used to assess the fit of a regression model that has been estimated using ordinary least squares. It is applied in the context of model selection, where a number of predictor variables are available for predicting some outcome, and the goal is to find the best model involving a subset of these predictors. A small value of Cp means that the model is relatively precise. Mallows's Cp has been shown to be equivalent to the Akaike information criterion in the special case of Gaussian linear regression.

Definition and properties: Mallows's Cp addresses the issue of overfitting, in which model selection statistics such as the residual sum of squares never get larger as more variables are added to a model. Thus, if we aim to select the model giving the smallest residual sum of squares, the model including all variables would always be selected. Instead, the Cp statistic calculated on a sample of data es ...
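The excerpt is cut off before the definition, so the following is only a minimal sketch. It assumes the common textbook form Cp = RSS_p / sigma2 - n + 2p, where RSS_p is the residual sum of squares of a candidate model with p fitted parameters (intercept included), n is the sample size, and sigma2 is the error variance estimated from the full model. The function name `mallows_cp` and all numbers are hypothetical.

```python
def mallows_cp(rss_p: float, sigma2_full: float, n: int, p: int) -> float:
    """Mallows's Cp under the assumed form RSS_p / sigma2 - n + 2p."""
    if sigma2_full <= 0:
        raise ValueError("sigma2_full must be positive")
    return rss_p / sigma2_full - n + 2 * p


# Hypothetical example: n = 50 observations, full model with k = 6 parameters.
n, k = 50, 6
rss_full = 88.0
sigma2_full = rss_full / (n - k)  # unbiased variance estimate from the full model

cp_full = mallows_cp(rss_full, sigma2_full, n, k)  # equals k by construction
cp_small = mallows_cp(96.0, sigma2_full, n, 3)     # a 3-parameter candidate
```

With the variance estimated this way, the full model always has Cp equal to its own parameter count, so candidates are usually compared against that baseline: a smaller model whose Cp is close to or below its p is a reasonable choice.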

Statistics

Statistics (from German: Statistik, "description of a state, a country") is the discipline that concerns the collection, organization, analysis, interpretation, and presentation of data. In applying statistics to a scientific, industrial, or social problem, it is conventional to begin with a statistical population or a statistical model to be studied. Populations can be diverse groups of people or objects such as "all people living in a country" or "every atom composing a crystal". Statistics deals with every aspect of data, including the planning of data collection in terms of the design of surveys and experiments (Dodge, Y. (2006) The Oxford Dictionary of Statistical Terms, Oxford University Press). When census data cannot be collected, statisticians collect data by developing specific experiment designs and survey samples. Representative sampling as ...

Collinearity

In geometry, collinearity of a set of points is the property of their lying on a single line. A set of points with this property is said to be collinear (sometimes spelled colinear). In greater generality, the term has been used for aligned objects, that is, things being "in a line" or "in a row".

Points on a line: In any geometry, the set of points on a line is said to be collinear. In Euclidean geometry this relation is intuitively visualized by points lying in a row on a "straight line". However, in most geometries (including Euclidean) a line is typically a primitive (undefined) object type, so such visualizations will not necessarily be appropriate. A model for the geometry offers an interpretation of how the points, lines and other object types relate to one another, and a notion such as collinearity must be interpreted within the context of that model. For instance, in spherical ge ...
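For the Euclidean plane only, the definition can be checked numerically. The sketch below assumes points are given as (x, y) pairs and tests whether the cross product of each point's offset with a reference direction vanishes. The function name `are_collinear` and the tolerance `tol` are illustrative choices, not part of the definition.

```python
from typing import Optional, Sequence, Tuple

Point = Tuple[float, float]


def are_collinear(points: Sequence[Point], tol: float = 1e-9) -> bool:
    """Return True if all points lie on one straight line in the plane."""
    if len(points) < 3:
        return True  # one or two points are trivially collinear
    x0, y0 = points[0]
    # Pick any point distinct from the first to define the line's direction.
    base: Optional[Point] = next(
        ((x, y) for x, y in points[1:] if (x, y) != (x0, y0)), None
    )
    if base is None:
        return True  # all points coincide
    dx, dy = base[0] - x0, base[1] - y0
    # The 2D cross product is zero exactly when the offset is parallel to (dx, dy).
    return all(abs(dx * (y - y0) - dy * (x - x0)) <= tol for x, y in points)


on_a_line = are_collinear([(0, 0), (1, 1), (2, 2)])
off_the_line = are_collinear([(0, 0), (1, 1), (2, 3)])
```

An absolute tolerance is a simplification; for coordinates of very different magnitudes a scaled tolerance would be more robust.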

Biometrics (journal)

Biometrics is a journal that publishes articles on the application of statistics and mathematics to the biological sciences. It is published by the International Biometric Society (IBS). Originally published in 1945 under the title Biometrics Bulletin, the journal adopted the shorter title in 1947 (Biometrics, Vol. 3, No. 1, March 1947, p. 53). A memorial edition for a notable contributor to the journal was published in 1964.

Coefficient Of Determination

In statistics, the coefficient of determination, denoted R² or r² and pronounced "R squared", is the proportion of the variation in the dependent variable that is predictable from the independent variable(s). It is a statistic used in the context of statistical models whose main purpose is either the prediction of future outcomes or the testing of hypotheses, on the basis of other related information. It provides a measure of how well observed outcomes are replicated by the model, based on the proportion of total variation of outcomes explained by the model. There are several definitions of R² that are only sometimes equivalent. One class of such cases includes that of simple linear regression, where r² is used instead of R². When the model includes an intercept and a single predictor, r² is simply the square of the sample correlation coefficient (i.e., r) between the observed outcomes and the observed predictor values. If additional regressors are included, R² ...
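As a small illustration, the sketch below uses one common definition, R² = 1 - SS_res / SS_tot, and compares it with the squared sample correlation for a simple linear regression with an intercept. The data, the fitted values, and the helper names `r_squared` and `pearson_r` are assumptions made for this example.

```python
from math import sqrt
from typing import Sequence


def r_squared(y: Sequence[float], y_hat: Sequence[float]) -> float:
    """R^2 as 1 - (residual sum of squares) / (total sum of squares)."""
    mean_y = sum(y) / len(y)
    ss_res = sum((yi - fi) ** 2 for yi, fi in zip(y, y_hat))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    return 1 - ss_res / ss_tot


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Sample correlation coefficient between x and y."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sxx = sum((xi - mx) ** 2 for xi in x)
    syy = sum((yi - my) ** 2 for yi in y)
    return sxy / sqrt(sxx * syy)


x = [1.0, 2.0, 3.0, 4.0]
y = [2.0, 4.0, 5.0, 8.0]
# Fitted values from the least-squares line for this data (slope 1.9, intercept 0).
y_hat = [1.9 * xi for xi in x]

r2 = r_squared(y, y_hat)
r = pearson_r(x, y)
```

For this data the two quantities agree up to rounding, which is the equivalence the paragraph describes; it does not hold in general for models fitted without an intercept.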

Regression Analysis

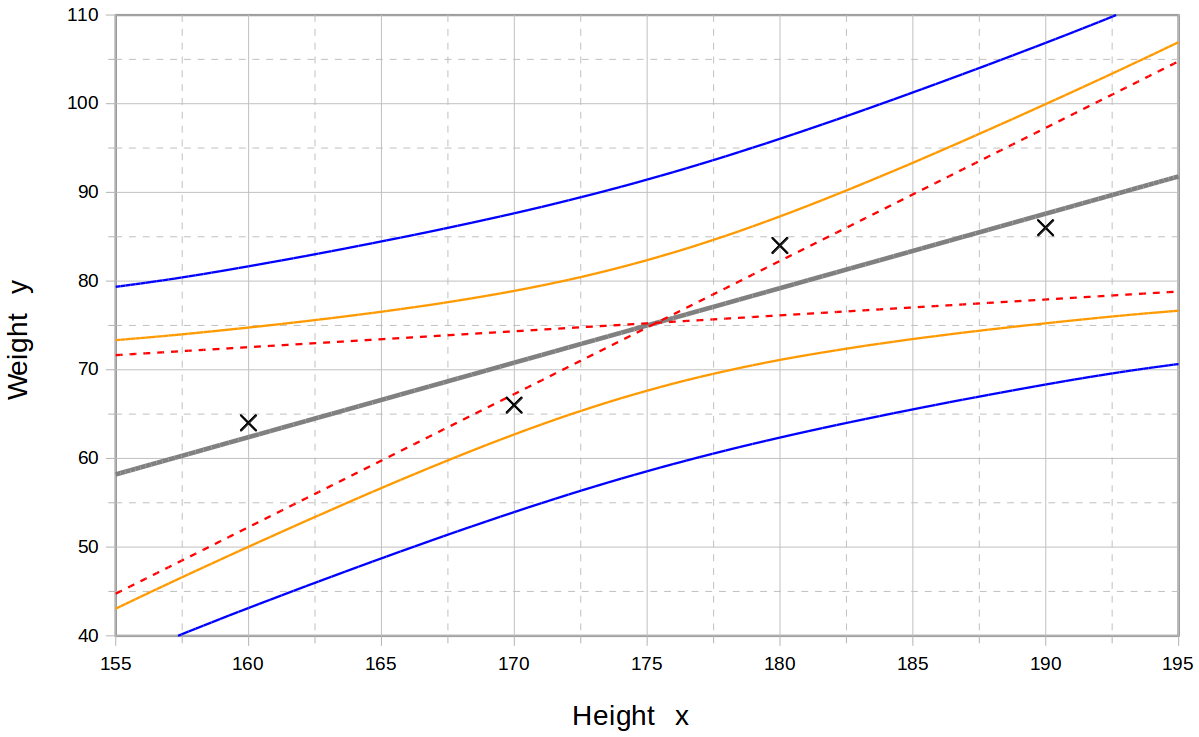

In statistical modeling, regression analysis is a set of statistical processes for estimating the relationships between a dependent variable (often called the 'outcome' or 'response' variable, or a 'label' in machine learning parlance) and one or more independent variables (often called 'predictors', 'covariates', 'explanatory variables' or 'features'). The most common form of regression analysis is linear regression, in which one finds the line (or a more complex linear combination) that most closely fits the data according to a specific mathematical criterion. For example, the method of ordinary least squares computes the unique line (or hyperplane) that minimizes the sum of squared differences between the true data and that line (or hyperplane). For specific mathematical reasons (see linear regression), this allows the researcher to estimate the conditional expectation (or population average value) of the dependent variable when the independent variables take on a given set of ...
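For the single-predictor case, the ordinary least squares line has a closed form: the slope is the ratio of the centered cross-products to the centered squares of x, and the intercept makes the line pass through the point of means. The sketch below is a minimal illustration; `fit_line` and the sample data are hypothetical.

```python
from typing import Sequence, Tuple


def fit_line(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Return (intercept, slope) of the least-squares line y = intercept + slope * x."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length, at least 2")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("x must not be constant")
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


# Data that lie exactly on y = 1 + 2x, so the fit recovers that line.
intercept, slope = fit_line([0.0, 1.0, 2.0, 3.0], [1.0, 3.0, 5.0, 7.0])
```

With several predictors the same criterion leads to a linear system rather than a closed-form pair of numbers, which is usually solved with a linear algebra library.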

Stepwise Regression

In statistics, stepwise regression is a method of fitting regression models in which the choice of predictive variables is carried out by an automatic procedure. In each step, a variable is considered for addition to or subtraction from the set of explanatory variables based on some prespecified criterion. Usually, this takes the form of a forward, backward, or combined sequence of F-tests or t-tests. The frequent practice of fitting the final selected model and then reporting estimates and confidence intervals without adjusting them to take the model building process into account has led to calls to stop using stepwise model building altogether (Flom, P. L. and Cassell, D. L. (2007) "Stopping stepwise: Why stepwise and similar selection methods are bad, and what you should use," NESUG 2007) or to at least make sure model uncertainty is correctly reflected. Chatfield, C. (1995) "Model uncertainty, data mining and statistical inference," J. R. Statist. Soc. A 158, Part 3, ...
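The forward variant of the procedure can be sketched as a greedy loop. This sketch does not use F-tests or t-tests; instead it assumes a caller-supplied `criterion` function that scores any subset of variables, with lower scores being better. The names `forward_select`, `criterion`, and the toy scoring table are all hypothetical.

```python
from typing import Callable, Dict, FrozenSet, Iterable, List


def forward_select(
    candidates: Iterable[str],
    criterion: Callable[[FrozenSet[str]], float],
) -> List[str]:
    """Greedy forward selection: add the best variable until nothing improves."""
    selected: List[str] = []
    remaining = list(candidates)
    best = criterion(frozenset())  # score of the empty model
    while remaining:
        # Score every model that adds exactly one remaining variable.
        scored = [(criterion(frozenset(selected + [c])), c) for c in remaining]
        score, choice = min(scored)
        if score >= best:
            break  # no single addition improves the criterion
        selected.append(choice)
        remaining.remove(choice)
        best = score
    return selected


# Toy criterion: each variable lowers the score by a fixed gain,
# and every included variable costs a penalty of 1.
gains: Dict[str, float] = {"x1": 5.0, "x2": 3.0, "x3": 0.5}


def toy_criterion(subset: FrozenSet[str]) -> float:
    return 10.0 - sum(gains[v] for v in subset) + 1.0 * len(subset)


chosen = forward_select(["x1", "x2", "x3"], toy_criterion)
```

In the toy run, `x3` is left out because its gain does not cover the per-variable penalty. The caveat in the paragraph still applies: estimates reported for the selected model do not account for the search that produced it.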

Feature Selection

In machine learning and statistics, feature selection, also known as variable selection, attribute selection or variable subset selection, is the process of selecting a subset of relevant features (variables, predictors) for use in model construction. Feature selection techniques are used for several reasons: simplification of models to make them easier to interpret by researchers/users; shorter training times; avoiding the curse of dimensionality; improving the data's compatibility with a learning model class; and encoding inherent symmetries present in the input space. The central premise when using a feature selection technique is that the data contains some features that are either redundant or irrelevant, and can thus be removed without incurring much loss of information. Redundant and irrelevant are two distinct notions, since one relevant feature may be redundant in the presence of another relevant feature with which it is strongly correlated. Feature sele ...

Sample Size

Sample size determination is the act of choosing the number of observations or replicates to include in a statistical sample. The sample size is an important feature of any empirical study in which the goal is to make inferences about a population from a sample. In practice, the sample size used in a study is usually determined based on the cost, time, or convenience of collecting the data, and the need for it to offer sufficient statistical power. In complicated studies there may be several different sample sizes: for example, in a stratified survey there would be different sizes for each stratum. In a census, data is sought for an entire population, hence the intended sample size is equal to the population. In experimental design, where a study may be divided into different treatment groups, there may be different sample sizes for each group. Sample sizes may be chosen in ...

Mean Square Error

In statistics, the mean squared error (MSE) or mean squared deviation (MSD) of an estimator (of a procedure for estimating an unobserved quantity) measures the average of the squares of the errors, that is, the average squared difference between the estimated values and the actual value. MSE is a risk function, corresponding to the expected value of the squared error loss. MSE is almost always strictly positive (and not zero), either because of randomness or because the estimator does not account for information that could produce a more accurate estimate. In machine learning, specifically empirical risk minimization, MSE may refer to the empirical risk (the average loss on an observed data set), as an estimate of the true MSE (the true risk: the average loss on the actual population distribution). The MSE is a measure of the quality of an estimator. As it is derived from the square of Euclidean distance, it is always a positive value that decreases as the error a ...
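The empirical version described above, the average squared difference on an observed data set, can be sketched in a few lines. The function name `mean_squared_error` and the sample values are illustrative only.

```python
from typing import Sequence


def mean_squared_error(actual: Sequence[float], estimated: Sequence[float]) -> float:
    """Average of squared differences between actual and estimated values."""
    if len(actual) != len(estimated) or len(actual) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((a - e) ** 2 for a, e in zip(actual, estimated)) / len(actual)


# Errors are 1, 0 and -2, so the squared errors are 1, 0 and 4.
mse = mean_squared_error([3.0, 5.0, 2.0], [2.0, 5.0, 4.0])
```

Because the errors are squared, a single large miss contributes far more than several small ones, which is why the value is sensitive to outliers.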

Predict

A prediction (Latin præ-, "before," and dicere, "to say"), or forecast, is a statement about a future event or data. Predictions are often, but not always, based upon experience or knowledge. There is no universal agreement about the exact difference from "estimation"; different authors and disciplines ascribe different connotations. Future events are necessarily uncertain, so guaranteed accurate information about the future is impossible. Prediction can be useful to assist in making plans about possible developments.

Opinion: In a non-statistical sense, the term "prediction" is often used to refer to an informed guess or opinion. A prediction of this kind might be informed by the predicting person's abductive reasoning, inductive reasoning, deductive reasoning, and experience, and may be useful if the predicting person is knowledgeable in the field. The Delphi method is a technique for eliciting such expert-judgement-based predictions in a controlled way. ...

Residual Sum Of Squares

In statistics, the residual sum of squares (RSS), also known as the sum of squared estimate of errors (SSE), is the sum of the squares of residuals (deviations predicted from actual empirical values of data). It is a measure of the discrepancy between the data and an estimation model, such as a linear regression. A small RSS indicates a tight fit of the model to the data. It is used as an optimality criterion in parameter selection and model selection. In general, total sum of squares = explained sum of squares + residual sum of squares. For a proof of this in the multivariate ordinary least squares (OLS) case, see partitioning in the general OLS model.

One explanatory variable: In a model with a single explanatory variable, RSS is given by \operatorname{RSS} = \sum_{i=1}^{n} (y_i - f(x_i))^2, where y_i is the i-th value of the variable to be predicted, x_i is the i-th value of the explanatory variable, and f(x_i) is the predicted value of y_i (also te ...
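The single-variable formula translates directly into code: sum the squared differences between each observed y_i and the model's prediction f(x_i). In this sketch the model `f` is passed in as an ordinary function; the name `residual_sum_of_squares` and the example line y = 1 + 2x are assumptions for illustration.

```python
from typing import Callable, Sequence


def residual_sum_of_squares(
    x: Sequence[float],
    y: Sequence[float],
    f: Callable[[float], float],
) -> float:
    """Sum over i of (y_i - f(x_i))^2 for a single explanatory variable."""
    if len(x) != len(y):
        raise ValueError("x and y must have the same length")
    return sum((yi - f(xi)) ** 2 for xi, yi in zip(x, y))


def line(x: float) -> float:
    """Hypothetical fitted model: y = 1 + 2x."""
    return 1.0 + 2.0 * x


# Predictions are 1, 3 and 5; residuals are 0, 1 and -1.
rss = residual_sum_of_squares([0.0, 1.0, 2.0], [1.0, 4.0, 4.0], line)
```

Dividing this quantity by the number of observations gives the empirical mean squared error of the same model.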