
Malgrange–Ehrenpreis Theorem

In mathematics, the Malgrange–Ehrenpreis theorem states that every non-zero linear differential operator with constant coefficients has a Green's function. It was first proved independently by Leon Ehrenpreis and Bernard Malgrange. This means that the differential equation

$$P\left(\frac{\partial}{\partial x_1}, \ldots, \frac{\partial}{\partial x_\ell}\right) u(\mathbf{x}) = \delta(\mathbf{x}),$$

where $P$ is a polynomial in several variables and $\delta$ is the Dirac delta function, has a distributional solution $u$. It can be used to show that

$$P\left(\frac{\partial}{\partial x_1}, \ldots, \frac{\partial}{\partial x_\ell}\right) u(\mathbf{x}) = f(\mathbf{x})$$

has a solution for any compactly supported distribution $f$: if $E$ is a Green's function and $P(\partial)$ denotes the operator above, then $u = E * f$ satisfies $P(\partial)(E * f) = (P(\partial)E) * f = \delta * f = f$. The solution is not unique in general, since any solution of the homogeneous equation $P(\partial)v = 0$ can be added to $u$. The analogue for differential operators whose coefficients are polynomials (rather than constants) is false: see Lewy's example.

Proofs

The original proofs of Malgrange and Ehrenpreis were non-constructive, as they used the Hahn–Banach theorem. Since then, several constructive proofs have been found. There is a very short proof using the Fourier transform ...
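As a minimal one-dimensional illustration of the statement (not one of the proofs), take $P(d/dx) = d/dx - a$ for a real constant $a$. A Green's function is $E(x) = H(x)e^{ax}$, where $H$ is the Heaviside step, and the sketch below checks numerically that $u = E * f$ satisfies $u' - au = f$ for one compactly supported source. The source `bump`, the Simpson quadrature and the step sizes are illustrative assumptions.

```python
import math


def green(x: float, a: float) -> float:
    """E(x) = H(x) * exp(a x), which satisfies (d/dx - a) E = delta as distributions."""
    return math.exp(a * x) if x >= 0.0 else 0.0


def bump(y: float) -> float:
    """Hypothetical compactly supported source f, supported on [-1, 1]."""
    return (1.0 - y * y) ** 2 if abs(y) < 1.0 else 0.0


def simpson(g, lo: float, hi: float, n: int = 2000) -> float:
    """Composite Simpson's rule for g on [lo, hi]; n must be even."""
    if hi <= lo:
        return 0.0
    h = (hi - lo) / n
    total = g(lo) + g(hi)
    for k in range(1, n):
        total += (4.0 if k % 2 else 2.0) * g(lo + k * h)
    return total * h / 3.0


def solve(x: float, a: float) -> float:
    """u(x) = (E * f)(x); E(x - y) vanishes for y > x, so integrate over [-1, min(x, 1)]."""
    return simpson(lambda y: green(x - y, a) * bump(y), -1.0, min(x, 1.0))


def residual(x: float, a: float, h: float = 1e-4) -> float:
    """(u' - a u - f)(x), with u' approximated by a central difference."""
    du = (solve(x + h, a) - solve(x - h, a)) / (2.0 * h)
    return du - a * solve(x, a) - bump(x)


for x in (-0.5, 0.3, 2.0):
    print(f"x = {x:+.1f}: residual = {residual(x, a=0.5):.1e}")
```

The residuals are at the level of quadrature and finite-difference error. Adding $c\,e^{ax}$ to `solve` would give another valid solution, since $(d/dx - a)e^{ax} = 0$, which is the non-uniqueness mentioned above.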

Differential Operator

In mathematics, a differential operator is an operator defined as a function of the differentiation operator. It is helpful, as a matter of notation first, to consider differentiation as an abstract operation that accepts a function and returns another function (in the style of a higher-order function in computer science). This article considers mainly linear differential operators, which are the most common type. However, non-linear differential operators also exist, such as the Schwarzian derivative.

Definition

An order-$m$ linear differential operator is a map $A$ from a function space $\mathcal{F}_1$ to another function space $\mathcal{F}_2$ that can be written as

$$A = \sum_{|\alpha| \le m} a_\alpha(x) D^\alpha,$$

where $\alpha = (\alpha_1, \alpha_2, \ldots, \alpha_n)$ is a multi-index of non-negative integers, $|\alpha| = \alpha_1 + \alpha_2 + \cdots + \alpha_n$, and for each $\alpha$, $a_\alpha(x)$ is a function on some open domain in $n$-dimensional space. The operator $D^\alpha$ is interpreted as ...
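The definition can be made concrete with a small sketch, assuming that the function space is polynomials in $n$ variables and that each coefficient $a_\alpha(x)$ is itself a polynomial. A polynomial is stored as a dict from exponent tuples to integer coefficients; this representation and the helper names are hypothetical choices for illustration.

```python
from collections import defaultdict
from math import factorial, prod

# A polynomial in n variables: {exponent tuple: integer coefficient}.
Poly = dict[tuple[int, ...], int]
# An operator sum_alpha a_alpha(x) D^alpha: {multi-index alpha: polynomial a_alpha}.
Operator = dict[tuple[int, ...], Poly]


def _clean(acc: dict) -> Poly:
    """Drop zero coefficients so that equal polynomials compare equal."""
    return {e: c for e, c in acc.items() if c != 0}


def poly_mul(p: Poly, q: Poly) -> Poly:
    acc: dict = defaultdict(int)
    for e1, c1 in p.items():
        for e2, c2 in q.items():
            acc[tuple(i + j for i, j in zip(e1, e2))] += c1 * c2
    return _clean(acc)


def d_alpha(alpha: tuple[int, ...], p: Poly) -> Poly:
    """D^alpha x^beta = prod_i beta_i! / (beta_i - alpha_i)! * x^(beta - alpha), or 0."""
    acc: dict = defaultdict(int)
    for beta, c in p.items():
        if all(b >= a for a, b in zip(alpha, beta)):
            factor = prod(factorial(b) // factorial(b - a) for a, b in zip(alpha, beta))
            acc[tuple(b - a for a, b in zip(alpha, beta))] += c * factor
    return _clean(acc)


def order(op: Operator) -> int:
    """The order m is the largest |alpha| with a non-zero coefficient."""
    return max(sum(alpha) for alpha, coeff in op.items() if coeff)


def apply_operator(op: Operator, p: Poly) -> Poly:
    """A p = sum over alpha of a_alpha(x) * D^alpha p."""
    acc: dict = defaultdict(int)
    for alpha, coeff in op.items():
        for e, c in poly_mul(coeff, d_alpha(alpha, p)).items():
            acc[e] += c
    return _clean(acc)


# Laplacian in two variables (constant coefficients) applied to x^2 y + y^3.
laplacian: Operator = {(2, 0): {(0, 0): 1}, (0, 2): {(0, 0): 1}}
print(apply_operator(laplacian, {(2, 1): 1, (0, 3): 1}))  # {(0, 1): 8}, i.e. 8y
```

Making every $a_\alpha$ a constant gives the constant-coefficient operators of the Malgrange–Ehrenpreis theorem; a coefficient such as $x$ (the operator `{(0, 1): {(1, 0): 1}}`, i.e. $x\,\partial/\partial y$) gives a polynomial-coefficient operator.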

Constant Coefficients

In mathematics, a linear differential equation is a differential equation that is defined by a linear polynomial in the unknown function and its derivatives, that is, an equation of the form

$$a_0(x)y + a_1(x)y' + a_2(x)y'' + \cdots + a_n(x)y^{(n)} = b(x),$$

where $a_0(x), \ldots, a_n(x)$ and $b(x)$ are arbitrary differentiable functions that do not need to be linear, and $y', \ldots, y^{(n)}$ are the successive derivatives of an unknown function $y$ of the variable $x$. Such an equation is an ordinary differential equation (ODE). A ''linear differential equation'' may also be a linear partial differential equation (PDE), if the unknown function depends on several variables and the derivatives that appear in the equation are partial derivatives. A linear differential equation, or a system of linear equations, whose associated homogeneous equations have constant coefficients may be solved by quadrature, which means that the solutions may be expressed in terms of integrals. This is also true for a linear equation of order one, with non-con ...
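For constant coefficients, the standard approach to the homogeneous equation is to substitute $y = e^{rx}$, which turns $a_0 y + a_1 y' + a_2 y'' = 0$ into the characteristic equation $a_0 + a_1 r + a_2 r^2 = 0$. A minimal second-order sketch, assuming real coefficients with $a_2 \neq 0$:

```python
import cmath


def characteristic_roots(a0: float, a1: float, a2: float) -> tuple[complex, complex]:
    """Roots of a2 r^2 + a1 r + a0 = 0 (assumes a2 != 0)."""
    disc = cmath.sqrt(a1 * a1 - 4.0 * a2 * a0)
    return (-a1 + disc) / (2.0 * a2), (-a1 - disc) / (2.0 * a2)


def residual(a0: float, a1: float, a2: float, r: complex, x: float) -> complex:
    """a0 y + a1 y' + a2 y'' for y = exp(r x), using y^(k) = r^k y."""
    y = cmath.exp(r * x)
    return a0 * y + a1 * r * y + a2 * r * r * y


# y'' - 3y' + 2y = 0: the roots are 2 and 1, so y = c1 e^{2x} + c2 e^{x}.
r1, r2 = characteristic_roots(2.0, -3.0, 1.0)
print(r1, r2, residual(2.0, -3.0, 1.0, r1, 0.7))
```

A complex pair such as $\pm i$ (for $y'' + y = 0$) corresponds to the real solutions $\cos x$ and $\sin x$. A repeated root $r$ contributes both $e^{rx}$ and $xe^{rx}$, a case this sketch does not handle.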

Green's Function

In mathematics, a Green's function is the impulse response of an inhomogeneous linear differential operator defined on a domain with specified initial conditions or boundary conditions. This means that if $L$ is the linear differential operator, then

* the Green's function $G$ is the solution of the equation $LG = \delta$, where $\delta$ is Dirac's delta function;
* the solution of the initial-value problem $Ly = f$ is the convolution $G \ast f$.

Through the superposition principle, given a linear ordinary differential equation (ODE) $Ly = f$, one can first solve $LG = \delta_s$ for each $s$; since the source is a sum of delta functions, the solution is then, by linearity of $L$, a sum of Green's functions as well. Green's functions are named after the British mathematician George Green, who first developed the concept in the 1820s. In the modern study of linear partial differential equations, Green's functions are s ...
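For a boundary-value problem, the convolution is replaced by an integral against $G(x, s)$. As a sketch, assuming the model problem $-u'' = f$ on $[0, 1]$ with $u(0) = u(1) = 0$ (an example chosen here, not taken from the text), the Green's function is $G(x, s) = x(1 - s)$ for $x \le s$ and $s(1 - x)$ for $x \ge s$, and $u(x) = \int_0^1 G(x, s) f(s)\,ds$:

```python
import math


def green(x: float, s: float) -> float:
    """Green's function of -d^2/dx^2 on [0, 1] with u(0) = u(1) = 0."""
    return x * (1.0 - s) if x <= s else s * (1.0 - x)


def simpson(g, lo: float, hi: float, n: int = 1000) -> float:
    """Composite Simpson's rule for g on [lo, hi]; n must be even."""
    if hi <= lo:
        return 0.0
    h = (hi - lo) / n
    total = g(lo) + g(hi)
    for k in range(1, n):
        total += (4.0 if k % 2 else 2.0) * g(lo + k * h)
    return total * h / 3.0


def solve(f, x: float) -> float:
    """u(x) = integral of G(x, s) f(s) ds, split at s = x where G has a kink."""

    def integrand(s: float) -> float:
        return green(x, s) * f(s)

    return simpson(integrand, 0.0, x) + simpson(integrand, x, 1.0)


# For f = 1 the exact solution is u(x) = x (1 - x) / 2; for f = sin(pi s) it is sin(pi x) / pi^2.
print(solve(lambda s: 1.0, 0.3), solve(lambda s: math.sin(math.pi * s), 0.5))
```

Splitting the integral at $s = x$ keeps Simpson's rule accurate, because $G$ is only piecewise smooth in $s$.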

Differential Equation

In mathematics, a differential equation is an equation that relates one or more unknown functions and their derivatives. In applications, the functions generally represent physical quantities, the derivatives represent their rates of change, and the differential equation defines a relationship between the two. Such relations are common; therefore, differential equations play a prominent role in many disciplines including engineering, physics, economics, and biology. The study of differential equations consists mainly of the study of their solutions (the set of functions that satisfy each equation) and of the properties of those solutions. Only the simplest differential equations are solvable by explicit formulas; however, many properties of solutions of a given differential equation may be determined without computing them exactly. When a closed-form expression for the solutions is not available, solutions may be approximated numerically using computers. The theory of d ...
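One common way to approximate solutions numerically is a one-step method such as the classical fourth-order Runge–Kutta scheme (RK4). A minimal sketch for a scalar equation $y' = g(x, y)$, tried on $y' = y$, $y(0) = 1$, whose exact solution is $e^x$; the step counts are arbitrary choices:

```python
import math
from typing import Callable


def rk4(g: Callable[[float, float], float], x0: float, y0: float, x1: float, steps: int) -> float:
    """Approximate y(x1) for y' = g(x, y), y(x0) = y0, with classical RK4."""
    h = (x1 - x0) / steps
    x, y = x0, y0
    for _ in range(steps):
        k1 = g(x, y)
        k2 = g(x + h / 2, y + h * k1 / 2)
        k3 = g(x + h / 2, y + h * k2 / 2)
        k4 = g(x + h, y + h * k3)
        y += h * (k1 + 2 * k2 + 2 * k3 + k4) / 6
        x += h
    return y


for steps in (10, 20, 100):
    approx = rk4(lambda x, y: y, 0.0, 1.0, 1.0, steps)
    print(steps, approx, abs(approx - math.e))
```

Doubling the number of steps should shrink the error by roughly a factor of $2^4 = 16$, the signature of a fourth-order method.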

Dirac Delta Function

In mathematics, the Dirac delta distribution ($\delta$ distribution), also known as the unit impulse, is a generalized function or distribution over the real numbers, whose value is zero everywhere except at zero, and whose integral over the entire real line is equal to one. The current understanding of the unit impulse is as a linear functional that maps every continuous function (e.g., $f(x)$) to its value at zero ($f(0)$), or as the weak limit of a sequence of bump functions (e.g., $\delta(x) = \lim_{b \to 0} \frac{1}{|b|\sqrt{\pi}} e^{-(x/b)^2}$), which are close to zero over most of the real line, with a tall spike at the origin. Bump functions are thus sometimes called "approximate" or "nascent" delta distributions. The delta function was introduced by physicist Paul Dirac as a tool for the normalization of state vectors. It also has uses in probability theory and signal processing. Its validity was disputed until Laurent Schwartz developed the theory of distributions, where it is defined as a linear form acting on ...
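The weak-limit description can be checked numerically: pairing the Gaussian $\eta_b(x) = e^{-(x/b)^2}/(|b|\sqrt{\pi})$ with a test function $\varphi$ should approach $\varphi(0)$ as $b \to 0$. A sketch, assuming $\varphi = \cos$, for which the pairing equals $e^{-b^2/4}$ exactly; truncating the integral to $[-10|b|, 10|b|]$ is an assumption that discards only a negligible Gaussian tail:

```python
import math


def nascent_delta(x: float, b: float) -> float:
    """Gaussian eta_b(x) = exp(-(x/b)^2) / (|b| sqrt(pi)); unit integral for every b != 0."""
    return math.exp(-((x / b) ** 2)) / (abs(b) * math.sqrt(math.pi))


def pair(b: float, phi, n: int = 2000) -> float:
    """Simpson approximation of the integral of eta_b * phi over [-10|b|, 10|b|]."""
    lo, hi = -10.0 * abs(b), 10.0 * abs(b)
    h = (hi - lo) / n

    def g(x: float) -> float:
        return nascent_delta(x, b) * phi(x)

    total = g(lo) + g(hi)
    for k in range(1, n):
        total += (4.0 if k % 2 else 2.0) * g(lo + k * h)
    return total * h / 3.0


for b in (1.0, 0.1, 0.01):
    print(b, pair(b, math.cos))  # tends to cos(0) = 1 as b shrinks
```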

Distribution (mathematics)

Distributions, also known as Schwartz distributions or generalized functions, are objects that generalize the classical notion of functions in mathematical analysis. Distributions make it possible to differentiate functions whose derivatives do not exist in the classical sense. In particular, any locally integrable function has a distributional derivative. Distributions are widely used in the theory of partial differential equations, where it may be easier to establish the existence of distributional solutions than classical solutions, or where appropriate classical solutions may not exist. Distributions are also important in physics and engineering, where many problems naturally lead to differential equations whose solutions or initial conditions are singular, such as the Dirac delta function. A function $f$ is normally thought of as acting on the points in the function domain by "sending" a point $x$ in its domain to the point $f(x)$. Instead of acting on points, distribution theory reinterpr ...
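The defining identity of the distributional derivative, $\langle f', \varphi \rangle = -\langle f, \varphi' \rangle$, can be tested numerically. The sketch below assumes $f(x) = |x|$, which has no classical derivative at $0$, and a shifted smooth bump as test function $\varphi$ (the shift `C` is a hypothetical choice that makes $\varphi$ asymmetric about $0$). It checks that $-\langle |x|, \varphi' \rangle$ equals $\langle \operatorname{sign}(x), \varphi \rangle$, i.e. that the distributional derivative of $|x|$ is the sign function.

```python
import math

C = 0.4  # hypothetical shift, so that the test function is not symmetric about 0


def phi(x: float) -> float:
    """Smooth test function supported on (C - 1, C + 1)."""
    t = x - C
    return math.exp(-1.0 / (1.0 - t * t)) if abs(t) < 1.0 else 0.0


def dphi(x: float) -> float:
    """Derivative of phi: phi(x) * (-2t / (1 - t^2)^2) with t = x - C."""
    t = x - C
    return phi(x) * (-2.0 * t / (1.0 - t * t) ** 2) if abs(t) < 1.0 else 0.0


def simpson(g, lo: float, hi: float, n: int = 4000) -> float:
    """Composite Simpson's rule for g on [lo, hi]; n must be even."""
    h = (hi - lo) / n
    total = g(lo) + g(hi)
    for k in range(1, n):
        total += (4.0 if k % 2 else 2.0) * g(lo + k * h)
    return total * h / 3.0


lo, hi = C - 1.0, C + 1.0
# -<|x|, phi'>, split at 0 where |x| has a kink.
lhs = -(simpson(lambda x: -x * dphi(x), lo, 0.0) + simpson(lambda x: x * dphi(x), 0.0, hi))
# <sign(x), phi>, split at 0 where sign(x) jumps.
rhs = -simpson(phi, lo, 0.0) + simpson(phi, 0.0, hi)
print(lhs, rhs)
```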

Lewy's Example

In the mathematical study of partial differential equations, Lewy's example is a celebrated example, due to Hans Lewy, of a linear partial differential equation with no solutions. It shows that the analog of the Cauchy–Kovalevskaya theorem does not hold in the smooth category. The original example is not explicit, since it employs the Hahn–Banach theorem, but various explicit examples of the same nature have since been found by Howard Jacobowitz. The Malgrange–Ehrenpreis theorem states (roughly) that linear partial differential equations with constant coefficients always have at least one solution; Lewy's example shows that this result cannot be extended to linear partial differential equations with polynomial coefficients.

The example

The statement is as follows:

On $\mathbb{R} \times \mathbb{C}$, there exists a smooth complex-valued function $F(t, z)$ such that the differential equation

$$\frac{\partial u}{\partial \bar z} - iz\,\frac{\partial u}{\partial t} = F(t, z)$$

admits no solution on any open set. Note that if $F$ is a ...
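Writing $z = x + iy$ and using $\partial/\partial \bar z = \tfrac12(\partial_x + i\,\partial_y)$ makes the polynomial coefficients of Lewy's operator explicit. This expansion is a routine computation added for illustration:

$$
\begin{aligned}
Lu &= \frac{\partial u}{\partial \bar z} - iz\,\frac{\partial u}{\partial t}
    = \tfrac12\left(\frac{\partial u}{\partial x} + i\,\frac{\partial u}{\partial y}\right) - i(x + iy)\,\frac{\partial u}{\partial t} \\
   &= \tfrac12\,\frac{\partial u}{\partial x} + \tfrac{i}{2}\,\frac{\partial u}{\partial y} + (y - ix)\,\frac{\partial u}{\partial t}.
\end{aligned}
$$

The coefficient $y - ix$ of $\partial/\partial t$ is a degree-one polynomial, which is what places this operator outside the constant-coefficient setting of the Malgrange–Ehrenpreis theorem.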

Hahn–Banach Theorem

The Hahn–Banach theorem is a central tool in functional analysis. It allows the extension of bounded linear functionals defined on a subspace of some vector space to the whole space, and it also shows that there are "enough" continuous linear functionals defined on every normed vector space to make the study of the dual space "interesting". Another version of the Hahn–Banach theorem is known as the Hahn–Banach separation theorem or the hyperplane separation theorem, and has numerous uses in convex geometry.

History

The theorem is named for the mathematicians Hans Hahn and Stefan Banach, who proved it independently in the late 1920s. The special case of the theorem for the space C[a, b] of continuous functions on an interval was proved earlier (in 1912) by Eduard Helly, and a more general extension theorem, the M. Riesz extension theorem, from which the Hahn–Banach theorem can be derived, was proved in 1923 by Marcel Riesz. The first Hahn–Banach theorem was proved by ...
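The extension property rests on a one-step argument: if $f \le p$ on a subspace $M$, with $p$ sublinear, and $x_0 \notin M$, then $g(m + \lambda x_0) = f(m) + \lambda c$ is still dominated by $p$ exactly when $\sup_{m \in M} [f(m) - p(m - x_0)] \le c \le \inf_{m \in M} [p(m + x_0) - f(m)]$. A finite-dimensional sketch, assuming $\mathbb{R}^2$ with the $\ell^1$ norm as $p$, $M$ the $x$-axis, $f(t, 0) = t$ and $x_0 = (0, 1)$, estimates both bounds on a grid of sample points (the grid is an arbitrary choice):

```python
def p(x: float, y: float) -> float:
    """The l^1 norm on R^2, a sublinear functional."""
    return abs(x) + abs(y)


def f(t: float) -> float:
    """Linear functional on M = {(t, 0)}; dominated by p there since t <= |t|."""
    return t


def extension_bounds(ts):
    """Bounds on c = g(x0) for x0 = (0, 1), estimated over sample points m = (t, 0) of M."""
    lower = max(f(t) - p(t, -1.0) for t in ts)  # f(m) - p(m - x0)
    upper = min(p(t, 1.0) - f(t) for t in ts)   # p(m + x0) - f(m)
    return lower, upper


def dominated(c: float, points) -> bool:
    """Check the extension g(x, y) = x + c*y against p at the given sample points."""
    return all(x + c * y <= p(x, y) for x, y in points)


grid = [k / 4 for k in range(-40, 41)]
print(extension_bounds(grid))  # (-1.0, 1.0)
```

Every $c \in [-1, 1]$ gives a valid extension $g(x, y) = x + cy$, so the extension is not unique. In infinite dimensions, the standard proof repeats this step using Zorn's lemma.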

Bernstein–Sato Polynomial

In mathematics, the Bernstein–Sato polynomial is a polynomial related to differential operators, introduced independently by Joseph Bernstein and by Mikio Sato. It is also known as the b-function, the b-polynomial, and the Bernstein polynomial, though it is not related to the Bernstein polynomials used in approximation theory. It has applications to singularity theory, monodromy theory, and quantum field theory. gives an elementary introduction, while and give more advanced accounts.

Definition and properties

If $f(x)$ is a polynomial in several variables, then there is a non-zero polynomial $b(s)$ and a differential operator $P(s)$ with polynomial coefficients such that

$$P(s) f(x)^{s+1} = b(s) f(x)^s.$$

The Bernstein–Sato polynomial is the monic polynomial of smallest degree amongst such polynomials $b(s)$. Its existence can be shown using the notion of holonomic D-modules. proved that all roots of the Bernstein–Sato polynomial are negative rational numbers. The Bernstein–Sato polynomial can also be ...
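A classical example: for $f = x_1^2 + \cdots + x_n^2$, the identity $\Delta f^{s+1} = 4(s+1)\left(s + \tfrac{n}{2}\right) f^s$ holds, so $P(s) = \tfrac14\Delta$ and $b(s) = (s+1)(s + n/2)$ satisfy the defining equation; for this $f$ it is in fact the Bernstein–Sato polynomial, and its roots $-1$ and $-n/2$ are negative rationals. The sketch below checks the identity numerically at one point for one real $s$; the point, $s$, $n = 3$ and the step $h$ are arbitrary choices.

```python
def f(x: list[float]) -> float:
    """f = x_1^2 + ... + x_n^2."""
    return sum(xi * xi for xi in x)


def laplacian(g, x: list[float], h: float = 1e-3) -> float:
    """Second-order central-difference approximation of the Laplacian of g at x."""
    total = 0.0
    for i in range(len(x)):
        up, down = list(x), list(x)
        up[i] += h
        down[i] -= h
        total += (g(up) - 2.0 * g(x) + g(down)) / (h * h)
    return total


def b(s: float, n: int) -> float:
    """Candidate b-function (s + 1)(s + n/2) for the sum of n squares."""
    return (s + 1.0) * (s + n / 2.0)


s, point = 0.7, [0.5, -0.3, 0.8]
lhs = 0.25 * laplacian(lambda x: f(x) ** (s + 1.0), point)  # P(s) f^{s+1} with P(s) = Laplacian / 4
rhs = b(s, len(point)) * f(point) ** s                      # b(s) f^s
print(lhs, rhs)
```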

Fourier Transform

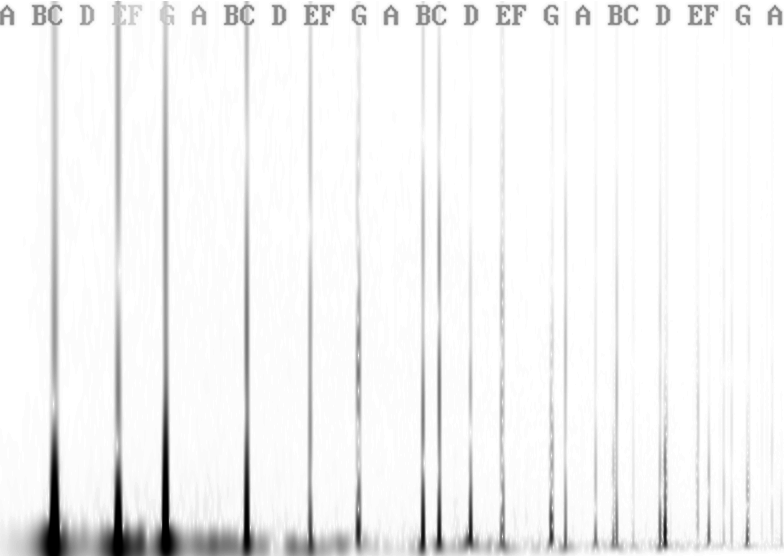

A Fourier transform (FT) is a mathematical transform that decomposes functions into frequency components, which are represented by the output of the transform as a function of frequency. Most commonly functions of time or space are transformed, which will output a function depending on temporal frequency or spatial frequency respectively. That process is also called ''analysis''. An example application would be decomposing the waveform of a musical chord into terms of the intensity of its constituent pitches. The term ''Fourier transform'' refers to both the frequency domain representation and the mathematical operation that associates the frequency domain representation to a function of space or time. The Fourier transform of a function is a complex-valued function representing the complex sinusoids that comprise the original function. For each frequency, the magnitude (absolute value) of the complex value represents the amplitude of a constituent complex sinusoid with that ...
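The decomposition into frequency components can be illustrated with the discrete Fourier transform $X_m = \sum_{k=0}^{N-1} x_k e^{-2\pi i mk/N}$, a finite analogue of the continuous transform. A naive $O(N^2)$ sketch (an FFT routine would be used in practice), assuming a test signal made of two sinusoids at frequency bins 3 and 5:

```python
import cmath
import math


def dft(x: list[float]) -> list[complex]:
    """Naive discrete Fourier transform: X[m] = sum_k x[k] exp(-2 pi i m k / N)."""
    n = len(x)
    return [sum(x[k] * cmath.exp(-2j * math.pi * m * k / n) for k in range(n)) for m in range(n)]


N = 64
signal = [math.cos(2 * math.pi * 3 * k / N) + 0.5 * math.sin(2 * math.pi * 5 * k / N) for k in range(N)]
spectrum = dft(signal)
for m in range(N // 2):
    if abs(spectrum[m]) > 1e-6:
        print(m, round(abs(spectrum[m]), 6))
```

Only bins 3 and 5 (and their mirror images $N - 3$ and $N - 5$) are non-negligible, with magnitudes $N/2 = 32$ and $N/4 = 16$, matching the amplitudes $1$ and $0.5$ of the two sinusoids.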

Theorems In Analysis

In mathematics, a theorem is a statement that has been proved, or can be proved. The ''proof'' of a theorem is a logical argument that uses the inference rules of a deductive system to establish that the theorem is a logical consequence of the axioms and previously proved theorems. In the mainstream of mathematics, the axioms and the inference rules are commonly left implicit, and, in this case, they are almost always those of Zermelo–Fraenkel set theory with the axiom of choice, or of a less powerful theory, such as Peano arithmetic. A notable exception is Wiles's proof of Fermat's Last Theorem, which involves the Grothendieck universes whose existence requires the addition of a new axiom to the set theory. Generally, an assertion that is explicitly called a theorem is a proved result that is not an immediate consequence of other known theorems. Moreover, many authors qualify as ''theorems'' only the most important results, and use the terms ''lemma'', ''proposition'' and '' ...