
Maximally Stable Extremal Regions

In computer vision, maximally stable extremal regions (MSER) are used as a method of blob detection in images. This technique was proposed by Matas et al. (J. Matas, O. Chum, M. Urban, and T. Pajdla, "Robust wide baseline stereo from maximally stable extremal regions," Proc. of British Machine Vision Conference, pages 384-396, 2002) to find correspondences between image elements from two images with different viewpoints. This method of extracting a comprehensive number of corresponding image elements contributes to wide-baseline matching, and it has led to better stereo matching and object recognition algorithms. Terms and definitions: Image I is a mapping I : D \subset \mathbb{Z}^2 \to S. Extremal regions are well defined on images if: (1) S is totally ordered (a total, antisymmetric and transitive binary relation \le exists); (2) an adjacency relation A \subset D \times D is defined. We will denote that two points are adjacent as pAq. Region Q i ...

Computer Vision

Computer vision is an interdisciplinary scientific field that deals with how computers can gain high-level understanding from digital images or videos. From the perspective of engineering, it seeks to understand and automate tasks that the human visual system can do. Computer vision tasks include methods for acquiring, processing, analyzing and understanding digital images, and extraction of high-dimensional data from the real world in order to produce numerical or symbolic information, e.g. in the forms of decisions. Understanding in this context means the transformation of visual images (the input of the retina) into descriptions of the world that make sense to thought processes and can elicit appropriate action. This image understanding can be seen as the disentangling of symbolic information from image data using models constructed with the aid of geometry, physics, statistics, and learning theory. The scien ...

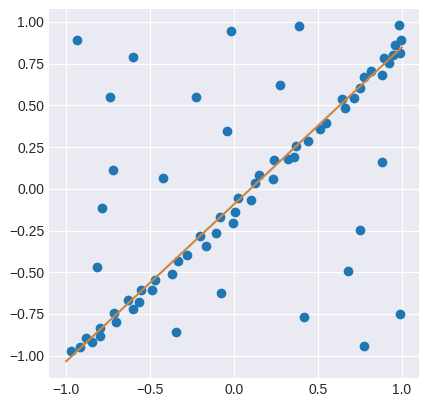

RANSAC

Random sample consensus (RANSAC) is an iterative method to estimate parameters of a mathematical model from a set of observed data that contains outliers, when outliers are to be accorded no influence on the values of the estimates. Therefore, it can also be interpreted as an outlier detection method. It is a non-deterministic algorithm in the sense that it produces a reasonable result only with a certain probability, with this probability increasing as more iterations are allowed. The algorithm was first published by Fischler and Bolles at SRI International in 1981. They used RANSAC to solve the Location Determination Problem (LDP), where the goal is to determine the points in the space that project onto an image into a set of landmarks with known locations. RANSAC uses repeated random sub-sampling. A basic assumption is that the data consists of "inliers", i.e., data whose distribution can be explained by some set of model parameters, though they may be subject to noise, and "outlier ...
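
The repeated random sub-sampling described above can be illustrated with a minimal sketch for fitting a line. This is not the Fischler and Bolles formulation: the function names `fit_line` and `ransac_line`, the iteration count, the inlier threshold, and the use of vertical residuals are all assumptions chosen to keep the example short.

```python
import random


def fit_line(p, q):
    """Return (slope, intercept) of the line through p and q, or None if vertical."""
    (x1, y1), (x2, y2) = p, q
    if x1 == x2:
        return None
    slope = (y2 - y1) / (x2 - x1)
    return slope, y1 - slope * x1


def ransac_line(points, n_iters=200, threshold=0.5, seed=0):
    """Hypothetical helper: keep the two-point line with the most inliers.

    A point counts as an inlier when its vertical residual is within
    `threshold` (a simplification; perpendicular distance is also common).
    """
    rng = random.Random(seed)
    best_model, best_inliers = None, []
    for _ in range(n_iters):
        p, q = rng.sample(points, 2)  # minimal random sample for a line
        model = fit_line(p, q)
        if model is None:
            continue
        slope, intercept = model
        inliers = [(x, y) for x, y in points
                   if abs(y - (slope * x + intercept)) <= threshold]
        if len(inliers) > len(best_inliers):
            best_model, best_inliers = model, inliers
    return best_model, best_inliers


# Ten points exactly on y = 2x + 1, plus three gross outliers.
points = [(float(x), 2.0 * x + 1.0) for x in range(10)]
points += [(2.0, 30.0), (5.0, -20.0), (8.0, 50.0)]
model, inliers = ransac_line(points)
```

Because the outliers never join the consensus set of the true line, they have no influence on the returned slope and intercept, which is the property the description emphasizes.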

MEX File

A MEX file is a type of computer file that provides an interface between MATLAB or Octave and functions written in C, C++ or Fortran. It stands for "MATLAB executable". When compiled, MEX files are dynamically loaded and allow external functions to be invoked from within MATLAB or Octave as if they were built-in functions. To support the development of MEX files, both MATLAB and Octave offer external interface functions that facilitate the transfer of data between MEX files and the workspace. In addition to MEX files, Octave has its own format using its own native API, with better performance.

C (Programming Language)

C (pronounced like the letter c) is a general-purpose computer programming language. It was created in the 1970s by Dennis Ritchie, and remains very widely used and influential. By design, C's features cleanly reflect the capabilities of the targeted CPUs. It has found lasting use in operating systems, device drivers, and protocol stacks, though decreasingly for application software. C is commonly used on computer architectures that range from the largest supercomputers to the smallest microcontrollers and embedded systems. A successor to the programming language B, C was originally developed at Bell Labs by Ritchie between 1972 and 1973 to construct utilities running on Unix. It was applied to re-implementing the kernel of the Unix operating system. During the 1980s, C gradually gained popularity. It has become one of the most widely used programming languages, with C compilers avail ...

Feature Detection (Computer Vision)

In computer vision and image processing, a feature is a piece of information about the content of an image; typically about whether a certain region of the image has certain properties. Features may be specific structures in the image such as points, edges or objects. Features may also be the result of a general neighborhood operation or feature detection applied to the image. Other examples of features are related to motion in image sequences, or to shapes defined in terms of curves or boundaries between different image regions. More broadly, a feature is any piece of information which is relevant for solving the computational task related to a certain application. This is the same sense as feature in machine learning and pattern recognition generally, though image processing has a very sophisticated collection of features. The feature concept is very general and the choice of features in a particular computer vision system may be highly dependent on the specific problem at ...

Blob Detection

In computer vision, blob detection methods are aimed at detecting regions in a digital image that differ in properties, such as brightness or color, compared to surrounding regions. Informally, a blob is a region of an image in which some properties are constant or approximately constant; all the points in a blob can be considered in some sense to be similar to each other. The most common method for blob detection is convolution. Given some property of interest expressed as a function of position on the image, there are two main classes of blob detectors: (i) differential methods, which are based on derivatives of the function with respect to position, and (ii) methods based on local extrema, which are based on finding the local maxima and minima of the function. With the more recent terminology used in the field, these detectors can also be referred to as interest point operators, or alternatively interest region operators (see also interest point detecti ...

Mahalanobis Distance

The Mahalanobis distance is a measure of the distance between a point P and a distribution D, introduced by P. C. Mahalanobis in 1936. Mahalanobis's definition was prompted by the problem of identifying the similarities of skulls based on measurements in 1927. It is a multi-dimensional generalization of the idea of measuring how many standard deviations away P is from the mean of D. This distance is zero for P at the mean of D and grows as P moves away from the mean along each principal component axis. If each of these axes is re-scaled to have unit variance, then the Mahalanobis distance corresponds to standard Euclidean distance in the transformed space. The Mahalanobis distance is thus unitless, scale-invariant, and takes into account the correlations of the data set. Definition: Given a probability distribution Q on \R^N, with mean \vec{\mu} = (\mu_1, \mu_2, \mu_3, \dots , \mu_N)^\mathsf{T} and positive-definite covariance matrix S, the Mahalanobis ...
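
As a rough illustration of the "how many standard deviations away" reading, the sketch below evaluates the standard form d(x) = sqrt((x - mu)^T S^{-1} (x - mu)) in two dimensions. The function name `mahalanobis_2d` and the restriction to a 2x2 covariance matrix are assumptions made to keep the example dependency-free.

```python
import math


def mahalanobis_2d(x, mean, cov):
    """Hypothetical helper: Mahalanobis distance of a 2-D point x from a
    distribution with the given mean and 2x2 covariance matrix."""
    (a, b), (c, d) = cov
    det = a * d - b * c
    if a <= 0 or det <= 0:
        raise ValueError("covariance matrix must be positive-definite")
    # Closed-form inverse of a 2x2 matrix.
    inv = ((d / det, -b / det), (-c / det, a / det))
    dx, dy = x[0] - mean[0], x[1] - mean[1]
    quad = (dx * (inv[0][0] * dx + inv[0][1] * dy)
            + dy * (inv[1][0] * dx + inv[1][1] * dy))
    return math.sqrt(quad)


# Variance 4 along x (standard deviation 2) and variance 1 along y.
cov = ((4.0, 0.0), (0.0, 1.0))
d_x = mahalanobis_2d((2.0, 0.0), (0.0, 0.0), cov)     # one standard deviation along x
d_y = mahalanobis_2d((0.0, 2.0), (0.0, 0.0), cov)     # two standard deviations along y
d_mean = mahalanobis_2d((0.0, 0.0), (0.0, 0.0), cov)  # the mean itself
```

The two test points are equally far from the mean in Euclidean terms, yet their Mahalanobis distances differ because the spread of the distribution differs along each axis.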

HSV Color Space

HSL (for hue, saturation, lightness) and HSV (for hue, saturation, value; also known as HSB, for hue, saturation, brightness) are alternative representations of the RGB color model, designed in the 1970s by computer graphics researchers to more closely align with the way human vision perceives color-making attributes. In these models, colors of each hue are arranged in a radial slice, around a central axis of neutral colors which ranges from black at the bottom to white at the top. The HSL representation models the way different paints mix together to create color in the real world, with the lightness dimension resembling the varying amounts of black or white paint in the mixture (e.g. to create "light red", a red pigment can be mixed with white paint; this white paint corresponds to a high "lightness" value in the HSL representation). Fully saturated colors are placed around a circle at a lightness value of ½, with a lightness value of 0 or 1 correspon ...
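
The placement of fully saturated colors at lightness ½ can be checked with Python's standard `colorsys` module. Note that `colorsys` names the HSL conversion `rgb_to_hls` and returns the components in hue, lightness, saturation order; the variable names below are illustrative only.

```python
import colorsys

# Pure red in RGB, with each channel in the range [0, 1].
red = (1.0, 0.0, 0.0)

# colorsys returns HLS in (hue, lightness, saturation) order.
red_hls = colorsys.rgb_to_hls(*red)
# HSV is returned in (hue, saturation, value) order.
red_hsv = colorsys.rgb_to_hsv(*red)

# White sits at the top of the neutral axis: lightness 1, saturation 0.
white_hls = colorsys.rgb_to_hls(1.0, 1.0, 1.0)
```

Pure red comes out fully saturated in both models, with lightness ½ in HSL and value 1 in HSV, while white has zero saturation.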

Agglomerative Clustering

In data mining and statistics, hierarchical clustering (also called hierarchical cluster analysis or HCA) is a method of cluster analysis that seeks to build a hierarchy of clusters. Strategies for hierarchical clustering generally fall into two categories: (i) Agglomerative: This is a "bottom-up" approach: each observation starts in its own cluster, and pairs of clusters are merged as one moves up the hierarchy. (ii) Divisive: This is a "top-down" approach: all observations start in one cluster, and splits are performed recursively as one moves down the hierarchy. In general, the merges and splits are determined in a greedy manner. The results of hierarchical clustering are usually presented in a dendrogram. The standard algorithm for hierarchical agglomerative clustering (HAC) has a time complexity of \mathcal{O}(n^3) and requires \Omega(n^2) memory, which makes it too slow for even medium data sets. However, for some special cases, optimal efficient agglomerative methods (of comp ...
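
The bottom-up strategy can be sketched as a naive greedy loop over one-dimensional data. This is an illustration rather than the standard HAC algorithm: the function name `agglomerative`, the single-linkage merge criterion, and stopping at a fixed number of clusters are all assumptions, and no attempt is made at efficiency.

```python
def agglomerative(points, n_clusters):
    """Hypothetical helper: naive single-linkage agglomerative clustering of 1-D points.

    Every point starts in its own cluster; the two closest clusters are
    merged greedily until only `n_clusters` remain.
    """
    clusters = [[p] for p in points]
    while len(clusters) > n_clusters:
        best = None  # (distance, i, j) of the closest pair found so far
        for i in range(len(clusters)):
            for j in range(i + 1, len(clusters)):
                # Single linkage: distance between the closest members.
                dist = min(abs(a - b) for a in clusters[i] for b in clusters[j])
                if best is None or dist < best[0]:
                    best = (dist, i, j)
        _, i, j = best
        # j > i, so popping j does not shift index i.
        clusters[i].extend(clusters.pop(j))
    return clusters


data = [1.0, 1.2, 5.0, 5.1, 9.0]
three = agglomerative(data, 3)
two = agglomerative(data, 2)
```

Recording the order of the merges, rather than stopping at a fixed count, would give the hierarchy that a dendrogram presents.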

Graph Cuts In Computer Vision

As applied in the field of computer vision, graph cut optimization can be employed to efficiently solve a wide variety of low-level computer vision problems (early vision), such as image smoothing, the stereo correspondence problem, image segmentation, object co-segmentation, and many other computer vision problems that can be formulated in terms of energy minimization. Many of these energy minimization problems can be approximated by solving a maximum flow problem in a graph (and thus, by the max-flow min-cut theorem, define a minimal cut of the graph). Under most formulations of such problems in computer vision, the minimum energy solution corresponds to the maximum a posteriori estimate of a solution. Although many computer vision algorithms involve cutting a graph (e.g., normalized cuts), the term "graph cuts" is applied specifically to those models which employ a max-flow/min-cut optimization (other graph cutting algorithms may be considered as graph partitioning alg ...

Canny Edge Detector

The Canny edge detector is an edge detection operator that uses a multi-stage algorithm to detect a wide range of edges in images. It was developed by John F. Canny in 1986. Canny also produced a computational theory of edge detection explaining why the technique works. Development: Canny edge detection is a technique to extract useful structural information from different vision objects and dramatically reduce the amount of data to be processed. It has been widely applied in various computer vision systems. Canny found that the requirements for the application of edge detection on diverse vision systems are relatively similar. Thus, an edge detection solution to address these requirements can be implemented in a wide range of situations. The general criteria for edge detection include: (1) Detection of edges with a low error rate, which means that the detection should accurately catch as many edges shown in the image as possible. (2) The edge point detected from the operator s ...

Eight-point Algorithm

The eight-point algorithm is an algorithm used in computer vision to estimate the essential matrix or the fundamental matrix related to a stereo camera pair from a set of corresponding image points. It was introduced by Christopher Longuet-Higgins in 1981 for the case of the essential matrix. In theory, this algorithm can be used also for the fundamental matrix, but in practice the normalized eight-point algorithm, described by Richard Hartley in 1997, is better suited for this case. The algorithm's name derives from the fact that it estimates the essential matrix or the fundamental matrix from a set of eight (or more) corresponding image points. However, variations of the algorithm can be used for fewer than eight points. Coplanarity constraint: One may express the epipolar geometry of two cameras and a point in space with an algebraic equation. Observe that, no matter where the point P is in space, the vectors \overline, \overline and \overline belong to the same plan ...
