
MapR

MapR was a business software company headquartered in Santa Clara, California. MapR software provides access to a variety of data sources from a single computer cluster, including big data workloads such as Apache Hadoop and Apache Spark, a distributed file system, a multi-model database management system, and event stream processing, combining real-time analytics with operational applications. Its technology runs on both commodity hardware and public cloud computing services. In August 2019, following financial difficulties, the technology and intellectual property of the company were sold to Hewlett Packard Enterprise.

MapR was privately held, with original funding of $9 million from Lightspeed Venture Partners and New Enterprise Associates in 2009. MapR executives came from Google, Lightspeed Venture Partners, Informatica, EMC Corporation and Veoh. MapR had an additional round of funding led by Redpoint Ventures in August 2011. A round in 2013 was led by Mayfi ...

Apache Hadoop

Apache Hadoop is a collection of open-source software utilities for reliable, scalable, distributed computing. It provides a software framework for distributed storage and processing of big data using the MapReduce programming model. Hadoop was originally designed for computer clusters built from commodity hardware, which is still the common use. It has since also found use on clusters of higher-end hardware. All the modules in Hadoop are designed with the fundamental assumption that hardware failures are common occurrences and should be handled automatically by the framework.

The core of Apache Hadoop consists of a storage part, known as the Hadoop Distributed File System (HDFS), and a processing part based on the MapReduce programming model. Hadoop splits files into large blocks and distributes them across nodes in a cluster. It then transfers packaged code to the nodes to process the data in parallel. This approach takes advantage of data locality, where nodes manipulate ...
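The split-then-process-in-parallel flow described in the Hadoop excerpt above can be illustrated with a word count in plain Python. This is only a single-process sketch of the MapReduce idea, not Hadoop's API: the function names (`map_phase`, `shuffle`, `reduce_phase`, `word_count`) and the sample blocks are hypothetical, and a real cluster would run the map step on the nodes that hold each block.

```python
from collections import defaultdict
from itertools import chain


def map_phase(block):
    """Map step: emit a (word, 1) pair for every word in one block of text."""
    return [(word.lower(), 1) for word in block.split()]


def shuffle(pairs):
    """Shuffle step: group all emitted values by key."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(groups):
    """Reduce step: collapse each key's values into a single count."""
    return {key: sum(values) for key, values in groups.items()}


def word_count(blocks):
    """Run map over every block, then shuffle and reduce the results."""
    mapped = chain.from_iterable(map_phase(block) for block in blocks)
    return reduce_phase(shuffle(mapped))


# Hypothetical input: each string stands in for one block of a larger file.
blocks = ["big data big cluster", "data locality matters", "big cluster"]
counts = word_count(blocks)
print(counts)
```

Because each call to `map_phase` depends only on its own block, the map step is the part a framework could distribute across nodes; only the shuffle requires moving data between them.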

Apache Hive

Apache Hive is a data warehouse software project. It is built on top of Apache Hadoop for providing data query and analysis. Hive gives an SQL-like interface to query data stored in various databases and file systems that integrate with Hadoop. Traditional SQL queries must be implemented in the MapReduce Java API to execute SQL applications and queries over distributed data. Hive provides the necessary SQL abstraction to integrate SQL-like queries (HiveQL) into the underlying Java without the need to implement queries in the low-level Java API. Hive facilitates the integration of SQL-based querying languages with Hadoop, which is commonly used in data warehousing applications. While initially developed by Facebook, Apache Hive is used and developed by other companies such as Netflix and the Financial Industry Regulatory Authority (FINRA). Amazon maintains a software fork of Apache Hive included in Apache Hadoop#On Amazon Elastic MapR ...

Apache Spark

Apache Spark is an open-source unified analytics engine for large-scale data processing. Spark provides an interface for programming clusters with implicit data parallelism and fault tolerance. Originally developed at the University of California, Berkeley's AMPLab starting in 2009, the Spark codebase was donated in 2013 to the Apache Software Foundation, which has maintained it since.

Apache Spark has its architectural foundation in the resilient distributed dataset (RDD), a read-only multiset of data items distributed over a cluster of machines and maintained in a fault-tolerant way. The DataFrame API was released as an abstraction on top of the RDD, followed by the Dataset API. In Spark 1.x, the RDD was the primary application programming interface (API), but as of Spark 2.x use of the Dataset API is encouraged even though the RDD API is not deprecated. The RDD technology still underlies the Dataset API. Spark and its RDDs were developed in 2012 in respon ...

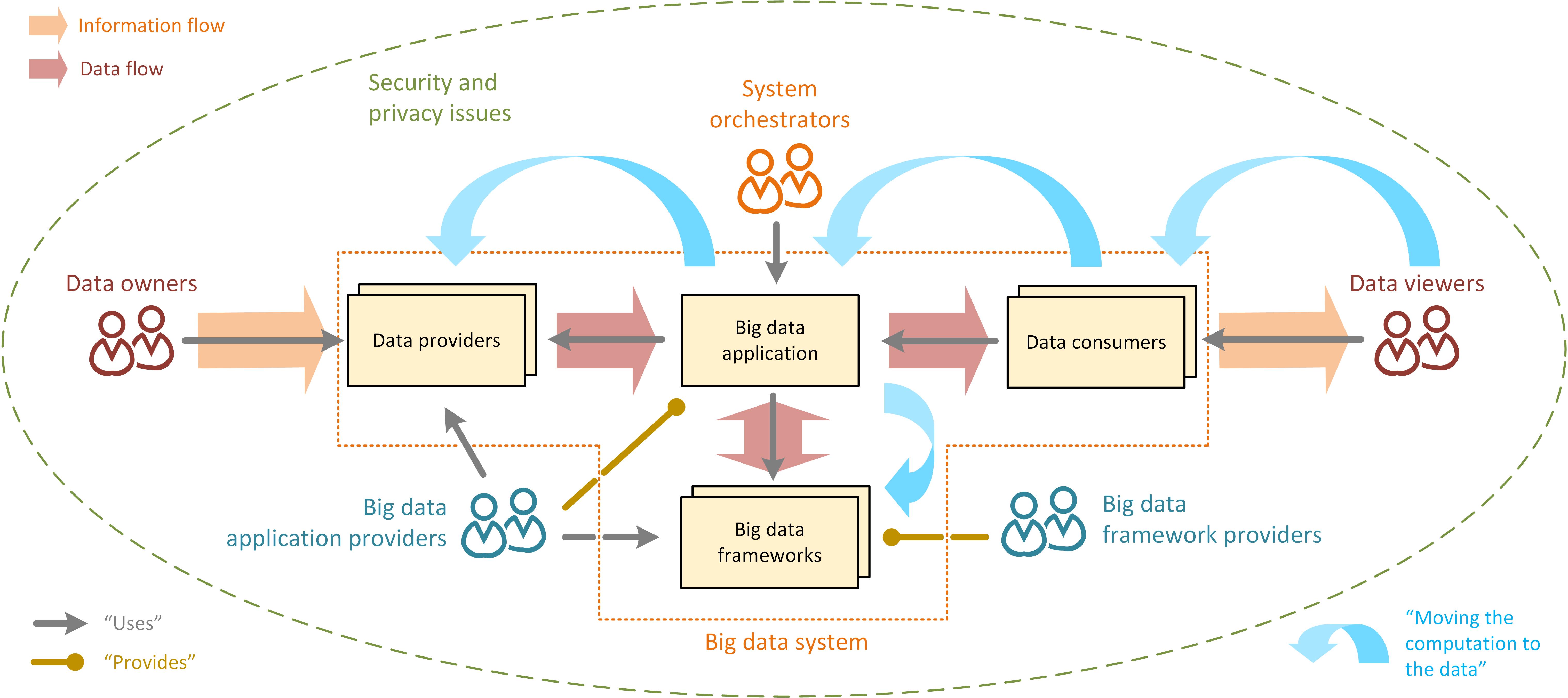

Big Data

Big data primarily refers to data sets that are too large or complex to be dealt with by traditional data-processing application software. Data with many entries (rows) offer greater statistical power, while data with higher complexity (more attributes or columns) may lead to a higher false discovery rate. Big data analysis challenges include capturing data, data storage, data analysis, search, sharing, transfer, visualization, querying, updating, information privacy, and data source. Big data was originally associated with three key concepts: ''volume'', ''variety'', and ''velocity''. The analysis of big data presents challenges in sampling, which previously allowed for only observations and sampling. Thus a fourth concept, ''veracity'', refers to the quality or insightfulness of the data. Without sufficient investm ...

Pig (programming Language)

Apache Pig is a high-level platform for creating programs that run on Apache Hadoop. The language for this platform is called Pig Latin. Pig can execute its Hadoop jobs in MapReduce, Apache Tez, or Apache Spark. Pig Latin abstracts the programming from the Java MapReduce idiom into a notation which makes MapReduce programming high level, similar to that of SQL for relational database management systems. Pig Latin can be extended using user-defined functions (UDFs), which the user can write in Java, Python, JavaScript, Ruby or Groovy and then call directly from the language.

Apache Pig was originally developed at Yahoo Research around 2006 to give researchers an ad hoc way of creating and executing MapReduce jobs on very large data sets. In 2007, it was moved into the Apache Software Foundation.

The name of the Pig programming language was chosen arbitrarily and stuck because it was memorable, easy to spell, and novel. ...

Google Capital

CapitalG Management Company LLC (file no. 5324444) (formerly Google Capital) is the independent growth fund under Alphabet Inc. Founded in 2013, it focuses on larger, growth-stage technology companies, and invests for profit rather than strategically for Google. In addition to capital investment, CapitalG's approach includes giving portfolio companies access to Google's people, knowledge, and culture to support the companies' growth and offer them guidance.

The fund began operating in 2013 but was only officially unveiled on February 19, 2014. The firm operates out of the Ferry Building in San Francisco. Following the Alphabet restructuring, Google Capital was renamed CapitalG on November 4, 2016.

CapitalG is ...

Hewlett Packard Enterprise

The Hewlett Packard Enterprise Company (HPE) is an American multinational information technology company based in Spring, Texas. It is a business-focused organization which works in servers, storage, networking, containerization software and consulting and support. HPE was ranked No. 107 in the 2018 ''Fortune'' 500 list of the largest United States corporations by total revenue. HPE was founded on November 1, 2015, in Palo Alto, California, as part of the splitting of the Hewlett-Packard company. The split was structured so that the former Hewlett-Packard Company would change its name to HP Inc. and spin off Hewlett Packard Enterprise as a newly created company. HP Inc. retained the old HP's personal computer and printing business, as well as its stock-price history and original NYSE ticker symbol for Hewlett-Packard; Enterprise trades under its own ticker symbol: HPE. At the time of the spin-off, HPE's revenue was slightly less than that of HP Inc. The company relocated to ...

Event Stream Processing

In computer science, stream processing (also known as event stream processing, data stream processing, or distributed stream processing) is a programming paradigm which views streams, or sequences of events in time, as the central input and output objects of computation. Stream processing encompasses dataflow programming, reactive programming, and distributed data processing. Stream processing systems aim to expose parallel processing for data streams and rely on streaming algorithms for efficient implementation. The software stack for these systems includes components such as programming models and query languages, for expressing computation; stream management systems, for distribution and scheduling; and hardware components for acceleration including floating-point units, graphics processing units, and field-programmable gate arrays. The stream processing paradigm simplifies parallel software and hardware by restricting the parallel computation that can be performed. Given a se ...
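To make the idea of streams as the input and output of a computation concrete, the following sketch consumes a stream of timestamped events and produces a stream of per-window sums. It is an illustrative example only: the `Event` shape, the `tumbling_sum` name, and the assumption that events arrive ordered by timestamp are all hypothetical choices, not features of any particular stream processing system.

```python
from typing import Iterable, Iterator, Tuple

# Hypothetical event shape: (timestamp_in_seconds, value).
Event = Tuple[int, float]


def tumbling_sum(events: Iterable[Event], width: int) -> Iterator[Tuple[int, float]]:
    """Yield (window_start, sum_of_values) for each fixed-width window.

    Assumes events arrive ordered by timestamp, so a window can be
    emitted as soon as the first event of a later window is seen.
    Windows that contain no events are not emitted.
    """
    current_start = None
    total = 0.0
    for timestamp, value in events:
        start = (timestamp // width) * width
        if current_start is None:
            current_start = start
        elif start != current_start:
            # A later window has begun: emit the finished one and reset.
            yield current_start, total
            current_start, total = start, 0.0
        total += value
    if current_start is not None:
        # Flush the last, still-open window when the stream ends.
        yield current_start, total


events = [(0, 1.0), (3, 2.0), (5, 4.0), (9, 1.0), (12, 3.0)]
windows = list(tumbling_sum(events, width=5))
print(windows)  # [(0, 3.0), (5, 5.0), (10, 3.0)]
```

Because `tumbling_sum` is a generator, it holds only the current window's running total in memory, which is the kind of restriction that lets stream computations run over unbounded input.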

HBase

HBase is an open-source non-relational distributed database modeled after Google's Bigtable and written in Java. It is developed as part of Apache Software Foundation's Apache Hadoop project and runs on top of HDFS (Hadoop Distributed File System) or Alluxio, providing Bigtable-like capabilities for Hadoop. That is, it provides a fault-tolerant way of storing large quantities of sparse data (small amounts of information caught within a large collection of empty or unimportant data, such as finding the 50 largest items in a group of 2 billion records, or finding the non-zero items representing less than 0.1% of a huge collection). HBase features compression, in-memory operation, and Bloom filters on a per-column basis as outlined in the original Bigtable paper. Tables in HBase can serve as the input and output for MapReduce jobs run in Hadoop, and may be accessed through the Java API but also through REST, Avro or Thrift gateway APIs. HBase is a wide-column store and has be ...
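The Bloom filters mentioned in the HBase excerpt above are a general data structure for cheaply answering "might this key be present?" before doing an expensive lookup. The sketch below is a minimal, generic Bloom filter in Python; it is not HBase's implementation, and the class name, the default sizes, and the SHA-256-based hashing scheme are assumptions chosen for illustration.

```python
import hashlib


class BloomFilter:
    """Minimal Bloom filter: no false negatives, occasional false positives."""

    def __init__(self, size_bits=1024, num_hashes=3):
        self.size_bits = size_bits
        self.num_hashes = num_hashes
        # One bit per position, packed into bytes (rounded up).
        self.bits = bytearray((size_bits + 7) // 8)

    def _positions(self, key):
        # Derive num_hashes bit positions by salting the key with an index.
        for i in range(self.num_hashes):
            digest = hashlib.sha256(f"{i}:{key}".encode("utf-8")).digest()
            yield int.from_bytes(digest[:8], "big") % self.size_bits

    def add(self, key):
        """Set every bit position derived from the key."""
        for pos in self._positions(key):
            self.bits[pos // 8] |= 1 << (pos % 8)

    def might_contain(self, key):
        """Return False if the key was definitely never added, else True."""
        return all(
            self.bits[pos // 8] & (1 << (pos % 8)) for pos in self._positions(key)
        )


bloom = BloomFilter()
for row_key in ["row-001", "row-002", "row-003"]:
    bloom.add(row_key)

print(bloom.might_contain("row-002"))  # True: added keys are always reported
```

A `False` answer is definitive, so a store can skip reading a file for that key; a `True` answer only means the key may be present and the real lookup is still needed.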

Computer Cluster

A computer cluster is a set of computers that work together so that they can be viewed as a single system. Unlike grid computers, computer clusters have each node set to perform the same task, controlled and scheduled by software. The newest manifestation of cluster computing is cloud computing. The components of a cluster are usually connected to each other through fast local area networks, with each node (computer used as a server) running its own instance of an operating system. In most circumstances, all of the nodes use the same hardware and the same operating system, although in some setups (e.g. using Open Source Cluster Application Resources (OSCAR)), different operating systems can be used on each computer, or different hardware. Clusters are usually deployed to improve performance and availability over that of a single computer, while typically being much more cost-effective than single computers of comparable speed or availability. Computer clusters emerged as ...

Distributed File System

A clustered file system (CFS) is a file system which is shared by being simultaneously mounted on multiple servers. There are several approaches to clustering, most of which do not employ a clustered file system (only direct attached storage for each node). Clustered file systems can provide features like location-independent addressing and redundancy which improve reliability or reduce the complexity of the other parts of the cluster. Parallel file systems are a type of clustered file system that spread data across multiple storage nodes, usually for redundancy or performance.

A shared-disk file system uses a storage area network (SAN) to allow multiple computers to gain direct disk access at the block level. Access control and translation from file-level operations that applications use to block-level operations used by the SAN must take place on the client node. The mos ...

Redpoint Ventures

Redpoint Ventures is an American venture capital firm focused on investments in seed, early and growth-stage companies.

The firm was founded in 1999 and is headquartered in Menlo Park, California, with offices in San Francisco, Los Angeles, Beijing and Shanghai. The firm manages $3.8 billion of capital. The firm's partners include Alex Bard, Allen Beasley, Erica Brescia, Jeff Brody, Satish Dharmaraj, Tom Dyal, Tim Haley, Brad Jones, Annie Kadavy, Chris Moore, Lars Pedersen, Scott Raney, John Walecka, Geoff Yang and David Yuan. The founders of Redpoint Ventures have been involved with successful investments including Foundry, Juniper Networks, Netflix and Right Media. Its partners have been involved in 136 IPOs and acquisitions. IPOs include Snowflake, Twilio, Pure Storage, 2U, Just Eat, Zendesk, HomeAway, Qihoo, Responsys, Fortinet and Calix. Acquisitions include Acompli, Caspida, Efficient Frontier, Heroku, RelateIQ, BlueKai, Posterous, Trip.com, LifeSize, Ref ...