
Logical Schema

A logical data model or logical schema is a data model of a specific problem domain expressed independently of a particular database management product or storage technology (physical data model) but in terms of data structures such as relational tables and columns, object-oriented classes, or XML tags. This is as opposed to a conceptual data model, which describes the semantics of an organization without reference to technology.

Overview

Logical data models represent the abstract structure of a domain of information. They are often diagrammatic in nature and are most typically used in business processes that seek to capture things of importance to an organization and how they relate to one another. Once validated and approved, the logical data model can become the basis of a physical data model and form the design of a database. Logical data models should be based on the structures identified in a preceding conceptual data model, since this describes the semantics of the informa ...

Data Model

A data model is an abstract model that organizes elements of data and standardizes how they relate to one another and to the properties of real-world entities. For instance, a data model may specify that the data element representing a car be composed of a number of other elements which, in turn, represent the color and size of the car and define its owner. The term data model can refer to two distinct but closely related concepts. Sometimes it refers to an abstract formalization of the objects and relationships found in a particular application domain: for example, the customers, products, and orders found in a manufacturing organization. At other times it refers to the set of concepts used in defining such formalizations: for example, concepts such as entities, attributes, relations, or tables. So the "data model" of a banking application may be defined using the entity-relationship "data model". This article uses the term in both senses. A data model explicitly determines the ...
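The car example above can be sketched in Python. This is only an illustration: the class names `Car` and `Owner`, the field `length_m` (standing in for "size"), and the sample values are assumptions, not part of the source article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Owner:
    """Hypothetical element identifying who owns the car."""
    name: str


@dataclass(frozen=True)
class Car:
    """A data element composed of other elements: color, size, and owner."""
    color: str
    length_m: float  # assumed way of expressing "size"
    owner: Owner


# One instance that conforms to the model above.
car = Car(color="red", length_m=4.2, owner=Owner(name="Alice"))
print(car)
```

In the second sense of the term, "class", "field", and "reference" are the concepts used here to define the model.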

Impact Evaluation

Impact evaluation assesses the changes that can be attributed to a particular intervention, such as a project, program or policy: both the intended changes and, ideally, the unintended ones. In contrast to outcome monitoring, which examines whether targets have been achieved, impact evaluation is structured to answer the question: how would outcomes such as participants' well-being have changed if the intervention had not been undertaken? This involves counterfactual analysis, that is, "a comparison between what actually happened and what would have happened in the absence of the intervention." Impact evaluations seek to answer cause-and-effect questions. In other words, they look for the changes in outcome that are directly attributable to a program. Impact evaluation helps people answer key questions for evidence-based policy making: what works, what doesn't, where, why and for how much? It has received increasing attention in policy making in recent years in the context of bo ...

Object-role Modeling

Object-role modeling (ORM) is used to model the semantics of a universe of discourse. ORM is often used for data modeling and software engineering. An object-role model uses graphical symbols that are based on first-order predicate logic and set theory to enable the modeler to create an unambiguous definition of an arbitrary universe of discourse. Attribute-free, the predicates of an ORM model lend themselves to the analysis and design of graph database models in as much as ORM was originally conceived to benefit relational database design. The term "object-role model" was coined in the 1970s and ORM-based tools have been used for more than 30 years – principally for data modeling. More recently ORM has been used to model business rules, XML Schemas, data warehouses, requirements engineering and web forms.

History

The roots of ORM can be traced to research into semantic modeling for information systems in Europe during the 1970s. There were many pioneers and this short ...

Database Schema

The database schema is the structure of a database described in a formal language supported by the database management system (DBMS). The term "schema" refers to the organization of data as a blueprint of how the database is constructed (divided into database tables in the case of relational databases). The formal definition of a database schema is a set of formulas (sentences) called integrity constraints imposed on a database. These integrity constraints ensure compatibility between parts of the schema. All constraints are expressible in the same language. A database can be considered a structure in realization of the database language. The states of a created conceptual schema are transformed into an explicit mapping, the database schema. This describes how real-world entities are modeled in the database. "A database schema specifies, based on the database administrator's knowledge of possible applications, the facts that can enter the database, or those of interest to the ...

Database Design

Database design is the organization of data according to a database model. The designer determines what data must be stored and how the data elements interrelate. With this information, they can begin to fit the data to the database model (Teorey, T.J., Lightstone, S.S., et al. (2009). Database Design: Know It All. 1st ed. Burlington, MA: Morgan Kaufmann Publishers). The database management system manages the data accordingly. Database design involves classifying data and identifying interrelationships. This theoretical representation of the data is called an ontology. The ontology is the theory behind the database's design.

Determining data to be stored

In a majority of cases, the person designing a database has expertise in database design rather than in the domain from which the data to be stored is drawn, e.g. financial information, biological information, etc. Therefore, the data to be stored in the database must be determined in ...

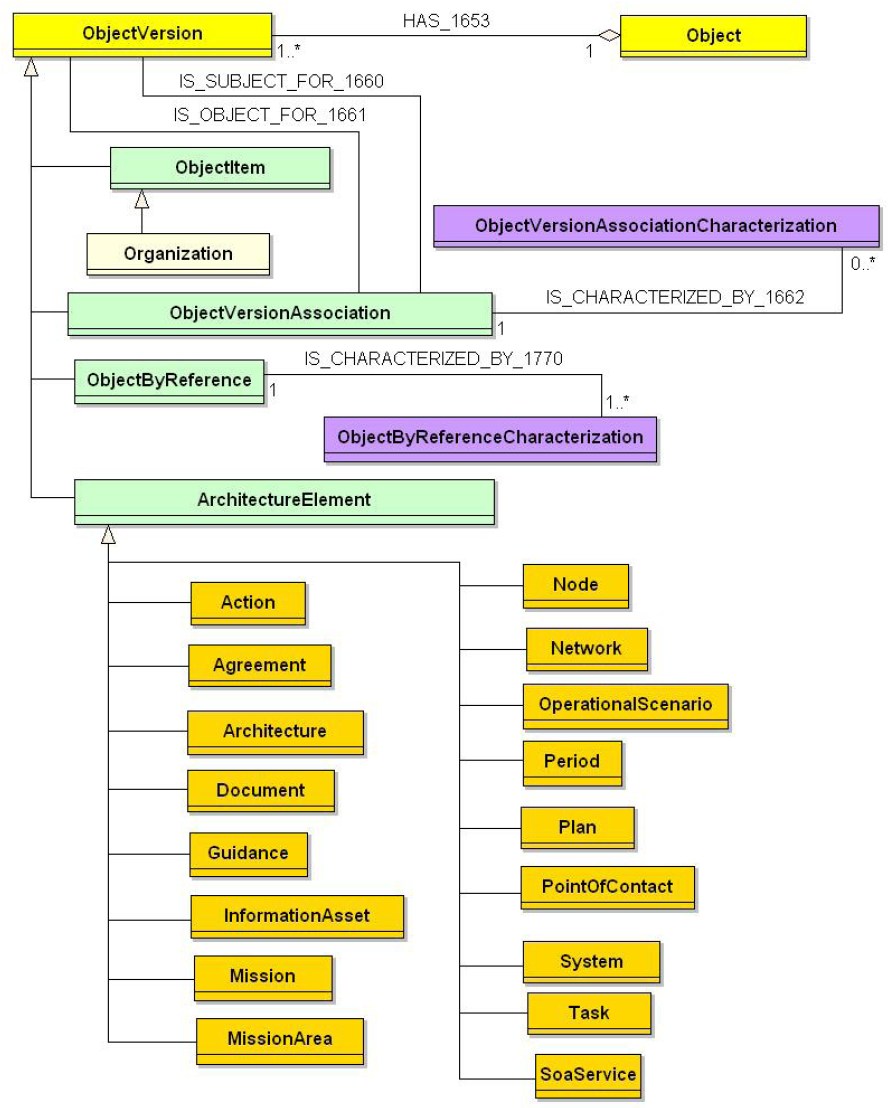

Core Architecture Data Model

Core architecture data model (CADM) in enterprise architecture is a logical data model of information used to describe and build architectures (GOA May 2001). The CADM is essentially a common database schema, defined within the US Department of Defense Architecture Framework (DoDAF). It was initially published in 1997 as a logical data model for architecture data. ...

DoDAF

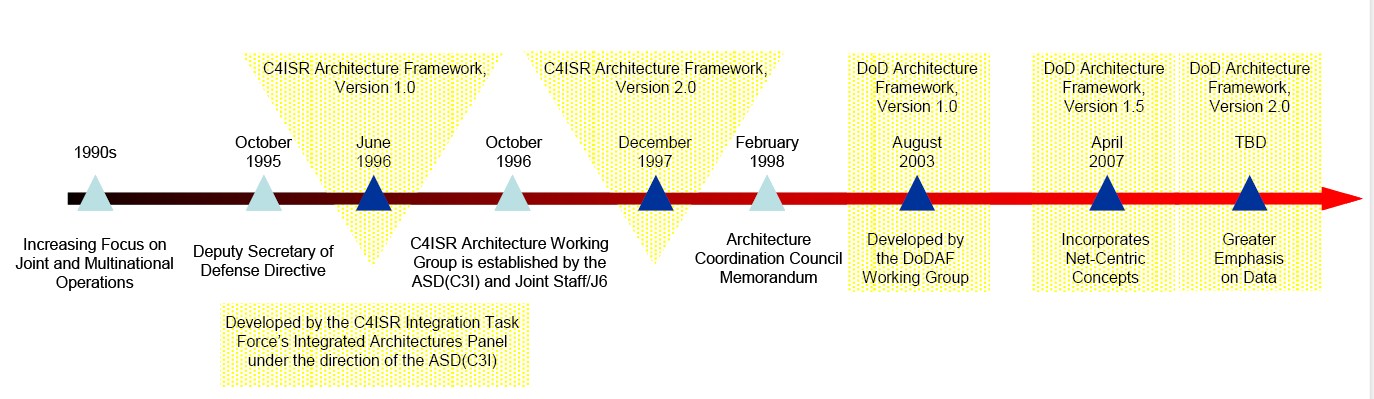

The Department of Defense Architecture Framework (DoDAF) is an architecture framework for the United States Department of Defense (DoD) that provides visualization infrastructure for specific stakeholders' concerns through viewpoints organized by various views. These views are artifacts for visualizing, understanding, and assimilating the broad scope and complexities of an architecture description through tabular, structural, behavioral, ontological, pictorial, temporal, graphical, probabilistic, or alternative conceptual means. The current release is DoDAF 2.02. This Architecture Framework is especially suited to large systems with complex integration and interoperability challenges, and it is apparently unique in its employment of "operational views". These views offer overview and details aimed at specific stakeholders within their domain and in interaction with other domains in which the system will operate.

Overview

The DoDAF provides a foundational framework for developi ...

Department of Defense Architecture Framework

The Department of Defense Architecture Framework (DoDAF) is an architecture framework for the United States Department of Defense (DoD) that provides visualization infrastructure for specific stakeholders' concerns through viewpoints organized by various views. These views are artifacts for visualizing, understanding, and assimilating the broad scope and complexities of an architecture description through tabular, structural, behavioral, ontological, pictorial, temporal, graphical, probabilistic, or alternative conceptual means. The current release is DoDAF 2.02. This Architecture Framework is especially suited to large systems with complex integration and in ...

Process Model

The term process model is used in various contexts. For example, in business process modeling the enterprise process model is often referred to as the business process model.

Overview

Process models are processes of the same nature that are classified together into a model. Thus, a process model is a description of a process at the type level. Since the process model is at the type level, a process is an instantiation of it. The same process model is used repeatedly for the development of many applications and thus has many instantiations. One possible use of a process model is to prescribe how things must, should, or could be done, in contrast to the process itself, which is what really happens. A process model is roughly an anticipation of what the process will look like. What the process shall be will be determined during actual system development. Colette Rolland and Pernici, C. Thanos (1998). A Comprehensive View of Process Engineering. Proceedings of the 10th International ...

Physical Data Model

A physical data model (or database design) is a representation of a data design as implemented, or intended to be implemented, in a database management system. In the lifecycle of a project it typically derives from a logical data model, though it may be reverse-engineered from a given database implementation. A complete physical data model will include all the database artifacts required to create relationships between tables or to achieve performance goals, such as indexes, constraint definitions, linking tables, partitioned tables or clusters. Analysts can usually use a physical data model to calculate storage estimates; it may include specific storage allocation details for a given database system. Seven main databases dominate the commercial marketplace: Informix, Oracle, Postgres, SQL Server, Sybase, IBM Db2 and MySQL. Other RDBMS systems tend either to be legacy databases or to be used within academia, such as universities or further education colleges. Physical data ...
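As a rough illustration of the artifacts mentioned above (constraint definitions and indexes), the following sketch builds a tiny physical model in an in-memory SQLite database using Python's standard `sqlite3` module. The table names `customer` and `customer_order`, the index name `idx_order_customer`, and the sample rows are hypothetical; SQLite is chosen only because it ships with Python, not because the source names it.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# SQLite enforces foreign keys only when this pragma is switched on.
conn.execute("PRAGMA foreign_keys = ON")

# Physical artifacts: tables, a foreign-key constraint, and an index.
conn.executescript("""
CREATE TABLE customer (
    customer_id INTEGER PRIMARY KEY,
    name        TEXT NOT NULL
);
CREATE TABLE customer_order (
    order_id    INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customer(customer_id),
    placed_on   TEXT NOT NULL
);
CREATE INDEX idx_order_customer ON customer_order(customer_id);
""")

conn.execute("INSERT INTO customer (customer_id, name) VALUES (1, 'Alice')")
conn.execute(
    "INSERT INTO customer_order (order_id, customer_id, placed_on) "
    "VALUES (10, 1, '2024-01-01')"
)

# An order that points at a non-existent customer should be rejected.
try:
    conn.execute(
        "INSERT INTO customer_order (order_id, customer_id, placed_on) "
        "VALUES (11, 99, '2024-01-02')"
    )
    fk_enforced = False
except sqlite3.IntegrityError:
    fk_enforced = True

# List the explicitly created indexes recorded in the catalog.
index_names = [
    row[0]
    for row in conn.execute("SELECT name FROM sqlite_master WHERE type = 'index'")
]
print(fk_enforced, index_names)
```

The same logical model (customers place orders) could be mapped to a different physical design on another DBMS, for example with partitioned tables or different index choices.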

Data Redundancy

In computer main memory, auxiliary storage and computer buses, data redundancy is the existence of data that is additional to the actual data and permits correction of errors in stored or transmitted data. The additional data can simply be a complete copy of the actual data (a type of repetition code), or only select pieces of data that allow detection of errors and reconstruction of lost or damaged data up to a certain level. For example, by including additional data checksums, ECC memory is capable of detecting and correcting single-bit errors within each memory word, while RAID 1 combines two hard disk drives (HDDs) into a logical storage unit that allows stored data to survive a complete failure of one drive. Data redundancy can also be used as a measure against silent data corruption; for example, file systems such as Btrfs and ZFS use data and metadata checksumming in combination with copies of stored data to detect silent data corruption and repair its effects. In d ...
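The idea of combining a checksum with a full copy can be sketched in a few lines of Python. This is a toy model under stated assumptions, not how Btrfs, ZFS, ECC memory, or RAID 1 are implemented: the functions `store` and `read` and the record layout are hypothetical, and CRC-32 from the standard `zlib` module stands in for whatever checksum a real system uses.

```python
import zlib


def store(payload: bytes) -> dict:
    """Keep two copies of the payload plus one checksum (the redundant data)."""
    return {
        "copies": [bytearray(payload), bytearray(payload)],
        "checksum": zlib.crc32(payload),
    }


def read(record: dict) -> bytes:
    """Return the first copy whose checksum matches and repair the others."""
    for copy in record["copies"]:
        if zlib.crc32(bytes(copy)) == record["checksum"]:
            good = bytes(copy)
            # Overwrite every copy with the verified data.
            record["copies"] = [bytearray(good), bytearray(good)]
            return good
    raise IOError("all copies failed checksum verification")


record = store(b"hello world")
record["copies"][0][0] ^= 0x01  # simulate a silent single-bit flip in copy 0
recovered = read(record)
print(recovered)
```

The checksum alone only detects the corruption; it is the second copy that makes repair possible.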