|

List Update Problem

The List Update or the List Access problem is a simple model used in the study of competitive analysis of online algorithms. Given a set of items in a list where the cost of accessing an item is proportional to its distance from the head of the list, e.g. a linked List, and a request sequence of accesses, the problem is to come up with a strategy of reordering the list so that the total cost of accesses is minimized. The reordering can be done at any time but incurs a cost. The standard model includes two reordering actions: * A free transposition of the item being accessed anywhere ahead of its current position; * A paid transposition of a unit cost for exchanging any two adjacent items in the list. Performance of algorithms depend on the construction of request sequences by adversaries under various adversary models An online algorithm for this problem has to reorder the elements and serve requests based only on the knowledge of previously requested items and hence its strategy ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Competitive Analysis (online Algorithm)

Competitive analysis is a method invented for analyzing online algorithms, in which the performance of an online algorithm (which must satisfy an unpredictable sequence of requests, completing each request without being able to see the future) is compared to the performance of an optimal ''offline algorithm'' that can view the sequence of requests in advance. An algorithm is ''competitive'' if its ''competitive ratio''—the ratio between its performance and the offline algorithm's performance—is bounded. Unlike traditional worst-case analysis, where the performance of an algorithm is measured only for "hard" inputs, competitive analysis requires that an algorithm perform well both on hard and easy inputs, where "hard" and "easy" are defined by the performance of the optimal offline algorithm. For many algorithms, performance is dependent not only on the size of the inputs, but also on their values. For example, sorting an array of elements varies in difficulty depending on th ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Online Algorithms

In computer technology and telecommunications, online indicates a state of connectivity, and offline indicates a disconnected state. In modern terminology, this usually refers to an Internet connection, but (especially when expressed as "on line" or "on the line") could refer to any piece of equipment or functional unit that is connected to a larger system. Being online means that the equipment or subsystem is connected, or that it is ready for use. "Online" has come to describe activities and concepts that take place on the Internet, such as online identity, online predator and online shop. A similar meaning is also given by the prefixes cyber and e, as in words ''cyberspace'', ''cybercrime'', ''email'', and '' e-commerce''. In contrast, "offline" can refer to either computing activities performed while disconnected from the Internet, or alternatives to Internet activities (such as shopping in brick-and-mortar stores). The term "offline" is sometimes used interchangeably ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Linked List

In computer science, a linked list is a linear collection of data elements whose order is not given by their physical placement in memory. Instead, each element points to the next. It is a data structure consisting of a collection of nodes which together represent a sequence. In its most basic form, each node contains data, and a reference (in other words, a ''link'') to the next node in the sequence. This structure allows for efficient insertion or removal of elements from any position in the sequence during iteration. More complex variants add additional links, allowing more efficient insertion or removal of nodes at arbitrary positions. A drawback of linked lists is that data access time is linear in respect to the number of nodes in the list. Because nodes are serially linked, accessing any node requires that the prior node be accessed beforehand (which introduces difficulties in pipelining). Faster access, such as random access, is not feasible. Arrays have better cache ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Adversary Model

In computer science, an online algorithm measures its competitiveness against different adversary models. For deterministic algorithms, the adversary is the same as the adaptive offline adversary. For randomized online algorithms competitiveness can depend upon the adversary model used. Common adversaries The three common adversaries are the oblivious adversary, the adaptive online adversary, and the adaptive offline adversary. The oblivious adversary is sometimes referred to as the weak adversary. This adversary knows the algorithm's code, but does not get to know the randomized results of the algorithm. The adaptive online adversary is sometimes called the medium adversary. This adversary must make its own decision before it is allowed to know the decision of the algorithm. The adaptive offline adversary is sometimes called the strong adversary. This adversary knows everything, even the random number generator. This adversary is so strong that randomization does not help agains ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Burrows–Wheeler Transform

The Burrows–Wheeler transform (BWT) rearranges a character string into runs of similar characters, in a manner that can be reversed to recover the original string. Since compression techniques such as move-to-front transform and run-length encoding are more effective when such runs are present, the BWT can be used as a preparatory step to improve the efficiency of a compression algorithm, and is used this way in software such as bzip2. The algorithm can be implemented efficiently using a suffix array thus reaching linear time complexity. It was invented by David Wheeler in 1983, and later published by him and Michael Burrows in 1994. Their paper included a compression algorithm, called the Block-sorting Lossless Data Compression Algorithm or BSLDCA, that compresses data by using the BWT followed by move-to-front coding and Huffman coding or arithmetic coding. Description The transform is done by constructing a matrix (known as the Burrows-Wheeler Matrix) whose rows are the ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

NP-hard

In computational complexity theory, a computational problem ''H'' is called NP-hard if, for every problem ''L'' which can be solved in non-deterministic polynomial-time, there is a polynomial-time reduction from ''L'' to ''H''. That is, assuming a solution for ''H'' takes 1 unit time, ''H''s solution can be used to solve ''L'' in polynomial time. As a consequence, finding a polynomial time algorithm to solve a single NP-hard problem would give polynomial time algorithms for all the problems in the complexity class NP. As it is suspected, but unproven, that P≠NP, it is unlikely that any polynomial-time algorithms for NP-hard problems exist. A simple example of an NP-hard problem is the subset sum problem. Informally, if ''H'' is NP-hard, then it is at least as difficult to solve as the problems in NP. However, the opposite direction is not true: some problems are undecidable, and therefore even more difficult to solve than all problems in NP, but they are probably not NP- ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Potential Method

In computational complexity theory, the potential method is a method used to analyze the amortized time and space complexity of a data structure, a measure of its performance over sequences of operations that smooths out the cost of infrequent but expensive operations.. Definition of amortized time In the potential method, a function Φ is chosen that maps states of the data structure to non-negative numbers. If ''S'' is a state of the data structure, Φ(''S'') represents work that has been accounted for ("paid for") in the amortized analysis but not yet performed. Thus, Φ(''S'') may be thought of as calculating the amount of potential energy stored in that state. The potential value prior to the operation of initializing a data structure is defined to be zero. Alternatively, Φ(''S'') may be thought of as representing the amount of disorder in state ''S'' or its distance from an ideal state. Let ''o'' be any individual operation within a sequence of operations on some data stru ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Online Algorithm

In computer science, an online algorithm is one that can process its input piece-by-piece in a serial fashion, i.e., in the order that the input is fed to the algorithm, without having the entire input available from the start. In contrast, an offline algorithm is given the whole problem data from the beginning and is required to output an answer which solves the problem at hand. In operations research Operations research () (U.S. Air Force Specialty Code: Operations Analysis), often shortened to the initialism OR, is a branch of applied mathematics that deals with the development and application of analytical methods to improve management and ..., the area in which online algorithms are developed is called online optimization. As an example, consider the sorting algorithms selection sort and insertion sort: selection sort repeatedly selects the minimum element from the unsorted remainder and places it at the front, which requires access to the entire input; it is thus a ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Cache Algorithms

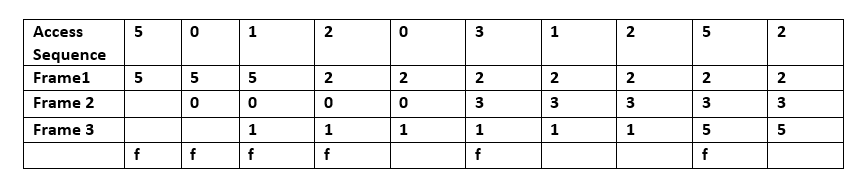

In computing, cache replacement policies (also known as cache replacement algorithms or cache algorithms) are optimizing instructions or algorithms which a computer program or hardware-maintained structure can utilize to manage a cache of information. Caching improves performance by keeping recent or often-used data items in memory locations which are faster, or computationally cheaper to access, than normal memory stores. When the cache is full, the algorithm must choose which items to discard to make room for new data. __TOC__ Overview The average memory reference time is : T = m \times T_m + T_h + E where : m = miss ratio = 1 - (hit ratio) : T_m = time to make main-memory access when there is a miss (or, with a multi-level cache, average memory reference time for the next-lower cache) : T_h= latency: time to reference the cache (should be the same for hits and misses) : E = secondary effects, such as queuing effects in multiprocessor systems A cache has two primary figures o ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Analysis Of Algorithms

In computer science, the analysis of algorithms is the process of finding the computational complexity of algorithms—the amount of time, storage, or other resources needed to execute them. Usually, this involves determining a function that relates the size of an algorithm's input to the number of steps it takes (its time complexity) or the number of storage locations it uses (its space complexity). An algorithm is said to be efficient when this function's values are small, or grow slowly compared to a growth in the size of the input. Different inputs of the same size may cause the algorithm to have different behavior, so best, worst and average case descriptions might all be of practical interest. When not otherwise specified, the function describing the performance of an algorithm is usually an upper bound, determined from the worst case inputs to the algorithm. The term "analysis of algorithms" was coined by Donald Knuth. Algorithm analysis is an important part of a broa ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Online Algorithms

In computer technology and telecommunications, online indicates a state of connectivity, and offline indicates a disconnected state. In modern terminology, this usually refers to an Internet connection, but (especially when expressed as "on line" or "on the line") could refer to any piece of equipment or functional unit that is connected to a larger system. Being online means that the equipment or subsystem is connected, or that it is ready for use. "Online" has come to describe activities and concepts that take place on the Internet, such as online identity, online predator and online shop. A similar meaning is also given by the prefixes cyber and e, as in words ''cyberspace'', ''cybercrime'', ''email'', and '' e-commerce''. In contrast, "offline" can refer to either computing activities performed while disconnected from the Internet, or alternatives to Internet activities (such as shopping in brick-and-mortar stores). The term "offline" is sometimes used interchangeably ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |