List Of Algebraic Coding Theory Topics

This is a list of algebraic coding theory topics.

* ARQ
* Adler-32
* BCH code
* BCJR algorithm
* Belief propagation
* Berger code
* Berlekamp–Massey algorithm
* Binary Golay code
* Bipolar violation
* CRHF
* Casting out nines
* Check digit
* Chien's search
* Chipkill
* Cksum
* Coding gain
* Coding theory
* Constant-weight code
* Convolutional code
* Cross R-S code
* Cryptographic hash function
* Cyclic redundancy check
* Damm algorithm
* Dual code
* EXIT chart
* Error-correcting code
* Enumerator polynomial
* Fletcher's checksum
* Forward error correction
* Forward-backward algorithm
* Gilbert–Varshamov bound
* Goppa code
* GOST (hash function)
* Group coded recording
* HAS-160
* HAS-V
* HAVAL
* Hadamard code
* Hagelbarger code
* Hamming bound
* Hamming code
* Hamming(7,4)
* Hamming distance
* Hamming weight
* Hash collision
* Hash function
* Hash list
* Hash tree
* Induction puzzles
* Integrity check value
* Inte ...

Algebraic Coding Theory

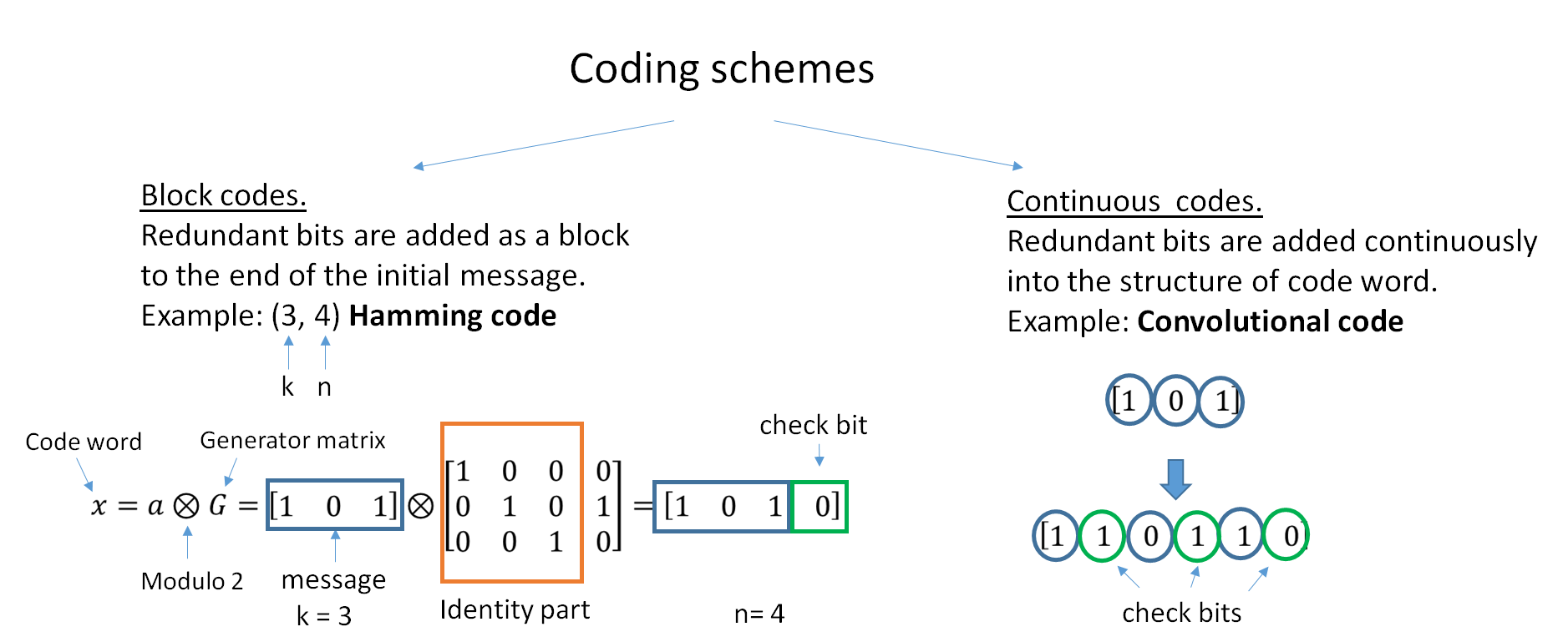

Coding theory is the study of the properties of codes and their respective fitness for specific applications. Codes are used for data compression, cryptography, error detection and correction, data transmission and data storage. Codes are studied by various scientific disciplines, such as information theory, electrical engineering, mathematics, linguistics, and computer science, for the purpose of designing efficient and reliable data transmission methods. This typically involves the removal of redundancy and the correction or detection of errors in the transmitted data. There are four types of coding:

1. Data compression (or source coding)
2. Error control (or channel coding)
3. Cryptographic coding
4. Line coding

Data compression attempts to remove unwanted redundancy from source data in order to transmit it more efficiently. For example, ZIP data compression makes data files smaller, for purposes such as reducing Internet traffic. Data compression and er ...
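
A rough way to see what "removing redundancy" means is to compress a repetitive input and a random one. The sketch below assumes Python's standard `zlib` module, which implements DEFLATE (the compression method most ZIP archives use). The inputs and the helper name `compression_ratio` are illustrative choices, not part of the text above.

```python
import os
import zlib


def compression_ratio(data: bytes) -> float:
    """Compressed size divided by original size, using zlib (DEFLATE)."""
    compressed = zlib.compress(data, 9)  # 9 = maximum compression level
    # DEFLATE is lossless: decompressing must give back the exact input.
    assert zlib.decompress(compressed) == data
    return len(compressed) / len(data)


redundant = b"coding theory " * 1000  # 14,000 highly repetitive bytes
random_data = os.urandom(14_000)      # 14,000 bytes with (almost) no redundancy

print(f"repetitive input: ratio {compression_ratio(redundant):.3f}")
print(f"random input:     ratio {compression_ratio(random_data):.3f}")
```

The repetitive input should shrink to a small fraction of its size. The random bytes should stay at roughly their original size, or end up slightly larger because of format overhead, since there is essentially no redundancy left to remove.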

Forward Error Correction

In computing, telecommunication, information theory, and coding theory, an error correction code (ECC), sometimes called an error-correcting code, is used for controlling errors in data over unreliable or noisy communication channels. The central idea is that the sender encodes the message with redundant information in the form of an ECC. The redundancy allows the receiver to detect a limited number of errors that may occur anywhere in the message, and often to correct these errors without retransmission. The American mathematician Richard Hamming pioneered this field in the 1940s and invented the first error-correcting code in 1950: the Hamming (7,4) code. ECC contrasts with error detection in that errors that are encountered can be corrected, not simply detected. The advantage is that a system using ECC does not require a reverse channel to request retransmission of data when an error occurs. The downside is that there is a fixed overhead that is added to the message, thereby requiring a ...
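
The following minimal sketch makes the encode/correct cycle concrete with a Hamming (7,4) encoder and single-error-correcting decoder. It assumes the common bit layout p1 p2 d1 p3 d2 d3 d4, with parity bits at positions 1, 2 and 4. The function names are hypothetical, and other texts order the bits differently.

```python
from itertools import product


def hamming74_encode(d: list[int]) -> list[int]:
    """Encode 4 data bits as 7 bits laid out p1 p2 d1 p3 d2 d3 d4."""
    d1, d2, d3, d4 = d
    p1 = d1 ^ d2 ^ d4  # parity over positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # parity over positions 2, 3, 6, 7
    p3 = d2 ^ d3 ^ d4  # parity over positions 4, 5, 6, 7
    return [p1, p2, d1, p3, d2, d3, d4]


def hamming74_decode(r: list[int]) -> list[int]:
    """Correct at most one flipped bit, then return the 4 data bits."""
    r = list(r)  # work on a copy
    s1 = r[0] ^ r[2] ^ r[4] ^ r[6]
    s2 = r[1] ^ r[2] ^ r[5] ^ r[6]
    s3 = r[3] ^ r[4] ^ r[5] ^ r[6]
    pos = s1 + 2 * s2 + 4 * s3  # 1-based position of the error, 0 if none
    if pos:
        r[pos - 1] ^= 1
    return [r[2], r[4], r[5], r[6]]


# Exhaustive check: every message survives every single-bit error.
for data in product([0, 1], repeat=4):
    codeword = hamming74_encode(list(data))
    for i in range(7):
        corrupted = codeword.copy()
        corrupted[i] ^= 1
        assert hamming74_decode(corrupted) == list(data)
print("all single-bit errors corrected")
```

Read as a binary number, the syndrome bits give the position of a single flipped bit, which is why no retransmission is needed. With two or more errors, however, this decoder miscorrects.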

Fletcher's Checksum

The Fletcher checksum is an algorithm for computing a position-dependent checksum devised by John G. Fletcher (1934–2012) at Lawrence Livermore Labs in the late 1970s. The objective of the Fletcher checksum was to provide error-detection properties approaching those of a cyclic redundancy check but with the lower computational effort associated with summation techniques.

The algorithm: review of simple checksums. As with simpler checksum algorithms, the Fletcher checksum involves dividing the binary data word to be protected from errors into short "blocks" of bits and computing the modular sum of those blocks. (Note that the terminology used in this domain can be confusing. The data to be protected, in its entirety, is referred to as a "word", and the pieces into which it is divided are referred to as "blocks".) As an example, the data may be a message to be transmitted consisting of 136 characters, each stored as an 8-bit byte, making a data word of 1088 bits in total. A co ...
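
Below is a minimal sketch of the 16-bit variant (Fletcher-16), assuming 8-bit blocks and modulus 255; the function name is hypothetical. The second running sum accumulates the first, which makes the result depend on block positions, unlike a plain modular sum.

```python
def fletcher16(data: bytes) -> int:
    """Fletcher-16 over 8-bit blocks: two running sums modulo 255."""
    sum1 = 0  # plain modular sum of the blocks
    sum2 = 0  # sum of the successive sum1 values; carries position information
    for byte in data:
        sum1 = (sum1 + byte) % 255
        sum2 = (sum2 + sum1) % 255
    return (sum2 << 8) | sum1


print(hex(fletcher16(b"abcde")))                # 0xc8f0
print(fletcher16(b"ab") != fletcher16(b"ba"))   # True: reordering is detected
print(sum(b"ab") % 255 == sum(b"ba") % 255)     # True: a plain sum cannot tell
```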

Enumerator Polynomial

In coding theory, the weight enumerator polynomial of a binary linear code specifies the number of words of each possible Hamming weight. Let $C \subset \mathbb{F}_2^n$ be a binary linear code of length $n$. The weight distribution is the sequence of numbers

$$A_t = \#\{c \in C \mid \operatorname{wt}(c) = t\}$$

giving the number of codewords $c$ in $C$ having Hamming weight $\operatorname{wt}(c) = t$ as $t$ ranges from $0$ to $n$. The weight enumerator is the bivariate polynomial

$$W(C;x,y) = \sum_{w=0}^{n} A_w x^w y^{n-w}.$$

Basic properties:

1. $W(C;0,1) = A_0 = 1$
2. $W(C;1,1) = \sum_{w=0}^{n} A_w = |C|$
3. $W(C;1,0) = A_n = 1$ if $(1,\ldots,1) \in C$, and $0$ otherwise
4. $W(C;1,-1) = \sum_{w=0}^{n} A_w (-1)^{n-w} = A_n + (-1)^1 A_{n-1} + \ldots + (-1)^{n-1} A_1 + (-1)^n A_0$

MacWilliams identity: denote the dual code of $C \subset \mathbb{F}_2^n$ by

$$C^\perp = \{x \in \mathbb{F}_2^n \mid \langle x, c \rangle = 0 \ \text{for all}\ c \in C\}$$

(where $\langle\ ,\ \rangle$ denotes the vector dot product, taken over $\mathbb{F}_2$). The MacWilliams identity states that

$$W(C^\perp;x,y) = \frac{1}{|C|}\, W(C;y-x,y+x).$$

The identity is named after Jessie MacWilliams. Distance enumerator: the distance distribution or ...
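
The MacWilliams identity can be checked numerically on a small example. The sketch below assumes a [7,4] Hamming code with the generator matrix shown, which is one of several equivalent choices. It enumerates $C$ and $C^\perp$ by brute force, then expands $\frac{1}{|C|}W(C;y-x,y+x)$ coefficient by coefficient. The helper names are hypothetical, and brute force is only practical for small $n$.

```python
from itertools import product
from math import comb

# Generator matrix of a [7,4] Hamming code (an assumed, standard choice).
G = [
    [1, 0, 0, 0, 0, 1, 1],
    [0, 1, 0, 0, 1, 0, 1],
    [0, 0, 1, 0, 1, 1, 0],
    [0, 0, 0, 1, 1, 1, 1],
]
N = 7


def span(rows):
    """All F_2-linear combinations of the generator rows, i.e. the code C."""
    return {tuple(sum(c * r[i] for c, r in zip(coeffs, rows)) % 2 for i in range(N))
            for coeffs in product([0, 1], repeat=len(rows))}


def dual(code):
    """Brute-force C-perp: every x in F_2^N orthogonal (mod 2) to all codewords."""
    return {x for x in product([0, 1], repeat=N)
            if all(sum(a * b for a, b in zip(x, c)) % 2 == 0 for c in code)}


def weight_distribution(code):
    """A[t] = number of codewords of Hamming weight t."""
    A = [0] * (N + 1)
    for c in code:
        A[sum(c)] += 1
    return A


def macwilliams(A, size):
    """Coefficients of x^j y^(N-j) in W(C; y-x, y+x) / |C|.

    (y-x)^w (y+x)^(N-w) contributes sum_i (-1)^i C(w,i) C(N-w,j-i) to x^j y^(N-j).
    """
    B = []
    for j in range(N + 1):
        total = sum(A[w] * sum((-1) ** i * comb(w, i) * comb(N - w, j - i)
                               for i in range(min(w, j) + 1))
                    for w in range(N + 1))
        B.append(total // size)  # exact division when the identity holds
    return B


C = span(G)
A = weight_distribution(C)
print(A)                              # [1, 0, 0, 7, 7, 0, 0, 1]
print(weight_distribution(dual(C)))   # [1, 0, 0, 0, 7, 0, 0, 0]
print(macwilliams(A, len(C)))         # should match the line above
```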

EXIT Chart

An extrinsic information transfer chart, commonly called an EXIT chart, is a technique to aid the construction of good iteratively decoded error-correcting codes (in particular low-density parity-check (LDPC) codes and Turbo codes). EXIT charts were developed by Stephan ten Brink, building on the concept of extrinsic information developed in the Turbo coding community (Stephan ten Brink, "Convergence of Iterative Decoding", Electronics Letters, 35(10), May 1999). An EXIT chart includes the response of elements of the decoder (for example, a convolutional decoder of a Turbo code, the LDPC parity-check nodes or the LDPC variable nodes). The response can be seen either as extrinsic information or as a representation of the messages in belief propagation. If there are two components which exchange messages, the behaviour of the decoder can be plotted on a two-dimensional chart. One component is plotted with its input on the horizontal axis and its output on the ...

Dual Code

In coding theory, the dual code of a linear code $C \subset \mathbb{F}_q^n$ is the linear code defined by

$$C^\perp = \{x \in \mathbb{F}_q^n \mid \langle x, c \rangle = 0 \ \text{for all}\ c \in C\}$$

where

$$\langle x, c \rangle = \sum_{i=1}^{n} x_i c_i$$

is a scalar product. In linear algebra terms, the dual code is the annihilator of $C$ with respect to the bilinear form $\langle \cdot, \cdot \rangle$. The dimensions of $C$ and its dual always add up to the length $n$:

$$\dim C + \dim C^\perp = n.$$

A generator matrix for the dual code is a parity-check matrix for the original code and vice versa. The dual of the dual code is always the original code.

Self-dual codes: a self-dual code is one which is its own dual. This implies that $n$ is even and $\dim C = n/2$. If a self-dual code is such that each codeword's weight is a multiple of some constant $c > 1$, then it is of one of the following four types:

* Type I codes are binary self-dual codes which are not doubly even. Type I codes are always even (every codeword has even Hamming weight) ...
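
The next example is a brute-force sketch over $\mathbb{F}_2$ of the dimension formula, the double-dual property and self-duality. The example code is assumed to be the extended [8,4] Hamming code (a [7,4] Hamming code with an overall parity bit appended), a standard self-dual code. Helper names are hypothetical, and brute force only scales to small $n$.

```python
from itertools import product


def span(rows):
    """All F_2-linear combinations of the generator rows."""
    n = len(rows[0])
    return {tuple(sum(c * r[i] for c, r in zip(coeffs, rows)) % 2 for i in range(n))
            for coeffs in product([0, 1], repeat=len(rows))}


def dual(code, n):
    """Brute-force C-perp over F_2: all x with <x, c> = 0 for every c in C."""
    return {x for x in product([0, 1], repeat=n)
            if all(sum(a * b for a, b in zip(x, c)) % 2 == 0 for c in code)}


def dim(code):
    """A binary linear code with 2**k codewords has dimension k."""
    return len(code).bit_length() - 1


# Extended [8,4] Hamming code: a [7,4] Hamming code plus an overall parity bit.
G8 = [
    [1, 0, 0, 0, 0, 1, 1, 1],
    [0, 1, 0, 0, 1, 0, 1, 1],
    [0, 0, 1, 0, 1, 1, 0, 1],
    [0, 0, 0, 1, 1, 1, 1, 0],
]
C = span(G8)
C_perp = dual(C, 8)

print(dim(C), dim(C_perp))              # 4 4  (dimensions add up to n = 8)
print(C_perp == C)                      # True: the code is self-dual
print(dual(C_perp, 8) == C)             # True: the dual of the dual is C
print(all(sum(c) % 4 == 0 for c in C))  # True: doubly even, so not Type I
```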

Damm Algorithm

In error detection, the Damm algorithm is a check digit algorithm that detects all single-digit errors and all adjacent transposition errors. It was presented by H. Michael Damm in 2004.

Strengths and weaknesses. Strengths: the Damm algorithm is similar to the Verhoeff algorithm. It too will detect all occurrences of the two most frequently appearing types of transcription errors, namely altering a single digit and transposing two adjacent digits (including the transposition of the trailing check digit and the preceding digit). However, the Damm algorithm has the advantage that it does without the specially constructed permutations and position-specific powers inherent in the Verhoeff scheme. Furthermore, a table of inverses can be dispensed with provided all main diagonal entries of the operation table are zero. The Damm algorithm does not suffer from having more than 10 possible check values, which would require a non-digit character (as the X ...
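
A minimal sketch of check-digit generation and validation follows. The operation table is assumed to be the order-10 weakly totally anti-symmetric quasigroup commonly published with the algorithm. Its main diagonal is all zeros, so processing the check digit (which equals the interim digit) returns the interim to 0 and no inverse table is needed. Function names are hypothetical.

```python
# Assumed operation table (rows: interim digit, columns: next input digit).
TABLE = [
    [0, 3, 1, 7, 5, 9, 8, 6, 4, 2],
    [7, 0, 9, 2, 1, 5, 4, 8, 6, 3],
    [4, 2, 0, 6, 8, 7, 1, 3, 5, 9],
    [1, 7, 5, 0, 9, 8, 3, 4, 2, 6],
    [6, 1, 2, 3, 0, 4, 5, 9, 7, 8],
    [3, 6, 7, 4, 2, 0, 9, 5, 8, 1],
    [5, 8, 6, 9, 7, 2, 0, 1, 3, 4],
    [8, 9, 4, 5, 3, 6, 2, 0, 1, 7],
    [9, 4, 3, 8, 6, 1, 7, 2, 0, 5],
    [2, 5, 8, 1, 4, 3, 6, 7, 9, 0],
]


def damm_interim(digits: str) -> int:
    """Run the digits through the table, starting from interim digit 0."""
    interim = 0
    for ch in digits:
        interim = TABLE[interim][int(ch)]
    return interim


def damm_check_digit(number: str) -> str:
    """The check digit is simply the final interim digit."""
    return str(damm_interim(number))


def damm_is_valid(number_with_check: str) -> bool:
    """Valid iff processing the check digit too brings the interim back to 0."""
    return damm_interim(number_with_check) == 0


print(damm_check_digit("572"))  # 4
print(damm_is_valid("5724"))    # True
print(damm_is_valid("5274"))    # False: adjacent transposition detected
print(damm_is_valid("5734"))    # False: single-digit error detected
```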

Cyclic Redundancy Check

A cyclic redundancy check (CRC) is an error-detecting code commonly used in digital networks and storage devices to detect accidental changes to digital data. Blocks of data entering these systems get a short check value attached, based on the remainder of a polynomial division of their contents. On retrieval, the calculation is repeated and, in the event the check values do not match, corrective action can be taken against data corruption. CRCs can be used for error correction (see bitfilters). CRCs are so called because the "check" (data verification) value is a "redundancy" (it expands the message without adding information) and the algorithm is based on "cyclic" codes. CRCs are popular because they are simple to implement in binary hardware, easy to analyze mathematically, and particularly good at detecting common errors caused by noise in transmission channels. Because the check value has a fixed length, the function that generates it is occasionally used ...
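
The sketch below computes one specific CRC bit at a time: the widely used CRC-32, with generator polynomial 0x04C11DB7. Here the polynomial is processed in bit-reflected form as 0xEDB88320, with initial value and final XOR 0xFFFFFFFF. These parameters are an assumption chosen for illustration, since many other CRCs exist. Python's `zlib.crc32` computes the same CRC and serves as a cross-check. Production code usually uses lookup tables rather than this bit loop.

```python
import zlib


def crc32_bitwise(data: bytes) -> int:
    """CRC-32 as the remainder of GF(2) polynomial division, one bit at a time."""
    crc = 0xFFFFFFFF  # standard initial register value
    for byte in data:
        crc ^= byte  # reflected form: feed the byte in at the low end
        for _ in range(8):
            if crc & 1:
                crc = (crc >> 1) ^ 0xEDB88320  # subtract (XOR) the reflected generator
            else:
                crc >>= 1
    return crc ^ 0xFFFFFFFF  # final XOR


msg = b"123456789"
print(hex(crc32_bitwise(msg)))                # 0xcbf43926
print(crc32_bitwise(msg) == zlib.crc32(msg))  # True
corrupted = bytes([msg[0] ^ 0x01]) + msg[1:]  # flip one bit
print(crc32_bitwise(corrupted) != crc32_bitwise(msg))  # True: change detected
```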

Cryptographic Hash Function

A cryptographic hash function (CHF) is a hash algorithm (a map of an arbitrary binary string to a binary string with a fixed size of $n$ bits) that has special properties desirable for cryptography:

* the probability of a particular $n$-bit output result (hash value) for a random input string ("message") is $2^{-n}$ (like for any good hash), so the hash value can be used as a representative of the message;
* finding an input string that matches a given hash value (a pre-image) is infeasible, unless the value is selected from a known pre-calculated dictionary ("rainbow table"). The resistance to such search is quantified as security strength: a cryptographic hash with $n$ bits of hash value is expected to have a preimage resistance strength of $n$ bits. A second preimage resistance strength, with the same expectations, refers to a similar problem of finding a second message that matches the given hash value when one message is already known;
* finding any pair of different messa ...
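
The link between hash length and preimage strength can be illustrated by truncating a real hash to a few bits. The sketch assumes SHA-256 from Python's `hashlib`. The toy `truncated_hash`, which keeps only the top $n$ bits, is a hypothetical construction for illustration. With $n = 16$, a brute-force search needs on the order of $2^{16}$ attempts, and each extra bit doubles the expected work.

```python
import hashlib
from itertools import count


def truncated_hash(message: bytes, n_bits: int) -> int:
    """Toy n-bit hash: the top n_bits of a SHA-256 digest (illustration only)."""
    digest = int.from_bytes(hashlib.sha256(message).digest(), "big")
    return digest >> (256 - n_bits)


def find_preimage(target: int, n_bits: int) -> tuple[bytes, int]:
    """Try counter-based messages until one hashes to target.

    For an ideal n-bit hash each try succeeds with probability 2**-n_bits,
    so the expected number of attempts is about 2**n_bits.
    """
    for attempts in count(1):
        candidate = attempts.to_bytes(8, "big")
        if truncated_hash(candidate, n_bits) == target:
            return candidate, attempts
    raise AssertionError("unreachable: count() never stops")


N_BITS = 16
target = truncated_hash(b"hello", N_BITS)
message, attempts = find_preimage(target, N_BITS)
print(f"found {message.hex()} after {attempts} attempts (2**{N_BITS} = {2**N_BITS})")
print(len(hashlib.sha256(b"").digest()) * 8)  # 256: output size is fixed
```

The message found is usually not `b"hello"` itself, yet it matches the 16-bit value, which is why short hash values offer little resistance. Against the full 256-bit output, the same search is hopeless.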