
Learning Curve (Machine Learning)

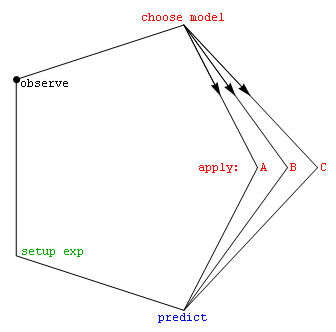

In machine learning, a learning curve (or training curve) plots the optimal value of a model's loss function for a training set against this loss function evaluated on a validation data set with the same parameters that produced the optimal function. Synonyms include "error curve", "experience curve", "improvement curve" and "generalization curve". More abstractly, the learning curve is a curve of (learning effort)-(predictive performance), where learning effort usually means the number of training samples and predictive performance means accuracy on testing samples. The machine learning curve is useful for many purposes, including comparing different algorithms, choosing model parameters during design, adjusting optimization to improve convergence, and determining the amount of data used for training. Formal definition: One model of machine learning is producing a function, f(x), which given some information, x, predicts some variable, y, from training data X_{\text{train}} and Y_{\text{train}} ...
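The idea of plotting learning effort against predictive performance can be illustrated with a minimal sketch. It assumes a one-dimensional least-squares line fit on synthetic data; the data generator, the noise level, and all function names (such as `learning_curve`) are hypothetical choices made for illustration and do not come from the source. For each training-set size, it records the training loss and the validation loss obtained with the same fitted parameters.

```python
import random


def fit_line(xs, ys):
    """Closed-form ordinary least squares for y = a*x + b."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    var_x = sum((x - mean_x) ** 2 for x in xs)
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    a = cov_xy / var_x if var_x > 0 else 0.0
    b = mean_y - a * mean_x
    return a, b


def mse(params, xs, ys):
    """Mean squared error of the line (a, b) on the given samples."""
    a, b = params
    return sum((a * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def make_data(n, rng):
    """Synthetic data (assumption): y = 2x + 1 plus Gaussian noise, sd 0.3."""
    xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    ys = [2.0 * x + 1.0 + rng.gauss(0.0, 0.3) for x in xs]
    return xs, ys


def learning_curve(train_sizes, n_val=200, seed=0):
    """Return (size, training loss, validation loss) for each training size."""
    rng = random.Random(seed)
    x_train, y_train = make_data(max(train_sizes), rng)
    x_val, y_val = make_data(n_val, rng)
    curve = []
    for m in train_sizes:
        # Fit on the first m training samples only.
        params = fit_line(x_train[:m], y_train[:m])
        train_loss = mse(params, x_train[:m], y_train[:m])
        # Evaluate the same parameters on the held-out validation set.
        val_loss = mse(params, x_val, y_val)
        curve.append((m, train_loss, val_loss))
    return curve


for size, train_loss, val_loss in learning_curve([2, 5, 20, 100, 400]):
    print(f"{size:4d}  train={train_loss:.4f}  val={val_loss:.4f}")
```

With two training points the line passes through both, so the training loss is essentially zero; as the training set grows, both losses are expected to approach the noise floor of this synthetic setup.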

Learning Curves (Naive Bayes)

Learning is the process of acquiring new understanding, knowledge, behaviors, skills, values, attitudes, and preferences. The ability to learn is possessed by humans, animals, and some machines; there is also evidence for some kind of learning in certain plants. Some learning is immediate, induced by a single event (e.g. being burned by a hot stove), but much skill and knowledge accumulate from repeated experiences. The changes induced by learning often last a lifetime, and it is hard to distinguish learned material that seems to be "lost" from that which cannot be retrieved. Human learning starts at birth (it might even start before, in terms of an embryo's need for both interaction with, and freedom within, its environment in the womb) and continues until death as a consequence of ongoing interactions between people and their environment. The nature and processes involved in learning are studied in many established fields (including educational psychology, neu ...

Convergence (mathematics)

In mathematics, a series is the sum of the terms of an infinite sequence of numbers. More precisely, an infinite sequence (a_0, a_1, a_2, \ldots) defines a series S that is denoted S = a_0 + a_1 + a_2 + \cdots = \sum_{k=0}^\infty a_k. The nth partial sum S_n is the sum of the first n terms of the sequence; that is, S_n = \sum_{k=0}^{n-1} a_k. A series is convergent (or converges) if the sequence (S_1, S_2, S_3, \dots) of its partial sums tends to a limit; that means that, when adding one a_k after the other in the order given by the indices, one gets partial sums that become closer and closer to a given number. More precisely, a series converges if there exists a number \ell such that for every arbitrarily small positive number \varepsilon, there is a (sufficiently large) integer N such that for all n \ge N, \left| S_n - \ell \right| < \varepsilon. ... The reciprocals of powers of any n > 1 produce a convergent series: \frac{1}{1}+\frac{1}{n}+\frac{1}{n^2}+\frac{1}{n^3}+\frac{1}{n^4}+\frac{1}{n^5}+\cdots = \frac{n}{n-1}. Alternating the signs of reciprocals of powers of 2 also produces a convergent series: \frac{1}{1}-\frac{1}{2}+\frac{1}{4}-\frac{1}{8}+\frac{1}{16}-\frac{1}{32}+\cdots = ...
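The epsilon-N definition of convergence can be checked numerically on a finite prefix of a series. The following is a minimal sketch, assuming geometric series with ratios 1/2 and -1/2 as examples; the helper names (`geometric_terms`, `partial_sums`, `first_index_within`) are hypothetical. Exact rational arithmetic is used so that rounding does not obscure the comparison with the limit.

```python
from fractions import Fraction


def geometric_terms(ratio, count):
    """First `count` terms 1, r, r^2, ... of a geometric sequence."""
    return [Fraction(ratio) ** k for k in range(count)]


def partial_sums(terms):
    """Return [S_1, S_2, ...], where S_n is the sum of the first n terms."""
    total = Fraction(0)
    sums = []
    for term in terms:
        total += term
        sums.append(total)
    return sums


def first_index_within(sums, limit, eps):
    """Smallest N (1-based) such that |S_n - limit| < eps for every
    computed n >= N, or None if even the last partial sum is too far."""
    n_ok = None
    for n in range(len(sums), 0, -1):
        if abs(sums[n - 1] - limit) < eps:
            n_ok = n
        else:
            break
    return n_ok


halves = partial_sums(geometric_terms(Fraction(1, 2), 20))
alternating = partial_sums(geometric_terms(Fraction(-1, 2), 20))

print(halves[:3])
print(first_index_within(halves, 2, Fraction(1, 1000)))
print(first_index_within(alternating, Fraction(2, 3), Fraction(1, 1000)))
```

A finite check like this can only illustrate the definition, since it inspects a fixed number of partial sums; it is not a proof of convergence.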

Verification And Validation

Verification and validation (also abbreviated as V&V) are independent procedures that are used together for checking that a product, service, or system meets requirements and specifications and that it fulfills its intended purpose. These are critical components of a quality management system such as ISO 9000. The words "verification" and "validation" are sometimes preceded with "independent", indicating that the verification and validation is to be performed by a disinterested third party. "Independent verification and validation" can be abbreviated as "IV&V". In practice, as quality management terms, the definitions of verification and validation can be inconsistent. Sometimes they are even used interchangeably. However, the PMBOK guide, a standard adopted by the Institute of Electrical and Electronics Engineers (IEEE), defines them as follows in its 4th edition: * "Validation. The assurance that a product, service, or system meets the needs of the customer and other iden ...

Validity (statistics)

Validity is the main extent to which a concept, conclusion or measurement is well-founded and likely corresponds accurately to the real world. The word "valid" is derived from the Latin validus, meaning strong. The validity of a measurement tool (for example, a test in education) is the degree to which the tool measures what it claims to measure. Validity is based on the strength of a collection of different types of evidence (e.g. face validity, construct validity, etc.) described in greater detail below. In psychometrics, validity has a particular application known as test validity: "the degree to which evidence and theory support the interpretations of test scores" ("as entailed by proposed uses of tests"). It is generally accepted that the concept of scientific validity addresses the nature of reality in terms of statistical measures and as such is an epistemological and philosophical issue as well as a question of measurement. The use of the term in logic is narrower, relati ...

Model Selection

Model selection is the task of selecting a statistical model from a set of candidate models, given data. In the simplest cases, a pre-existing set of data is considered. However, the task can also involve the design of experiments such that the data collected is well-suited to the problem of model selection. Given candidate models of similar predictive or explanatory power, the simplest model is most likely to be the best choice (Occam's razor). state, "The majority of the problems in statistical inference can be considered to be problems related to statistical modeling". Relatedly, has said, "How [the] translation from subject-matter problem to statistical model is done is often the most critical part of an analysis". Model selection may also refer to the problem of selecting a few representative models from a large set of computational models for the purpose of decision making or optimization under uncertainty. Introduction: In its most basic forms, model selection is one ...

Bias–variance Tradeoff

In statistics and machine learning, the bias–variance tradeoff is the property of a model that the variance of the parameter estimated across samples can be reduced by increasing the bias in the estimated parameters. The bias–variance dilemma or bias–variance problem is the conflict in trying to simultaneously minimize these two sources of error that prevent supervised learning algorithms from generalizing beyond their training set: * The bias error is an error from erroneous assumptions in the learning algorithm. High bias can cause an algorithm to miss the relevant relations between features and target outputs (underfitting). * The variance is an error from sensitivity to small fluctuations in the training set. High variance may result from an algorithm modeling the random noise in the training data (overfitting). The bias–variance decomposition is a way of analyzing a learning algorithm's expected generalization error with respect to a particular problem ...

Overfitting

In mathematical modeling, overfitting is "the production of an analysis that corresponds too closely or exactly to a particular set of data, and may therefore fail to fit to additional data or predict future observations reliably". An overfitted model is a mathematical model that contains more parameters than can be justified by the data. The essence of overfitting is to have unknowingly extracted some of the residual variation (i.e., the noise) as if that variation represented underlying model structure. Underfitting occurs when a mathematical model cannot adequately capture the underlying structure of the data. An under-fitted model is a model where some parameters or terms that would appear in a correctly specified model are missing. Under-fitting would occur, for example, when fitting a linear model to non-linear data. Such a model will tend to have poor predictive performance. The possibility of over-fitting exists because the criterion used for selecting the model is no ...
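The contrast between under-fitting and over-fitting can be made concrete with a small sketch. It assumes synthetic data from y = x^2 plus Gaussian noise and compares two deliberately extreme models: a straight line (the "linear model on non-linear data" case mentioned above) and a 1-nearest-neighbour predictor that memorizes every training point. The nearest-neighbour model is a stand-in chosen for simplicity, not an over-parameterized model in the strict sense, and all names are hypothetical.

```python
import random


def fit_line(xs, ys):
    """Least-squares line; returns a prediction function."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    var_x = sum((x - mean_x) ** 2 for x in xs)
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    a = cov_xy / var_x
    b = mean_y - a * mean_x
    return lambda x: a * x + b


def fit_nearest_neighbour(xs, ys):
    """Memorizing model: predict the target of the closest training point."""
    pairs = list(zip(xs, ys))
    return lambda x: min(pairs, key=lambda p: abs(p[0] - x))[1]


def mse(predict, xs, ys):
    """Mean squared error of a prediction function on the given samples."""
    return sum((predict(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def compare_models(n_train=41, noise=0.1, seed=0):
    """Return {model name: (training MSE, validation MSE)}."""
    rng = random.Random(seed)
    # Evenly spaced training inputs; validation inputs at the midpoints.
    x_train = [-1.0 + 2.0 * i / (n_train - 1) for i in range(n_train)]
    x_val = [(a + b) / 2.0 for a, b in zip(x_train, x_train[1:])]
    y_train = [x * x + rng.gauss(0.0, noise) for x in x_train]
    y_val = [x * x + rng.gauss(0.0, noise) for x in x_val]
    models = {
        "linear": fit_line(x_train, y_train),
        "1-NN": fit_nearest_neighbour(x_train, y_train),
    }
    return {
        name: (mse(f, x_train, y_train), mse(f, x_val, y_val))
        for name, f in models.items()
    }


for name, (train_loss, val_loss) in compare_models().items():
    print(f"{name:7s} train={train_loss:.4f}  val={val_loss:.4f}")
```

The line has a large error on both sets because it cannot represent the curvature, while the memorizing model has zero training error but a nonzero validation error, since it has reproduced the noise in the training targets.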

Gradient Descent

In mathematics, gradient descent (also often called steepest descent) is a first-order iterative optimization algorithm for finding a local minimum of a differentiable function. The idea is to take repeated steps in the opposite direction of the gradient (or approximate gradient) of the function at the current point, because this is the direction of steepest descent. Conversely, stepping in the direction of the gradient will lead to a local maximum of that function; the procedure is then known as gradient ascent. Gradient descent is generally attributed to Augustin-Louis Cauchy, who first suggested it in 1847. Jacques Hadamard independently proposed a similar method in 1907. Its convergence properties for non-linear optimization problems were first studied by Haskell Curry in 1944, with the method becoming increasingly well-studied and used in the following decades. Description: Gradient descent is based on the observation that if the multi-variable function F(\mathbf{x}) is def ...
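The repeated step against the gradient can be sketched in a few lines. This minimal example assumes a simple convex test function F(x, y) = (x - 3)^2 + 2(y + 1)^2 with a hand-written gradient and a fixed step size of 0.1; the function, the step size, and the names `objective`, `gradient`, and `gradient_descent` are illustrative assumptions rather than anything prescribed by the source.

```python
def objective(point):
    """Test function F(x, y) = (x - 3)^2 + 2 (y + 1)^2, minimized at (3, -1)."""
    x, y = point
    return (x - 3.0) ** 2 + 2.0 * (y + 1.0) ** 2


def gradient(point):
    """Analytic gradient of the test function."""
    x, y = point
    return (2.0 * (x - 3.0), 4.0 * (y + 1.0))


def gradient_descent(start, step_size=0.1, iterations=200):
    """Take fixed-size steps opposite to the gradient.

    Returns the final point and the objective value at every iterate,
    including the starting point.
    """
    point = tuple(start)
    history = [objective(point)]
    for _ in range(iterations):
        grad = gradient(point)
        # Move against the gradient: the direction of steepest descent.
        point = tuple(p - step_size * g for p, g in zip(point, grad))
        history.append(objective(point))
    return point, history


final_point, values = gradient_descent((0.0, 0.0))
print(final_point)
print(values[0], values[-1])
```

Flipping the sign of the update (adding `step_size * g` instead of subtracting it) would turn this into gradient ascent; on this particular function that would diverge, because the function has no maximum.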

Generalization (learning)

Generalization is the concept that humans and other animals use past learning in present situations of learning if the conditions in the situations are regarded as similar. The learner uses generalized patterns, principles, and other similarities between past experiences and novel experiences to more efficiently navigate the world (Banich, M. T., Dukes, P., & Caccamise, D. (2010). Generalization of knowledge: Multidisciplinary perspectives. Psychology Press). For example, if a person has learned in the past that every time they eat an apple, their throat becomes itchy and swollen, they might assume they are allergic to all fruit. When this person is offered a banana to eat, they reject it upon assuming they are also allergic to it through generalizing that all fruits cause the same reaction. Although this generalization about being allergic to all fruit based on experiences with one fruit could be correct in some cases, it may not be correct in all. Both positive and negative effects h ...

Machine Learning

Machine learning (ML) is a field of inquiry devoted to understanding and building methods that 'learn', that is, methods that leverage data to improve performance on some set of tasks. It is seen as a part of artificial intelligence. Machine learning algorithms build a model based on sample data, known as training data, in order to make predictions or decisions without being explicitly programmed to do so. Machine learning algorithms are used in a wide variety of applications, such as in medicine, email filtering, speech recognition, agriculture, and computer vision, where it is difficult or unfeasible to develop conventional algorithms to perform the needed tasks (Hu, J.; Niu, H.; Carrasco, J.; Lennox, B.; Arvin, F., "Voronoi-Based Multi-Robot Autonomous Exploration in Unknown Environments via Deep Reinforcement Learning", IEEE Transactions on Vehicular Technology, 2020). A subset of machine learning is closely related to computational statistics, which focuses on making predicti ...

Mathematical Optimization

Mathematical optimization (alternatively spelled "optimisation") or mathematical programming is the selection of a best element, with regard to some criterion, from some set of available alternatives. It is generally divided into two subfields: discrete optimization and continuous optimization. Optimization problems of sorts arise in all quantitative disciplines from computer science and engineering to operations research and economics, and the development of solution methods has been of interest in mathematics for centuries. In the more general approach, an optimization problem consists of maximizing or minimizing a real function by systematically choosing input values from within an allowed set and computing the value of the function. The generalization of optimization theory and techniques to other formulations constitutes a large area of applied mathematics. More generally, opti ...

Function (mathematics)

In mathematics, a function from a set X to a set Y assigns to each element of X exactly one element of Y; the words map, mapping, transformation, correspondence, and operator are often used synonymously. The set X is called the domain of the function and the set Y is called the codomain of the function (Codomain. Encyclopedia of Mathematics). The earliest known approach to the notion of function can be traced back to works of Persian mathematicians Al-Biruni and Sharaf al-Din al-Tusi. Functions were originally the idealization of how a varying quantity depends on another quantity. For example, the position of a planet is a function of time. Historically, the concept was elaborated with the infinitesimal calculus at the end of the 17th century, and, until the 19th century, the functions that were considered were differentiable (that is, they had a high degree of regularity). The concept of a function was formalized at the end of the ...