
Loop Interchange

Loop or LOOP may refer to: Brands and enterprises * Loop (mobile), a Bulgarian virtual network operator and co-founder of Loop Live * Loop, clothing, a company founded by Carlos Vasquez in the 1990s and worn by Digable Planets * Loop Mobile, an Indian mobile phone operator * Loop Internet, an internet service provider in Pennsylvania, United States * Loop, a reusable container program announced in 2019 by TerraCycle Geography * Loop, Germany, a municipality in Schleswig-Holstein * Loop (Texarkana), a roadway loop around Texarkana, Arkansas, United States * Loop, Blair County, Pennsylvania, United States * Loop, Indiana County, Pennsylvania, United States * Loop, West Virginia, United States * Loop 101, a semi-beltway of the Phoenix Metropolitan Area * Loop 202, a semi-beltway of the Phoenix Metropolitan Area * Loop 303, a semi-beltway of the Phoenix Metropolitan Area * Chicago Loop, the downtown neighborhood of Chicago bounded by the elevated railway The Loop ** L ...

Loop (mobile)

Loop or LOOP may refer to: Brands and enterprises * Loop (mobile), a Bulgarian virtual network operator and co-founder of Loop Live * Loop, clothing, a company founded by Carlos Vasquez in the 1990s and worn by Digable Planets * Loop Mobile, an Indian mobile phone operator * Loop Internet, an internet service provider in Pennsylvania, United States * Loop, a reusable container program announced in 2019 by TerraCycle Geography * Loop, Germany, a municipality in Schleswig-Holstein * Loop (Texarkana), a roadway loop around Texarkana, Arkansas, United States * Loop, Blair County, Pennsylvania, United States * Loop, Indiana County, Pennsylvania, United States * Loop, West Virginia, United States * Loop 101, a semi-beltway of the Phoenix Metropolitan Area * Loop 202, a semi-beltway of the Phoenix Metropolitan Area * Loop 303, a semi-beltway of the Phoenix Metropolitan Area * Chicago Loop, the downtown neighborhood of Chicago bounded by the elevated railway The Loop ** Loop Retail Histo ...

London Outer Orbital Path

The London Outer Orbital Path, more usually the "London LOOP", is a 150-mile (242 km) signed walk along public footpaths, and through parks, woods and fields around the edge of Outer London, England, described as "the M25 for walkers". The walk begins at Erith on the south bank of the River Thames and passes clockwise through Crayford, Petts Wood, Coulsdon, Banstead, Ewell, Kingston upon Thames, Uxbridge, Elstree, Cockfosters, Chingford, Chigwell, Grange Hill and Upminster Bridge before ending at Purfleet, almost directly across the Thames from its starting point. Between these settlements the route passes through green buffers and some of the highest points in Greater London. History The walk was first proposed at a meeting between The Ramblers and the Countryside Commission in 1990. It was given an official launch at the House of Lords in 1993. The first section was opened on 3 May 1996, w ...

Loop (album)

''Loop'' is the fifth album by the rock artist Keller Williams. It was released in 2001 on SCI Fidelity Records. The album contains live recordings of three performances in the Pacific Northwest in 2000. Track listing # Thin Mint 4:15 # Kiwi and the Apricot 4:07 # More Than a Little 7:48 # Vacate 6:21 # Blatant Ripoff 4:22 # Kidney in a Cooler 5:59 # Landlord 7:03 # Turn in Difference 6:00 # No Hablo Espanol 2:26 # Rockumal 3:13 # Stupid Questions 7:52 # Inhale to the Chief 4:28 # Nomini 3:44 Credits * Tom Capek - Mastering * Phil Crumrine - Multi-Track Mix * Doug Derryberry - Mixing * Keller Williams - Producer Loop Overview ''allmusic.com'', Retrieved May 13, 2008.

Loop (band)

Loop are an English rock band, formed in 1986 by Robert Hampson in Croydon. The group topped the UK independent charts with their albums ''Fade Out'' (1989) and ''A Gilded Eternity'' (1990). Their dissonant "trance-rock" sound drew on the work of artists like the Stooges and Can, and helped to resurrect the concept of space rock in the late 1980s. The group split in 1991, with Hampson going on to form the experimental project Main with guitarist Scott Dawson, and Mackay and Wills forming The Hair and Skin Trading Company. In 2013, the 1989–90 line-up of Hampson, Dawson, John Wills, and Neil Mackay briefly reformed for a series of gigs, and the following year Hampson unveiled a new line-up of the band with himself as the sole original member. Career Loop were formed in 1986 by Robert Hampson (vocals, guitar), with his then-girlfriend Becky Stewart on drums. Stewart was later replaced by John Wills (The Servants) and Glen Ray, with James Endeacott on guitar. Strong, Mar ...

Porn Loop

Pornographic films (pornos), erotic films, adult films, blue films, sexually explicit films, or 18+ films, are films that represent sexually explicit subject matter in order to arouse, fascinate, or satisfy the viewer. Pornographic films represent sexual fantasies and usually include erotically stimulating material such as nudity or fetishes (softcore) and sexual intercourse (hardcore). A distinction is sometimes made between "erotic" and "pornographic" films on the basis that the latter category contains more explicit sexuality, and focuses more on arousal than storytelling; the distinction is highly subjective. Pornographic films are produced and distributed on a variety of media, depending on the demand and technology available, including traditional film stock footage in various formats, home video, DVDs, mobile devices, Internet download, cable TV, in addition to other media. Pornography is often sold or rented on DVD; shown through Internet strea ...

Movie Projector

A movie projector (or film projector) is an opto-mechanical device for displaying motion picture film by projecting it onto a screen. Most of the optical and mechanical elements, except for the illumination and sound devices, are present in movie cameras. Modern movie projectors are specially built video projectors (see also digital cinema). Many projectors are specific to a particular film gauge and not all movie projectors are film projectors since the use of film is required. Predecessors The main precursor to the movie projector was the magic lantern. In its most common setup it had a concave mirror behind a light source to help direct as much light as possible through a painted glass picture slide and a lens, out of the lantern onto a screen. Simple mechanics to have the painted images moving were probably implemented since Christiaan Huygens introduced the apparatus around 1659. Initially, candles and oil lamps were used, but oth ...

Loop (2020 Film)

''Loop'' is a 2020 American animated drama short film directed and written by Erica Milsom with the story being written by Adam Burke, Matthias De Clercq and Milsom, produced by Pixar Animation Studios, and distributed by Walt Disney Studios Motion Pictures. It is the sixth short film in Pixar's ''SparkShorts'' program and focuses on a non-verbal autistic girl and a chatty boy, learning to understand each other. The short was released on Disney+ on January 10, 2020. Plot Renee, a 13-year-old non-verbal autistic girl, sits in a canoe and plays with a sound app on her phone. Marcus arrives late and the camp counselor partners him with Renee, much to his annoyance. When Marcus attempts to show off his paddling skills, Renee is unimpressed and starts rocking the boat. Marcus asks her what she wants and she has him paddle to land so she can touch the reeds. When Renee goes back to her phone, Marcus has an idea. He paddles them to a tunnel and has Renee play her phone so that the s ...

Loop (1999 Film)

''Loop'' is a 1999 Venezuelan drama film written and directed by Julio Sosa Pietri. The film was selected as the Venezuelan entry for the Best Foreign Language Film at the 71st Academy Awards, but was not accepted as a nominee. Margaret Herrick Library, Academy of Motion Picture Arts and Sciences Cast * Jean Carlo Simancas as Alejandro del Rey * Arcelia Ramírez as Lucía / Sandra * Luly Bossa as Shara Goldberg * Claudio Obregón as Julio Andrés Martínez * Julio Medina as Ataúlfo * Fausto Cabrera as Alfonso del Rey * Roberto Colmenares as Francisco Leoz * Amanda Gutiérrez as Mimí Cordero See also * List of submissions to the 71st Academy Awards for Best Foreign Language Film * List of Venezuelan submissions for t ...

Loop (1997 Film)

''Loop'' is a 1997 British romantic comedy feature film produced by Tedi De Toledo and Michael Riley. It was written by Tim Pears and is the debut film of director Allan Niblo. The writer of ''Loop'', Tim Pears, also wrote the novel for ''In a Land of Plenty'' which was turned into an acclaimed 10-part TV drama serial for the BBC and produced by the London-based production company Sterling Pictures and Talkback Productions. Plot In ''Loop'', the main character, Rachel, is dumped by her boyfriend and exacts revenge. This film is classified as a romantic comedy. Cast * Andy Serkis as Bill * Susannah York as Olivia * Tony Selby as Tom * Moya Brady as Waitress * Willie Ross as Geordie Trucker * Heather Craney ... Cashier * Howard Lee ... Bank Clerk * Paul Ryan ... City Barman * Emer McCourt as Rachel * Jayne Ashbourne as Hannah * Gideon Turner as Jason * Alisa Bosschaert as Sarah * Paul Daly as Jack * Evelyn Doggart as Jean * Maya Saxton as Jenn ...

Tyler Loop

Steven Tyler Loop (born August 4, 2001) is an American professional football placekicker for the Baltimore Ravens of the National Football League (NFL). He played college football for the Arizona Wildcats and was selected by the Ravens in the sixth round of the 2025 NFL draft. Early life and high school Loop attended Lovejoy High School in Lucas, Texas. He was rated as a two-star recruit and committed to play college football for the Arizona Wildcats. College career In the 2020 season, Loop served as the Wildcats punter where he punted 24 times for 1,033 yards with an average of 43.0 yards per punt. He transitioned to be Arizona's kicker in 2021, where he was perfect on all his kicks converting on all 12 of his field goal attempts and all 12 of his extra points. During the 2022 season, Loop went 18 for 21 on field goals, while being perfect on all 38 extra points. In the ...

Half Man Half Biscuit

Half Man Half Biscuit are an English rock band, formed in 1984 in Birkenhead, Merseyside. Known for their satirical, sardonic, and sometimes surreal songs, the band comprises lead singer and guitarist Nigel Blackwell, bassist and singer Neil Crossley, drummer Carl Henry, and guitarist Karl Benson. The band parodies popular genres, while their lyrics allude to UK popular culture and geography. Within a long career, their best-known songs include "The Trumpton Riots" (1986), "For What Is Chatteris" (2005), "Joy Division Oven Gloves" (2005) and "National Shite Day" (2008). History Half Man Half Biscuit were formed by two friends from Birkenhead, guitarist Neil Crossley and singer, guitarist and songwriter Nigel Blackwell who was (in his own words) at the time "still robbing cars and playing football like normal people do". In 1979, Blackwell was editing a football fanzine (''Left For Wakeley Gage''); he met Crossley when he went to see the latter's band play. Kendal, Mark (2004) ...

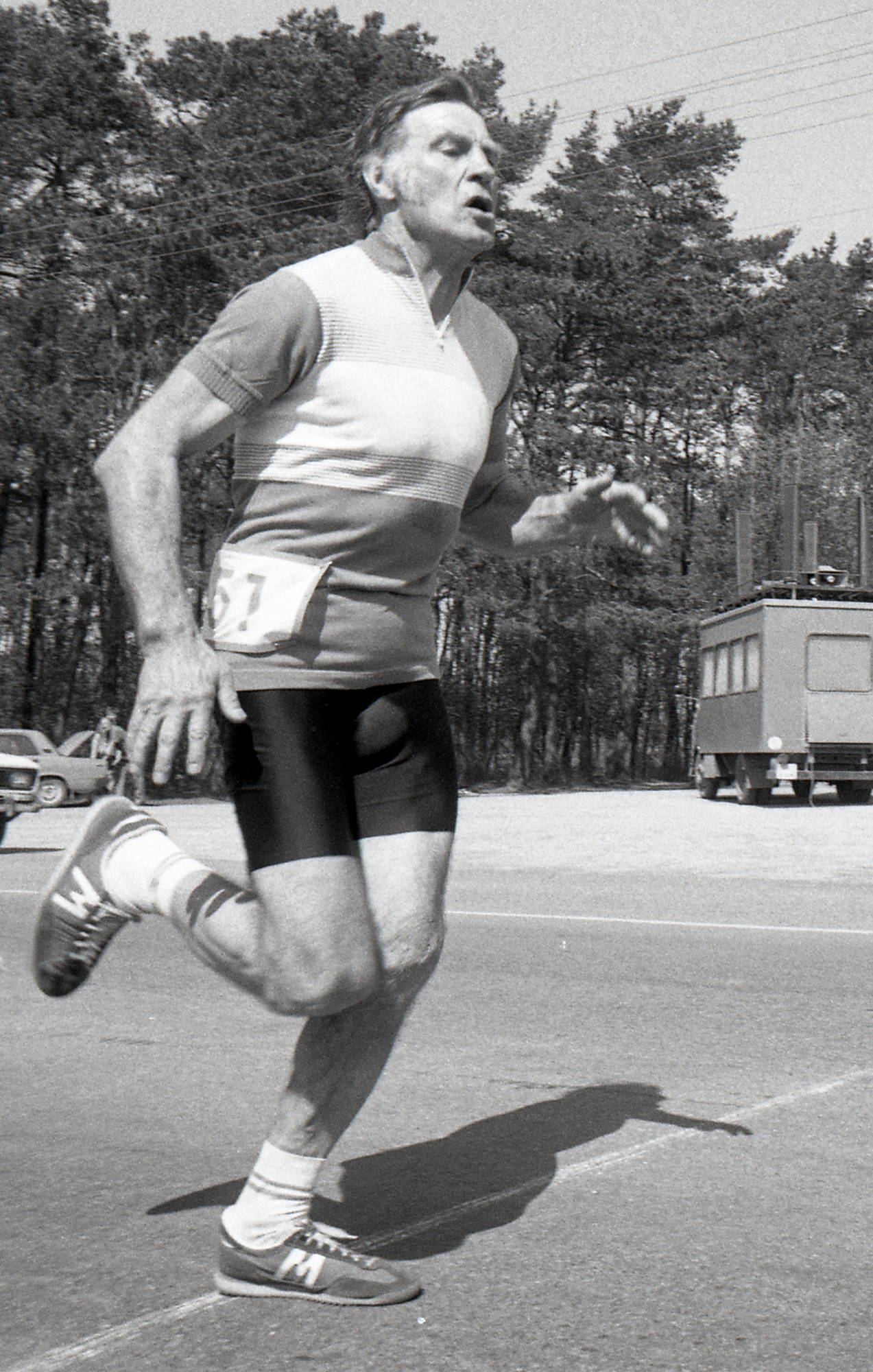

Uno Loop

Uno Loop (31 May 1930 – 8 September 2021) was an Estonian singer, musician, athlete, actor, and educator. Loop's career as a musician and singer began in the early 1950s. He performed with various ensembles and as a popular soloist beginning in the 1960s. In his youth, he trained as a boxer, and became the 1947–48 light-middleweight two-time Estonian Junior Champion. Later, he trained as a triathlete. Between the late 1950s and the early 1990s, he taught music, voice and guitar. Loop also worked as an actor, and appeared in several films beginning in the 1960s and in several roles in Estonian television series. Early life, education, and sport Uno Loop was born in Tallinn to Eduard and Amilde Hildegard Loop (''née'' Vesiloik) and grew up in Tallinn and the village of Nabala in Harju County. Interested in music from an early age, he attended the Music School of Tallinn, receiving a degree in music theory in 1958 and became an accomplished guitarist. In his youth, Loop wa ...