Liquid State Machine

A liquid state machine (LSM) is a type of reservoir computer that uses a spiking neural network. An LSM consists of a large collection of units (called "nodes" or "neurons"). Each node receives time-varying input from external sources (the inputs) as well as from other nodes. Nodes are randomly connected to each other. The recurrent nature of the connections turns the time-varying input into a spatio-temporal pattern of activations in the network nodes. The spatio-temporal patterns of activation are read out by linear discriminant units. The soup of recurrently connected nodes will end up computing a large variety of nonlinear functions on the input. Given a large enough variety of such nonlinear functions, it is theoretically possible to obtain linear combinations (using the readout units) to perform whatever mathematical operation is needed to perform a certain task, such as speech recognition or computer vision. The word liquid in the name comes from the analogy drawn ...

Reservoir Computing

Reservoir computing is a framework for computation derived from recurrent neural network theory that maps input signals into higher dimensional computational spaces through the dynamics of a fixed, non-linear system called a reservoir. After the input signal is fed into the reservoir, which is treated as a "black box," a simple readout mechanism is trained to read the state of the reservoir and map it to the desired output. The first key benefit of this framework is that training is performed only at the readout stage, as the reservoir dynamics are fixed. The second is that the computational power of naturally available systems, both classical and quantum mechanical, can be used to reduce the effective computational cost. History The concept of reservoir computing stems from the use of recursive connections within neural networks to create a complex dynamical system. Schrauwen, Benjamin, David Verstraeten, and Jan Van Campenhout. "An overview of reservoir computing: theory, app ...

Liquid State

A liquid is a nearly incompressible fluid that conforms to the shape of its container but retains a (nearly) constant volume independent of pressure. As such, it is one of the four fundamental states of matter (the others being solid, gas, and plasma), and is the only state with a definite volume but no fixed shape. A liquid is made up of tiny vibrating particles of matter, such as atoms, held together by intermolecular bonds. Like a gas, a liquid is able to flow and take the shape of a container. Most liquids resist compression, although others can be compressed. Unlike a gas, a liquid does not disperse to fill every space of a container, and maintains a fairly constant density. A distinctive property of the liquid state is surface tension, leading to wetting phenomena. Water is by far the most common liquid on Earth. The density of a liquid is usually close to that of a solid, and much higher than that of a gas. Therefore, liquid and solid are both termed condensed mat ...

Echo State Network

An echo state network (ESN) is a type of reservoir computer that uses a recurrent neural network with a sparsely connected hidden layer (with typically 1% connectivity). The connectivity and weights of hidden neurons are fixed and randomly assigned. The weights of output neurons can be learned so that the network can produce or reproduce specific temporal patterns. The main interest of this network is that although its behaviour is non-linear, the only weights that are modified during training are for the synapses that connect the hidden neurons to output neurons. Thus, the error function is quadratic with respect to the parameter vector, and setting its derivative to zero yields a linear system. Alternatively, one may consider a nonparametric Bayesian formulation of the output layer, under which: (i) a prior distribution is imposed over the output weights; and (ii) the output weights are marginalized out in the context of prediction generation, given the training data. This idea has be ...
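The quadratic-error readout described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a reference implementation: the function names (`make_reservoir`, `run_reservoir`, `train_readout`), the tanh activation, the spectral-radius scaling, the small ridge term, and the sine-wave prediction task are all choices made for this example and do not come from the text.

```python
import numpy as np

def make_reservoir(n_inputs, n_hidden, density=0.01, spectral_radius=0.9, seed=0):
    """Build fixed random input and recurrent weights (never trained)."""
    rng = np.random.default_rng(seed)
    w_in = rng.uniform(-0.5, 0.5, size=(n_hidden, n_inputs))
    w = rng.uniform(-0.5, 0.5, size=(n_hidden, n_hidden))
    # Keep roughly `density` of the recurrent connections (sparse hidden layer).
    w *= rng.random((n_hidden, n_hidden)) < density
    # Assumption: rescale so the largest eigenvalue magnitude is below 1.
    radius = np.max(np.abs(np.linalg.eigvals(w)))
    if radius > 0:
        w *= spectral_radius / radius
    return w_in, w

def run_reservoir(w_in, w, inputs):
    """Drive the reservoir with a 1-D input sequence and collect hidden states."""
    state = np.zeros(w.shape[0])
    states = []
    for u in inputs:
        state = np.tanh(w_in @ np.atleast_1d(u) + w @ state)
        states.append(state.copy())
    return np.array(states)

def train_readout(states, targets, ridge=1e-6):
    """Solve the linear system obtained by zeroing the gradient of the
    (ridge-regularised) squared error with respect to the output weights."""
    a = states.T @ states + ridge * np.eye(states.shape[1])
    b = states.T @ targets
    return np.linalg.solve(a, b)

# Hypothetical task: predict the next sample of a sine wave.
w_in, w = make_reservoir(n_inputs=1, n_hidden=200)
signal = np.sin(np.linspace(0, 60, 601))
states = run_reservoir(w_in, w, signal[:-1])
w_out = train_readout(states, signal[1:])
mse = float(np.mean((states @ w_out - signal[1:]) ** 2))
print("training MSE:", mse)
```

Only `w_out` is fitted; `w_in` and `w` stay at their random initial values, which is why training reduces to a single linear solve.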

Stone–Weierstrass Theorem

In mathematical analysis, the Weierstrass approximation theorem states that every continuous function defined on a closed interval can be uniformly approximated as closely as desired by a polynomial function. Because polynomials are among the simplest functions, and because computers can directly evaluate polynomials, this theorem has both practical and theoretical relevance, especially in polynomial interpolation. The original version of this result was established by Karl Weierstrass in 1885 using the Weierstrass transform. Marshall H. Stone considerably generalized the theorem and simplified the proof. His result is known as the Stone–Weierstrass theorem. The Stone–Weierstrass theorem generalizes the Weierstrass approximation theorem in two directions: instead of the real interval [a, b], an arbitrary compact Hausdorff space X is considered, and instead of the algebra of polynomial functions, a variety of other families of continuous functions on X are shown to suffice, as i ...
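As a concrete illustration of uniform polynomial approximation, the sketch below uses Bernstein polynomials on [0, 1]. This is a different constructive route from the Weierstrass transform mentioned above, chosen here only because it is short to code. The helper names `bernstein_approx` and `max_error`, the test function |x - 0.5|, and the sampling grid are assumptions made for the example.

```python
from math import comb

def bernstein_approx(f, n):
    """Return the degree-n Bernstein polynomial of f on the interval [0, 1]."""
    samples = [f(k / n) for k in range(n + 1)]

    def poly(x):
        return sum(
            samples[k] * comb(n, k) * x**k * (1 - x) ** (n - k)
            for k in range(n + 1)
        )

    return poly

def max_error(f, g, points=201):
    """Largest absolute difference between f and g on an evenly spaced grid."""
    grid = [i / (points - 1) for i in range(points)]
    return max(abs(f(x) - g(x)) for x in grid)

def kink(x):
    """A continuous but non-smooth function, used as a hypothetical target."""
    return abs(x - 0.5)

# The grid error shrinks as the degree grows.
for degree in (10, 50, 200):
    print(degree, max_error(kink, bernstein_approx(kink, degree)))
```

The error is measured on a finite grid, so it only estimates the uniform error; the theorem itself guarantees that the true maximum over the whole interval can be made as small as desired.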

Computational Neuroscience

Computational neuroscience (also known as theoretical neuroscience or mathematical neuroscience) is a branch of neuroscience which employs mathematical models, computer simulations, theoretical analysis and abstractions of the brain to understand the principles that govern the development, structure, physiology and cognitive abilities of the nervous system. Computational neuroscience employs computational simulations to validate and solve mathematical models, and so can be seen as a sub-field of theoretical neuroscience; however, the two fields are often synonymous. The term mathematical neuroscience is also used sometimes, to stress the quantitative nature of the field. Computational neuroscience focuses on the description of biologically plausible neurons (and neural systems) and their physiology and dynamics, and it is therefore not directly concerned with biologically unrealistic models used in connectionism, control theory, cybernetics, quantitative psychol ...

Brain

The brain is an organ that serves as the center of the nervous system in all vertebrate and most invertebrate animals. It consists of nervous tissue and is typically located in the head (cephalization), usually near organs for special senses such as vision, hearing and olfaction. Being the most specialized organ, it is responsible for receiving information from the sensory nervous system, processing that information (thought, cognition, and intelligence) and the coordination of motor control (muscle activity and endocrine system). While invertebrate brains arise from paired segmental ganglia (each of which is only responsible for the respective body segment) of the ventral nerve cord, vertebrate brains develop axially from the midline dorsal nerve cord as a vesicular enlargement at the rostral end of the neural tube, with centralized control over all body segments. All vertebrate brains can be embryonically divided into three parts: the forebrain (prosencep ...

Capillary Wave

A capillary wave is a wave traveling along the phase boundary of a fluid, whose dynamics and phase velocity are dominated by the effects of surface tension. Capillary waves are common in nature, and are often referred to as ripples. The wavelength of capillary waves on water is typically less than a few centimeters, with a phase speed in excess of 0.2–0.3 meters per second. A longer wavelength on a fluid interface will result in gravity–capillary waves which are influenced by both the effects of surface tension and gravity, as well as by fluid inertia. Ordinary gravity waves have a still longer wavelength. When generated by light wind in open water, a nautical name for them is cat's paw waves. Light breezes which stir up such small ripples are also sometimes referred to as cat's paws. On the open ocean, much larger ocean surface waves (seas and swells) may result from coalescence of smaller wind-caused ripple-waves. Dispersion relation The dispersion relation describe ...

Computer Vision

Computer vision is an interdisciplinary scientific field that deals with how computers can gain high-level understanding from digital images or videos. From the perspective of engineering, it seeks to understand and automate tasks that the human visual system can do. Computer vision tasks include methods for acquiring, processing, analyzing and understanding digital images, and extraction of high-dimensional data from the real world in order to produce numerical or symbolic information, e.g. in the forms of decisions. Understanding in this context means the transformation of visual images (the input of the retina) into descriptions of the world that make sense to thought processes and can elicit appropriate action. This image understanding can be seen as the disentangling of symbolic information from image data using models constructed with the aid of geometry, physics, statistics, and learning theory. The scien ...

Spiking Neural Network

Spiking neural networks (SNNs) are artificial neural networks that more closely mimic natural neural networks. In addition to neuronal and synaptic state, SNNs incorporate the concept of time into their operating model. The idea is that neurons in the SNN do not transmit information at each propagation cycle (as it happens with typical multi-layer perceptron networks), but rather transmit information only when a membrane potential – an intrinsic quality of the neuron related to its membrane electrical charge – reaches a specific value, called the threshold. When the membrane potential reaches the threshold, the neuron fires, and generates a signal that travels to other neurons which, in turn, increase or decrease their potentials in response to this signal. A neuron model that fires at the moment of threshold crossing is also called a spiking neuron model. The most prominent spiking neuron model is the leaky integrate-and-fire model. In the integrate-and-fire ...
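The threshold-and-fire behaviour described above can be sketched with a single leaky integrate-and-fire neuron. This is a minimal illustration under assumed conventions: the function name `simulate_lif`, the forward-Euler update, the dimensionless units, and all parameter values (`tau`, `v_threshold`, `v_reset`) are hypothetical and are not taken from the text.

```python
def simulate_lif(input_current, dt=1.0, tau=20.0,
                 v_rest=0.0, v_threshold=1.0, v_reset=0.0):
    """Simulate one leaky integrate-and-fire neuron with forward Euler steps.

    Returns the list of step indices at which the neuron fired and the
    membrane-potential trace (recorded after any reset).
    """
    v = v_rest
    spikes = []
    trace = []
    for step, current in enumerate(input_current):
        # Leak toward the resting potential while integrating the input.
        v += dt / tau * (-(v - v_rest) + current)
        if v >= v_threshold:
            # Threshold crossing: emit a spike and reset the potential.
            spikes.append(step)
            v = v_reset
        trace.append(v)
    return spikes, trace

# Hypothetical constant inputs of different strength, 200 steps each.
weak_spikes, _ = simulate_lif([0.5] * 200)
medium_spikes, _ = simulate_lif([1.5] * 200)
strong_spikes, _ = simulate_lif([3.0] * 200)
print(len(weak_spikes), len(medium_spikes), len(strong_spikes))
```

With these assumed parameters, an input whose steady-state potential stays below the threshold never produces a spike, while stronger inputs fire more often. Information is carried by when and how often the neuron fires, not by a value emitted at every step.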

Speech Recognition

Speech recognition is an interdisciplinary subfield of computer science and computational linguistics that develops methodologies and technologies that enable the recognition and translation of spoken language into text by computers with the main benefit of searchability. It is also known as automatic speech recognition (ASR), computer speech recognition or speech to text (STT). It incorporates knowledge and research in the computer science, linguistics and computer engineering fields. The reverse process is speech synthesis. Some speech recognition systems require "training" (also called "enrollment") where an individual speaker reads text or isolated vocabulary into the system. The system analyzes the person's specific voice and uses it to fine-tune the recognition of that person's speech, resulting in increased accuracy. Systems that do not use training are called "speaker-independent" systems. Systems that use training are called "speaker dependent". Speech recognition ap ...

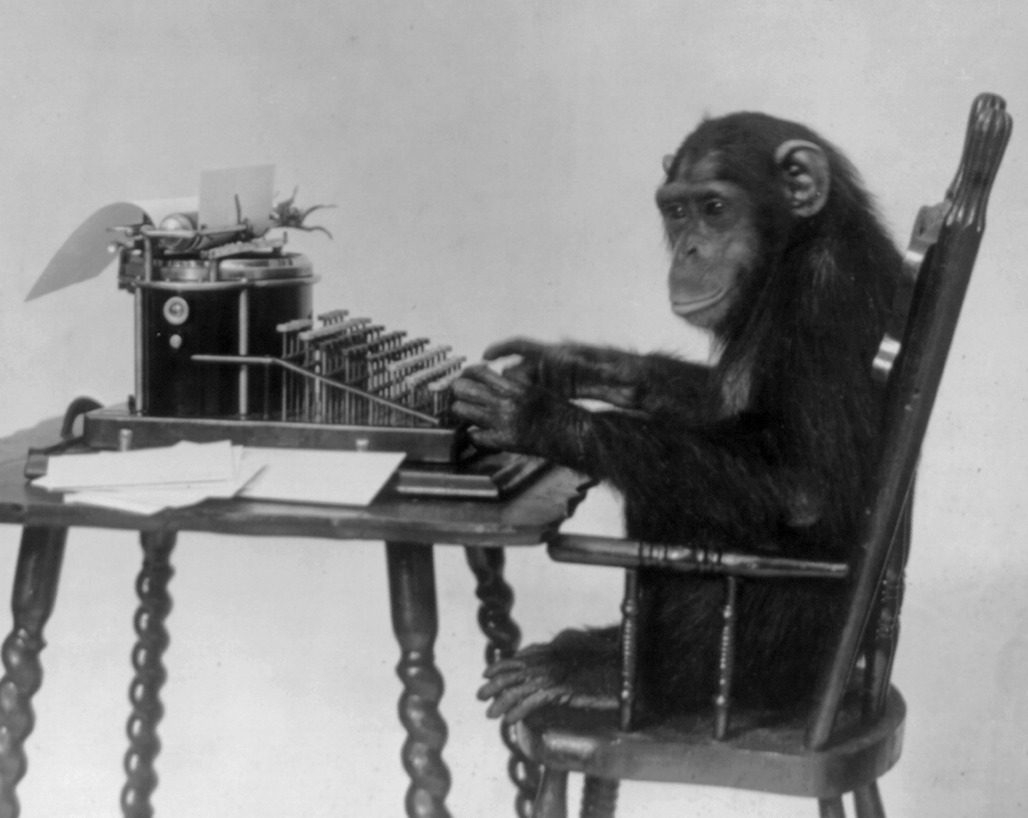

Typewriting Monkeys

The infinite monkey theorem states that a monkey hitting keys at random on a typewriter keyboard for an infinite amount of time will almost surely type any given text, such as the complete works of William Shakespeare. In fact, the monkey would almost surely type every possible finite text an infinite number of times. However, the probability that monkeys filling the entire observable universe would type a single complete work, such as Shakespeare's Hamlet, is so tiny that the chance of it occurring during a period of time hundreds of thousands of orders of magnitude longer than the age of the universe is extremely low (but technically not zero). The theorem can be generalized to state that any sequence of events which has a non-zero probability of happening will almost certainly eventually occur, given enough time. In this context, "almost surely" is a mathematical term meaning the event happens with probability 1, and the "monkey" is not an actual monkey, but a metaphor ...
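The arithmetic behind these statements can be sketched as follows. The example assumes independent, uniformly random keystrokes and counts attempts as separate, non-overlapping blocks of the target length. The function names, the 26-key keyboard, and the 6-letter target are hypothetical choices for illustration.

```python
import math

def log10_prob_of_text(text_length, alphabet_size):
    """Base-10 log of the chance that one block of random keystrokes
    matches a specific text of the given length."""
    return -text_length * math.log10(alphabet_size)

def prob_within_attempts(p_single, attempts):
    """Chance of at least one success in `attempts` independent tries,
    i.e. 1 - (1 - p)^n, computed in a numerically stable way."""
    return -math.expm1(attempts * math.log1p(-p_single))

# Hypothetical example: a 6-letter word on a 26-key keyboard.
p_word = 10 ** log10_prob_of_text(6, 26)
for attempts in (10**6, 10**9, 10**12):
    print(attempts, prob_within_attempts(p_word, attempts))
```

For any fixed non-zero single-attempt probability, the result approaches 1 as the number of attempts grows without bound. The logarithmic form is used because the probability for a long text is far too small to represent directly as a floating-point number.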
