
LZ77 And LZ78 (algorithms)

LZ77 and LZ78 are the two lossless data compression algorithms published in papers by Abraham Lempel and Jacob Ziv in 1977 and 1978. They are also known as Lempel-Ziv 1 (LZ1) and Lempel-Ziv 2 (LZ2) respectively. These two algorithms form the basis for many variations including LZW, LZSS, LZMA and others. Besides their academic influence, these algorithms formed the basis of several ubiquitous compression schemes, including GIF and the DEFLATE algorithm used in PNG and ZIP. Both are, in theory, dictionary coders. LZ77 maintains a sliding window during compression. This was later shown to be equivalent to the ''explicit dictionary'' constructed by LZ78; however, they are only equivalent when the entire data is intended to be decompressed. Since LZ77 encodes and decodes from a sliding window over previously seen characters, decompression must always start at the beginning of the input. Conceptually, LZ78 decompression could allow random access to the input if the en ...
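
The sliding-window idea can be illustrated with a minimal sketch. It is not the scheme from the original paper or from any particular library: the function names, the `(offset, length, next_char)` triple layout, and the default window of 32 characters are assumptions chosen for readability, and the match search is a naive scan.

```python
def lz77_compress(data: str, window: int = 32):
    """Encode data as (offset, length, next_char) triples (illustrative layout)."""
    i, out = 0, []
    while i < len(data):
        best_off, best_len = 0, 0
        # Only positions inside the sliding window may be referenced.
        for j in range(max(0, i - window), i):
            length = 0
            # A match may overlap position i, but one character must remain
            # to serve as next_char.
            while (i + length < len(data) - 1
                   and data[j + length] == data[i + length]):
                length += 1
            if length > best_len:
                best_off, best_len = i - j, length
        out.append((best_off, best_len, data[i + best_len]))
        i += best_len + 1
    return out


def lz77_decompress(triples):
    """Rebuild the text; decoding has to start from the first triple."""
    buf = []
    for off, length, ch in triples:
        for _ in range(length):
            buf.append(buf[-off])  # copy one character at a time so overlaps work
        buf.append(ch)
    return "".join(buf)


text = "abracadabra abracadabra"
assert lz77_decompress(lz77_compress(text)) == text
```

Because every triple refers back into text that has already been decoded, the decoder cannot begin in the middle of the stream, which is the restriction the summary describes.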

Lossless Data Compression

Lossless compression is a class of data compression that allows the original data to be perfectly reconstructed from the compressed data with no loss of information. Lossless compression is possible because most real-world data exhibits statistical redundancy. By contrast, lossy compression permits reconstruction only of an approximation of the original data, though usually with greatly improved compression rates (and therefore reduced media sizes). By operation of the pigeonhole principle, no lossless compression algorithm can shrink the size of all possible data: Some data will get longer by at least one symbol or bit. Compression algorithms are usually effective for human- and machine-readable documents and cannot shrink the size of random data that contain no redundancy. Different algorithms exist that are designed either with a specific type of input data in mind or with speci ...
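
The pigeonhole argument can be checked by counting. The sketch below uses a hypothetical helper name and compares the number of distinct n-bit inputs with the number of distinct bit strings that are strictly shorter. There are always fewer shorter strings than inputs, so no lossless (one-to-one) scheme can shorten every n-bit input.

```python
def shorter_outputs_available(n: int) -> int:
    """Count distinct bit strings strictly shorter than n bits (lengths 0 to n-1)."""
    return sum(2 ** k for k in range(n))  # equals 2**n - 1


for n in (1, 8, 16):
    inputs = 2 ** n                         # distinct inputs of exactly n bits
    outputs = shorter_outputs_available(n)  # distinct candidate shorter outputs
    print(n, inputs, outputs, inputs > outputs)
```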

Data Compression Ratio

Data compression ratio, also known as compression power, is a measurement of the relative reduction in size of data representation produced by a data compression algorithm. It is typically expressed as the division of uncompressed size by compressed size.

Definition: Data compression ratio is defined as the ratio between the ''uncompressed size'' and ''compressed size'':

$$\text{Compression Ratio} = \frac{\text{Uncompressed Size}}{\text{Compressed Size}}$$

Thus, a representation that compresses a file's storage size from 10 MB to 2 MB has a compression ratio of 10/2 = 5, often notated as an explicit ratio, 5:1 (read "five" to "one"), or as an implicit ratio, 5/1. This formulation applies equally for compression, where the uncompressed size is that of the original; and for decompression, where the uncompressed size is that of the reproduction. Sometimes the ''space saving'' is given instead, which is defined as the reduction in size relative to the uncompressed size:

$$\text{Space Saving} = 1 - \frac{\text{Compressed Size}}{\text{Uncompressed Size}}$$

Thus, a representation that compresses the storage size of a ...
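
Both measures are simple arithmetic. The sketch below uses illustrative function names and applies the two definitions to the 10 MB to 2 MB example from the text; the space-saving figure for that example is computed here, not quoted from the source.

```python
def compression_ratio(uncompressed_size: float, compressed_size: float) -> float:
    """Uncompressed size divided by compressed size."""
    return uncompressed_size / compressed_size


def space_saving(uncompressed_size: float, compressed_size: float) -> float:
    """Reduction in size relative to the uncompressed size."""
    return 1 - compressed_size / uncompressed_size


ratio = compression_ratio(10, 2)   # 10 MB compressed to 2 MB
saving = space_saving(10, 2)
print(f"ratio {ratio:.0f}:1, space saving {saving:.0%}")  # ratio 5:1, space saving 80%
```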

Lossless Compression Algorithms

Lossless compression is a class of data compression that allows the original data to be perfectly reconstructed from the compressed data with no loss of information. Lossless compression is possible because most real-world data exhibits statistical redundancy. By contrast, lossy compression permits reconstruction only of an approximation of the original data, though usually with greatly improved compression rates (and therefore reduced media sizes). By operation of the pigeonhole principle, no lossless compression algorithm can shrink the size of all possible data: Some data will get longer by at least one symbol or bit. Compression algorithms are usually effective for human- and machine-readable documents and cannot shrink the size of random data that contain no redundancy. Different algorithms exist that are designed either with a specific type of input data in mind or with specific assumptions about what kinds of redundancy the uncompressed data are likely to contain. Lo ...

Faculty Of Electrical Engineering And Computing, University Of Zagreb

The Faculty of Electrical Engineering and Computing (abbr. ''FER'') is a faculty of the University of Zagreb. It is the largest technical faculty and the leading educational facility for research and development in the fields of electrical engineering and computing in Croatia. FER owns four buildings situated in the Zagreb neighbourhood of Martinovka, Trnje. The total area of the site is . The Faculty employs more than 160 professors and 210 teaching and research assistants. In the academic year 2010/2011, the total number of students was about 3,800 at the undergraduate and graduate level, and about 450 in the PhD program. Since the academic year 2004/2005, when the implementation of the Bologna process started at the University of Zagreb, the faculty has had two baccalaureus programmes (each lasting 3 years):

* Electrical engineering and information technology
* Computing

After receiving a bachelor's degree, stu ...

Lempel–Ziv–Stac

Lempel–Ziv–Stac (LZS, or Stac compression or Stacker compression) is a lossless data compression algorithm that uses a combination of the LZ77 sliding-window compression algorithm and fixed Huffman coding. It was originally developed by Stac Electronics for tape compression, and subsequently adapted for hard disk compression and sold as the Stacker disk compression software. It was later specified as a compression algorithm for various network protocols. LZS is specified in the Cisco IOS stack.

Standards: LZS compression is standardized as an INCITS (previously ANSI) standard. LZS compression is specified for various Internet protocols:

* ''PPP LZS-DCP Compression Protocol (LZS-DCP)''
* ''PPP Stac LZS Compression Protocol''
* ''IP Payload Compression Using LZS''
* ''Transport Layer Security (TLS) Protocol Compression Using Lempel-Ziv-Stac (LZS)''

Algorithm: LZS compression and decompression use an LZ77-type algorithm. It uses the last 2 KB of u ...

Trie

In computer science, a trie, also known as a digital tree or prefix tree, is a specialized search tree data structure used to store and retrieve strings from a dictionary or set. Unlike a binary search tree, nodes in a trie do not store their associated key. Instead, each node's ''position'' within the trie determines its associated key, with the connections between nodes defined by individual characters rather than the entire key. Tries are particularly effective for tasks such as autocomplete, spell checking, and IP routing, offering advantages over hash tables due to their prefix-based organization and lack of hash collisions. Every child node shares a common prefix with its parent node, and the root node represents the empty string. While basic trie implementations can be memory-intensive, various optimization techniques such as compression and bitwise representations have been developed to improve their efficiency. A n ...
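
A basic, uncompressed trie can be sketched as follows. The class and method names are illustrative and not taken from any library; each node holds only its outgoing character edges and a flag, so a key is determined entirely by the path from the root.

```python
class TrieNode:
    def __init__(self):
        self.children = {}   # character -> child TrieNode
        self.is_key = False  # True if the path from the root spells a stored key


class Trie:
    def __init__(self):
        self.root = TrieNode()  # the root represents the empty string

    def insert(self, key: str) -> None:
        node = self.root
        for ch in key:
            if ch not in node.children:
                node.children[ch] = TrieNode()
            node = node.children[ch]
        node.is_key = True

    def _walk(self, s: str):
        """Follow s from the root; return the node reached, or None."""
        node = self.root
        for ch in s:
            node = node.children.get(ch)
            if node is None:
                return None
        return node

    def contains(self, key: str) -> bool:
        node = self._walk(key)
        return node is not None and node.is_key

    def starts_with(self, prefix: str):
        """Return all stored keys sharing the prefix, sorted (autocomplete-style)."""
        start = self._walk(prefix)
        if start is None:
            return []
        results, stack = [], [(start, prefix)]
        while stack:
            node, spelled = stack.pop()
            if node.is_key:
                results.append(spelled)
            for ch, child in node.children.items():
                stack.append((child, spelled + ch))
        return sorted(results)


t = Trie()
for word in ("tea", "ten", "to", "inn"):
    t.insert(word)
print(t.contains("te"), t.starts_with("te"))  # False ['tea', 'ten']
```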

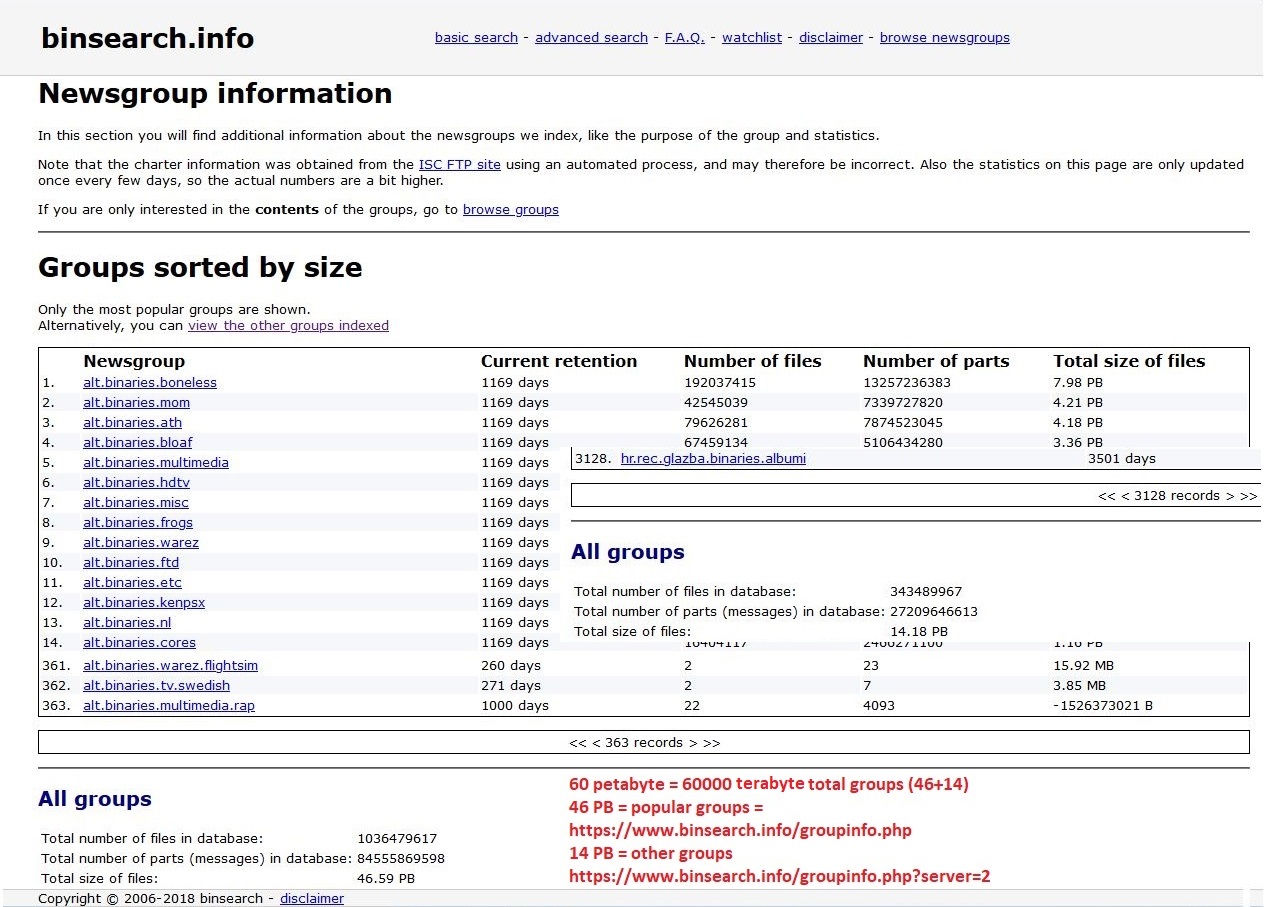

Usenet Newsgroup

A Usenet newsgroup is a repository, usually within the Usenet system, for messages posted by users in different locations using the Internet. Newsgroups are not only discussion groups or conversations, but also a place to publish articles, start development efforts such as the creation of Linux, sustain mailing lists, and upload files. This is thanks to the protocol, which imposes no article size limit and leaves limits to the providers to decide. In the late 1980s, Usenet articles were often limited by providers to 60,000 characters, but over time Usenet groups have been split into two types: ''text'', mainly for discussions, conversations, and articles, limited by most providers to about 32,000 characters, and ''binary'', for file transfer, with providers setting limits ranging from less than 1 MB to about 4 MB. Newsgroups are technically distinct from, but functionally similar to, discussion forums on the World Wide Web. Newsreader software is used to read the content of newsgroups. Before the adoption ...

Huffman Coding

In computer science and information theory, a Huffman code is a particular type of optimal prefix code that is commonly used for lossless data compression. The process of finding or using such a code is Huffman coding, an algorithm developed by David A. Huffman while he was a Sc.D. student at MIT, and published in the 1952 paper "A Method for the Construction of Minimum-Redundancy Codes". The output from Huffman's algorithm can be viewed as a variable-length code table for encoding a source symbol (such as a character in a file). The algorithm derives this table from the estimated probability or frequency of occurrence (''weight'') for each possible value of the source symbol. As in other entropy encoding methods, more common symbols are generally represented using fewer bits than less common symbols. Huffman's method can be efficiently implemented, finding a code in time linear to the number of input weigh ...
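
The construction of the code table can be sketched with a priority queue. This is a minimal illustration with a hypothetical function name. It uses a binary heap and therefore runs in O(n log n) time, not the linear-time variant mentioned above, and it derives weights from symbol counts in the input text.

```python
import heapq
from collections import Counter


def huffman_code(text: str) -> dict:
    """Return a {symbol: bit string} table built from symbol frequencies."""
    freq = Counter(text)
    if not freq:
        return {}
    if len(freq) == 1:
        # A single distinct symbol still needs one bit per occurrence.
        return {sym: "0" for sym in freq}
    # Heap entries: (weight, unique tiebreak, partial code table for that subtree).
    heap = [(w, i, {sym: ""}) for i, (sym, w) in enumerate(sorted(freq.items()))]
    heapq.heapify(heap)
    tiebreak = len(heap)
    while len(heap) > 1:
        # Merge the two lightest subtrees, prefixing their codes with 0 and 1.
        w1, _, t1 = heapq.heappop(heap)
        w2, _, t2 = heapq.heappop(heap)
        merged = {s: "0" + c for s, c in t1.items()}
        merged.update({s: "1" + c for s, c in t2.items()})
        heapq.heappush(heap, (w1 + w2, tiebreak, merged))
        tiebreak += 1
    return heap[0][2]


table = huffman_code("aaaabbc")
encoded = "".join(table[ch] for ch in "aaaabbc")
print(table, len(encoded))  # the most frequent symbol 'a' gets the shortest code
```

In this example the seven input symbols encode to 10 bits, since `a` receives a 1-bit code and `b` and `c` receive 2-bit codes.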

Endianness

In computing, endianness is the order in which bytes within a word of digital data are transmitted over a data communication medium or addressed (by rising addresses) in computer memory, counting only byte significance compared to earliness. Endianness is primarily expressed as big-endian (BE) or little-endian (LE), terms introduced by Danny Cohen into computer science for data ordering in an Internet Experiment Note published in 1980. The adjective ''endian'' has its origin in the writings of 18th-century Anglo-Irish writer Jonathan Swift. In the 1726 novel ''Gulliver's Travels'', he portrays the conflict between sects of Lilliputians divided into those breaking the shell of a boiled egg from the big end or from the little end. By analogy, ...
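
The two byte orders can be observed directly with Python's standard library. The 32-bit value `0x0A0B0C0D` is an arbitrary example chosen so that each byte is recognizable.

```python
import struct
import sys

value = 0x0A0B0C0D

# Big-endian: most significant byte at the lowest address (sent first).
big = value.to_bytes(4, byteorder="big")
# Little-endian: least significant byte at the lowest address (sent first).
little = value.to_bytes(4, byteorder="little")

print(big.hex())     # 0a0b0c0d
print(little.hex())  # 0d0c0b0a

# struct expresses the same choice with the ">" and "<" format prefixes.
assert struct.pack(">I", value) == big
assert struct.pack("<I", value) == little

# Reading the bytes back with the matching order recovers the value.
assert int.from_bytes(little, byteorder="little") == value

print(sys.byteorder)  # byte order of the machine running this script
```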

Electronic Arts

Electronic Arts Inc. (EA) is an American video game company headquartered in Redwood City, California. Founded in May 1982 by former Apple employee Trip Hawkins, the company was a pioneer of the early home computer game industry and promoted the designers and programmers responsible for its games as "software artists". EA published numerous games and some productivity software for personal computers, all of which were developed by external individuals or groups until 1987's ''Skate or Die!'' The company shifted toward internal game studios, often through acquisitions, such as Distinctive Software becoming EA Canada in 1991. Into the 21st century, EA develops and publishes games of established franchises, including ''Battlefield'', ''Need for Speed'', ''The Sims'', ''Medal of Honor'', ''Command & Conquer'', ''Dead Space'', ''Mass Effect'', ''Dragon Age'', ''Army of Two'', ''A ...

Run-length Encoding

Run-length encoding (RLE) is a form of lossless data compression in which ''runs'' of data (consecutive occurrences of the same data value) are stored as a single occurrence of that data value and a count of its consecutive occurrences, rather than as the original run. As an imaginary example of the concept, when encoding an image built up from colored dots, the sequence "green green green green green green green green green" is shortened to "green x 9". This is most efficient on data that contains many such runs, for example, simple graphic images such as icons, line drawings, games, and animations. For files that do not have many runs, encoding them with RLE could increase the file size. RLE may also refer in particular to an early graphics file format supported by CompuServe for compressing black and white images, which was widely supplanted by their later Graphics Interchange Format (GIF). RLE also refers to a little-used image format in Windows 3.x that is saved with the fil ...
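
The "green x 9" example can be reproduced with a short sketch. The function names and the `(value, count)` pair representation are illustrative choices, not a description of any of the file formats mentioned above.

```python
from itertools import groupby


def rle_encode(seq):
    """Collapse each run of equal values into a (value, count) pair."""
    return [(value, sum(1 for _ in run)) for value, run in groupby(seq)]


def rle_decode(pairs):
    """Expand (value, count) pairs back into the original sequence."""
    out = []
    for value, count in pairs:
        out.extend([value] * count)
    return out


dots = ["green"] * 9
print(rle_encode(dots))  # [('green', 9)]
assert rle_decode(rle_encode(dots)) == dots

# Data without runs does not shrink: every item becomes its own pair.
print(rle_encode("abc"))  # [('a', 1), ('b', 1), ('c', 1)]
```

The second call shows why input with few runs can grow: three symbols become three pairs, each carrying an extra count.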