Karloff–Zwick Algorithm

The Karloff–Zwick algorithm, in computational complexity theory, is a randomised approximation algorithm that takes an instance of the MAX-3SAT Boolean satisfiability problem as input. If the instance is satisfiable, then the expected weight of the assignment found is at least 7/8 of optimal. There is strong evidence (but not a mathematical proof) that the algorithm achieves 7/8 of optimal even on unsatisfiable MAX-3SAT instances. Howard Karloff and Uri Zwick presented the algorithm in 1997. The algorithm is based on semidefinite programming. It can be derandomized to yield a deterministic polynomial-time algorithm with the same approximation guarantees.

Comparison to random assignment

For the related MAX-E3SAT problem, in which all clauses in the input 3SAT formula are guaranteed to have exactly three literals, the simple randomized approximation algorithm which assigns a truth value to each variable independently and uniformly at random satisfies ...
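The random-assignment baseline for MAX-E3SAT can be checked by brute force on a small instance. The sketch below is illustrative only: the clause encoding (signed integers) and the function names are assumptions, not part of the original article. It relies on the standard fact that a clause over three distinct variables is falsified by exactly one of the eight settings of those variables, so a uniformly random assignment satisfies it with probability 7/8.

```python
from fractions import Fraction
from itertools import product

# Hypothetical encoding: a clause is a tuple of nonzero ints,
# +i meaning variable i and -i meaning NOT variable i.
def satisfied(clause, assignment):
    """True if at least one literal of the clause holds under the assignment."""
    return any(assignment[abs(lit)] == (lit > 0) for lit in clause)

def expected_satisfied_fraction(clauses, num_vars):
    """Exact expected fraction of satisfied clauses under a uniformly random
    assignment, computed by enumerating all 2^num_vars assignments."""
    total = 0
    for bits in product([False, True], repeat=num_vars):
        assignment = {i + 1: b for i, b in enumerate(bits)}
        total += sum(satisfied(c, assignment) for c in clauses)
    return Fraction(total, (2 ** num_vars) * len(clauses))

# Every clause has exactly three literals over three distinct variables.
clauses = [(1, 2, 3), (-1, 2, 4), (-2, -3, -4), (1, -3, 4)]
print(expected_satisfied_fraction(clauses, 4))  # 7/8
```

The enumeration is exponential in the number of variables, so it is only meant as a sanity check on tiny formulas.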

Computational Complexity Theory

In theoretical computer science and mathematics, computational complexity theory focuses on classifying computational problems according to their resource usage, and explores the relationships between these classifications. A computational problem is a task solved by a computer. A computational problem is solvable by mechanical application of mathematical steps, such as an algorithm. A problem is regarded as inherently difficult if its solution requires significant resources, whatever the algorithm used. The theory formalizes this intuition by introducing mathematical models of computation to study these problems and quantifying their computational complexity, i.e., the amount of resources needed to solve them, such as time and storage. Other measures of complexity are also used, such as the amount of communication (used in communication complexity), the number of gates in a circuit (used in circuit complexity) and the number of processors (used in parallel computing). O ...

Randomized Algorithm

A randomized algorithm is an algorithm that employs a degree of randomness as part of its logic or procedure. The algorithm typically uses uniformly random bits as an auxiliary input to guide its behavior, in the hope of achieving good performance in the "average case" over all possible choices of the random bits; thus the running time, the output, or both are random variables. There is a distinction between algorithms that use the random input so that they always terminate with the correct answer, but where the expected running time is finite (Las Vegas algorithms, for example Quicksort), and algorithms which have a chance of producing an incorrect result (Monte Carlo algorithms, for example the Monte Carlo algorithm for the MFAS problem) or fail to produce a result either by signaling a failure or failing to terminate. In some cases, probabilistic algorithms are the only practical means of solving a problem. In common practice, randomized alg ...

Approximation Algorithm

In computer science and operations research, approximation algorithms are efficient algorithms that find approximate solutions to optimization problems (in particular NP-hard problems) with provable guarantees on the distance of the returned solution to the optimal one. Approximation algorithms naturally arise in the field of theoretical computer science as a consequence of the widely believed P ≠ NP conjecture. Under this conjecture, a wide class of optimization problems cannot be solved exactly in polynomial time. The field of approximation algorithms, therefore, tries to understand how closely it is possible to approximate optimal solutions to such problems in polynomial time. In an overwhelming majority of the cases, the guarantee of such algorithms is a multiplicative one expressed as an approximation ratio or approximation factor, i.e., the optimal solution is always guaranteed to be within a (predetermined) multiplicative factor of the returned solution. However, there a ...

MAX-3SAT

MAX-3SAT is a problem in the computational complexity subfield of computer science. It generalises the Boolean satisfiability problem (SAT), which is a decision problem considered in complexity theory. It is defined as: ''Given a 3-CNF formula Φ (i.e. with at most 3 literals per clause), find an assignment that satisfies the largest number of clauses.'' MAX-3SAT is a canonical complete problem for the complexity class MAXSNP (shown complete in Papadimitriou pg. 314).

Approximability

The decision version of MAX-3SAT is NP-complete. Therefore, a polynomial-time solution can only be achieved if P = NP. An approximation within a factor of 2 can be achieved with this simple algorithm, however:

* Output the solution in which most clauses are satisfied, when either all variables = TRUE or all variables = FALSE.
* Every clause is satisfied by one of the two solutions, therefore one solution satisfies at least half of the clauses.

The Karloff-Zwick algorithm runs in polynomial ...
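The factor-2 algorithm described above can be sketched in a few lines. The clause encoding (tuples of signed integers) and the function names are assumptions made for illustration; clauses are assumed to be non-empty.

```python
def count_satisfied(clauses, value):
    """Number of clauses satisfied when every variable is set to `value`.

    A positive literal holds under all-TRUE, a negative one under all-FALSE.
    """
    return sum(any((lit > 0) == value for lit in clause) for clause in clauses)

def all_true_or_all_false(clauses):
    """Return (value, count) for the better of the two uniform assignments."""
    n_true = count_satisfied(clauses, True)
    n_false = count_satisfied(clauses, False)
    return (True, n_true) if n_true >= n_false else (False, n_false)

# Hypothetical instance: +i is variable i, -i is its negation.
clauses = [(1, -2, 3), (-1, -2), (2, 3), (-3,)]
value, count = all_true_or_all_false(clauses)
print(value, count)  # False 3
```

Because every non-empty clause contains a positive or a negative literal, it is satisfied by at least one of the two assignments, so the returned count is always at least half the number of clauses.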

Boolean Satisfiability Problem

In logic and computer science, the Boolean satisfiability problem (sometimes called propositional satisfiability problem and abbreviated SATISFIABILITY, SAT or B-SAT) asks whether there exists an interpretation that satisfies a given Boolean formula. In other words, it asks whether the formula's variables can be consistently replaced by the values TRUE or FALSE to make the formula evaluate to TRUE. If this is the case, the formula is called ''satisfiable'', else ''unsatisfiable''. For example, the formula "''a'' AND NOT ''b''" is satisfiable because one can find the values ''a'' = TRUE and ''b'' = FALSE, which make (''a'' AND NOT ''b'') = TRUE. In contrast, "''a'' AND NOT ''a''" is unsatisfiable. SAT is the first problem that was proven to be NP-complete; this is the Cook–Levin theorem. This means that all problems in the complexity class NP, which includes a wide range of natu ...
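The two example formulas can be checked with a brute-force search over all truth assignments. This is a minimal sketch, assuming formulas are given as Python callables over a dictionary of variable values; `find_model` is a hypothetical helper name, and the search takes exponential time in the number of variables.

```python
from itertools import product

def find_model(formula, variables):
    """Return a satisfying assignment as a dict, or None if none exists."""
    for values in product([True, False], repeat=len(variables)):
        env = dict(zip(variables, values))
        if formula(env):
            return env
    return None

# "a AND NOT b" is satisfiable with a = TRUE, b = FALSE.
print(find_model(lambda e: e["a"] and not e["b"], ["a", "b"]))

# "a AND NOT a" has no satisfying assignment.
print(find_model(lambda e: e["a"] and not e["a"], ["a"]))
```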

Mathematical Proof

A mathematical proof is a deductive argument for a mathematical statement, showing that the stated assumptions logically guarantee the conclusion. The argument may use other previously established statements, such as theorems; but every proof can, in principle, be constructed using only certain basic or original assumptions known as axioms, along with the accepted rules of inference. Proofs are examples of exhaustive deductive reasoning that establish logical certainty, to be distinguished from empirical arguments or non-exhaustive inductive reasoning that establish "reasonable expectation". Presenting many cases in which the statement holds is not enough for a proof, which must demonstrate that the statement is true in ''all'' possible cases. A proposition that has not been proved but is believed to be true is known as a conjecture, or a hypothesis if frequently used as an assumption for ...

Howard Karloff

Howard is a masculine given name derived from the English surname Howard. ''The Oxford Dictionary of English Christian Names'' notes that "the use of this surname as a christian name is quite recent and there seems to be no particular reason for it except that it is the name of several noble families". The surname has a number of possible origins; in the case of the noble family, the likely source is the Norse given name Hávarðr, composed of the elements ''há'' ("high") and ''varðr'' ("guardian"). Diminutives include Howie and Ward. Howard reached peak popularity in the United States in the 1920s, when it ranked as the 26th most popular boys' name. As of 2018, it had fallen to 968th place.

People with the given name

* Howard Allen (1949–2020), American serial killer
* Howard Duane Allman (1946–1971), American guitar virtuoso
* Howard Anderson (other), name of several people
* Howard Andrew (1934–2021), American poker player
* Howard Ashman (1950–1991), ...

Uri Zwick

Uri Zwick (Hebrew: אורי צוויק) is an Israeli computer scientist and mathematician known for his work on graph algorithms, in particular on distances in graphs and on the color-coding technique for subgraph isomorphism. With Howard Karloff, he is the namesake of the Karloff–Zwick algorithm for approximating the MAX-3SAT problem of Boolean satisfiability. He and his coauthors won the David P. Robbins Prize in 2011 for their work on the block-stacking problem. Zwick earned a bachelor's degree from the Technion – Israel Institute of Technology, and completed his doctorate at Tel Aviv University in 1989 under the supervision of Noga Alon. He is currently a professor of computer science at Tel Aviv University.

Semidefinite Programming

Semidefinite programming (SDP) is a subfield of mathematical programming concerned with the optimization of a linear objective function (a user-specified function that the user wants to minimize or maximize) over the intersection of the cone of positive semidefinite matrices with an affine space, i.e., a spectrahedron. Semidefinite programming is a relatively new field of optimization which is of growing interest for several reasons. Many practical problems in operations research and combinatorial optimization can be modeled or approximated as semidefinite programming problems. In automatic control theory, SDPs are used in the context of linear matrix inequalities. SDPs are in fact a special case of cone programming and can be efficiently solved by interior point methods. All linear programs and (convex) quadratic programs can be expressed as SDPs, and via hierarchies of SDPs the solutions of polynomial optimization problems can be approximated. Semidefinite programming ha ...

Polynomial-time

In theoretical computer science, the time complexity is the computational complexity that describes the amount of computer time it takes to run an algorithm. Time complexity is commonly estimated by counting the number of elementary operations performed by the algorithm, supposing that each elementary operation takes a fixed amount of time to perform. Thus, the amount of time taken and the number of elementary operations performed by the algorithm are taken to be related by a constant factor. Since an algorithm's running time may vary among different inputs of the same size, one commonly considers the worst-case time complexity, which is the maximum amount of time required for inputs of a given size. Less common, and usually specified explicitly, is the average-case complexity, which is the average of the time taken on inputs of a given size (this makes sense because there are only a finite number of possible inputs of a given size). In both cases, the time complexity is gener ...

Method Of Conditional Probabilities

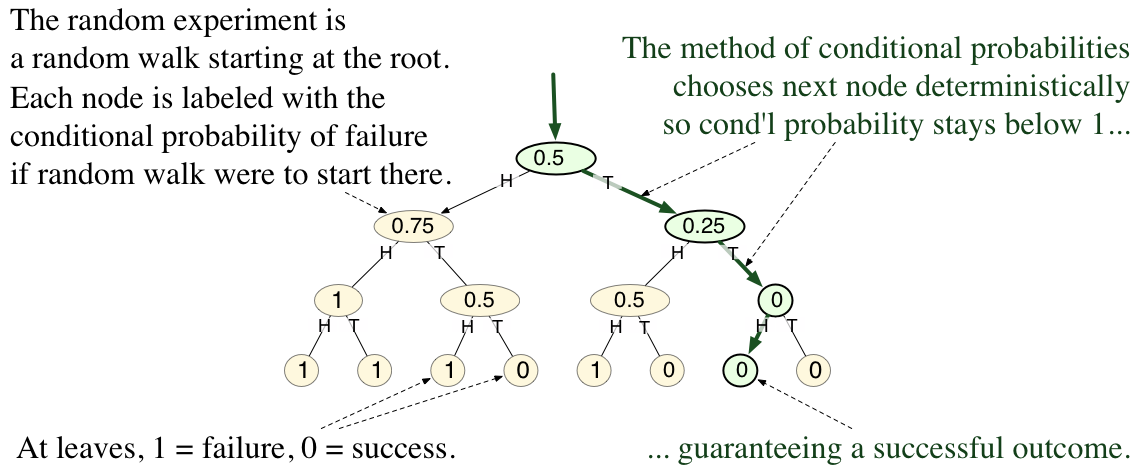

In mathematics and computer science, the method of conditional probabilities is a systematic method for converting non-constructive probabilistic existence proofs into efficient deterministic algorithms that explicitly construct the desired object (see the blog entry by Neal E. Young, accessed 19/04/2012 and 14/09/2023). Often, the probabilistic method is used to prove the existence of mathematical objects with some desired combinatorial properties. The proofs in that method work by showing that a random object, chosen from some probability distribution, has the desired properties with positive probability. Consequently, they are nonconstructive ...
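As an illustration of the method, the sketch below derandomizes the uniform random assignment for MAX-SAT. This is a textbook application chosen for illustration, not the derandomization of the Karloff–Zwick algorithm itself. Variables are fixed one at a time, each to the value that keeps the conditional expected number of satisfied clauses as large as possible. The clause encoding and all function names are assumptions.

```python
from fractions import Fraction

def clause_prob(clause, partial):
    """Probability that `clause` is satisfied when the variables in `partial`
    are fixed and all remaining variables are uniformly random."""
    free = set()
    for lit in clause:
        var = abs(lit)
        if var in partial:
            if partial[var] == (lit > 0):
                return Fraction(1)      # already satisfied by a fixed variable
        else:
            free.add(lit)
    if any(-lit in free for lit in free):
        return Fraction(1)              # contains x and NOT x: always satisfied
    return 1 - Fraction(1, 2 ** len(free))

def expected_satisfied(clauses, partial):
    """Conditional expectation of the number of satisfied clauses."""
    return sum(clause_prob(c, partial) for c in clauses)

def derandomize(clauses, num_vars):
    """Fix variables 1..num_vars greedily so the conditional expectation
    never decreases."""
    partial = {}
    for var in range(1, num_vars + 1):
        partial[var] = max(
            (True, False),
            key=lambda v: expected_satisfied(clauses, {**partial, var: v}),
        )
    return partial

def count_satisfied(clauses, assignment):
    return sum(any(assignment[abs(l)] == (l > 0) for l in c) for c in clauses)

clauses = [(1, 2, 3), (-1, 2, 4), (-2, -3, -4), (1, -3, 4)]
assignment = derandomize(clauses, 4)
print(assignment, count_satisfied(clauses, assignment))
```

Since the conditional expectation is the average of its two values over the next variable, one choice is always at least as large as the current expectation, so the final assignment satisfies at least as many clauses as the random assignment does in expectation.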