
Kolmogorov's Three-series Theorem

In probability theory, Kolmogorov's three-series theorem, named after Andrey Kolmogorov, gives a criterion for the almost sure convergence of an infinite series of random variables. The criterion is stated in terms of the convergence of three different series involving properties of their probability distributions. Kolmogorov's three-series theorem, combined with Kronecker's lemma, can be used to give a relatively easy proof of the strong law of large numbers.

Statement of the theorem. Let (X_n)_{n \in \mathbb{N}} be independent random variables. The random series \sum_{n=1}^{\infty} X_n converges almost surely in \mathbb{R} if the following conditions hold for some A > 0, and only if the following conditions hold for any A > 0:

(i) \sum_{n=1}^{\infty} \mathbb{P}(|X_n| > A) converges.
(ii) Let Y_n = X_n \mathbf{1}_{\{|X_n| \leq A\}}. Then \sum_{n=1}^{\infty} \mathbb{E}[Y_n] converges.
(iii) \sum_{n=1}^{\infty} \operatorname{Var}(Y_n) converges.

Proof. Sufficiency of conditions ("if"): condition (i) and the Borel–Cantelli lemma give that X_n = Y_n for all sufficiently large n, almost surely. Hence \sum_{n=1}^{\infty} X_n converges if and only if \sum_{n=1}^{\infty} Y_n converges. Conditions (ii)-(iii) and Kolmogorov's two-series theorem give the almost sure convergence of ...
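The following minimal Python sketch makes conditions (i)-(iii) concrete. It evaluates partial sums of the three series for random variables whose distributions are finite lists of (value, probability) pairs. The helper names (`three_series_terms`, `partial_three_series`, `random_signs`) and the example family X_n = ±n^{-p} are hypothetical illustrations, not part of the theorem. Numerical partial sums can only suggest convergence; they cannot prove it.

```python
import math

def three_series_terms(dist, A):
    """n-th terms of the three series for one X_n.

    dist: list of (value, probability) pairs (assumed finite so every
    expectation is a finite sum). Returns (P(|X_n| > A), E[Y_n], Var(Y_n))
    with Y_n = X_n * 1{|X_n| <= A}.
    """
    tail = sum(p for x, p in dist if abs(x) > A)
    mean_y = sum(x * p for x, p in dist if abs(x) <= A)
    second_moment_y = sum(x * x * p for x, p in dist if abs(x) <= A)
    return tail, mean_y, second_moment_y - mean_y ** 2

def partial_three_series(dist_of_n, A, N):
    """Partial sums up to N of the series in conditions (i), (ii), (iii)."""
    s1 = s2 = s3 = 0.0
    for n in range(1, N + 1):
        t, m, v = three_series_terms(dist_of_n(n), A)
        s1 += t
        s2 += m
        s3 += v
    return s1, s2, s3

def random_signs(p):
    """Hypothetical example: X_n = +n**(-p) or -n**(-p), each with probability 1/2."""
    return lambda n: [(n ** -p, 0.5), (-(n ** -p), 0.5)]

s1, s2, s3 = partial_three_series(random_signs(1.0), A=1.0, N=10_000)
print(s1, s2, s3, math.pi ** 2 / 6)
```

Take A = 1 and p = 1. Then series (i) and (ii) vanish term by term, and series (iii) approaches π²/6, which is consistent with almost sure convergence. With p = 1/2, series (iii) becomes the harmonic series and grows without bound. Because the conditions must hold for any A, the theorem then rules out almost sure convergence for that family.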

Probability Theory

Probability theory is the branch of mathematics concerned with probability. Although there are several different probability interpretations, probability theory treats the concept in a rigorous mathematical manner by expressing it through a set of axioms. Typically these axioms formalise probability in terms of a probability space, which assigns a measure taking values between 0 and 1, termed the probability measure, to a set of outcomes called the sample space. Any specified subset of the sample space is called an event. Central subjects in probability theory include discrete and continuous random variables, probability distributions, and stochastic processes (which provide mathematical abstractions of non-deterministic or uncertain processes or measured quantities that may either be single occurrences or evolve over time in a random fashion). Although it is not possible to perfectly predict random events, much can be said about their behavior. Two major results in probability ...
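On a finite sample space, the axiomatic setup above reduces to assigning a non-negative weight to each outcome so that the weights sum to 1. The probability of an event is then the total weight of its outcomes. The following sketch assumes a fair six-sided die as the example. The names `SAMPLE_SPACE`, `WEIGHTS`, `probability`, and `check_axioms` are illustrative only.

```python
from fractions import Fraction

# Illustrative finite probability space: one roll of a fair six-sided die.
SAMPLE_SPACE = (1, 2, 3, 4, 5, 6)
WEIGHTS = {outcome: Fraction(1, 6) for outcome in SAMPLE_SPACE}

def probability(event):
    """P(event) for an event given as a subset of the sample space."""
    if not set(event) <= set(SAMPLE_SPACE):
        raise ValueError("an event must be a subset of the sample space")
    return sum((WEIGHTS[o] for o in set(event)), Fraction(0))

def check_axioms():
    """Spot-check the axioms on this space: non-negativity, P(sample space) = 1,
    and additivity for one pair of disjoint events."""
    assert all(w >= 0 for w in WEIGHTS.values())
    assert probability(SAMPLE_SPACE) == 1
    evens, ones = {2, 4, 6}, {1}
    assert probability(evens | ones) == probability(evens) + probability(ones)
    return True

print(probability({2, 4, 6}))  # 1/2
```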

Expected Value

In probability theory, the expected value (also called expectation, expectancy, mathematical expectation, mean, average, or first moment) is a generalization of the weighted average. Informally, the expected value is the arithmetic mean of a large number of independently selected outcomes of a random variable. The expected value of a random variable with a finite number of outcomes is a weighted average of all possible outcomes. In the case of a continuum of possible outcomes, the expectation is defined by integration. In the axiomatic foundation for probability provided by measure theory, the expectation is given by Lebesgue integration. The expected value of a random variable X is often denoted by E(X), E[X], or EX, with E also often stylized as \mathbf{E} or \mathbb{E}.

History. The idea of the expected value originated in the middle of the 17th century from the study of the so-called problem of points, which seeks to divide the stakes in a fair way between two players, who have to end th ...
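For a random variable with finitely many outcomes, the weighted-average definition above is a single sum of value times probability. The following is a minimal sketch assuming the distribution is given as a dictionary from outcome to exact `Fraction` probability. The function name `expected_value` is hypothetical.

```python
from fractions import Fraction

def expected_value(distribution):
    """Weighted average sum(x * p) over a finite distribution {outcome: probability}."""
    if sum(distribution.values()) != 1:
        raise ValueError("probabilities must sum to 1")
    return sum((x * p for x, p in distribution.items()), Fraction(0))

# Fair six-sided die: (1 + 2 + ... + 6) / 6 = 21/6 = 7/2.
die = {face: Fraction(1, 6) for face in range(1, 7)}
print(expected_value(die))  # 7/2
```

Using `Fraction` keeps the arithmetic exact, so the result 7/2 is not blurred by floating-point rounding.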

Harmonic Series (mathematics)

In mathematics, the harmonic series is the infinite series formed by summing all positive unit fractions: \sum_{n=1}^{\infty} \frac{1}{n} = 1 + \frac{1}{2} + \frac{1}{3} + \frac{1}{4} + \frac{1}{5} + \cdots. The first n terms of the series sum to approximately \ln n + \gamma, where \ln is the natural logarithm and \gamma \approx 0.577 is the Euler–Mascheroni constant. Because the logarithm has arbitrarily large values, the harmonic series does not have a finite limit: it is a divergent series. Its divergence was proven in the 14th century by Nicole Oresme using a precursor to the Cauchy condensation test for the convergence of infinite series. It can also be proven to diverge by comparing the sum to an integral, according to the integral test for convergence. Applications of the harmonic series and its partial sums include Euler's proof that there are infinitely many prime numbers, the analysis of the coupon collector's problem on how many random trials are needed to provide a complete range of responses, the co ...
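The approximation H_n ≈ \ln n + \gamma can be checked numerically. The following is a small sketch. The constant below is γ rounded to double precision, and `harmonic` is an illustrative helper, not a library function.

```python
import math

EULER_GAMMA = 0.5772156649015329  # Euler–Mascheroni constant, rounded to double precision

def harmonic(n):
    """Partial sum H_n = 1 + 1/2 + ... + 1/n, summed with math.fsum for accuracy."""
    return math.fsum(1.0 / k for k in range(1, n + 1))

for n in (10, 1000, 100_000):
    approx = math.log(n) + EULER_GAMMA
    # The gap H_n - (ln n + gamma) shrinks roughly like 1 / (2n).
    print(n, harmonic(n), approx, harmonic(n) - approx)
```

The partial sums keep growing, but only logarithmically. This is why the divergence is easy to miss from a table of values.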

Bounded Set

"Bounded" and "boundary" are distinct concepts; for the latter see boundary (topology). A circle in isolation is a boundaryless bounded set, while the half plane is unbounded yet has a boundary.

In mathematical analysis and related areas of mathematics, a set is called bounded if it is, in a certain sense, of finite size: all of its points lie within some fixed distance of one another. Conversely, a set which is not bounded is called unbounded. The word "bounded" makes no sense in a general topological space without a corresponding metric. A bounded set is not necessarily a closed set, and vice versa. For example, a subset S of the 2-dimensional real space \mathbb{R}^2 constrained by the two parabolic curves y = x^2 + 1 and y = x^2 - 1, defined in a Cartesian coordinate system, is closed but is not b ...
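The claim that the region between the two parabolas is closed but unbounded can be checked directly from the metric definition of boundedness. This sketch assumes the Euclidean metric on \mathbb{R}^2. It reads "constrained by" the two curves as S = {(x, y) : x^2 - 1 \le y \le x^2 + 1}, and the map f is introduced only for this argument.

```latex
% Boundedness in a metric space (M, d):
S \subseteq M \text{ is bounded} \iff \exists\, x_0 \in M,\ \exists\, r > 0 :\ d(x, x_0) \le r \ \text{for all } x \in S.

% Closed: S is the preimage of the closed interval [-1, 1] under the continuous map f(x, y) = y - x^2.
S = \{(x, y) \in \mathbb{R}^2 : x^2 - 1 \le y \le x^2 + 1\} = f^{-1}([-1, 1]).

% Unbounded: (t, t^2) \in S for every t, and for any fixed x_0 the triangle inequality gives
d\big((t, t^2), x_0\big) \ge \sqrt{t^2 + t^4} - d\big(x_0, (0, 0)\big) \ge |t| - d\big(x_0, (0, 0)\big) \xrightarrow[t \to \infty]{} \infty.
```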

Variance

In probability theory and statistics, variance is the expectation of the squared deviation of a random variable from its population mean or sample mean. Variance is a measure of dispersion, meaning it is a measure of how far a set of numbers is spread out from their average value. Variance has a central role in statistics, where some ideas that use it include descriptive statistics, statistical inference, hypothesis testing, goodness of fit, and Monte Carlo sampling. Variance is an important tool in the sciences, where statistical analysis of data is common. The variance is the square of the standard deviation, the second central moment of a distribution, and the covariance of the random variable with itself, and it is often represented by \sigma^2, s^2, \operatorname{Var}(X), V(X), or \mathbb{V}(X). An advantage of variance as a measure of dispersion is that it is more amenable to algebraic manipulation than other measures of dispersion such as the expected absolute deviation; for e ...
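For a finite distribution, the definition E[(X - \mu)^2] and the algebraic shortcut E[X^2] - (E[X])^2 give the same number. The following minimal sketch computes the population variance (not the sample variance) both ways. It assumes the distribution is given as a dictionary of exact `Fraction` probabilities, and the function names are illustrative.

```python
from fractions import Fraction

def variance(distribution):
    """Population variance E[(X - mu)^2] of a finite distribution {outcome: probability}."""
    mu = sum(x * p for x, p in distribution.items())
    return sum((x - mu) ** 2 * p for x, p in distribution.items())

def variance_shortcut(distribution):
    """The same quantity via Var(X) = E[X^2] - (E[X])^2."""
    mu = sum(x * p for x, p in distribution.items())
    return sum(x * x * p for x, p in distribution.items()) - mu ** 2

# Fair die: E[X^2] = 91/6 and (E[X])^2 = 49/4, so Var(X) = 35/12.
die = {face: Fraction(1, 6) for face in range(1, 7)}
print(variance(die), variance_shortcut(die))  # 35/12 35/12
```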

Martingale (probability theory)

In probability theory, a martingale is a sequence of random variables (i.e., a stochastic process) for which, at a particular time, the conditional expectation of the next value in the sequence is equal to the present value, regardless of all prior values.

History. Originally, martingale referred to a class of betting strategies that was popular in 18th-century France. The simplest of these strategies was designed for a game in which the gambler wins their stake if a coin comes up heads and loses it if the coin comes up tails. The strategy had the gambler double their bet after every loss so that the first win would recover all previous losses plus win a profit equal to the original stake. As the gambler's wealth and available time jointly approach infinity, their probability of eventually flipping heads approaches 1, which makes the martingale betting strategy seem like a sure thing. However, the exponential growth of the bets eventually bankrupts its users due to f ...
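The doubling strategy described above can be traced on a fixed sequence of coin outcomes. This is a minimal Python sketch under several illustrative assumptions: the function name `doubling_strategy`, the 'H'/'T' encoding, resetting to the initial stake after a win, and stopping once the next bet exceeds the bankroll.

```python
def doubling_strategy(outcomes, initial_stake, bankroll):
    """Play the doubling betting strategy on a fixed sequence of coin outcomes.

    'H' wins the current stake and 'T' loses it. After each loss the stake doubles;
    after a win it resets to initial_stake (an assumption of this sketch). Play stops
    early if the next stake exceeds the remaining bankroll.
    Returns (final_bankroll, rounds_played).
    """
    stake = initial_stake
    rounds = 0
    for outcome in outcomes:
        if stake > bankroll:
            break  # the doubled bet can no longer be covered: the strategy fails here
        rounds += 1
        if outcome == "H":
            bankroll += stake
            stake = initial_stake
        else:
            bankroll -= stake
            stake *= 2
    return bankroll, rounds

print(doubling_strategy("TTH", 1, 100))  # (101, 3): losses 1 + 2 recovered, profit 1
print(doubling_strategy("TTTT", 1, 7))   # (0, 3): bankroll exhausted before the 8-unit bet
```

The first call shows a win recovering all earlier losses plus one original stake. The second call shows a finite bankroll running out after a short losing streak, which is the failure mode the text describes.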

Kolmogorov's Two-series Theorem

In probability theory, Kolmogorov's two-series theorem is a result about the convergence of random series. It follows from Kolmogorov's inequality and is used in one proof of the strong law of large numbers.

Statement of the theorem. Let \left( X_n \right)_{n=1}^{\infty} be independent random variables with expected values \mathbb{E}\left[ X_n \right] = \mu_n and variances \operatorname{Var}\left( X_n \right) = \sigma_n^2, such that \sum_{n=1}^{\infty} \mu_n converges in ℝ and \sum_{n=1}^{\infty} \sigma_n^2 converges in ℝ. Then \sum_{n=1}^{\infty} X_n converges in ℝ almost surely.

Proof. Assume without loss of generality that \mu_n = 0. Replacing X_n by X_n - \mu_n changes the series only by the convergent deterministic series \sum_{n=1}^{\infty} \mu_n. Set S_N = \sum_{n=1}^{N} X_n; we will show that \limsup_{N} S_N - \liminf_{N} S_N = 0 with probability 1. For every m \in \mathbb{N},

\limsup_{N \to \infty} S_N - \liminf_{N \to \infty} S_N = \limsup_{N \to \infty} \left( S_N - S_m \right) - \liminf_{N \to \infty} \left( S_N - S_m \right) \leq 2 \sup_{k \in \mathbb{N}} \left| \sum_{i=1}^{k} X_{m+i} \right|.

Thus, for every m \in \mathbb{N} and \epsilon > 0,

\mathbb{P}\left( \limsup_{N \to \infty} \left( S_N - S_m \right) - \liminf_{N \to \infty} \left( S_N - S_m \right) \geq \epsilon \right) \leq \mathbb{P} ...
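The proof controls the tail fluctuation \sup_k |S_{m+k} - S_m| through Kolmogorov's inequality. The following sketch illustrates that step on one simulated path. It assumes the example X_n = \pm 1/n with independent fair signs, so \mu_n = 0 and \sigma_n^2 = 1/n^2 is summable. It compares the observed tail fluctuation with the inequality's bound. The helper names, the seed, and the values of N, m, and eps are arbitrary choices for illustration.

```python
import random

def kolmogorov_bound(variances, eps):
    """Right-hand side of Kolmogorov's inequality for independent zero-mean summands:
    P(max_k |X_1 + ... + X_k| >= eps) <= (sum of variances) / eps**2."""
    return sum(variances) / eps ** 2

def random_sign_partial_sums(n_terms, seed=0):
    """Partial sums S_1, ..., S_N of X_n = (+1 or -1) / n with independent fair signs."""
    rng = random.Random(seed)
    total = 0.0
    sums = []
    for n in range(1, n_terms + 1):
        total += rng.choice((-1.0, 1.0)) / n
        sums.append(total)
    return sums

N, m, eps = 100_000, 10_000, 0.2
sums = random_sign_partial_sums(N, seed=1)
# Largest deviation |S_n - S_m| over m < n <= N on this simulated path.
tail_fluctuation = max(abs(s - sums[m - 1]) for s in sums[m:])
# Bound on the probability that this deviation reaches eps, using sigma_n^2 = 1/n^2.
bound = kolmogorov_bound((1 / n ** 2 for n in range(m + 1, N + 1)), eps)
print(f"max |S_n - S_m| for {m} < n <= {N}: {tail_fluctuation:.4f}")
print(f"Kolmogorov bound on P(that max >= {eps}): {bound:.5f}")
```

A single path cannot demonstrate almost sure convergence. It only illustrates that, once \sum \sigma_n^2 converges, the tail beyond m is tightly controlled.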

Almost Surely

In probability theory, an event is said to happen almost surely (sometimes abbreviated as a.s.) if it happens with probability 1 (with respect to the probability measure). In other words, the set of possible exceptions may be non-empty, but it has probability 0. The concept is analogous to the concept of "almost everywhere" in measure theory. In probability experiments on a finite sample space, there is no difference between almost surely and surely (since having a probability of 1 often entails including all the sample points). However, this distinction becomes important when the sample space is an infinite set, because an infinite set can have non-empty subsets of probability 0. Some examples of the use of this concept include the strong and uniform versions of the law of large numbers, and the continuity of the paths of Brownian motion. The terms almost certainly (a.c.) and almost always (a.a.) are also used. Almost never describes the opposite of almost surely: an event that h ...
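A standard illustration, chosen here rather than taken from the text above, is to toss a fair coin infinitely often, so that \Omega is the set of all infinite head/tail sequences. The event "at least one tail appears" is then almost sure but not sure.

```latex
\mathbb{P}(\text{all tosses are heads}) \le \mathbb{P}(\text{first } n \text{ tosses are heads}) = 2^{-n} \quad \text{for every } n \in \mathbb{N},

\text{hence } \mathbb{P}(\text{all tosses are heads}) = 0 \quad \text{and} \quad \mathbb{P}(\text{at least one tail}) = 1,

\text{even though the sequence } HHH\cdots \in \Omega \text{ is a possible outcome.}
```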

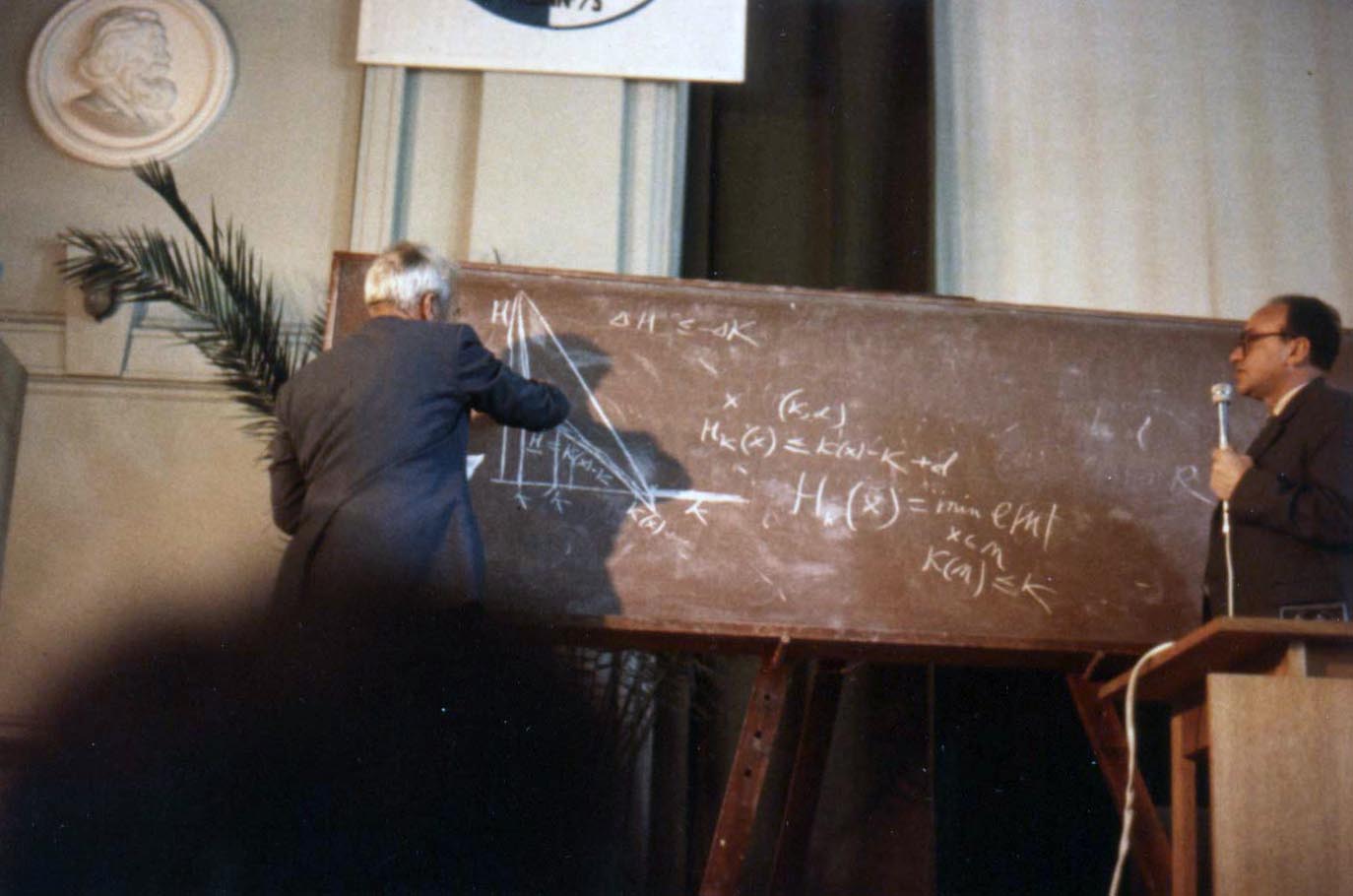

Andrey Kolmogorov

Andrey Nikolaevich Kolmogorov (Russian: Андре́й Никола́евич Колмого́ров, IPA: [ɐnˈdrʲej nʲɪkɐˈlajɪvʲɪtɕ kəlmɐˈɡorəf]; 25 April 1903 – 20 October 1987) was a Soviet mathematician who contributed to the mathematics of probability theory, topology, intuitionistic logic, turbulence, classical mechanics, algorithmic information theory and computational complexity.

Early life. Andrey Kolmogorov was born in Tambov, about 500 kilometers south-southeast of Moscow, in 1903. His unmarried mother, Maria Y. Kolmogorova, died giving birth to him. Andrey was raised by two of his aunts in Tunoshna (near Yaroslavl) at the estate of his grandfather, a well-to-do nobleman. Little is known about Andrey's father. He was supposedly named Nikolai Matveevich Kataev and had been an agronomist. Kataev had been exiled from St. Petersburg to the Yaroslavl province after his participation in the revolutionary movem ...