
Jacobi's Formula

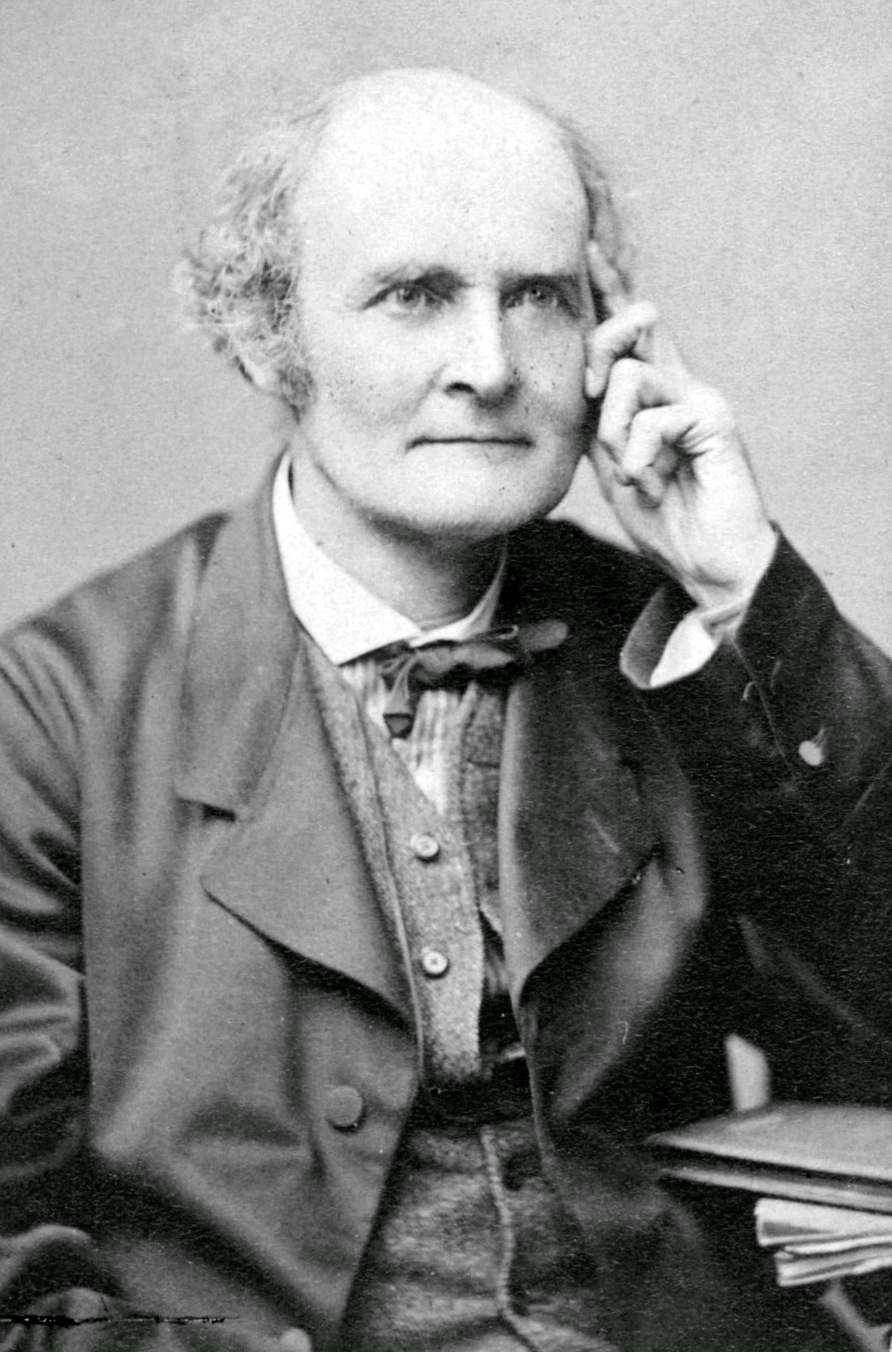

In matrix calculus, Jacobi's formula expresses the derivative of the determinant of a matrix $A$ in terms of the adjugate of $A$ and the derivative of $A$ (Part Three, Section 8.3). If $A$ is a differentiable map from the real numbers to $n \times n$ matrices, then $\frac{d}{dt} \det A(t) = \operatorname{tr}\left(\operatorname{adj}(A(t)) \, \frac{dA(t)}{dt}\right) = \left(\det A(t)\right) \cdot \operatorname{tr}\left(A(t)^{-1} \cdot \frac{dA(t)}{dt}\right)$, where $\operatorname{tr}(X)$ is the trace of the matrix $X$. (The latter equality only holds if $A(t)$ is invertible.) As a special case, $\frac{\partial \det(A)}{\partial A_{ij}} = \operatorname{adj}(A)_{ji}$. Equivalently, if $dA$ stands for the differential of $A$, the general formula is $d \det(A) = \operatorname{tr}(\operatorname{adj}(A) \, dA)$. The formula is named after the mathematician Carl Gustav Jacob Jacobi. Derivation, via matrix computation: We first prove a preliminary lemma. Lemma: Let $A$ and $B$ be a pair of square matrices of the same dimension $n$. Then $\sum_i \sum_j A_{ij} B_{ij} = \operatorname{tr}(A^{\mathsf{T}} B)$. Proof: The pr ...
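The first equality can be checked numerically on a small case. The sketch below is only an illustration: the curve `A(t)` and its hand-computed derivative `dA(t)` are hypothetical choices that do not come from the article. It compares $\operatorname{tr}(\operatorname{adj}(A)\,A')$ with a central finite-difference estimate of the derivative of the determinant.

```python
def det2(m):
    """Determinant of a 2x2 matrix given as nested lists."""
    return m[0][0] * m[1][1] - m[0][1] * m[1][0]


def adj2(m):
    """Adjugate of a 2x2 matrix: swap the diagonal, negate the off-diagonal."""
    return [[m[1][1], -m[0][1]], [-m[1][0], m[0][0]]]


def trace_of_product(x, y):
    """tr(x y) for square matrices of equal size."""
    n = len(x)
    return sum(x[i][k] * y[k][i] for i in range(n) for k in range(n))


def A(t):
    """Hypothetical differentiable curve of 2x2 matrices (illustration only)."""
    return [[1.0 + t, t * t], [2.0 * t, 3.0 - t]]


def dA(t):
    """Entrywise derivative of A(t), worked out by hand."""
    return [[1.0, 2.0 * t], [2.0, -1.0]]


def jacobi_rhs(t):
    """Right-hand side of Jacobi's formula: tr(adj(A(t)) A'(t))."""
    return trace_of_product(adj2(A(t)), dA(t))


def det_derivative_fd(t, h=1e-6):
    """Central finite-difference estimate of d/dt det A(t)."""
    return (det2(A(t + h)) - det2(A(t - h))) / (2.0 * h)
```

For this particular curve, $\det A(t) = 3 + 2t - t^2 - 2t^3$, so both functions should agree with $2 - 2t - 6t^2$ up to finite-difference error.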

Matrix Calculus

In mathematics, matrix calculus is a specialized notation for doing multivariable calculus, especially over spaces of matrices. It collects the various partial derivatives of a single function with respect to many variables, and/or of a multivariate function with respect to a single variable, into vectors and matrices that can be treated as single entities. This greatly simplifies operations such as finding the maximum or minimum of a multivariate function and solving systems of differential equations. The notation used here is commonly used in statistics and engineering, while the tensor index notation is preferred in physics. Two competing notational conventions split the field of matrix calculus into two separate groups. The two groups can be distinguished by whether they write the derivative of a scalar with respect to a vector as a column vector or a row vector. Both of these conventions are possible even when the common assumption is made that vectors shoul ...

Directional Derivative

In mathematics, the directional derivative of a multivariable differentiable (scalar) function along a given vector $\mathbf{v}$ at a given point $\mathbf{x}$ intuitively represents the instantaneous rate of change of the function, moving through $\mathbf{x}$ with a velocity specified by $\mathbf{v}$. The directional derivative of a scalar function $f$ with respect to a vector $\mathbf{v}$ at a point (e.g., position) $\mathbf{x}$ may be denoted by any of the following: $\nabla_{\mathbf{v}} f(\mathbf{x}) = f'_{\mathbf{v}}(\mathbf{x}) = D_{\mathbf{v}} f(\mathbf{x}) = Df(\mathbf{x})(\mathbf{v}) = \partial_{\mathbf{v}} f(\mathbf{x}) = \mathbf{v} \cdot \nabla f(\mathbf{x}) = \mathbf{v} \cdot \frac{\partial f(\mathbf{x})}{\partial \mathbf{x}}$. It therefore generalizes the notion of a partial derivative, in which the rate of change is taken along one of the curvilinear coordinate curves, all other coordinates being constant. The directional derivative is a special case of the Gateaux derivative. Definition: The directional derivative of a scalar function $f(\mathbf{x}) = f(x_1, x_2, \ldots, x_n)$ along a vector $\mathbf{v} = (v_1, \ldots, v_n)$ is the function $\nabla_{\mathbf{v}} f$ defi ...
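As a rough illustration of the notation $\mathbf{v} \cdot \nabla f(\mathbf{x})$, the following sketch compares it with a finite-difference rate of change along $\mathbf{v}$. The function `f` and its hand-derived gradient `grad_f` are hypothetical examples chosen here, not taken from the article.

```python
def f(x):
    """Hypothetical scalar function f(x, y) = x^2 * y + 3 * y."""
    return x[0] ** 2 * x[1] + 3.0 * x[1]


def grad_f(x):
    """Gradient of f, derived by hand: (2xy, x^2 + 3)."""
    return [2.0 * x[0] * x[1], x[0] ** 2 + 3.0]


def directional_derivative(x, v):
    """Directional derivative computed as the dot product v . grad f(x)."""
    return sum(vi * gi for vi, gi in zip(v, grad_f(x)))


def directional_derivative_fd(x, v, h=1e-6):
    """Central finite-difference rate of change of f through x along v."""
    forward = [xi + h * vi for xi, vi in zip(x, v)]
    backward = [xi - h * vi for xi, vi in zip(x, v)]
    return (f(forward) - f(backward)) / (2.0 * h)
```

Note that $\mathbf{v}$ is not normalized here, which matches the "velocity" reading in the text: doubling $\mathbf{v}$ doubles the result.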

Determinants

In mathematics, the determinant is a scalar value that is a function of the entries of a square matrix. It characterizes some properties of the matrix and the linear map represented by the matrix. In particular, the determinant is nonzero if and only if the matrix is invertible and the linear map represented by the matrix is an isomorphism. The determinant of a product of matrices is the product of their determinants (the preceding property is a corollary of this one). The determinant of a matrix $A$ is denoted $\det(A)$, $\det A$, or $|A|$. The determinant of a $2 \times 2$ matrix is $\begin{vmatrix} a & b \\ c & d \end{vmatrix} = ad - bc$, and the determinant of a $3 \times 3$ matrix is $\begin{vmatrix} a & b & c \\ d & e & f \\ g & h & i \end{vmatrix} = aei + bfg + cdh - ceg - bdi - afh$. The determinant of an $n \times n$ matrix can be defined in several equivalent ways. The Leibniz formula expresses the determinant as a sum of signed products of matrix entries such that each summand is the product of $n$ different entries, and the number of these summands is $n!$, the factorial of $n$ (th ...
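The Leibniz formula mentioned above can be written out directly. This is a minimal sketch for small matrices given as nested lists; the function names are illustrative, and the $n!$ cost makes it unsuitable for anything but tiny inputs.

```python
from itertools import permutations


def permutation_sign(perm):
    """Sign of a permutation, computed by counting inversions."""
    inversions = sum(
        1
        for i in range(len(perm))
        for j in range(i + 1, len(perm))
        if perm[i] > perm[j]
    )
    return -1 if inversions % 2 else 1


def det_leibniz(m):
    """Determinant as a signed sum over all n! permutations of the columns."""
    n = len(m)
    total = 0
    for perm in permutations(range(n)):
        term = permutation_sign(perm)
        # Each summand takes exactly one entry from every row and column.
        for row, col in enumerate(perm):
            term *= m[row][col]
        total += term
    return total
```

For a $2 \times 2$ input the two permutations reproduce $ad - bc$, and for a $3 \times 3$ input the six permutations reproduce the six-term expansion quoted above.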

Adjugate Matrix

In linear algebra, the adjugate or classical adjoint of a square matrix $\mathbf{A}$ is the transpose of its cofactor matrix and is denoted by $\operatorname{adj}(\mathbf{A})$. It is also occasionally known as the adjunct matrix, or "adjoint", though the latter today normally refers to a different concept, the adjoint operator, which is the conjugate transpose of the matrix. The product of a matrix with its adjugate gives a diagonal matrix (entries not on the main diagonal are zero) whose diagonal entries are the determinant of the original matrix: $\mathbf{A} \operatorname{adj}(\mathbf{A}) = \det(\mathbf{A}) \mathbf{I}$, where $\mathbf{I}$ is the identity matrix of the same size as $\mathbf{A}$. Consequently, the multiplicative inverse of an invertible matrix can be found by dividing its adjugate by its determinant. Definition: The adjugate of $\mathbf{A}$ is the transpose of the cofactor matrix $\mathbf{C}$ of $\mathbf{A}$, $\operatorname{adj}(\mathbf{A}) = \mathbf{C}^{\mathsf{T}}$. In more detail, suppose $R$ is a unital commutative ring and $\mathbf{A}$ is an $n \times n$ matrix with entries from $R$. The $(i, j)$-minor of $\mathbf{A}$, denoted $\mathbf{M}_{ij}$, is the dete ...
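The identity $\mathbf{A} \operatorname{adj}(\mathbf{A}) = \det(\mathbf{A}) \mathbf{I}$ is easy to test on a small integer matrix. The sketch below builds the adjugate from cofactors, using a Laplace expansion for the determinant; all helper names are illustrative choices, and the approach is only practical for small matrices.

```python
def minor(m, i, j):
    """Submatrix of m with row i and column j removed."""
    return [row[:j] + row[j + 1:] for k, row in enumerate(m) if k != i]


def det(m):
    """Determinant by Laplace expansion along the first row."""
    if len(m) == 1:
        return m[0][0]
    return sum((-1) ** j * m[0][j] * det(minor(m, 0, j)) for j in range(len(m)))


def adjugate(m):
    """Transpose of the cofactor matrix: entry (i, j) is the (j, i) cofactor."""
    n = len(m)
    if n == 1:
        return [[1]]  # the only cofactor of a 1x1 matrix is the empty determinant, 1
    return [
        [(-1) ** (i + j) * det(minor(m, j, i)) for j in range(n)]
        for i in range(n)
    ]


def matmul(x, y):
    """Product of two matrices given as nested lists."""
    return [
        [sum(x[i][k] * y[k][j] for k in range(len(y))) for j in range(len(y[0]))]
        for i in range(len(x))
    ]
```

Because only additions and multiplications are involved, the check `matmul(m, adjugate(m))` stays exact for integer input, which fits the commutative-ring setting of the definition.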

Cayley–Hamilton Theorem

In linear algebra, the Cayley–Hamilton theorem (named after the mathematicians Arthur Cayley and William Rowan Hamilton) states that every square matrix over a commutative ring (such as the real or complex numbers or the integers) satisfies its own characteristic equation. If $A$ is a given $n \times n$ matrix and $I_n$ is the $n \times n$ identity matrix, then the characteristic polynomial of $A$ is defined as $p_A(\lambda) = \det(\lambda I_n - A)$, where $\det$ is the determinant operation and $\lambda$ is a variable for a scalar element of the base ring. Since the entries of the matrix $(\lambda I_n - A)$ are (linear or constant) polynomials in $\lambda$, the determinant is also a degree-$n$ monic polynomial in $\lambda$, $p_A(\lambda) = \lambda^n + c_{n-1}\lambda^{n-1} + \cdots + c_1\lambda + c_0$. One can create an analogous polynomial $p_A(A)$ in the matrix $A$ instead of the scalar variable $\lambda$, defined as $p_A(A) = A^n + c_{n-1}A^{n-1} + \cdots + c_1 A + c_0 I_n$. The Cayley–Hamilton theorem states that this polynomial expression is equal to the zero matrix, which is to ...
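For a $2 \times 2$ matrix the characteristic polynomial is $\lambda^2 - \operatorname{tr}(A)\lambda + \det(A)$, so the theorem can be verified by hand or with a few lines of code. The sketch below is restricted to the $2 \times 2$ case, and its function names are illustrative.

```python
def matmul2(x, y):
    """Product of two 2x2 matrices given as nested lists."""
    return [
        [sum(x[i][k] * y[k][j] for k in range(2)) for j in range(2)]
        for i in range(2)
    ]


def cayley_hamilton_residual(a):
    """Evaluate p_A(A) = A^2 - tr(A) A + det(A) I for a 2x2 matrix A."""
    trace = a[0][0] + a[1][1]
    determinant = a[0][0] * a[1][1] - a[0][1] * a[1][0]
    a_squared = matmul2(a, a)
    return [
        [
            a_squared[i][j] - trace * a[i][j] + determinant * (1 if i == j else 0)
            for j in range(2)
        ]
        for i in range(2)
    ]
```

With integer entries the arithmetic is exact, so the residual is expected to be exactly the zero matrix rather than merely close to it.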

Faddeev–LeVerrier Algorithm

In mathematics (linear algebra), the Faddeev–LeVerrier algorithm is a recursive method to calculate the coefficients of the characteristic polynomial $p_A(\lambda) = \det(\lambda I_n - A)$ of a square matrix $A$, named after Dmitry Konstantinovich Faddeev and Urbain Le Verrier. Calculation of this polynomial yields the eigenvalues of $A$ as its roots; as a matrix polynomial in the matrix $A$ itself, it vanishes by the fundamental Cayley–Hamilton theorem. Computing the determinant from the definition of the characteristic polynomial, however, is computationally cumbersome, because $\lambda$ is a new symbolic quantity, whereas this algorithm works directly with the coefficients of the matrix $A$. The algorithm has been independently rediscovered several times, in some form or another. It was first published in 1840 by Urbain Le Verrier, subsequently redeveloped by P. Horst, Jean-Marie Souriau, in its present form here by Faddeev and Sominsky, and further by J. S. Frame, and others. (For historical poi ...
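The excerpt above does not state the recursion itself, so the sketch below assumes its commonly quoted form: $M_0 = 0$, $c_n = 1$, then $M_k = A M_{k-1} + c_{n-k+1} I$ and $c_{n-k} = -\tfrac{1}{k}\operatorname{tr}(A M_k)$ for $k = 1, \ldots, n$. The function names are illustrative, and exact rational arithmetic is used to avoid rounding in the division by $k$.

```python
from fractions import Fraction


def matmul(x, y):
    """Product of two square matrices given as nested lists."""
    n = len(x)
    return [
        [sum(x[i][k] * y[k][j] for k in range(n)) for j in range(n)]
        for i in range(n)
    ]


def char_poly_coefficients(a):
    """Return [c_0, ..., c_n] with det(lambda I - A) = sum_k c_k lambda^k."""
    n = len(a)
    a = [[Fraction(entry) for entry in row] for row in a]
    coeffs = [Fraction(0)] * n + [Fraction(1)]  # c_n = 1 (monic polynomial)
    m = [[Fraction(0)] * n for _ in range(n)]   # M_0 = 0
    for k in range(1, n + 1):
        # M_k = A M_{k-1} + c_{n-k+1} I
        am = matmul(a, m)
        m = [
            [am[i][j] + (coeffs[n - k + 1] if i == j else 0) for j in range(n)]
            for i in range(n)
        ]
        # c_{n-k} = -tr(A M_k) / k
        am = matmul(a, m)
        coeffs[n - k] = -sum(am[i][i] for i in range(n)) / k
    return coeffs
```

For the matrix `[[1, 2], [3, 4]]` this yields the coefficients of $\lambda^2 - 5\lambda - 2$, whose linear and constant terms are $-\operatorname{tr}(A)$ and $\det(A)$ as expected for a $2 \times 2$ matrix.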

Via Chain Rule

Via or VIA may refer to the following: Science and technology * MOS Technology 6522, Versatile Interface Adapter * ''Via'' (moth), a genus of moths in the family Noctuidae * Via (electronics), a through-connection * VIA Technologies, a Taiwanese manufacturer of electronics * Virtual Interface Adapter, a network protocol * Virtual Interface Architecture, a networking standard used in high-performance computing Education * VIA Vancouver Institute for the Americas, an organization dedicated to education for sustainable development, since 1998 operating in Canada * VIA University College, a university college (Danish: professionshøjskole), since 2008 established in Denmark * VIA, Association of Information Sciences (Dutch: VIA Vereniging Informatiewetenschappen Amsterdam), at the University of Amsterdam, in the Netherlands Transportation * The name for a Roman road, e.g., ''Via Appia'' * VIA was the ICAO airline designator for Venezuelan airline Viasa (1960-1977) * VIA Me ...

Invertible Matrix

In linear algebra, an $n$-by-$n$ square matrix $\mathbf{A}$ is called invertible (also nonsingular or nondegenerate) if there exists an $n$-by-$n$ square matrix $\mathbf{B}$ such that $\mathbf{A}\mathbf{B} = \mathbf{B}\mathbf{A} = \mathbf{I}_n$, where $\mathbf{I}_n$ denotes the $n$-by-$n$ identity matrix and the multiplication used is ordinary matrix multiplication. If this is the case, then the matrix $\mathbf{B}$ is uniquely determined by $\mathbf{A}$, and is called the (multiplicative) inverse of $\mathbf{A}$, denoted by $\mathbf{A}^{-1}$. Matrix inversion is the process of finding the matrix $\mathbf{B}$ that satisfies the prior equation for a given invertible matrix $\mathbf{A}$. A square matrix that is not invertible is called singular or degenerate. A square matrix is singular if and only if its determinant is zero. Singular matrices are rare in the sense that if a square matrix's entries are randomly selected from any finite region on the number line or complex plane, the probability that the matrix is singular is 0, that is, it will "almost never" be singular. Non-square matrices ($m$-by-$n$ matrices for which $m \neq n$) do not ha ...
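The defining condition $\mathbf{A}\mathbf{B} = \mathbf{B}\mathbf{A} = \mathbf{I}_n$ and the determinant test for singularity can both be seen in a $2 \times 2$ sketch. The closed-form inverse used here (adjugate divided by determinant) is a standard result rather than something stated in the excerpt, and the function names are illustrative.

```python
from fractions import Fraction


def matmul2(x, y):
    """Product of two 2x2 matrices given as nested lists."""
    return [
        [sum(x[i][k] * y[k][j] for k in range(2)) for j in range(2)]
        for i in range(2)
    ]


def inverse2(a):
    """Inverse of a 2x2 matrix as adjugate / determinant, in exact arithmetic."""
    determinant = a[0][0] * a[1][1] - a[0][1] * a[1][0]
    if determinant == 0:
        # A zero determinant means the matrix is singular: no inverse exists.
        raise ValueError("matrix is singular")
    d = Fraction(determinant)
    return [
        [a[1][1] / d, -a[0][1] / d],
        [-a[1][0] / d, a[0][0] / d],
    ]
```

Multiplying the result by the original matrix on either side should give the identity, while a matrix with proportional rows such as `[[1, 2], [2, 4]]` is rejected as singular.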

Matrix Exponential

In mathematics, the matrix exponential is a matrix function on square matrices analogous to the ordinary exponential function. It is used to solve systems of linear differential equations. In the theory of Lie groups, the matrix exponential gives the exponential map between a matrix Lie algebra and the corresponding Lie group. Let $X$ be an $n \times n$ real or complex matrix. The exponential of $X$, denoted by $e^X$ or $\exp(X)$, is the $n \times n$ matrix given by the power series $e^X = \sum_{k=0}^\infty \frac{1}{k!} X^k$, where $X^0$ is defined to be the identity matrix $I$ with the same dimensions as $X$. The above series always converges, so the exponential of $X$ is well-defined. If $X$ is a $1 \times 1$ matrix, the matrix exponential of $X$ is a $1 \times 1$ matrix whose single element is the ordinary exponential of the single element of $X$. Properties (elementary properties): Let $X$ and $Y$ be $n \times n$ complex matrices and let $a$ and $b$ be arbitrary complex numbers. We denote the $n \times n$ identity matrix by $I$ and the zero matrix by 0. The matrix exponential satisfies the foll ...
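The power series can be summed term by term to get a feel for the definition. The sketch below truncates the series at a fixed number of terms, which is a simplification chosen for illustration: it is adequate for matrices with small entries but is not how numerical libraries compute the exponential in practice.

```python
def matmul(x, y):
    """Product of two square matrices given as nested lists."""
    n = len(x)
    return [
        [sum(x[i][k] * y[k][j] for k in range(n)) for j in range(n)]
        for i in range(n)
    ]


def expm_series(x, terms=30):
    """Approximate e^X by the partial sum of X^k / k! for k = 0 .. terms - 1."""
    n = len(x)
    # The k = 0 term is the identity matrix, X^0 = I.
    result = [[float(i == j) for j in range(n)] for i in range(n)]
    term = [row[:] for row in result]
    for k in range(1, terms):
        # Build X^k / k! from the previous term: (X^(k-1) / (k-1)!) X / k.
        product = matmul(term, x)
        term = [[entry / k for entry in row] for row in product]
        result = [
            [result[i][j] + term[i][j] for j in range(n)] for i in range(n)
        ]
    return result
```

For a $1 \times 1$ input this reduces to the ordinary exponential series, and for a nilpotent matrix such as `[[0.0, 1.0], [0.0, 0.0]]` the series terminates after the linear term.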

Trace (Linear Algebra)

In linear algebra, the trace of a square matrix $\mathbf{A}$, denoted $\operatorname{tr}(\mathbf{A})$, is defined to be the sum of elements on the main diagonal (from the upper left to the lower right) of $\mathbf{A}$. The trace is only defined for a square matrix ($n \times n$). It can be proved that the trace of a matrix is the sum of its (complex) eigenvalues (counted with multiplicities). It can also be proved that $\operatorname{tr}(\mathbf{A}\mathbf{B}) = \operatorname{tr}(\mathbf{B}\mathbf{A})$ for any two matrices $\mathbf{A}$ and $\mathbf{B}$ for which both products are defined. This implies that similar matrices have the same trace. As a consequence one can define the trace of a linear operator mapping a finite-dimensional vector space into itself, since all matrices describing such an operator with respect to a basis are similar. The trace is related to the derivative of the determinant (see Jacobi's formula). Definition: The trace of an $n \times n$ square matrix $\mathbf{A}$ is defined as $\operatorname{tr}(\mathbf{A}) = \sum_{i=1}^n a_{ii} = a_{11} + a_{22} + \dots + a_{nn}$, where $a_{ii}$ denotes the entry on the $i$th row and $i$th column of $\mathbf{A}$. The entries of $\mathbf{A}$ can be real numbers or (more generally) complex numbers. The trace is not ...
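The cyclic property of the trace holds even when the two factors are not square, as long as both products exist. A small sketch with a $2 \times 3$ and a $3 \times 2$ matrix (helper names are illustrative) makes this concrete: the two products have different sizes but the same trace.

```python
def matmul(x, y):
    """Product of an m x p matrix and a p x q matrix given as nested lists."""
    return [
        [sum(x[i][k] * y[k][j] for k in range(len(y))) for j in range(len(y[0]))]
        for i in range(len(x))
    ]


def trace(m):
    """Sum of the main-diagonal entries of a square matrix."""
    if any(len(row) != len(m) for row in m):
        raise ValueError("trace is only defined for square matrices")
    return sum(m[i][i] for i in range(len(m)))


# A is 2 x 3 and B is 3 x 2, so AB is 2 x 2 while BA is 3 x 3.
A = [[1, 2, 3], [4, 5, 6]]
B = [[7, 8], [9, 10], [11, 12]]
trace_ab = trace(matmul(A, B))
trace_ba = trace(matmul(B, A))
```

Calling `trace` on `A` itself raises an error, reflecting that the trace is only defined for square matrices.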

Characteristic Polynomial

In linear algebra, the characteristic polynomial of a square matrix is a polynomial which is invariant under matrix similarity and has the eigenvalues as roots. It has the determinant and the trace of the matrix among its coefficients. The characteristic polynomial of an endomorphism of a finite-dimensional vector space is the characteristic polynomial of the matrix of that endomorphism over any basis (that is, the characteristic polynomial does not depend on the choice of a basis). The characteristic equation, also known as the determinantal equation, is the equation obtained by equating the characteristic polynomial to zero. In spectral graph theory, the characteristic polynomial of a graph is the characteristic polynomial of its adjacency matrix. Motivation: In linear algebra, eigenvalues and eigenvectors play a fundamental role, since, given a linear transformation, an eigenvector is a vector whose direction is not changed by the transformation, and the correspondin ...

Kronecker Delta

In mathematics, the Kronecker delta (named after Leopold Kronecker) is a function of two variables, usually just non-negative integers. The function is 1 if the variables are equal, and 0 otherwise: $\delta_{ij} = \begin{cases} 0 & \text{if } i \neq j, \\ 1 & \text{if } i = j, \end{cases}$ or with use of Iverson brackets: $\delta_{ij} = [i = j]$, where the Kronecker delta is a piecewise function of variables $i$ and $j$. For example, $\delta_{12} = 0$, whereas $\delta_{33} = 1$. The Kronecker delta appears naturally in many areas of mathematics, physics and engineering, as a means of compactly expressing its definition above. In linear algebra, the $n \times n$ identity matrix $\mathbf{I}$ has entries equal to the Kronecker delta: $I_{ij} = \delta_{ij}$, where $i$ and $j$ take the values $1, 2, \ldots, n$, and the inner product of vectors can be written as $\mathbf{a} \cdot \mathbf{b} = \sum_{i,j=1}^n a_i \delta_{ij} b_j = \sum_{i=1}^n a_i b_i$. Here the Euclidean vectors are defined as $n$-tuples: $\mathbf{a} = (a_1, a_2, \dots, a_n)$ and $\mathbf{b} = (b_1, b_2, \dots, b_n)$, and the last step is obtained by using the values of the Kronecker del ...
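The collapse of the double sum to a single sum can be checked directly. In this minimal sketch (function names are illustrative), the inner product is computed both ways: once over all index pairs weighted by the delta, and once over matching indices only.

```python
def kronecker_delta(i, j):
    """1 if the two indices are equal, 0 otherwise."""
    return 1 if i == j else 0


def identity_matrix(n):
    """n x n identity matrix, with entries given by the Kronecker delta."""
    return [[kronecker_delta(i, j) for j in range(n)] for i in range(n)]


def inner_product_via_delta(a, b):
    """Double sum over all (i, j) of a_i * delta_ij * b_j."""
    n = len(a)
    return sum(
        a[i] * kronecker_delta(i, j) * b[j] for i in range(n) for j in range(n)
    )


def inner_product(a, b):
    """Single sum over i of a_i * b_i, the collapsed form of the double sum."""
    return sum(ai * bi for ai, bi in zip(a, b))
```

Every term with $i \neq j$ is multiplied by zero, so only the $n$ diagonal terms of the $n^2$-term double sum survive.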