
JPEG XS

JPEG XS (ISO/IEC 21122) is an interoperable, visually lossless, low-latency and lightweight image and video coding system used in professional applications. Applications of the standard include streaming high-quality content for virtual reality, drones, autonomous vehicles using cameras, gaming, and broadcasting (SMPTE ST 2022 and SMPTE ST 2110). In this respect, JPEG XS is unique, being the first ISO codec ever designed for this specific purpose. JPEG XS, built on core technology from both intoPIX and Fraunhofer IIS, is formally standardized as ISO/IEC 21122 by the Joint Photographic Experts Group, with the first edition published in 2019. Although not official, the XS acronym was chosen to highlight the "eXtra Small" and "eXtra Speed" characteristics of the codec. Today, the JPEG committee is still actively working on further improvements to XS, with the second edition (A. Descampe et al., "JPEG XS--A New Standard for Visually L ...)

Joint Photographic Experts Group

The Joint Photographic Experts Group (JPEG) is the joint committee between ISO/IEC JTC 1/SC 29 and ITU-T Study Group 16 that created and maintains the JPEG, JPEG 2000, JPEG XR, JPEG XT, JPEG XS, JPEG XL, and related digital image standards. It is also responsible for maintaining the JBIG and JBIG2 standards, which were developed by the former Joint Bi-level Image Experts Group. Within ISO/IEC JTC 1, JPEG is Working Group 1 (WG 1) of Subcommittee 29 (SC 29) and has the formal title "JPEG Coding of digital representations of images"; it is one of eight working groups in SC 29. In the ITU-T (formerly called the CCITT), its work falls within the domain of the ITU-T Visual Coding Experts Group (VCEG), which is Question 6 of Study Group 16. JPEG has typically met three or four times a year in North America, Asia and Europe (meetings were held virtually during the COVID-19 pandemic). The chairman of JPEG (termed its "Convenor" in ISO/IEC terminology) is ...

Real-time Transport Protocol

The Real-time Transport Protocol (RTP) is a network protocol for delivering audio and video over IP networks. RTP is used in communication and entertainment systems that involve streaming media, such as telephony, video teleconference applications including WebRTC, television services and web-based push-to-talk features. RTP typically runs over User Datagram Protocol (UDP). RTP is used in conjunction with the RTP Control Protocol (RTCP). While RTP carries the media streams (e.g., audio and video), RTCP is used to monitor transmission statistics and quality of service (QoS) and aids synchronization of multiple streams. RTP is one of the technical foundations of Voice over IP and in this context is often used in conjunction with a signaling protocol such as the Session Initiation Protocol (SIP) which establishes connections across the network. RTP was developed by the Audio-Video Transport Working Group of the Internet Engineering Task Force (IETF) and first published in 1996 a ...
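
A minimal sketch of packing and parsing the 12-byte RTP fixed header, following the field layout defined in RFC 3550 (version, padding, extension, CSRC count, marker, payload type, sequence number, timestamp, SSRC), all in network byte order. The helper names are hypothetical, and the sketch assumes no padding, no header extension and no contributing sources (CSRCs); payload type 96 in the usage line is just an example value from the dynamic payload-type range.

```python
import struct

RTP_VERSION = 2
# ! = network byte order; B = 8 bits, H = 16 bits, I = 32 bits -> 12 bytes total
RTP_HEADER_FORMAT = "!BBHII"


def pack_rtp_header(payload_type: int, seq: int, timestamp: int, ssrc: int,
                    marker: bool = False) -> bytes:
    """Pack the fixed RTP header with P=0, X=0, CC=0 (assumption of this sketch)."""
    if not 0 <= payload_type < 128:
        raise ValueError("payload type must fit in 7 bits")
    byte0 = RTP_VERSION << 6                      # V in the top 2 bits; P, X, CC all zero
    byte1 = (int(marker) << 7) | payload_type     # M bit, then 7-bit PT
    # Sequence number wraps at 16 bits, timestamp and SSRC at 32 bits.
    return struct.pack(RTP_HEADER_FORMAT, byte0, byte1, seq & 0xFFFF,
                       timestamp & 0xFFFFFFFF, ssrc & 0xFFFFFFFF)


def unpack_rtp_header(data: bytes) -> dict:
    """Decode the first 12 bytes of an RTP packet into named fields."""
    byte0, byte1, seq, timestamp, ssrc = struct.unpack(RTP_HEADER_FORMAT, data[:12])
    return {
        "version": byte0 >> 6,
        "padding": bool(byte0 & 0x20),
        "extension": bool(byte0 & 0x10),
        "csrc_count": byte0 & 0x0F,
        "marker": bool(byte1 & 0x80),
        "payload_type": byte1 & 0x7F,
        "sequence_number": seq,
        "timestamp": timestamp,
        "ssrc": ssrc,
    }


header = pack_rtp_header(payload_type=96, seq=1, timestamp=90000,
                         ssrc=0x1234ABCD, marker=True)
```

A real sender would append the media payload after this header and increment the sequence number per packet; RTCP reports travel separately and are not modeled here.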

Demosaicing

A demosaicing (also de-mosaicing, demosaicking or debayering) algorithm is a digital image process used to reconstruct a full color image from the incomplete color samples output from an image sensor overlaid with a color filter array (CFA). It is also known as "CFA interpolation" or "color reconstruction". Most modern digital cameras acquire images using a single image sensor overlaid with a CFA, so demosaicing is part of the processing pipeline required to render these images into a viewable format. Many modern digital cameras can save images in a raw format, allowing the user to demosaic them in software rather than with the camera's built-in firmware.

Goal: The aim of a demosaicing algorithm is to reconstruct a full color image (i.e. a full set of color triples) from the spatially undersampled color channels output from the CFA. The algorithm should have the following traits:

* Avoidance of false color artifacts, such as chromatic aliases, zipper ...
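
As a minimal illustration of reconstructing full color triples from a single-channel mosaic, the sketch below uses bilinear interpolation, one of the simplest demosaicing methods (chosen here only for illustration; it is known to produce the zipper and false-color artifacts mentioned above at sharp edges). It assumes an RGGB Bayer layout; the function names are hypothetical.

```python
# Channel indices used throughout: 0 = red, 1 = green, 2 = blue.

def rggb_channel(row: int, col: int) -> int:
    """Color of the filter over photosite (row, col) in an RGGB Bayer layout."""
    if row % 2 == 0:
        return 0 if col % 2 == 0 else 1   # even rows: R G R G ...
    return 1 if col % 2 == 0 else 2       # odd rows:  G B G B ...


def demosaic_bilinear(raw: list[list[float]]) -> list[list[list[float]]]:
    """Each missing channel becomes the mean of the same-color samples
    in the pixel's 3x3 neighborhood (clipped at the image border)."""
    h, w = len(raw), len(raw[0])
    if h < 2 or w < 2:
        raise ValueError("need at least a 2x2 mosaic")
    out = [[[0.0, 0.0, 0.0] for _ in range(w)] for _ in range(h)]
    for r in range(h):
        for c in range(w):
            for ch in range(3):
                if rggb_channel(r, c) == ch:
                    out[r][c][ch] = float(raw[r][c])  # measured directly by this photosite
                    continue
                total, count = 0.0, 0
                for dr in (-1, 0, 1):
                    for dc in (-1, 0, 1):
                        rr, cc = r + dr, c + dc
                        if 0 <= rr < h and 0 <= cc < w and rggb_channel(rr, cc) == ch:
                            total += raw[rr][cc]
                            count += 1
                out[r][c][ch] = total / count  # every 3x3 window of a >=2x2 mosaic has each color
    return out
```

For a green value at a red site this averages the four horizontal and vertical neighbors, and for red at a blue site it averages the four diagonal neighbors, which is exactly the classic bilinear scheme. On a flat-colored scene it reproduces the original colors exactly.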

Bayer Filter

A Bayer filter mosaic is a color filter array (CFA) for arranging RGB color filters on a square grid of photosensors. Its particular arrangement of color filters is used in most single-chip digital image sensors in digital cameras, camcorders, and scanners to create a color image. The filter pattern is half green, one quarter red and one quarter blue, hence it is also called BGGR, RGBG, GRBG, or RGGB. It is named after its inventor, Bryce Bayer of Eastman Kodak. Bayer is also known for his recursively defined matrix used in ordered dithering. Alternatives to the Bayer filter include various modifications of colors and arrangement as well as completely different technologies, such as color co-site sampling, the Foveon X3 sensor, dichroic mirrors or a transparent diffractive-filter array.

Explanation: Bryce Bayer's 1976 patent (U.S. Patent No. 3,971,065) called the green photosensors "luminance-sensitive elements" and the red and blue ones "chrominance-sensitive ...
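
The half-green, quarter-red, quarter-blue proportions follow directly from repeating a 2×2 tile across the sensor. A minimal sketch, assuming the layout name is read row by row as the 2×2 tile (top-left, top-right, bottom-left, bottom-right); it only covers the four row-major tile orders RGGB, BGGR, GRBG and GBRG, and the function names are hypothetical.

```python
from collections import Counter

# Row-major 2x2 tiles: top-left, top-right, bottom-left, bottom-right.
BAYER_TILES = {"RGGB", "BGGR", "GRBG", "GBRG"}


def bayer_color(layout: str, row: int, col: int) -> str:
    """Filter color ('R', 'G' or 'B') over photosite (row, col)."""
    if layout not in BAYER_TILES:
        raise ValueError(f"unsupported layout {layout!r}")
    # The tile repeats every 2 rows and 2 columns.
    return layout[2 * (row % 2) + (col % 2)]


def color_fractions(layout: str, height: int, width: int) -> dict:
    """Fraction of photosites covered by each filter color."""
    counts = Counter(bayer_color(layout, r, c)
                     for r in range(height) for c in range(width))
    total = height * width
    return {color: counts[color] / total for color in "RGB"}


print(color_fractions("RGGB", 4, 4))  # {'R': 0.25, 'G': 0.5, 'B': 0.25}
```

Every 2×2 tile holds two green filters and one each of red and blue, so any sensor with even dimensions gets exactly these fractions regardless of which tile order is used.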

Color Filter Array

In digital imaging, a color filter array (CFA), or color filter mosaic (CFM), is a mosaic of tiny color filters placed over the pixel sensors of an image sensor to capture color information. The term is also used for e-paper devices, where it means a mosaic of tiny color filters placed over the grayscale display panel to reproduce color images.

Image sensor overview: Color filters are needed because typical photosensors detect light intensity with little or no wavelength specificity and therefore cannot separate color information. Since sensors are made of semiconductors, they obey solid-state physics. The color filters filter the light by wavelength range, so that the separately filtered intensities carry information about the color of the light. For example, the Bayer filter (shown by the image) gives information about the intensity of light in the red, green, and blue (RGB) wavelength regions. The raw image data captured by the image sensor is then converted to a fu ...
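
The effect of a CFA on the captured data can be sketched as a sampling step: each photosite keeps only the intensity in its own filter's band, so a full RGB scene becomes a single-channel raw image. This is an idealized model, assuming each filter passes exactly one of the R, G, B channels with no spectral overlap; the function and tile names are hypothetical, and any repeating tile (not only Bayer) can be passed in.

```python
# Channel indices: 0 = red, 1 = green, 2 = blue.
Pixel = tuple[float, float, float]  # (R, G, B) light reaching one photosite


def apply_cfa(rgb: list[list[Pixel]], tile: list[list[int]]) -> list[list[float]]:
    """Simulate a sensor behind a CFA whose filter pattern repeats `tile`.

    Each output value is the single channel that the filter over that
    photosite lets through; the other two channels are lost.
    """
    th, tw = len(tile), len(tile[0])
    return [
        [pixel[tile[r % th][c % tw]] for c, pixel in enumerate(row)]
        for r, row in enumerate(rgb)
    ]


BAYER_RGGB = [[0, 1], [1, 2]]  # R G / G B

scene = [[(10, 20, 30), (40, 50, 60)],
         [(70, 80, 90), (100, 110, 120)]]
raw = apply_cfa(scene, BAYER_RGGB)  # [[10, 50], [80, 120]]
```

The two-thirds of the color information discarded here is what a demosaicing step later has to estimate.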

High Dynamic Range

High dynamic range (HDR) is a dynamic range higher than usual; synonyms are wide dynamic range, extended dynamic range and expanded dynamic range. The term is often used in discussing the dynamic range of signals such as images, videos, audio or radio. It may apply to the means of recording, processing, and reproducing such signals, including analog and digitized signals. The term is also the name of some of the technologies or techniques used to achieve high dynamic range in images, videos, or audio.

Imaging: In this context, the term "high dynamic range" means there is a lot of variation in light levels within a scene or an image. The "dynamic range" refers to the range of luminosity between the brightest area and the darkest area of that scene or image. High-dynamic-range imaging (HDRI) refers to the set of imaging technologies and techniques that increase the dynamic range of images or videos. It covers the acquisition, creation, storage, distribution and display of images and v ...
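
Since dynamic range is the ratio between the brightest and darkest areas, it is usually quoted on a logarithmic scale. A minimal sketch, assuming both luminance values are positive and in the same unit; it reports photographic stops (log base 2, each stop doubling the light) and decibels using the 20·log10 convention common for image sensors. The function name is hypothetical.

```python
import math


def dynamic_range(brightest: float, darkest: float) -> dict:
    """Express the brightest/darkest luminance ratio as stops and decibels."""
    if darkest <= 0 or brightest < darkest:
        raise ValueError("need 0 < darkest <= brightest")
    ratio = brightest / darkest
    return {
        "ratio": ratio,
        "stops": math.log2(ratio),            # number of doublings of light
        "decibels": 20 * math.log10(ratio),   # 20*log10 convention (assumption)
    }


print(dynamic_range(1024.0, 1.0)["stops"])  # 10.0
```

A scene whose brightest area is 1024 times brighter than its darkest therefore spans 10 stops; an HDR pipeline aims to capture and reproduce considerably more stops than a conventional one.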

Constant Bitrate

Constant bitrate (CBR) is a term used in telecommunications, relating to the quality of service. Compare with variable bitrate. When referring to codecs, constant bit rate encoding means that the rate at which a codec's output data should be consumed is constant. CBR is useful for streaming multimedia content on limited-capacity channels, since it is the maximum bit rate that matters, not the average, so CBR can be used to take advantage of all of the capacity. CBR is not optimal for storing data: it may not allocate enough data for complex sections (resulting in degraded quality), and if it maximizes quality for complex sections, it wastes data on simple sections. The problem of not allocating enough data for complex sections could be solved by choosing a high bitrate to ensure that there will be enough bits for the entire encoding process, though the size of the file at the end would be proportionally larger. Most coding schemes such as Huffman coding or run-length en ...
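
The storage trade-off described above can be illustrated with a deliberately simplified model: at a constant bitrate every frame gets the same budget (bitrate divided by frame rate), simple frames are padded up to it and complex frames are cut down to it. This per-frame model and the names below are hypothetical; real CBR encoders typically smooth the rate through a buffer, so individual frame sizes can vary while the long-run rate stays constant.

```python
from dataclasses import dataclass


@dataclass
class CbrReport:
    budget_per_frame: float  # bits every frame receives
    total_bits: float        # stream size, independent of content
    wasted_bits: float       # padding spent on frames simpler than the budget
    shortfall_bits: float    # bits complex frames needed but did not get


def simulate_cbr(needed_bits: list[float], bitrate_bps: float, fps: float) -> CbrReport:
    """Compare the bits each frame 'needs' with a fixed per-frame CBR budget."""
    budget = bitrate_bps / fps
    wasted = sum(max(0.0, budget - n) for n in needed_bits)
    shortfall = sum(max(0.0, n - budget) for n in needed_bits)
    return CbrReport(budget, budget * len(needed_bits), wasted, shortfall)


report = simulate_cbr([1000, 3000, 2000], bitrate_bps=60_000, fps=30)
```

Here the budget is 2000 bits per frame: the simple first frame wastes 1000 bits while the complex second frame is 1000 bits short. Raising the bitrate removes the shortfall but increases the waste and the total size, matching the trade-off in the text.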

High Efficiency Video Coding

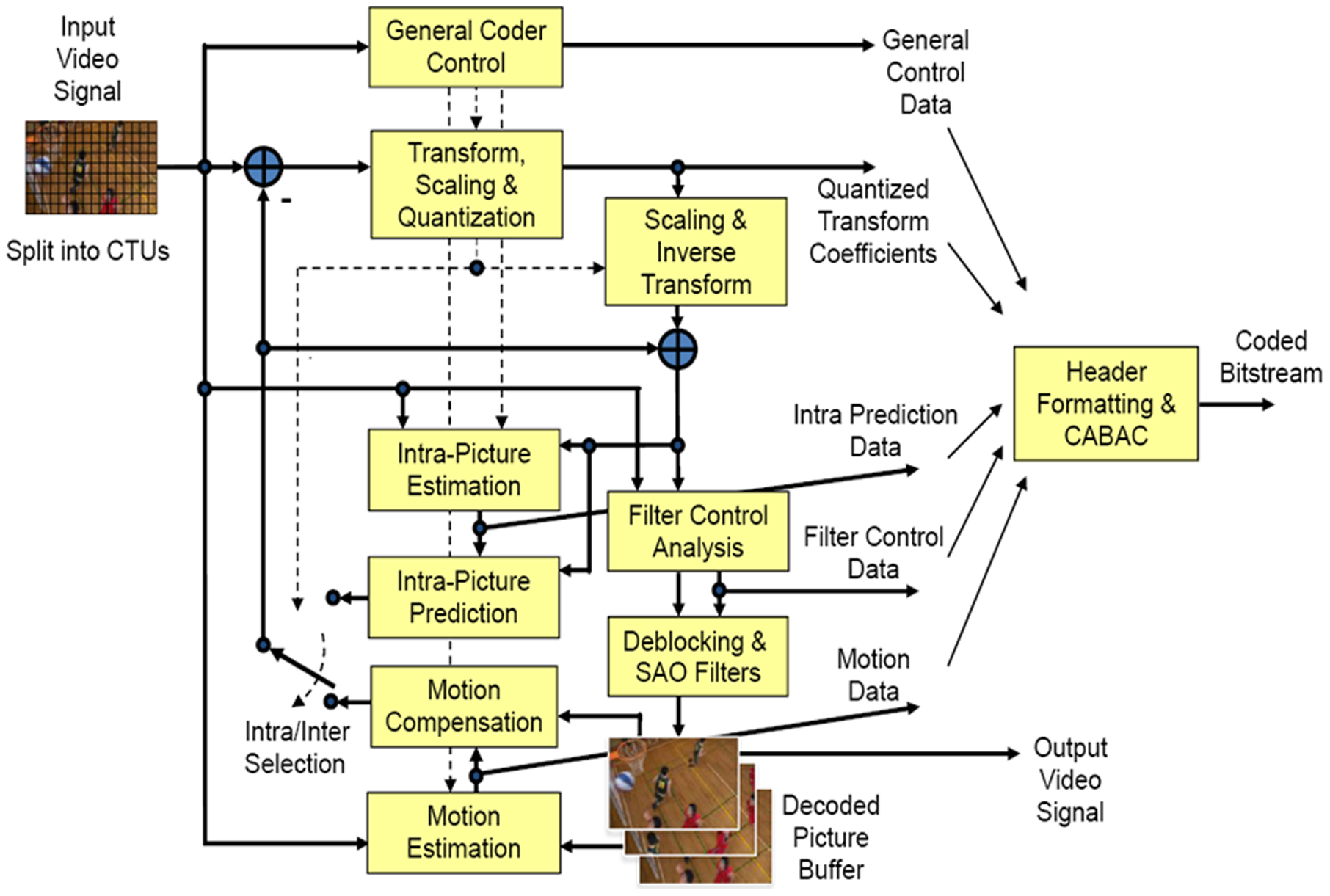

High Efficiency Video Coding (HEVC), also known as H.265 and MPEG-H Part 2, is a video compression standard designed as part of the MPEG-H project as a successor to the widely used Advanced Video Coding (AVC, H.264, or MPEG-4 Part 10). In comparison to AVC, HEVC offers from 25% to 50% better data compression at the same level of video quality, or substantially improved video quality at the same bit rate. It supports resolutions up to 8192×4320, including 8K UHD, and unlike the primarily 8-bit AVC, HEVC's higher-fidelity Main 10 profile has been incorporated into nearly all supporting hardware. While AVC uses the integer discrete cosine transform (DCT) with 4×4 and 8×8 block sizes, HEVC uses integer DCT and DST transforms with block sizes varying between 4×4 and 32×32. The High Efficiency Image Format (HEIF) is based on HEVC. HEVC was reported to be used by 43% of video developers, making it the second most widely used video coding format after AVC.

Concept: In most ways, HEVC is an extensi ...
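
To make "integer DCT" concrete, the sketch below applies the 4×4 integer matrix used by HEVC's core transform (an integer approximation of the DCT-II basis scaled by roughly 128) as a separable 2-D transform Y = C·X·Cᵀ. It is a simplified illustration: it omits the bit-depth-dependent right shifts and clipping that the standard applies between stages, and it does not show the 4×4 DST or the larger block sizes. The helper names are hypothetical.

```python
# HEVC 4x4 core transform matrix (integer approximation of the DCT-II).
HEVC_DCT4 = [
    [64, 64, 64, 64],
    [83, 36, -36, -83],
    [64, -64, -64, 64],
    [36, -83, 83, -36],
]


def matmul(a: list[list[int]], b: list[list[int]]) -> list[list[int]]:
    """Plain integer matrix product."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def transpose(m: list[list[int]]) -> list[list[int]]:
    return [list(row) for row in zip(*m)]


def forward_4x4(block: list[list[int]]) -> list[list[int]]:
    """Separable 2-D transform: columns then rows, no intermediate scaling."""
    return matmul(matmul(HEVC_DCT4, block), transpose(HEVC_DCT4))


flat = [[10] * 4 for _ in range(4)]
coeffs = forward_4x4(flat)
gram = matmul(HEVC_DCT4, transpose(HEVC_DCT4))
```

A flat block puts all of its energy into the single DC coefficient (coeffs[0][0] is 655360 and every other entry is 0), which is why smooth regions compress well. The `gram` product shows the design choice behind the integer matrix: its rows are exactly orthogonal, but their squared norms (16384 and 16370) are only approximately equal, trading a tiny loss of orthonormality for exact integer arithmetic.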

Advanced Video Coding

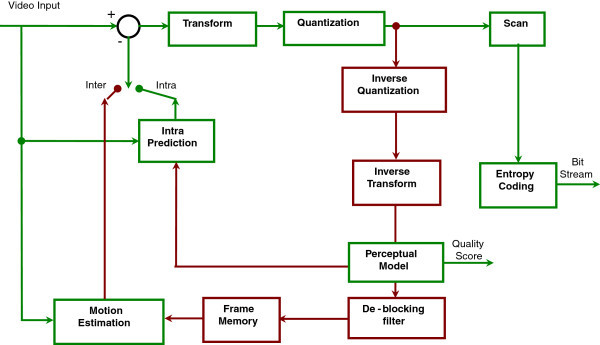

Advanced Video Coding (AVC), also referred to as H.264 or MPEG-4 Part 10, is a video compression standard based on block-oriented, motion-compensated coding. It is by far the most commonly used format for the recording, compression, and distribution of video content, reportedly used by 91% of video industry developers. It supports resolutions up to and including 8K UHD. The intent of the H.264/AVC project was to create a standard capable of providing good video quality at substantially lower bit rates than previous standards (i.e., half or less the bit rate of MPEG-2, H.263, or MPEG-4 Part 2), without increasing the complexity of the design so much that it would be impractical or excessively expensive to implement. This was achieved with features such as a reduced-complexity integer discrete cosine transform (integer DCT), variable block-size segmentation, and multi-picture inter-picture prediction. An additional goal was to provide enough flexibility to allow the standard to be applied ...
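
"Motion-compensated" means each block of the current picture is predicted from a displaced block of a reference picture, so only a motion vector and a residual need to be coded. The standard defines how a decoder applies motion vectors, not how an encoder finds them; a common textbook approach, sketched below, is integer-pixel full-search block matching that minimizes the sum of absolute differences (SAD). The function names and the single fixed block size are assumptions of this sketch; H.264 itself also allows variable block sizes, sub-pixel motion vectors and multiple reference pictures.

```python
def sad(cur, ref, cy, cx, ry, rx, n):
    """Sum of absolute differences between an n x n block of `cur` at (cy, cx)
    and an n x n block of `ref` at (ry, rx)."""
    return sum(abs(cur[cy + i][cx + j] - ref[ry + i][rx + j])
               for i in range(n) for j in range(n))


def full_search(cur, ref, cy, cx, n, search_range):
    """Try every integer displacement within +/-search_range and return
    (dy, dx, cost) for the candidate block with the lowest SAD."""
    h, w = len(ref), len(ref[0])
    best = None
    for dy in range(-search_range, search_range + 1):
        for dx in range(-search_range, search_range + 1):
            ry, rx = cy + dy, cx + dx
            if 0 <= ry and ry + n <= h and 0 <= rx and rx + n <= w:  # stay inside ref
                cost = sad(cur, ref, cy, cx, ry, rx, n)
                if best is None or cost < best[2]:
                    best = (dy, dx, cost)
    return best


# Reference picture with a distinct value at every position.
ref = [[y * 8 + x for x in range(8)] for y in range(8)]
# Current picture: the 2x2 block at (2, 2) is content that was at (3, 4) in ref.
cur = [[0] * 8 for _ in range(8)]
for i in range(2):
    for j in range(2):
        cur[2 + i][2 + j] = ref[3 + i][4 + j]

motion = full_search(cur, ref, cy=2, cx=2, n=2, search_range=2)
```

The search recovers the displacement (1, 2) with a SAD of 0, so the encoder would code that motion vector and an all-zero residual. Full search is exhaustive and costly; practical encoders usually use faster heuristic searches.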

JPEG XL

JPEG XL is a royalty-free raster-graphics file format that supports both lossy and lossless compression. It is designed to outperform existing raster formats and thus become their universal replacement.

Name: The name consists of "JPEG" (for the Joint Photographic Experts Group, the committee that designed the format), "X" (part of the name of several JPEG standards since 2000: JPEG XT, JPEG XR, JPEG XS), and "L" (for long-term). The L was included because the authors intend the format to replace the legacy JPEG and to last as long as it has.

Authors: The main authors of the specification are Jyrki Alakuijala, Jon Sneyers, and Luca Versari. Other collaborators are Sami Boukortt, Alex Deymo, Moritz Firsching, Thomas Fischbacher, Eugene Kliuchnikov, Robert Obryk, Alexander Rhatushnyak, Zoltan Szabadka, Lode Vandevenne, and Jan Wassenberg.

History: In August 2017, JTC1/SC29/WG1 (JPEG) published a call for proposals for JPEG XL, the next generation image enc ...