
Infra-exponential

A growth rate is said to be infra-exponential or subexponential if it is dominated by every exponential growth rate, however long its doubling time. A continuous function with an infra-exponential growth rate has a Fourier transform that is a Fourier hyperfunction. Examples of subexponential growth rates arise in the analysis of algorithms, where they give rise to subexponential time complexity, and in the growth rate of groups, where subexponential growth implies that a finitely generated group is amenable.
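One way to make "dominated by every exponential growth rate" concrete is to check that, for each doubling time T, the exponential 2^(n/T) eventually overtakes the subexponential function. The sketch below uses f(n) = e^√n as an illustrative choice; that function is not taken from the text. It searches for the first integer n at which 2^(n/T) exceeds f(n), comparing logarithms to avoid overflow. The helper name `crossover` is hypothetical.

```python
import math


def crossover(log_f, doubling_time, n_max=10**7):
    """Return the first integer n >= 1 at which 2**(n / doubling_time) exceeds f(n).

    log_f returns ln f(n); comparing logarithms avoids overflow.
    Returns None if no crossover is found up to n_max.
    """
    rate = math.log(2) / doubling_time  # ln(2**(n / T)) = rate * n
    for n in range(1, n_max + 1):
        if rate * n > log_f(n):
            return n
    return None


def log_f(n):
    """Illustrative subexponential function f(n) = exp(sqrt(n)), so ln f(n) = sqrt(n)."""
    return math.sqrt(n)


for T in (10, 100):
    print(T, crossover(log_f, T))
```

For this f, the crossover sits just past (T / ln 2)². Lengthening the doubling time pushes it later but never removes it, and that is what separates a subexponential rate from an exponential one.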

Growth Rate (Group Theory)

In the mathematical subject of geometric group theory, the growth rate of a group with respect to a symmetric generating set describes how fast a group grows. Every element in the group can be written as a product of generators, and the growth rate counts the number of elements that can be written as a product of length n.

Definition. Suppose G is a finitely generated group and T is a finite symmetric set of generators (symmetric means that if $x \in T$ then $x^{-1} \in T$). Any element $x \in G$ can be expressed as a word in the T-alphabet:

$$x = a_1 \cdot a_2 \cdots a_k \quad \text{with } a_i \in T.$$

Consider the subset of all elements of G that can be expressed by such a word of length at most n:

$$B_n(G,T) = \{\, x \in G : x = a_1 \cdot a_2 \cdots a_k,\ a_i \in T,\ k \le n \,\}.$$

This set is just the closed ball of radius n in the word metric d on G with respect to the generating set T:

$$B_n(G,T) = \{\, x \in G : d(x,e) \le n \,\}.$$

More geometrically, $B_n(G,T)$ is the set of vertices in the Cayley graph with respect to T that lie within distance n of the identity.
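Because $B_n(G,T)$ is a ball in the Cayley graph, its sizes can be enumerated by breadth-first search from the identity. The sketch below illustrates this with two example groups that are not in the text:

- Z² with T = {(±1, 0), (0, ±1)}.
- The free group on a and b with T = {a, a⁻¹, b, b⁻¹}. Elements are stored as freely reduced words, with a capital letter standing for an inverse.

It returns the list |B_0|, ..., |B_n|. All function names are hypothetical.

```python
from collections import deque


def ball_sizes(identity, generators, multiply, n):
    """Return [|B_0|, ..., |B_n|] via breadth-first search in the Cayley graph."""
    dist = {identity: 0}
    queue = deque([identity])
    while queue:
        x = queue.popleft()
        if dist[x] == n:
            continue  # do not expand past radius n
        for s in generators:
            y = multiply(x, s)  # right multiplication by a generator = one edge
            if y not in dist:
                dist[y] = dist[x] + 1  # BFS visits each element at its word length
                queue.append(y)
    counts = [0] * (n + 1)
    for d in dist.values():
        counts[d] += 1
    sizes, total = [], 0
    for c in counts:  # cumulative: |B_k| = elements at distance <= k
        total += c
        sizes.append(total)
    return sizes


# Z^2, written additively, with the four unit vectors as generators.
def add(x, s):
    return (x[0] + s[0], x[1] + s[1])


z2_gens = [(1, 0), (-1, 0), (0, 1), (0, -1)]

# Free group on a, b: capital letter = inverse; words are kept freely reduced.
INVERSE = {"a": "A", "A": "a", "b": "B", "B": "b"}


def free_mul(word, letter):
    if word and word[-1] == INVERSE[letter]:
        return word[:-1]  # cancel x * x^-1
    return word + letter


free_gens = ["a", "A", "b", "B"]

print(ball_sizes((0, 0), z2_gens, add, 5))      # 2n^2 + 2n + 1: polynomial growth
print(ball_sizes("", free_gens, free_mul, 5))   # 2 * 3^n - 1: exponential growth
```

Z² has polynomial, hence subexponential, growth. The free group on two generators grows exponentially.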

Time Complexity

In computer science, the time complexity is the computational complexity that describes the amount of computer time it takes to run an algorithm. Time complexity is commonly estimated by counting the number of elementary operations performed by the algorithm, supposing that each elementary operation takes a fixed amount of time to perform. Thus, the amount of time taken and the number of elementary operations performed by the algorithm are taken to be related by a constant factor. Since an algorithm's running time may vary among different inputs of the same size, one commonly considers the worst-case time complexity, which is the maximum amount of time required for inputs of a given size. Less common, and usually specified explicitly, is the average-case complexity, which is the average of the time taken on inputs of a given size (this makes sense because there are only a finite number of possible inputs of a given size). In both cases, the time complexity is generally expressed as a function of the size of the input.
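To illustrate worst-case versus average-case complexity, the sketch below counts comparisons made by a linear search, treating one element comparison as the elementary operation. Linear search is our choice of example, not the text's. The inputs of size n are taken to be "target at position 0, 1, ..., n − 1", each assumed equally likely. The worst case then needs n comparisons and the average is (n + 1)/2.

```python
def linear_search_comparisons(items, target):
    """Return the number of element comparisons a linear search makes."""
    comparisons = 0
    for item in items:
        comparisons += 1  # one elementary operation
        if item == target:
            break
    return comparisons


def worst_and_average(n):
    """Worst-case and average-case comparison counts over the n inputs
    'target at position k' (k = 0..n-1), assumed equally likely."""
    items = list(range(n))
    counts = [linear_search_comparisons(items, t) for t in items]
    return max(counts), sum(counts) / n


print(worst_and_average(10))  # (10, 5.5)
```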

Exponential Growth

Exponential growth is a process that increases quantity over time. It occurs when the instantaneous rate of change (that is, the derivative) of a quantity with respect to time is proportional to the quantity itself. Described as a function, a quantity undergoing exponential growth is an exponential function of time; that is, the variable representing time is the exponent (in contrast to other types of growth, such as quadratic growth). If the constant of proportionality is negative, then the quantity decreases over time and is said to be undergoing exponential decay instead. In the case of a discrete domain of definition with equal intervals, it is also called geometric growth or geometric decay, since the function values form a geometric progression. The formula for exponential growth of a variable $x$ at the growth rate $r$, as time goes on in discrete intervals (that is, at integer times 0, 1, 2, 3, ...), is $x_t = x_0(1+r)^t$, where $x_0$ is the value of $x$ at time 0.
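A minimal sketch of the discrete formula $x_t = x_0(1+r)^t$ follows. It checks the closed form against the recurrence $x_{t+1} = (1+r)\,x_t$ that generates the geometric progression; a negative r gives geometric decay. The function names are illustrative.

```python
def exponential_growth(x0, r, t):
    """Closed form x_t = x0 * (1 + r)**t at integer time t."""
    return x0 * (1 + r) ** t


def by_iteration(x0, r, t):
    """Same quantity obtained by applying x_{k+1} = (1 + r) * x_k t times."""
    x = x0
    for _ in range(t):
        x *= 1 + r
    return x


print(exponential_growth(100, 0.10, 3))   # about 133.1 (growth)
print(exponential_growth(100, -0.10, 3))  # about 72.9 (decay)
```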

Doubling Time

The doubling time is the time it takes for a population to double in size or value. It is applied to population growth, inflation, resource extraction, consumption of goods, compound interest, the volume of malignant tumours, and many other things that tend to grow over time. When the relative growth rate (not the absolute growth rate) is constant, the quantity undergoes exponential growth and has a constant doubling time or period, which can be calculated directly from the growth rate. This time can be calculated by dividing the natural logarithm of 2 by the growth constant in the exponent, or approximated by dividing 70 by the percentage growth rate (more roughly, but with a rounder number, by dividing 72; see the rule of 72 for details and derivations of this formula). The doubling time is a characteristic unit (a natural unit of scale) for the exponential growth equation, and its counterpart for exponential decay is the half-life. For example, given Canada's net population growth of 0.9% in the year 2006, dividing 70 by 0.9 gives an approximate doubling time of 78 years.
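The formulas above can be compared numerically:

- For continuous growth $x_0 e^{kt}$, the doubling time is $\ln 2 / k$.
- For growth compounded once per period at rate r, it is $\ln 2 / \ln(1 + r)$.
- The rules of 70 and 72 approximate both.

The sketch applies all four to the 0.9% figure from the Canada example. Which model best fits the underlying population data is an assumption left open here.

```python
import math


def doubling_time_continuous(k):
    """Doubling time of x0 * exp(k * t): solve exp(k * T) = 2."""
    return math.log(2) / k


def doubling_time_discrete(r):
    """Doubling time of x0 * (1 + r)**t: solve (1 + r)**T = 2."""
    return math.log(2) / math.log(1 + r)


def rule_of(numerator, percent):
    """Rule-of-70 or rule-of-72 approximation: numerator / percentage rate."""
    return numerator / percent


rate_percent = 0.9
r = rate_percent / 100
print(round(doubling_time_continuous(r), 1))  # 77.0
print(round(doubling_time_discrete(r), 1))    # 77.4
print(round(rule_of(70, rate_percent), 1))    # 77.8
print(round(rule_of(72, rate_percent), 1))    # 80.0
```

The four values agree closely because $\ln(1 + r) \approx r$ for small r and $100 \ln 2 \approx 69.3$.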

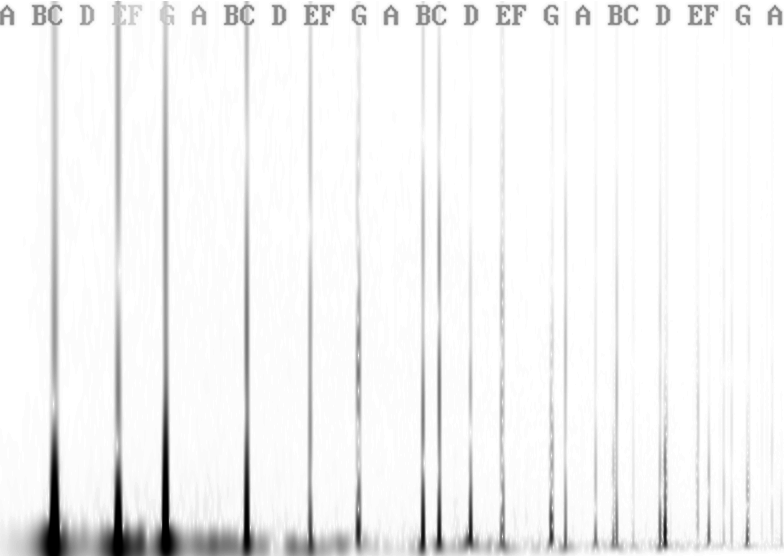

Fourier Transform

A Fourier transform (FT) is a mathematical transform that decomposes functions into frequency components, which are represented by the output of the transform as a function of frequency. Most commonly, functions of time or space are transformed, which will output a function depending on temporal frequency or spatial frequency respectively. That process is also called analysis. An example application would be decomposing the waveform of a musical chord into the intensities of its constituent pitches. The term "Fourier transform" refers to both the frequency domain representation and the mathematical operation that associates the frequency domain representation to a function of space or time. The Fourier transform of a function is a complex-valued function representing the complex sinusoids that comprise the original function. For each frequency, the magnitude (absolute value) of the complex value represents the amplitude of a constituent complex sinusoid with that frequency.
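As a small illustration of decomposing a signal into frequency components, the sketch below builds a sampled signal from two cosines. The cosines stand in, loosely, for two pitches of a chord. It then evaluates the discrete Fourier transform $X_k = \sum_n x_n e^{-2\pi i k n / N}$ directly. The signal, the bins 5 and 12, and the function name are illustrative assumptions. A real application would use an FFT library rather than this O(N²) loop.

```python
import cmath
import math


def dft(x):
    """Naive discrete Fourier transform: X_k = sum_n x_n * exp(-2*pi*i*k*n/N)."""
    N = len(x)
    return [
        sum(x[n] * cmath.exp(-2j * math.pi * k * n / N) for n in range(N))
        for k in range(N)
    ]


N = 64
# Two "pitches": frequency bins 5 and 12 with amplitudes 1.0 and 0.5.
signal = [
    1.0 * math.cos(2 * math.pi * 5 * n / N) + 0.5 * math.cos(2 * math.pi * 12 * n / N)
    for n in range(N)
]

spectrum = dft(signal)
# For a real signal, bins 0..N/2 carry all the independent information.
magnitudes = [abs(X) for X in spectrum[: N // 2 + 1]]
strongest = sorted(range(len(magnitudes)), key=lambda k: magnitudes[k], reverse=True)[:2]
print(sorted(strongest))  # [5, 12]
# With this unnormalized DFT, a cosine of amplitude A at integer bin k gives |X_k| = A * N / 2.
print(round(magnitudes[5], 6), round(magnitudes[12], 6))  # 32.0 16.0
```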

Hyperfunction

In mathematics, hyperfunctions are generalizations of functions, viewed as a 'jump' from one holomorphic function to another at a boundary, and can be thought of informally as distributions of infinite order. Hyperfunctions were introduced by Mikio Sato in 1958 in Japanese (1959 and 1960 in English), building upon earlier work by Laurent Schwartz, Grothendieck and others. Formulation. A hyperfunction on the real line can be conceived of as the 'difference' between one holomorphic function defined on the upper half-plane and another on the lower half-plane. That is, a hyperfunction is specified by a pair (f, g), where f is a holomorphic function on the upper half-plane and g is a holomorphic function on the lower half-plane. Informally, the hyperfunction is what the difference f − g would be at the real line itself. This difference is not affected by adding the same holomorphic function to both f and g, so if h is a holomorphic function on the whole complex plane, the hyperfunctions (f, g) and (f + h, g + h) are defined to be equivalent.
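A standard example, not taken from the text above, is the Dirac delta. It is represented by the pair $f(z) = g(z) = -1/(2\pi i z)$. Evaluating the "difference" slightly off the real axis gives $f(x + i\varepsilon) - g(x - i\varepsilon) = \varepsilon / (\pi(x^2 + \varepsilon^2))$, a bump that concentrates at 0 as $\varepsilon \to 0$.

The sketch checks this numerically. It integrates the boundary difference against the test function $\varphi(x) = e^{-x^2}$ and compares the result with $\varphi(0) = 1$. For this $\varphi$ the integral has the closed form $e^{\varepsilon^2}\operatorname{erfc}(\varepsilon)$, which the code uses as a check. The step size, the interval and the function names are illustrative choices.

```python
import math


def f(z):
    """Holomorphic on the upper half-plane: -1 / (2*pi*i*z)."""
    return -1 / (2j * math.pi * z)


def g(z):
    """Holomorphic on the lower half-plane: the same formula."""
    return -1 / (2j * math.pi * z)


def boundary_difference(x, eps):
    """f(x + i*eps) - g(x - i*eps), which equals eps / (pi * (x^2 + eps^2))."""
    return f(complex(x, eps)) - g(complex(x, -eps))


def pair_with_test_function(eps, phi, half_width=10.0, steps_per_eps=20):
    """Midpoint-rule integral of the boundary difference times phi over [-half_width, half_width]."""
    h = eps / steps_per_eps  # resolve the bump, whose width is about eps
    n = int(2 * half_width / h)
    total = 0.0
    for k in range(n):
        x = -half_width + (k + 0.5) * h
        total += boundary_difference(x, eps).real * phi(x) * h
    return total


def phi(x):
    return math.exp(-x * x)


for eps in (0.1, 0.01):
    approx = pair_with_test_function(eps, phi)
    exact = math.exp(eps**2) * math.erfc(eps)
    print(eps, approx, exact)  # both approach phi(0) = 1 as eps -> 0
```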

Analysis Of Algorithms

In computer science, the analysis of algorithms is the process of finding the computational complexity of algorithms: the amount of time, storage, or other resources needed to execute them. Usually, this involves determining a function that relates the size of an algorithm's input to the number of steps it takes (its time complexity) or the number of storage locations it uses (its space complexity). An algorithm is said to be efficient when this function's values are small, or grow slowly compared to a growth in the size of the input. Different inputs of the same size may cause the algorithm to behave differently, so best-, worst- and average-case descriptions might all be of practical interest. When not otherwise specified, the function describing the performance of an algorithm is usually an upper bound, determined from the worst-case inputs to the algorithm. The term "analysis of algorithms" was coined by Donald Knuth. Algorithm analysis is an important part of the broader field of computational complexity theory.
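To show how best-case and worst-case inputs of the same size can differ, the sketch counts the key comparisons made by insertion sort. Insertion sort is our example, not the text's. On already sorted input of size n it makes n − 1 comparisons. On reverse-sorted input it makes n(n − 1)/2. Its best case therefore grows linearly and its worst case quadratically.

```python
def insertion_sort_comparisons(items):
    """Sort a copy of items with insertion sort; return (sorted_list, key_comparisons)."""
    a = list(items)
    comparisons = 0
    for i in range(1, len(a)):
        key = a[i]
        j = i - 1
        while j >= 0:
            comparisons += 1        # compare key against a[j]
            if a[j] > key:
                a[j + 1] = a[j]     # shift the larger element one place right
                j -= 1
            else:
                break
        a[j + 1] = key
    return a, comparisons


n = 10
print(insertion_sort_comparisons(range(n))[1])         # best case: 9, i.e. n - 1
print(insertion_sort_comparisons(range(n, 0, -1))[1])  # worst case: 45, i.e. n(n - 1)/2
```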

Amenable Group

In mathematics, an amenable group is a locally compact topological group G carrying a kind of averaging operation on bounded functions that is invariant under translation by group elements. The original definition, in terms of a finitely additive measure (or mean) on subsets of G, was introduced by John von Neumann in 1929 under the German name "messbar" ("measurable" in English) in response to the Banach–Tarski paradox. In 1949 Mahlon M. Day introduced the English translation "amenable", apparently as a pun on "mean". The amenability property has a large number of equivalent formulations. In the field of analysis, the definition is in terms of linear functionals. An intuitive way to understand this version is that the support of the regular representation is the whole space of irreducible representations. In discrete group theory, where G has the discrete topology, a simpler definition is used. In this setting, a group is amenable if one can say what proportion of G any given subset takes up.
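For discrete groups, one equivalent formulation is Følner's condition, named here because the text does not state it. It says a group is amenable when there are finite sets F whose translates almost coincide with F: the ratio |gF Δ F| / |F| can be made arbitrarily small for each g. For groups of subexponential growth, suitable word-metric balls serve as such sets, which is why subexponential growth implies amenability.

The sketch checks the ratio for balls in Z², taking {(±1, 0), (0, ±1)} as the generating set (an assumption). For the ball of radius n, shifting by (1, 0) gives a symmetric difference of 4n + 2 elements against |B_n| = 2n² + 2n + 1. The ratio therefore tends to 0.

```python
def l1_ball(n):
    """Ball of radius n in Z^2 for the word metric with generators (±1, 0), (0, ±1)."""
    return {
        (x, y)
        for x in range(-n, n + 1)
        for y in range(-n, n + 1)
        if abs(x) + abs(y) <= n
    }


def folner_ratio(F, g):
    """|gF Δ F| / |F| for the translate gF = {g + f : f in F} (Z^2 written additively)."""
    shifted = {(g[0] + x, g[1] + y) for (x, y) in F}
    return len(shifted ^ F) / len(F)  # ^ is set symmetric difference


for n in (1, 10, 100):
    B = l1_ball(n)
    # expected ratio: (4n + 2) / (2n^2 + 2n + 1), which tends to 0
    print(n, len(B), folner_ratio(B, (1, 0)))
```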

Encyclopedia Of Mathematics

The Encyclopedia of Mathematics (also EOM and formerly Encyclopaedia of Mathematics) is a large reference work in mathematics. Overview. The 2002 version contains more than 8,000 entries covering most areas of mathematics at a graduate level, and the presentation is technical in nature. The encyclopedia is edited by Michiel Hazewinkel and was published by Kluwer Academic Publishers until 2003, when Kluwer became part of Springer. The CD-ROM contains animations and three-dimensional objects. The encyclopedia has been translated from the Soviet Matematicheskaya entsiklopediya (1977), originally edited by Ivan Matveevich Vinogradov, and extended with comments and three supplements adding several thousand articles. Until November 29, 2011, a static version of the encyclopedia could be browsed online free of charge. Its URL now redirects to the new wiki incarnation of the EOM. Encyclopedia of Mathematics wiki. A new dynamic version of the encyclopedia is now available as a public wiki online.