
Implementations Of Differentially Private Analyses

Since the advent of differential privacy, a number of systems supporting differentially private data analyses have been implemented and deployed. This article tracks real-world deployments, production software packages, and research prototypes.

The production software packages purport to be usable in production systems. They are split into two categories: those focused on answering statistical queries with differential privacy, and those focused on training machine learning models with differential privacy.

Research projects and prototypes:

* PINQ: An API implemented in C#. (2010)
* Airavat: A MapReduce-based system implemented in Java hardened with SELinux-like access control. (2010)
* Fuzz: Time-constant implementation in Caml Light of a domain-specifi ...

Differential Privacy

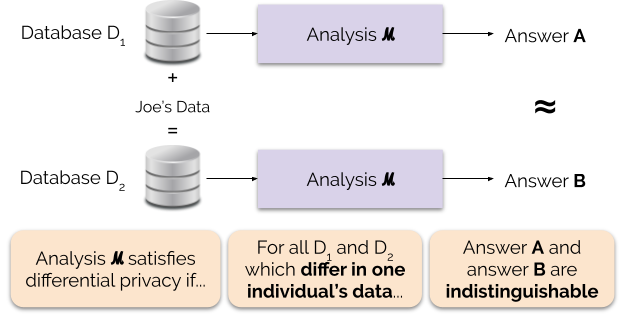

Differential privacy (DP) is a mathematically rigorous framework for releasing statistical information about datasets while protecting the privacy of individual data subjects. It enables a data holder to share aggregate patterns of the group while limiting information that is leaked about specific individuals. This is done by injecting carefully calibrated noise into statistical computations such that the utility of the statistic is preserved while provably limiting what can be inferred about any individual in the dataset. Another way to describe differential privacy is as a constraint on the algorithms used to publish aggregate information about a statistical database which limits the disclosure of private information of records in the database. For example, differentially private algorithms are used by some government agencies to publish demographic information or other statistical aggregates while ensuring confidentiality of survey responses, and by companies to collect informa ...
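As a minimal sketch of the "carefully calibrated noise" idea, the snippet below releases a count with Laplace noise whose scale is the query's sensitivity divided by a privacy parameter epsilon. The function names (`laplace_noise`, `private_count`) and the sample data are hypothetical, chosen only for illustration. The floating-point sampler shown here is not hardened against known precision attacks, so it should not be read as a production mechanism.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    rate = 1.0 / scale
    return rng.expovariate(rate) - rng.expovariate(rate)


def private_count(records, predicate, epsilon: float, rng: random.Random) -> float:
    """Return a noisy count of the records matching `predicate`.

    Adding or removing one record changes a count by at most 1, so the
    sensitivity is 1 and the Laplace scale is 1 / epsilon.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for record in records if predicate(record))
    return true_count + laplace_noise(1.0 / epsilon, rng)


if __name__ == "__main__":
    ages = [23, 35, 41, 67, 70, 18]  # illustrative data, not from the article
    rng = random.Random()
    print(private_count(ages, lambda age: age >= 40, epsilon=1.0, rng=rng))
```

Smaller values of epsilon give a larger noise scale and therefore stronger privacy at the cost of accuracy.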

United States Census Bureau

The United States Census Bureau, officially the Bureau of the Census, is a principal agency of the U.S. federal statistical system, responsible for producing data about the American people and economy. The U.S. Census Bureau is part of the U.S. Department of Commerce and its director is appointed by the president of the United States. Currently, Ron S. Jarmin is the acting director of the U.S. Census Bureau. The Census Bureau's primary mission is conducting the U.S. census every ten years, which allocates the seats of the U.S. House of Representatives to the states based on their population. The bureau's various censuses and surveys help allocate over $675 billion in federal funds every year and it assists states, local communities, and businesses in making informed decisions. T ...

Secure Multi-party Computation

Secure multi-party computation (also known as secure computation, multi-party computation (MPC) or privacy-preserving computation) is a subfield of cryptography with the goal of creating methods for parties to jointly compute a function over their inputs while keeping those inputs private. Unlike traditional cryptographic tasks, where cryptography assures security and integrity of communication or storage and the adversary is outside the system of participants (an eavesdropper on the sender and receiver), the cryptography in this model protects participants' privacy from each other. The foundation for secure multi-party computation started in the late 1970s with the work on mental poker, cryptographic work that simulates game playing/computational tasks over distances without requiring a trusted third party. Traditionally, cryptography was about concealing content, while this new type of computation and protocol is about concealing partial information about data while computing with ...
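One simple way to picture "jointly compute a function over their inputs while keeping those inputs private" is additive secret sharing for a sum. The sketch below simulates all parties in a single process, assumes they follow the protocol honestly, and uses a hypothetical modulus and hypothetical function names (`share`, `secure_sum`). It illustrates the idea only and is not a description of any particular MPC system.

```python
import secrets

PRIME = 2**61 - 1  # illustrative modulus; inputs and their sum must stay below it


def share(secret: int, n_parties: int) -> list[int]:
    """Split `secret` into n additive shares that sum to it modulo PRIME."""
    shares = [secrets.randbelow(PRIME) for _ in range(n_parties - 1)]
    shares.append((secret - sum(shares)) % PRIME)
    return shares


def secure_sum(inputs: list[int]) -> int:
    """Simulate parties summing their inputs without revealing them."""
    n = len(inputs)
    # dealt[i][j] is the share of party i's input that is sent to party j.
    dealt = [share(value, n) for value in inputs]
    # Each party j adds up the shares it received and publishes only that total.
    partial_sums = [sum(dealt[i][j] for i in range(n)) % PRIME for j in range(n)]
    # Combining the published totals reveals the sum and nothing else.
    return sum(partial_sums) % PRIME


if __name__ == "__main__":
    print(secure_sum([10, 20, 12]))
```

Any single share is uniformly random on its own, so a party that sees only its received shares learns nothing about another party's input beyond what the final sum implies.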

MapReduce

MapReduce is a programming model and an associated implementation for processing and generating big data sets with a parallel and distributed algorithm on a cluster. A MapReduce program is composed of a map procedure, which performs filtering and sorting (such as sorting students by first name into queues, one queue for each name), and a reduce method, which performs a summary operation (such as counting the number of students in each queue, yielding name frequencies). The "MapReduce System" (also called "infrastructure" or "framework") orchestrates the processing by marshalling the distributed servers, running the various tasks in parallel, managing all communications and data transfers between the various parts of the system, and providing for redundancy and fault tolerance. The model is a specialization of the split-apply-combine strategy for data analysis. It is inspired by the map and reduce functions commonly used in functional programming ...
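The student-queue example above can be sketched in a few lines of single-process Python. This is only an illustration of the programming model: the function names are hypothetical, and a real MapReduce system would run the map and reduce steps in parallel across a cluster and handle the grouping itself.

```python
from collections import defaultdict


def map_phase(students):
    """Emit one (first_name, 1) pair per student."""
    for full_name in students:
        first_name = full_name.split()[0]
        yield first_name, 1


def shuffle(pairs):
    """Group emitted values by key: one queue per first name."""
    queues = defaultdict(list)
    for key, value in pairs:
        queues[key].append(value)
    return queues


def reduce_phase(queues):
    """Summarize each queue by counting its entries."""
    return {name: sum(values) for name, values in queues.items()}


def name_frequencies(students):
    return reduce_phase(shuffle(map_phase(students)))


if __name__ == "__main__":
    print(name_frequencies(["Ada Lovelace", "Alan Turing", "Ada Yonath"]))
```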

PyTorch

PyTorch is a machine learning library based on the Torch library, used for applications such as computer vision and natural language processing, originally developed by Meta AI and now part of the Linux Foundation umbrella. It is one of the most popular deep learning frameworks, alongside others such as TensorFlow, offering free and open-source software released under the modified BSD license. Although the Python interface is more polished and the primary focus of development, PyTorch also has a C++ interface. A number of pieces of deep learning software are built on top of PyTorch, including Tesla Autopilot, Uber's Pyro, Hugging Face's Transformers, and Catalyst.

PyTorch provides two high-level features:

* Tensor computing (like NumPy) with strong acceleration via graphics processing units (GPU)
* Deep neural networks built on a tape-based automatic differentiation system

History: In 2001, Torch was written and released under a GPL license. It was a machine-learning li ...

Meta Platforms

Meta Platforms, Inc. is an American multinational technology company headquartered in Menlo Park, California. Meta owns and operates several prominent social media platforms and communication services, including Facebook, Instagram, Threads, Messenger and WhatsApp. The company also operates an advertising network for its own sites and third parties; advertising accounted for 97.8 percent of its total revenue. The company was originally established in 2004 as TheFacebook, Inc., and was renamed Facebook, Inc. in 2005. In 2021, it rebranded as Meta Platforms, Inc. to reflect a strategic shift toward developing the metaverse, an interconnected digital ecosystem spanning virtual and augmented reality technologies. Meta is considered one of the Big Five American technology companies, alongside Alphabet (Google), Amazon, Apple, and Microsoft. In 2023, it was rank ...

TensorFlow

TensorFlow is a software library for machine learning and artificial intelligence. It can be used across a range of tasks, but is used mainly for training and inference of neural networks. As Jeffrey Dean put it, "It is machine learning software being used for various kinds of perceptual and language understanding tasks." It is one of the most popular deep learning frameworks, alongside others such as PyTorch. It is free and open-source software released under the Apache License 2.0. It was developed by the Google Brain team for Google's internal use in research and production. The initial version was released under the Apache License 2.0 in 2015. Google released an updated version, TensorFlow 2.0, in September 2019. TensorFlow can be used in a wide variety of programming languages, including Python ...

Harvard University

Harvard University is a private Ivy League research university in Cambridge, Massachusetts, United States. Founded in 1636 and named for its first benefactor, the Puritan clergyman John Harvard, it is the oldest institution of higher learning in the United States. Its influence, wealth, and rankings have made it one of the most prestigious universities in the world. Harvard was founded and authorized by the Massachusetts General Court, the governing legislature of colonial-era Massachusetts Bay Colony. While never formally affiliated with any denomination, Harvard trained Congregational clergy until its curriculum and student body were gradually secularized in the 18th century. By the 19th century, Harvard emerged as the most prominent academic and cultural institution among the Boston B ...

Local Differential Privacy

Local differential privacy (LDP) is a model of differential privacy with the added requirement that if an adversary has access to the personal responses of an individual in the database, that adversary will still be unable to learn much of the user's personal data. This is contrasted with global differential privacy, a model of differential privacy that incorporates a central aggregator with access to the raw data. Local differential privacy (LDP) is an approach to mitigate the concern of data fusion and analysis techniques used to expose individuals to attacks and disclosures. LDP is a well-known privacy model for distributed architectures that aims to provide privacy guarantees for each user while collecting and analyzing data, protecting from privacy leaks for the client and server. LDP has been widely adopted to alleviate contemporary privacy concerns in the era of big data.

History: The randomized response survey technique proposed by Stanley L. Warner in 1965 is frequen ...
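A binary randomized-response scheme gives a feel for the local model: each user perturbs their own yes/no answer before it leaves their device, and the collector debiases the aggregate. The sketch below is a common textbook variant rather than Warner's exact survey design. The function names and the choice of truth probability e^ε / (1 + e^ε) are assumptions made for illustration.

```python
import math
import random


def truth_probability(epsilon: float) -> float:
    """Probability of reporting the true bit under this variant."""
    return math.exp(epsilon) / (1.0 + math.exp(epsilon))


def randomize(bit: bool, epsilon: float, rng: random.Random) -> bool:
    """Run on the user's side: keep the bit with probability p, else flip it."""
    return bit if rng.random() < truth_probability(epsilon) else not bit


def estimate_fraction(reports, epsilon: float) -> float:
    """Run on the collector's side: debias the observed fraction of True reports.

    If pi is the true fraction, the expected observed fraction is
    (1 - p) + pi * (2p - 1), which is inverted here.
    """
    p = truth_probability(epsilon)
    observed = sum(reports) / len(reports)
    return (observed - (1.0 - p)) / (2.0 * p - 1.0)


if __name__ == "__main__":
    rng = random.Random()
    truth = [i < 300 for i in range(1000)]  # illustrative population, 30% True
    reports = [randomize(bit, 1.0, rng) for bit in truth]
    print(estimate_fraction(reports, 1.0))
```

The collector never sees a raw answer, yet the estimate converges to the true fraction as the number of users grows.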