IBM Alignment Models

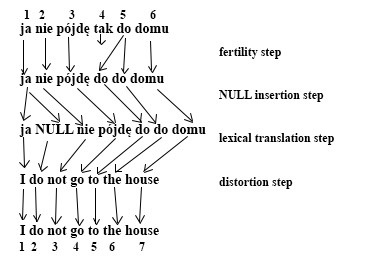

IBM alignment models are a sequence of increasingly complex models used in statistical machine translation to train a translation model and an alignment model, starting with lexical translation probabilities and moving to reordering and word duplication. They underpinned the majority of statistical machine translation systems for almost twenty years starting in the early 1990s, until neural machine translation began to dominate. These models offer a principled probabilistic formulation and (mostly) tractable inference. The original work on statistical machine translation at IBM proposed five models, and a Model 6 was proposed later. The sequence of the six models can be summarized as:

* Model 1: lexical translation
* Model 2: additional absolute alignment model
* Model 3: extra fertility model
* Model 4: added relative alignment model
* Model 5: fixed deficiency problem
* Model 6: Model 4 combined with an HMM alignment model in a log-linear way

Mathematical setup: The IBM alignm ...
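As an illustration of the lexical translation step (Model 1), the following is a minimal sketch of EM training for word translation probabilities t(f | e). The function name `train_ibm_model1`, the `NULL` token handling, and the toy corpus are assumptions made for this example, not part of the original description.

```python
from collections import defaultdict


def train_ibm_model1(pairs, iterations=10):
    """Estimate lexical translation probabilities t(f | e) with EM.

    pairs: list of (foreign_tokens, english_tokens) sentence pairs.
    A NULL token is prepended to every English sentence (assumption of
    this sketch) so that foreign words may align to "nothing".
    """
    pairs = [(f_sent, ["NULL"] + e_sent) for f_sent, e_sent in pairs]
    f_vocab = {w for f_sent, _ in pairs for w in f_sent}

    # Uniform initialisation over the foreign vocabulary.
    t = defaultdict(lambda: 1.0 / len(f_vocab))

    for _ in range(iterations):
        count = defaultdict(float)  # expected count of (f, e) links
        total = defaultdict(float)  # expected count of e being linked

        # E-step: distribute each foreign word over the English words
        # of its sentence in proportion to the current t(f | e).
        for f_sent, e_sent in pairs:
            for f in f_sent:
                z = sum(t[(f, e)] for e in e_sent)
                for e in e_sent:
                    p = t[(f, e)] / z
                    count[(f, e)] += p
                    total[e] += p

        # M-step: renormalise expected counts into probabilities.
        t = defaultdict(
            float, {(f, e): c / total[e] for (f, e), c in count.items()}
        )
    return t


# Toy corpus (hypothetical data for illustration only).
corpus = [
    ("das Haus".split(), "the house".split()),
    ("das Buch".split(), "the book".split()),
    ("ein Buch".split(), "a book".split()),
]
t = train_ibm_model1(corpus, iterations=10)
```

In this sketch only co-occurrence statistics drive the estimates; word positions are ignored, which is the simplification that Model 2 and later models relax.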

Statistical Machine Translation

Statistical machine translation (SMT) is a machine translation paradigm where translations are generated on the basis of statistical models whose parameters are derived from the analysis of bilingual text corpora. The statistical approach contrasts with the rule-based approaches to machine translation as well as with example-based machine translation, and has more recently been superseded by neural machine translation in many applications (see this article's final section). The first ideas of statistical machine translation were introduced by Warren Weaver in 1949, including the ideas of applying Claude Shannon's information theory. Statistical machine translation was re-introduced in the late 1980s and early 1990s by researchers at IBM's Thomas J. Watson Research Center and has contributed to the significant resurgence in interest in machine translation in recent years. Before the introduction of neural machine translation, it was by far the most widely studied machine translati ...

Neural Machine Translation

Neural machine translation (NMT) is an approach to machine translation that uses an artificial neural network to predict the likelihood of a sequence of words, typically modeling entire sentences in a single integrated model. Properties: NMT models require only a fraction of the memory needed by traditional statistical machine translation (SMT) models. Furthermore, unlike conventional translation systems, all parts of the neural translation model are trained jointly (end-to-end) to maximize the translation performance. History: Deep learning applications appeared first in speech recognition in the 1990s. The first scientific paper on using neural networks in machine translation appeared in 2014. In that year, Bahdanau et al. (Bahdanau D, Cho K, Bengio Y. Neural machine translation by jointly learning to align and translate. In: Proceedings of the 3rd International Conference on Learning Representations; 2015 May 7–9; San Diego, USA; 2015.) and Sutskever et al. (Sutskever I, Vinyals O, Le QV. Sequ ...

Hidden Markov Model

A hidden Markov model (HMM) is a statistical Markov model in which the system being modeled is assumed to be a Markov process, call it X, with unobservable ("hidden") states. As part of the definition, an HMM requires that there be an observable process Y whose outcomes are "influenced" by the outcomes of X in a known way. Since X cannot be observed directly, the goal is to learn about X by observing Y. An HMM has the additional requirement that the outcome of Y at time t = t_0 must be "influenced" exclusively by the outcome of X at t = t_0, and that the outcomes of X and Y at t < t_0 must be conditionally independent of Y at t = t_0 given X at t = t_0. Applications include handwriting recognition, gesture recognition, part-of-speech tagging, musical score following, partial discharges and bioinformatics. Definition: Let X_n and Y_n be discrete-time stochastic processes and n \geq 1. The pair (X_n, Y_n) is a hidden Markov model if

* X_n is a Markov process whose behavior is not directly observable ("hidden");
* \operatorname\bigl(Y_n \i ...
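To make the relationship between the hidden process X and the observed process Y concrete, here is a minimal sketch of the forward algorithm, which computes the probability of an observation sequence by summing over all hidden state paths. The function name `forward_likelihood` and the two-state parameters are hypothetical values chosen for illustration.

```python
def forward_likelihood(init, trans, emit, observations):
    """Return P(observations) under a discrete HMM (forward algorithm).

    init[s]      : probability that the hidden chain starts in state s
    trans[r][s]  : probability of moving from hidden state r to state s
    emit[s][o]   : probability that state s emits observation symbol o
    observations : non-empty list of observation symbol indices
    """
    n_states = len(init)

    # alpha[s] = P(observations so far, current hidden state = s)
    alpha = [init[s] * emit[s][observations[0]] for s in range(n_states)]

    for obs in observations[1:]:
        alpha = [
            emit[s][obs] * sum(alpha[r] * trans[r][s] for r in range(n_states))
            for s in range(n_states)
        ]

    # Marginalise over the final hidden state.
    return sum(alpha)


# Hypothetical two-state, two-symbol model.
init = [0.6, 0.4]
trans = [[0.7, 0.3], [0.4, 0.6]]
emit = [[0.9, 0.1], [0.2, 0.8]]

likelihood = forward_likelihood(init, trans, emit, [0, 1, 0])
```

The recursion relies on exactly the two assumptions stated above: the next hidden state depends only on the current one, and each observation depends only on the hidden state at the same time step.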

Dummy Pronoun

A dummy pronoun is a deictic pronoun that fulfills a syntactical requirement without providing a contextually explicit meaning of its referent. As such, it is an example of exophora. Dummy pronouns are used in many Germanic languages, including German and English. Pronoun-dropping languages such as Spanish, Portuguese, Chinese, and Turkish do not require dummy pronouns. A dummy pronoun is used when a particular verb argument (or preposition) is nonexistent (it could also be unknown, irrelevant, already understood, or otherwise "not to be spoken of directly") but when a reference to the argument (a pronoun) is nevertheless syntactically required. For example, in the phrase "It is obvious that the violence will continue", "it" is a dummy pronoun, not referring to any agent. Unlike a regular pronoun of English, it cannot be replaced by any noun phrase. The term "dummy pronoun" refers to the function of a word in a particular sentence, not a property of individual words. F ...

Topic Marker

A topic marker is a grammatical particle used to mark the topic of a sentence. It is found in Japanese, Korean, Quechua, Ryukyuan, Imonda and, to a limited extent, Classical Chinese. It often overlaps with the subject of a sentence, causing confusion for learners, as most other languages lack it. It differs from a subject in that it puts more emphasis on the item and can be used with words in other roles as well. Korean (은/는): The topic marker is one of many Korean particles. It comes in two varieties based on its phonetic environment: 은 (eun) is used after words that end in a consonant, and 는 (neun) is used after words that end in a vowel. In the following example, "school" is the subject, and it is marked as the topic. Japanese (は): The topic marker is one of many Japanese particles. It is written with the hiragana は, which is normally pronounced "ha", but when used as a particle is pronounced "wa". If what is to be the topic would have ha ...

Expectation–maximization Algorithm

In statistics, an expectation–maximization (EM) algorithm is an iterative method to find (local) maximum likelihood or maximum a posteriori (MAP) estimates of parameters in statistical models, where the model depends on unobserved latent variables. The EM iteration alternates between performing an expectation (E) step, which creates a function for the expectation of the log-likelihood evaluated using the current estimate for the parameters, and a maximization (M) step, which computes parameters maximizing the expected log-likelihood found on the E step. These parameter estimates are then used to determine the distribution of the latent variables in the next E step. History: The EM algorithm was explained and given its name in a classic 1977 paper by Arthur Dempster, Nan Laird, and Donald Rubin. They pointed out that the method had been "proposed many times in special circumstances" by earlier authors. One of the earliest is the gene-counting method for estimating allele ...
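The alternation between the E step and the M step can be sketched on a small latent-variable problem. The example below assumes a hypothetical setting with two biased coins: each trial reports the number of heads in a fixed number of flips, but not which coin was used, and both coins are assumed equally likely to be chosen. The function name `em_two_coins` and the data are illustrative only.

```python
def em_two_coins(heads, flips, theta_a=0.6, theta_b=0.5, iterations=20):
    """Estimate the head probabilities of two coins with EM.

    heads : list with the number of heads observed in each trial
    flips : number of flips per trial
    The identity of the coin used in each trial is the latent variable.
    """
    for _ in range(iterations):
        heads_a = tails_a = heads_b = tails_b = 0.0

        # E step: posterior probability that each trial used coin A,
        # then expected head/tail counts attributed to each coin.
        for h in heads:
            t = flips - h
            like_a = theta_a ** h * (1.0 - theta_a) ** t
            like_b = theta_b ** h * (1.0 - theta_b) ** t
            weight_a = like_a / (like_a + like_b)
            heads_a += weight_a * h
            tails_a += weight_a * t
            heads_b += (1.0 - weight_a) * h
            tails_b += (1.0 - weight_a) * t

        # M step: maximum likelihood estimates from the expected counts.
        theta_a = heads_a / (heads_a + tails_a)
        theta_b = heads_b / (heads_b + tails_b)

    return theta_a, theta_b


# Hypothetical data: heads observed in five trials of ten flips each.
theta_a, theta_b = em_two_coins([5, 9, 8, 4, 7], flips=10)
```

Because EM only finds a local optimum, the result depends on the starting values; swapping the initial `theta_a` and `theta_b` swaps the roles of the two coins.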

Lagrange Multiplier

In mathematical optimization, the method of Lagrange multipliers is a strategy for finding the local maxima and minima of a function subject to equality constraints (i.e., subject to the condition that one or more equations have to be satisfied exactly by the chosen values of the variables). It is named after the mathematician Joseph-Louis Lagrange. The basic idea is to convert a constrained problem into a form such that the derivative test of an unconstrained problem can still be applied. The relationship between the gradient of the function and gradients of the constraints rather naturally leads to a reformulation of the original problem, known as the Lagrangian function. The method can be summarized as follows: in order to find the maximum or minimum of a function f(x) subjected to the equality constraint g(x) = 0, form the Lagrangian function \mathcal{L}(x, \lambda) = f(x) + \lambda g(x) and find the stationary points of \mathcal{L} considered as a function of x and the Lagrange mu ...
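A short worked example may help here. The objective f(x, y) = xy and the constraint x + y = 1 are chosen purely for illustration; the derivation follows the recipe summarized above with g(x, y) = x + y - 1.

```latex
\begin{aligned}
\mathcal{L}(x, y, \lambda) &= xy + \lambda\,(x + y - 1) \\
\frac{\partial \mathcal{L}}{\partial x} &= y + \lambda = 0 \\
\frac{\partial \mathcal{L}}{\partial y} &= x + \lambda = 0 \\
\frac{\partial \mathcal{L}}{\partial \lambda} &= x + y - 1 = 0 \\
\Rightarrow\quad x = y &= \tfrac{1}{2}, \qquad \lambda = -\tfrac{1}{2}, \qquad f\!\left(\tfrac{1}{2}, \tfrac{1}{2}\right) = \tfrac{1}{4}
\end{aligned}
```

The first two conditions force x = y, and the third is the original constraint, so the only stationary point is (1/2, 1/2), which is the constrained maximum of xy on the line x + y = 1.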

Dirac Delta Function

In mathematics, the Dirac delta distribution (δ distribution), also known as the unit impulse, is a generalized function or distribution over the real numbers, whose value is zero everywhere except at zero, and whose integral over the entire real line is equal to one. The current understanding of the unit impulse is as a linear functional that maps every continuous function (e.g., f(x)) to its value at zero of its domain (f(0)), or as the weak limit of a sequence of bump functions (e.g., \delta(x) = \lim_{b \to 0} \frac{1}{|b|\sqrt{\pi}} e^{-(x/b)^2}), which are zero over most of the real line, with a tall spike at the origin. Bump functions are thus sometimes called "approximate" or "nascent" delta distributions. The delta function was introduced by physicist Paul Dirac as a tool for the normalization of state vectors. It also has uses in probability theory and signal processing. Its validity was disputed until Laurent Schwartz developed the theory of distributions where it is defined as a linear form acting on ...

Machine Translation

Machine translation, sometimes referred to by the abbreviation MT (not to be confused with computer-aided translation, machine-aided human translation or interactive translation), is a sub-field of computational linguistics that investigates the use of software to translate text or speech from one language to another. On a basic level, MT performs mechanical substitution of words in one language for words in another, but that alone rarely produces a good translation because recognition of whole phrases and their closest counterparts in the target language is needed. Not all words in one language have equivalent words in another language, and many words have more than one meaning. Solving this problem with corpus statistical and neural techniques is a rapidly growing field that is leading to better translations, handling differences in linguistic typology, translation of idioms, and the isolation of anomalies. Current machine translation software often allows for customizat ...