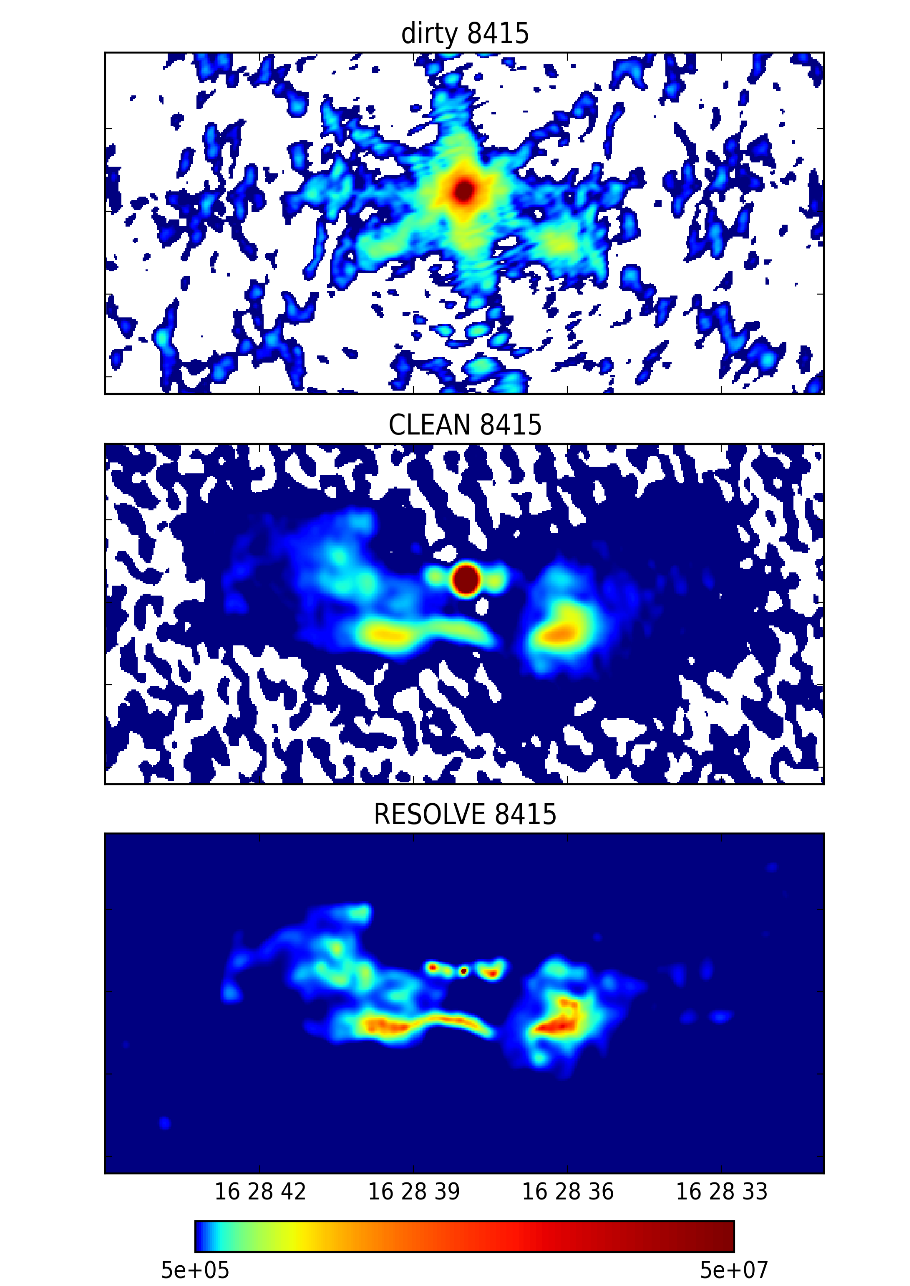

Information Field Theory

Information field theory (IFT) is a Bayesian statistical field theory relating to signal reconstruction, cosmography, and other related areas. IFT summarizes the information available on a physical field using Bayesian probabilities. It uses computational techniques developed for quantum field theory and statistical field theory to handle the infinite number of degrees of freedom of a field and to derive algorithms for the calculation of field expectation values. For example, the posterior expectation value of a field generated by a known Gaussian process and measured by a linear device with known Gaussian noise statistics is given by a generalized Wiener filter applied to the measured data. IFT extends such known filter formulas to situations with nonlinear physics, nonlinear devices, non-Gaussian field or noise statistics, dependence of the noise statistics on the field values, and partly unknown parameters of measurement. For this it uses Feynman diagrams, renormalisation f ...
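The generalized Wiener filter mentioned above can be sketched numerically on a discretized field. This is a minimal illustration, not a reference implementation: it assumes the linear measurement model d = R s + n, and the symbols d (data), R (device response), S (signal covariance), N (noise covariance), j and D are notation introduced here for the example, not taken from the summary.

```python
import numpy as np

def wiener_filter_mean(d, R, S, N):
    """Posterior mean and covariance of s for d = R s + n, with s ~ G(0, S), n ~ G(0, N).

    Returns m = D j, where j = R^T N^-1 d and D = (S^-1 + R^T N^-1 R)^-1.
    """
    N_inv = np.linalg.inv(N)
    j = R.T @ N_inv @ d                         # data projected back onto the signal space
    D_inv = np.linalg.inv(S) + R.T @ N_inv @ R  # inverse posterior covariance
    D = np.linalg.inv(D_inv)                    # posterior covariance
    return D @ j, D

# Toy setup (all numbers are illustrative assumptions): three pixels,
# a device that measures each pixel directly, prior variance 4, noise variance 1.
S = 4.0 * np.eye(3)
N = 1.0 * np.eye(3)
R = np.eye(3)
d = np.array([1.0, -2.0, 0.5])

m, D = wiener_filter_mean(d, R, S, N)
```

In this toy setup every data value is shrunk towards zero by the factor S / (S + N) = 0.8, which is the familiar scalar Wiener gain. Explicit matrix inverses are used only for readability; for large fields one would solve the linear system instead.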

Bayesian Inference

Bayesian inference is a method of statistical inference in which Bayes' theorem is used to update the probability for a hypothesis as more evidence or information becomes available. Bayesian inference is an important technique in statistics, and especially in mathematical statistics. Bayesian updating is particularly important in the dynamic analysis of a sequence of data. Bayesian inference has found application in a wide range of activities, including science, engineering, philosophy, medicine, sport, and law. In the philosophy of decision theory, Bayesian inference is closely related to subjective probability, often called "Bayesian probability". Bayesian inference derives the posterior probability as a consequence of two antecedents: a prior probability and a "likelihood function" derived from a statistical model for the observed data. Bayesian inference computes ...
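Updating on a sequence of data can be sketched with a conjugate model. The example below is an illustration under stated assumptions: a coin with unknown heads probability, a Beta(alpha, beta) prior, and a made-up observation list. The function name `update_beta` is hypothetical.

```python
def update_beta(alpha, beta, observation):
    """One Bayesian update of a Beta(alpha, beta) prior after a single coin flip.

    observation is 1 for heads and 0 for tails; the posterior is again a Beta
    distribution, so the update only increments one of the two counts.
    """
    if observation:
        return alpha + 1, beta
    return alpha, beta + 1

# Start from a uniform prior, Beta(1, 1), and process the data one flip at a time.
alpha, beta = 1, 1
data = [1, 1, 0, 1]  # illustrative observations, not real data
for x in data:
    alpha, beta = update_beta(alpha, beta, x)

posterior_mean = alpha / (alpha + beta)  # mean of Beta(alpha, beta)
```

Each posterior serves as the prior for the next observation, which is what makes the approach convenient for sequential data.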

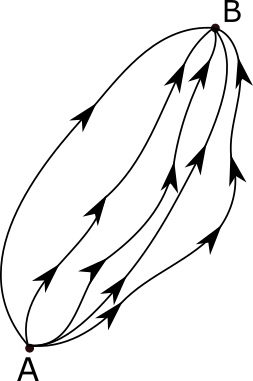

Feynman Diagrams

In theoretical physics, a Feynman diagram is a pictorial representation of the mathematical expressions describing the behavior and interaction of subatomic particles. The scheme is named after American physicist Richard Feynman, who introduced the diagrams in 1948. The interaction of subatomic particles can be complex and difficult to understand; Feynman diagrams give a simple visualization of what would otherwise be an arcane and abstract formula. According to David Kaiser, "Since the middle of the 20th century, theoretical physicists have increasingly turned to this tool to help them undertake critical calculations. Feynman diagrams have revolutionized nearly every aspect of theoretical physics." While the diagrams are applied primarily to quantum field theory, they can also be used in other fields, such as solid-state theory. Frank Wilczek wrote that the calculations that won him the 2004 Nobel Prize in Physics "would have been literally unthinkable without Feynman diagr ...

Wiener Filter

In signal processing, the Wiener filter is a filter used to produce an estimate of a desired or target random process by linear time-invariant (LTI) filtering of an observed noisy process, assuming known stationary signal and noise spectra, and additive noise. The Wiener filter minimizes the mean square error between the estimated random process and the desired process. The goal of the Wiener filter is to compute a statistical estimate of an unknown signal using a related signal as an input and filtering that known signal to produce the estimate as an output. For example, the known signal might consist of an unknown signal of interest that has been corrupted by additive noise. The Wiener filter can be used to filter out the noise from the corrupted signal to provide an estimate of the underlying signal of interest. The Wiener filter is based on a statistical approach, and a more statistical account of the theory is given in the minimum mean square error (MMSE) es ...

Continuum Limit

In mathematical physics and mathematics, the continuum limit or scaling limit of a lattice model refers to its behaviour in the limit as the lattice spacing goes to zero. It is often useful to use lattice models to approximate real-world processes, such as Brownian motion. Indeed, according to Donsker's theorem, the discrete random walk would, in the scaling limit, approach the true Brownian motion. The term "continuum limit" mostly finds use in the physical sciences, often in reference to models of aspects of quantum physics, while the term "scaling limit" is more common in mathematical use. A lattice model that approximates a continuum quantum field theory in the limit as the lattice spacing goes to zero may correspond to finding a second order phase transition of the model. This is the scaling limit of the model. See also: Universality classes. In statistical mechanics, a universality class is a collection of mathematica ...
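The random-walk example can be checked with a small simulation. This is a sketch under assumptions chosen for illustration: steps of ±1 with equal probability, a time step of 1/n and a space step of 1/sqrt(n), and a fixed seed. The function name `rescaled_endpoints` is hypothetical. Standard Brownian motion at time 1 has mean 0 and variance 1, so the rescaled endpoints should show roughly those statistics.

```python
import numpy as np

def rescaled_endpoints(n_steps, n_walks, seed=0):
    """Endpoints of simple random walks, rescaled by sqrt(n_steps).

    Each walk takes n_steps steps of +1 or -1 with equal probability.
    Dividing the final position by sqrt(n_steps) is the diffusive rescaling
    under which the walk approaches Brownian motion at time 1.
    """
    rng = np.random.default_rng(seed)
    steps = rng.integers(0, 2, size=(n_walks, n_steps)) * 2 - 1  # values in {-1, +1}
    return steps.sum(axis=1) / np.sqrt(n_steps)

endpoints = rescaled_endpoints(n_steps=400, n_walks=2000)
sample_mean = endpoints.mean()
sample_variance = endpoints.var()
```

Increasing `n_steps` refines the lattice, while increasing `n_walks` reduces the sampling error of the estimated mean and variance.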

Gaussian Probability Distribution

In statistics, a normal distribution or Gaussian distribution is a type of continuous probability distribution for a real-valued random variable. The general form of its probability density function is $f(x) = \frac{1}{\sigma\sqrt{2\pi}} e^{-\frac{1}{2}\left(\frac{x-\mu}{\sigma}\right)^2}$. The parameter $\mu$ is the mean or expectation of the distribution (and also its median and mode), while the parameter $\sigma$ is its standard deviation. The variance of the distribution is $\sigma^2$. A random variable with a Gaussian distribution is said to be normally distributed, and is called a normal deviate. Normal distributions are important in statistics and are often used in the natural and social sciences to represent real-valued random variables whose distributions are not known. Their importance is partly due to the central limit theorem. It states that, under some conditions, the average of many samples (observations) of a random variable with finite mean and variance is itself a random variable whose distribution converges to a normal distribu ...
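The density of a normal distribution with mean mu and standard deviation sigma can be evaluated directly. A minimal sketch, with `normal_pdf` as a hypothetical helper name:

```python
import math

def normal_pdf(x, mu=0.0, sigma=1.0):
    """Density of the normal distribution with mean mu and standard deviation sigma.

    Implements f(x) = exp(-0.5 * ((x - mu) / sigma)**2) / (sigma * sqrt(2 * pi)).
    """
    if sigma <= 0:
        raise ValueError("sigma must be positive")
    z = (x - mu) / sigma  # standardized distance from the mean
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))

peak = normal_pdf(0.0)                            # density at the mean of the standard normal
shifted = normal_pdf(3.0, mu=3.0, sigma=2.0)      # density at the mean of a wider distribution
```

Doubling sigma halves the peak height, since the total area under the curve must stay equal to one.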

Path Integral Formulation

The path integral formulation is a description in quantum mechanics that generalizes the action principle of classical mechanics. It replaces the classical notion of a single, unique classical trajectory for a system with a sum, or functional integral, over an infinity of quantum-mechanically possible trajectories to compute a quantum amplitude. This formulation has proven crucial to the subsequent development of theoretical physics, because manifest Lorentz covariance (time and space components of quantities enter equations in the same way) is easier to achieve than in the operator formalism of canonical quantization. Unlike previous methods, the path integral allows one to easily change coordinates between very different canonical descriptions of the same quantum system. Another advantage is that it is in practice easier to guess the correct form of the Lagrangian of a theory, which naturally enters the path integrals (for interactions of a certain type, these are coord ...

Lebesgue Measure

In measure theory, a branch of mathematics, the Lebesgue measure, named after French mathematician Henri Lebesgue, is the standard way of assigning a measure to subsets of n-dimensional Euclidean space. For n = 1, 2, or 3, it coincides with the standard measure of length, area, or volume. In general, it is also called n-dimensional volume, n-volume, or simply volume. It is used throughout real analysis, in particular to define Lebesgue integration. Sets that can be assigned a Lebesgue measure are called Lebesgue-measurable; the measure of the Lebesgue-measurable set A is here denoted by λ(A). Henri Lebesgue described this measure in the year 1901, followed the next year by his description of the Lebesgue integral. Both were published as part of his dissertation in 1902. For any interval $I = [a, b]$, or $I = (a, b)$, in the set $\mathbb{R}$ of real numbers, let $\ell(I) = b - a$ denote its length. For any subset $E \subseteq \mathbb{R}$, the Lebesg ...

Quantum Field Theory

In theoretical physics, quantum field theory (QFT) is a theoretical framework that combines classical field theory, special relativity, and quantum mechanics. QFT is used in particle physics to construct physical models of subatomic particles and in condensed matter physics to construct models of quasiparticles. QFT treats particles as excited states (also called quanta) of their underlying quantum fields, which are more fundamental than the particles. The equation of motion of the particle is determined by minimization of the action computed from the Lagrangian, a functional of the fields associated with the particle. Interactions between particles are described by interaction terms in the Lagrangian involving their corresponding quantum fields. Each interaction can be visually represented by Feynman diagrams according to perturbation theory in quantum mechanics. Quantum field theory emerged from the work of generations of theoretical physicists spanning much of the 20th century. Its devel ...

Statistical Field Theory

Statistics (from German: Statistik, "description of a state, a country") is the discipline that concerns the collection, organization, analysis, interpretation, and presentation of data. In applying statistics to a scientific, industrial, or social problem, it is conventional to begin with a statistical population or a statistical model to be studied. Populations can be diverse groups of people or objects such as "all people living in a country" or "every atom composing a crystal". Statistics deals with every aspect of data, including the planning of data collection in terms of the design of surveys and experiments (Dodge, Y. (2006), The Oxford Dictionary of Statistical Terms, Oxford University Press). When census data cannot be collected, statisticians collect data by developing specific experiment designs and survey samples. Representative sampling assures that inferences and conclusions can reasonably extend from the sample to the population as a whole. An experim ...

Partition Function (Statistical Mechanics)

In physics, a partition function describes the statistical properties of a system in thermodynamic equilibrium. Partition functions are functions of the thermodynamic state variables, such as the temperature and volume. Most of the aggregate thermodynamic variables of the system, such as the total energy, free energy, entropy, and pressure, can be expressed in terms of the partition function or its derivatives. The partition function is dimensionless. Each partition function is constructed to represent a particular statistical ensemble (which, in turn, corresponds to a particular free energy). The most common statistical ensembles have named partition functions. The canonical partition function applies to a canonical ensemble, in which the system is allowed to exchange heat with the environment at fixed temperature, volume, and number of particles. The grand canonical partition function applies to a grand canonical ensemble, in which the system can exchange both heat a ...
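The way thermodynamic variables follow from the partition function can be sketched for a system with a few discrete energy levels. The example assumes the canonical form Z = sum_i exp(-beta * E_i) with beta = 1 / (k_B T); the two-level system, the dimensionless units and the function names are illustrative choices, not taken from the summary.

```python
import math

def canonical_partition(energies, beta):
    """Canonical partition function Z = sum_i exp(-beta * E_i) for discrete levels."""
    return sum(math.exp(-beta * e) for e in energies)

def mean_energy(energies, beta):
    """Average energy <E> = sum_i E_i * exp(-beta * E_i) / Z, equal to -d(ln Z)/d(beta)."""
    z = canonical_partition(energies, beta)
    return sum(e * math.exp(-beta * e) for e in energies) / z

# Two-level system with energies 0 and 1 (units chosen so that k_B = 1).
levels = [0.0, 1.0]
z_hot = canonical_partition(levels, beta=0.0)  # infinite temperature: both levels equally likely
e_hot = mean_energy(levels, beta=0.0)
e_cold = mean_energy(levels, beta=10.0)        # low temperature: mostly the ground state
```

At beta = 0 the partition function simply counts the states, and the mean energy is the plain average of the levels; as beta grows, the mean energy approaches the ground-state energy.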

Hamiltonian Field Theory

In theoretical physics, Hamiltonian field theory is the field-theoretic analogue to classical Hamiltonian mechanics. It is a formalism in classical field theory alongside Lagrangian field theory. It also has applications in quantum field theory. The Hamiltonian for a system of discrete particles is a function of their generalized coordinates and conjugate momenta, and possibly, time. For continua and fields, Hamiltonian mechanics is unsuitable but can be extended by considering a large number of point masses, and taking the continuous limit, that is, infinitely many particles forming a continuum or field. Since each point mass has one or more degrees of freedom, the field formulation has infinitely many degrees of freedom. The Hamiltonian density is the continuous analogue for fields; it is a function of the fields, the conjugate "momentum" fields, and possibly the space and time coordinates themselves. For one scalar field, the Hamiltonian density ...

Bayes' Theorem

In probability theory and statistics, Bayes' theorem (alternatively Bayes' law or Bayes' rule), named after Thomas Bayes, describes the probability of an event, based on prior knowledge of conditions that might be related to the event. For example, if the risk of developing health problems is known to increase with age, Bayes' theorem allows the risk to an individual of a known age to be assessed more accurately (by conditioning it on their age) than simply assuming that the individual is typical of the population as a whole. One of the many applications of Bayes' theorem is Bayesian inference, a particular approach to statistical inference. When applied, the probabilities involved in the theorem may have different probability interpretations. With Bayesian probability interpretation, the theorem expresses how a degree of belief, expressed as a probability, should rationally change to account for the availability of related evidence. Bayesian inference is fundamental to Baye ...
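The theorem itself, P(A | B) = P(B | A) P(A) / P(B), can be sketched for a binary hypothesis. The numbers below are invented for illustration (a condition with 1% prevalence, an indicator that appears in 90% of affected and 5% of unaffected individuals), and `bayes_posterior` is a hypothetical helper name.

```python
def bayes_posterior(prior, likelihood, likelihood_given_not):
    """Posterior P(A | B) for a binary hypothesis A after observing evidence B.

    prior                 = P(A)
    likelihood            = P(B | A)
    likelihood_given_not  = P(B | not A)
    The evidence P(B) follows from the law of total probability.
    """
    evidence = likelihood * prior + likelihood_given_not * (1.0 - prior)
    return likelihood * prior / evidence

# Illustrative numbers only, not real medical data.
posterior = bayes_posterior(prior=0.01, likelihood=0.9, likelihood_given_not=0.05)
```

With these assumed inputs the posterior is 2/13, roughly 15%: observing the indicator raises the probability well above the 1% prior, yet the condition remains unlikely because it is rare to begin with.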