
In-memory Processing

In computer science, in-memory processing is an emerging technology for processing data stored in an in-memory database. In-memory processing is one method of addressing the performance and power bottlenecks caused by moving data between the processor and main memory. Older systems have been based on disk storage and relational databases queried with SQL, but these are increasingly regarded as inadequate to meet business intelligence (BI) needs. Because stored data is accessed much more quickly when it is placed in random-access memory (RAM) or flash memory, in-memory processing allows data to be analysed in real time, enabling faster reporting and decision-making in business.

Disk-based business intelligence: data structures. With disk-based technology, data is loaded onto the computer's hard disk in the form of multiple tables and multi-dimensional structures against which queries are run. Disk-based technologies are relational database manageme ...
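As a small illustration of querying data held in RAM rather than in a database file on disk, the sketch below uses Python's standard `sqlite3` module with the special database name `":memory:"`, which creates a database that exists only for the lifetime of the connection. SQLite is not a BI platform; the function name `regional_totals`, the `sales` table and the sample rows are hypothetical.

```python
import sqlite3

def regional_totals(rows):
    """Load rows into an in-memory SQLite database and sum amounts per region."""
    # ":memory:" creates a database held in RAM; it vanishes when the connection closes.
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute("CREATE TABLE sales (region TEXT, product TEXT, amount REAL)")
        conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", rows)
        # The aggregation runs against the in-memory table, not a database file.
        cur = conn.execute(
            "SELECT region, SUM(amount) FROM sales GROUP BY region ORDER BY region"
        )
        return cur.fetchall()
    finally:
        conn.close()

# Hypothetical sample data: (region, product, amount).
sample = [
    ("north", "widget", 120.0),
    ("south", "widget", 80.0),
    ("north", "gadget", 30.0),
]
print(regional_totals(sample))  # [('north', 150.0), ('south', 80.0)]
```

Production in-memory analytics engines add columnar layouts, compression and parallel execution; this only shows the basic idea of keeping the queried data in memory.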

Computer Science

Computer science is the study of computation, automation, and information. Computer science spans disciplines ranging from the theoretical (such as algorithms, theory of computation, information theory, and automation) to the practical (including the design and implementation of hardware and software). Computer science is generally considered an area of academic research, distinct from computer programming. Algorithms and data structures are central to computer science. The theory of computation concerns abstract models of computation and general classes of problems that can be solved using them. The fields of cryptography and computer security involve studying the means for secure communication and for preventing security vulnerabilities. Computer graphics and computational geometry address the generation of images. Programming language theory considers different ways to describe computational processes, and database theory concerns the management of repositories ...

OLAP Cube

An OLAP cube is a multi-dimensional array of data. Online analytical processing (OLAP) is a computer-based technique of analyzing data to look for insights. The term "cube" here refers to a multi-dimensional dataset, which is also sometimes called a hypercube if the number of dimensions is greater than three.

Terminology. A cube can be considered a multi-dimensional generalization of a two- or three-dimensional spreadsheet. For example, a company might wish to summarize financial data by product, by time period, and by city to compare actual and budget expenses. Product, time, city, and scenario (actual and budget) are the data's dimensions. "Cube" is shorthand for "multidimensional dataset", given that data can have an arbitrary number of dimensions. The term hypercube is sometimes used, especially for data with more than three dimensions. A cube is not a "cube" in the strict mathematical sense, as the sides are not necessarily all equal. But this term is used w ...
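The product/time/city/scenario example can be sketched as a sparse cube: a dictionary mapping one coordinate per dimension to a value. The two functions below implement two common OLAP operations, roll-up (summing away dimensions) and slice (fixing one dimension to a single member). The names `CUBE`, `roll_up` and `slice_cube` and all figures are hypothetical; real OLAP engines use dense arrays, pre-computed aggregates and indexes.

```python
from collections import defaultdict

DIMENSIONS = ("product", "year", "city", "scenario")

# Hypothetical cells: (product, year, city, scenario) -> expense amount.
CUBE = {
    ("laptop", 2023, "Berlin", "actual"): 100.0,
    ("laptop", 2023, "Berlin", "budget"): 90.0,
    ("laptop", 2023, "Paris", "actual"): 50.0,
    ("phone", 2023, "Berlin", "actual"): 70.0,
    ("phone", 2024, "Paris", "budget"): 40.0,
}

def roll_up(cube, keep):
    """Sum the cube over every dimension not named in `keep`."""
    idx = [DIMENSIONS.index(d) for d in keep]
    totals = defaultdict(float)
    for coords, value in cube.items():
        totals[tuple(coords[i] for i in idx)] += value
    return dict(totals)

def slice_cube(cube, dimension, member):
    """Keep only the cells whose `dimension` coordinate equals `member`."""
    i = DIMENSIONS.index(dimension)
    return {coords: v for coords, v in cube.items() if coords[i] == member}
```

For instance, `roll_up(CUBE, ["scenario"])` compares total actual against total budget expenses, and `roll_up(slice_cube(CUBE, "city", "Berlin"), ["product", "scenario"])` does the same per product for one city.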

Orders of Magnitude

An order of magnitude is an approximation of the logarithm of a value relative to some contextually understood reference value, usually 10, interpreted as the base of the logarithm and the representative of values of magnitude one. Logarithmic distributions are common in nature, and considering the order of magnitude of values sampled from such a distribution can be more intuitive. When the reference value is 10, the order of magnitude can be understood as roughly the number of digits in the base-10 representation of the value (for a value of at least 1, it is one less than the number of digits in its integer part: 123 has three digits and order of magnitude 2). Similarly, if the reference value is a power of 2, the magnitude can be understood in terms of the amount of computer memory needed to store that value, since computers store data in binary format. Differences in order of magnitude can be measured on a base-10 logarithmic scale in “decades” (i.e., factors of ten). Examples of numbers of different magnitudes can be found at Orders of magnitude (numbers).

Definition. Generally, the order of magni ...
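A minimal sketch of the base-10 and base-2 views described above, assuming the common convention that the base-10 order of magnitude of a nonzero value x is floor(log10 |x|). The helper names are hypothetical.

```python
import math

def order_of_magnitude(x: float) -> int:
    """Base-10 order of magnitude: floor(log10(|x|)) for nonzero x."""
    if x == 0:
        raise ValueError("order of magnitude is undefined for zero")
    return math.floor(math.log10(abs(x)))

def decimal_digits(n: int) -> int:
    """Number of base-10 digits in an integer (sign ignored)."""
    return len(str(abs(n)))

def bits_needed(n: int) -> int:
    """Binary digits needed to store a non-negative integer (at least one)."""
    if n < 0:
        raise ValueError("expected a non-negative integer")
    return max(1, n.bit_length())
```

With these definitions `order_of_magnitude(123)` is 2 while `decimal_digits(123)` is 3, matching the "one less than the digit count" relationship; values below 1 get negative orders, e.g. `order_of_magnitude(0.05)` is -2. On the binary side, 255 fits in 8 bits and 256 needs 9.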

Oracle Coherence

In computing, Oracle Coherence (originally Tangosol Coherence) is a Java-based distributed cache and in-memory data grid. It is claimed to be "intended for systems that require high availability, high scalability and low latency, particularly in cases when traditional relational database management systems provide insufficient throughput, or insufficient performance."

History. Tangosol Coherence was created by Cameron Purdy and Gene Gleyzer and initially released in December 2001. Oracle Corporation acquired Tangosol Inc., the original owner of the product, in April 2007, at which point Tangosol had more than 100 direct customers. Tangosol Coherence was also embedded in a number of other companies' software products, some of which belonged to Oracle Corporation's competitors.

Features. Coherence provides a variety of mechanisms to integrate with other services using TopLink, the Java Persistence API, Oracle GoldenGate, and other platforms through APIs provided by Coherence. Cohere ...
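The case described above, a cache relieving a relational database that cannot deliver enough throughput, is often handled with a read-through cache: reads are served from memory, and the database is consulted only on a miss. The single-process sketch below shows that generic pattern; it is not Coherence's API and omits distribution, expiry and invalidation. `ReadThroughCache` and `load_customer` are hypothetical names, and the loader merely stands in for a database query.

```python
from typing import Any, Callable, Dict

class ReadThroughCache:
    """Single-process read-through cache placed in front of a slower data source."""

    def __init__(self, loader: Callable[[Any], Any]):
        self._loader = loader            # invoked only on a cache miss (e.g. a SQL query)
        self._data: Dict[Any, Any] = {}  # in-memory copy of previously loaded entries
        self.hits = 0
        self.misses = 0

    def get(self, key: Any) -> Any:
        if key in self._data:
            self.hits += 1
            return self._data[key]
        self.misses += 1
        value = self._loader(key)        # go to the backing store once...
        self._data[key] = value          # ...and serve later reads from memory
        return value

    def put(self, key: Any, value: Any) -> None:
        # Updates only the cache; a write-through variant would also update the store.
        self._data[key] = value

# Hypothetical backing store standing in for a relational database.
db_calls = []

def load_customer(customer_id: int) -> Dict[str, Any]:
    db_calls.append(customer_id)
    return {"id": customer_id, "name": f"customer-{customer_id}"}

customers = ReadThroughCache(load_customer)
customers.get(42)  # miss: loads from the "database"
customers.get(42)  # hit: served from memory, no second load
```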

Infinispan

Infinispan is a distributed cache and key-value NoSQL data store developed by Red Hat. Java applications can embed it as a library or use it as a service in WildFly, and non-Java applications can use it as a remote service over TCP/IP.

History. Infinispan is the successor of JBoss Cache. The project was announced in 2009.

Features.
* Transactions
* MapReduce
* Support for LRU and LIRS eviction algorithms
* Through a pluggable architecture, Infinispan is able to persist data to the filesystem, to relational databases via JDBC, to LevelDB, and to NoSQL databases such as MongoDB, Apache Cassandra or HBase, among others.

Usage. Typical use cases for Infinispan include:
* Distributed cache, often in front of a database
* Storage for temporal data, like web sessions
* In-memory data processing and analytics
* Cross-JVM communication and shared storage
* MapReduce implementation in the in-memory data grid

Infinispan is also used in academia and research as a framework for distributed exe ...
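LRU (least recently used) eviction, one of the eviction algorithms in the feature list, keeps a bounded cache by discarding the entry that has gone unused the longest. A minimal single-node sketch using Python's `collections.OrderedDict` follows; the `LRUCache` class is hypothetical, is not Infinispan's implementation, and LIRS is not shown.

```python
from collections import OrderedDict

class LRUCache:
    """Bounded cache that evicts the least recently used entry when full."""

    def __init__(self, capacity: int):
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self._entries = OrderedDict()  # ordered from least to most recently used

    def get(self, key, default=None):
        if key not in self._entries:
            return default
        self._entries.move_to_end(key)  # mark as most recently used
        return self._entries[key]

    def put(self, key, value):
        if key in self._entries:
            self._entries.move_to_end(key)
        self._entries[key] = value
        if len(self._entries) > self.capacity:
            self._entries.popitem(last=False)  # drop the least recently used entry

    def __contains__(self, key):
        return key in self._entries

    def __len__(self):
        return len(self._entries)

sessions = LRUCache(capacity=2)
sessions.put("a", 1)
sessions.put("b", 2)
sessions.get("a")     # "a" becomes the most recently used entry
sessions.put("c", 3)  # over capacity: "b", the least recently used, is evicted
```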

Hazelcast

In computing, Hazelcast IMDG is an open-source in-memory data grid based on Java. It is also the name of the company developing the product. The Hazelcast company is funded by venture capital and headquartered in Palo Alto, California.

In a Hazelcast grid, data is evenly distributed among the nodes of a computer cluster, allowing for horizontal scaling of processing and available storage. Backups are also distributed among nodes to protect against failure of any single node.

Hazelcast provides central, predictable scaling of applications through in-memory access to frequently used data and across an elastically scalable data grid. These techniques reduce the query load on databases and improve speed. Hazelcast can run on-premises, in the cloud (Amazon Web Services, Microsoft Azure, Cloud Foundry, OpenShift), in virtualized environments (VMware), and in Docker containers. Hazelcast offers technology integrations for multiple cloud configuration and deployment technologies, including Apache j ...
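Even distribution with backups on other nodes can be illustrated with a fixed partition table: each key hashes to a partition, each partition has an owner node and a backup on a different node, and reads fail over to the backup when the owner is down. This is a simplified sketch of the general technique, not Hazelcast's actual placement algorithm; the partition count of 12, the round-robin assignment and all function names are assumptions chosen for illustration. `zlib.crc32` is used because Python's built-in `hash()` for strings varies between processes.

```python
import zlib
from typing import Dict, List, Set, Tuple

PARTITION_COUNT = 12  # illustrative only; real grids use their own configurable count

def partition_of(key: str) -> int:
    # Stable hash so every node computes the same partition for a given key.
    return zlib.crc32(key.encode("utf-8")) % PARTITION_COUNT

def assign_partitions(nodes: List[str]) -> Dict[int, Tuple[str, str]]:
    """Map each partition to (owner, backup) on two different nodes, round-robin."""
    if len(nodes) < 2:
        raise ValueError("need at least two nodes to place a backup elsewhere")
    return {
        p: (nodes[p % len(nodes)], nodes[(p + 1) % len(nodes)])
        for p in range(PARTITION_COUNT)
    }

def locate(key: str, table: Dict[int, Tuple[str, str]], alive: Set[str]) -> str:
    """Return the node that should serve `key`, failing over to the backup."""
    owner, backup = table[partition_of(key)]
    if owner in alive:
        return owner
    if backup in alive:
        return backup
    raise RuntimeError("both owner and backup for this partition are down")

nodes = ["n1", "n2", "n3"]
table = assign_partitions(nodes)  # each node owns 4 of the 12 partitions
```

Balancing partitions rather than individual keys keeps rebalancing cheap when nodes join or leave; how evenly keys themselves spread depends on the hash.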

NoSQL

A NoSQL (originally referring to "non-SQL" or "non-relational") database provides a mechanism for storage and retrieval of data that is modeled by means other than the tabular relations used in relational databases. Such databases have existed since the late 1960s, but the name "NoSQL" was only coined in the early 21st century, triggered by the needs of Web 2.0 companies. NoSQL databases are increasingly used in big data and real-time web applications. NoSQL systems are also sometimes called "Not only SQL" to emphasize that they may support SQL-like query languages or sit alongside SQL databases in polyglot-persistent architectures.

Motivations for this approach include simplicity of design, simpler "horizontal" scaling to clusters of machines (which is a problem for relational databases), finer control over availability, and limiting the object-relational impedance mismatch. The data structures used by NoSQL databases (e.g. key–value pair, wide column, graph, or doc ...
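To make the key–value and document models concrete, the toy store below keeps each value as a schema-free JSON document under a string key. An order and its line items stay together in one nested document, roughly how the application already represents them, which is one way these models reduce the object-relational impedance mismatch. `DocumentStore` is a hypothetical class, not the API of any NoSQL product; its `find` is a linear scan where a real system would use indexes.

```python
import json
from typing import Any, Dict, List

class DocumentStore:
    """Toy key-value/document store: each value is a JSON document under a key."""

    def __init__(self):
        self._docs: Dict[str, str] = {}  # key -> serialized JSON document

    def put(self, key: str, doc: Dict[str, Any]) -> None:
        # No fixed schema: any JSON-serializable dict is accepted.
        self._docs[key] = json.dumps(doc)

    def get(self, key: str) -> Dict[str, Any]:
        return json.loads(self._docs[key])

    def find(self, field: str, value: Any) -> List[Dict[str, Any]]:
        """Linear scan for documents whose top-level `field` equals `value`."""
        results = []
        for raw in self._docs.values():
            doc = json.loads(raw)
            if doc.get(field) == value:
                results.append(doc)
        return results

store = DocumentStore()
# Line items are nested in the order document instead of living in a separate
# table joined by a foreign key; documents need not share the same fields.
store.put("order:1", {"customer": "alice", "items": [{"sku": "A1", "qty": 2}]})
store.put("order:2", {"customer": "bob", "items": [], "gift": True})
```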

IBM Db2

Db2 is a family of data management products, including database servers, developed by IBM. It initially supported the relational model but was extended to support object–relational features and non-relational structures like JSON and XML. The brand name was originally styled as DB/2, then DB2 until 2017, and finally changed to its present form.

History. Unlike other database vendors, IBM previously produced a platform-specific Db2 product for each of its major operating systems. However, in the 1990s IBM changed track and produced a common Db2 product, designed with a mostly common code base for L-U-W (Linux-Unix-Windows); DB2 for System z and DB2 for IBM i are different. As a result, they use different drivers. DB2 traces its roots back to the beginning of the 1970s, when Edgar F. Codd, a researcher working for IBM, described the theory of relational databases and in June 1970 published the model for data manipulation. In 1974, the IBM San Jose Research center dev ...

Oracle Database

Oracle Database (commonly referred to as Oracle DBMS, Oracle Autonomous Database, or simply as Oracle) is a multi-model database management system produced and marketed by Oracle Corporation. It is commonly used for running online transaction processing (OLTP), data warehousing (DW) and mixed (OLTP & DW) database workloads. Oracle Database is available from several service providers on-premises, in the cloud, or as a hybrid cloud installation. It may be run on third-party servers as well as on Oracle hardware (Exadata on-premises, on Oracle Cloud, or at Cloud at Customer).

History. Larry Ellison and his two friends and former co-workers, Bob Miner and Ed Oates, started a consultancy called Software Development Laboratories (SDL) in 1977. SDL developed the original version of the Oracle software. The name "Oracle" comes from the code name of a CIA-funded project Ellison had worked on while formerly employed by Ampex.

Releases and versions. Oracle products follow a cus ...

Data Warehouse

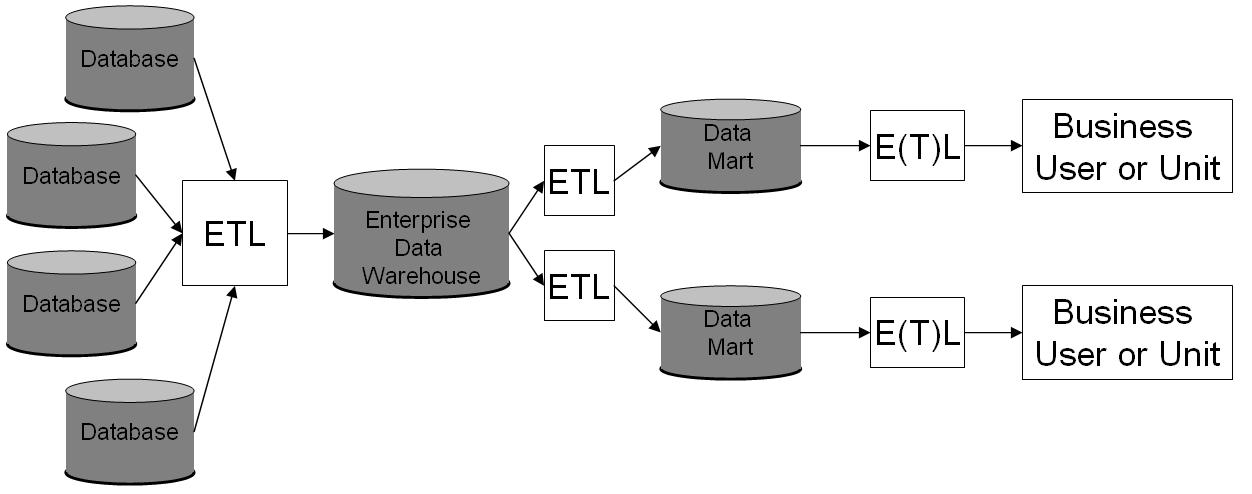

In computing, a data warehouse (DW or DWH), also known as an enterprise data warehouse (EDW), is a system used for reporting and data analysis and is considered a core component of business intelligence. DWs are central repositories of integrated data from one or more disparate sources. They store current and historical data in one single place, where it is used to create analytical reports for workers throughout the enterprise. The data stored in the warehouse is uploaded from the operational systems (such as marketing or sales). The data may pass through an operational data store and may require data cleansing for additional operations to ensure data quality before it is used in the DW for reporting. Extract, transform, load (ETL) and extract, load, transform (ELT) are the two main approaches used to build a data warehouse system.

ETL-based data warehousing. The typical extract, transform, load (ETL)-based data warehouse uses staging, data integration, and access lay ...
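The ETL flow can be sketched in a few functions: extract rows from two operational sources, transform them (cleanse bad rows, conform region names and types so the sources can be integrated), and load them into warehouse tables for reporting. Everything here is hypothetical: the source rows, the single data-quality rule, and the use of an in-memory SQLite database as a stand-in warehouse. A real ETL-based warehouse would add the staging and access layers mentioned above; in ELT, the raw rows would be loaded first and transformed inside the warehouse with SQL.

```python
import sqlite3

# Hypothetical extracts from two operational systems with inconsistent conventions.
SALES_SYSTEM = [
    {"order_id": "1", "region": " North ", "amount": "120.50"},
    {"order_id": "2", "region": "south", "amount": "80"},
    {"order_id": "3", "region": "NORTH", "amount": ""},  # missing amount
]
MARKETING_SYSTEM = [
    {"campaign": "spring", "region": "north", "spend": 40.0},
]

def extract():
    return SALES_SYSTEM, MARKETING_SYSTEM

def transform(sales, marketing):
    """Cleanse and conform: normalize region names, cast types, drop bad rows."""
    clean_sales = [
        (int(r["order_id"]), r["region"].strip().lower(), float(r["amount"]))
        for r in sales
        if r["amount"].strip()  # data-quality rule: skip rows without an amount
    ]
    clean_marketing = [
        (m["campaign"], m["region"].strip().lower(), float(m["spend"]))
        for m in marketing
    ]
    return clean_sales, clean_marketing

def load(conn, sales, marketing):
    conn.execute("CREATE TABLE fact_sales (order_id INTEGER, region TEXT, amount REAL)")
    conn.execute("CREATE TABLE fact_marketing (campaign TEXT, region TEXT, spend REAL)")
    conn.executemany("INSERT INTO fact_sales VALUES (?, ?, ?)", sales)
    conn.executemany("INSERT INTO fact_marketing VALUES (?, ?, ?)", marketing)
    conn.commit()

warehouse = sqlite3.connect(":memory:")  # stand-in for the warehouse database
load(warehouse, *transform(*extract()))

# A simple analytical report over the cleansed, integrated data.
report = warehouse.execute(
    "SELECT region, SUM(amount) FROM fact_sales GROUP BY region ORDER BY region"
).fetchall()
```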

Data Modeling

Data modeling in software engineering is the process of creating a data model for an information system by applying certain formal techniques.

Overview. Data modeling is a process used to define and analyze data requirements needed to support the business processes within the scope of corresponding information systems in organizations. Therefore, the process of data modeling involves professional data modelers working closely with business stakeholders, as well as potential users of the information system. There are three different types of data models produced while progressing from requirements to the actual database to be used for the information system (Simsion, Graeme C. & Witt, Graham C. (2005). Data Modeling Essentials, 3rd edition. Morgan Kaufmann Publishers). The data requirements are initially recorded as a conceptual data model, which is essentially a set of technology-independent specifications about the data and is used to discuss initial requirements ...
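As a small illustration of moving from a technology-independent requirement to a database-specific model, the sketch below starts from a conceptual statement ("a customer places zero or more orders") and expresses it as physical DDL for SQLite, where the relationship becomes a foreign key that the database enforces. The three model types are commonly named conceptual, logical and physical; the table and function names here are hypothetical, and the intermediate logical model is only summarized in a comment.

```python
import sqlite3

# Conceptual: a Customer places zero or more Orders (technology independent).
# Logical: Customer(customer_id PK, name); Order(order_id PK, customer_id FK, placed_on).
# Physical: the same structure as DDL for one specific DBMS (SQLite). The order table
# is named customer_order because ORDER is a reserved word in SQL.
PHYSICAL_DDL = """
CREATE TABLE customer (
    customer_id INTEGER PRIMARY KEY,
    name        TEXT NOT NULL
);
CREATE TABLE customer_order (
    order_id    INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customer(customer_id),
    placed_on   TEXT NOT NULL
);
"""

def build_schema() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces FKs only when enabled
    conn.executescript(PHYSICAL_DDL)
    return conn

def insert_order(conn, order_id, customer_id, placed_on) -> bool:
    """Return True if the order was stored, False if it violates the relationship."""
    try:
        conn.execute(
            "INSERT INTO customer_order VALUES (?, ?, ?)",
            (order_id, customer_id, placed_on),
        )
        return True
    except sqlite3.IntegrityError:
        return False

conn = build_schema()
conn.execute("INSERT INTO customer VALUES (1, 'Alice')")
```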