
HyperThreading

Hyper-threading (officially called Hyper-Threading Technology or HT Technology and abbreviated as HTT or HT) is Intel's proprietary simultaneous multithreading (SMT) implementation used to improve parallelization of computations (doing multiple tasks at once) performed on x86 microprocessors. It was introduced on Xeon server processors in February 2002 and on Pentium 4 desktop processors in November 2002. Since then, Intel has included this technology in Itanium, Atom, and Core 'i' Series CPUs, among others. For each processor core that is physically present, the operating system addresses two virtual (logical) cores and shares the workload between them when possible. The main function of hyper-threading is to increase the number of independent instructions in the pipeline; it takes advantage of superscalar architecture, in which multiple instructions operate on separate data in parallel. With HTT, one physical core appears as two processors to the operating system, a ...
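The relationship between physical and logical cores can be illustrated with a minimal Python sketch. It assumes text in the style of Linux `/proc/cpuinfo` on x86 (the `processor`, `physical id`, and `core id` fields); the sample values and the helper name `count_cores` are made up for illustration and are not taken from the text above.

```python
# Sample text in the style of Linux /proc/cpuinfo on x86. The values are
# invented for illustration: one package, two physical cores, each exposing
# two logical processors.
SAMPLE_CPUINFO = """\
processor   : 0
physical id : 0
core id     : 0

processor   : 1
physical id : 0
core id     : 1

processor   : 2
physical id : 0
core id     : 0

processor   : 3
physical id : 0
core id     : 1
"""


def count_cores(cpuinfo_text):
    """Return (logical, physical) processor counts from cpuinfo-style text."""
    logical = 0
    physical = set()
    # Entries are separated by blank lines; each entry is one logical processor.
    for block in cpuinfo_text.strip().split("\n\n"):
        fields = {}
        for line in block.splitlines():
            key, _, value = line.partition(":")
            fields[key.strip()] = value.strip()
        if "processor" not in fields:
            continue
        logical += 1
        # A physical core is identified by its package ID plus its core ID.
        physical.add((fields.get("physical id"), fields.get("core id")))
    return logical, len(physical)


logical, physical = count_cores(SAMPLE_CPUINFO)
print(f"{logical} logical processors on {physical} physical cores")
```

With the sample above, the sketch reports four logical processors on two physical cores, the two-to-one ratio that the operating system sees when hyper-threading is enabled.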

Multithreading (computer Architecture)

In computer architecture, multithreading is the ability of a central processing unit (CPU) (or a single core in a multi-core processor) to provide multiple threads of execution concurrently, supported by the operating system. This approach differs from multiprocessing. In a multithreaded application, the threads share the resources of a single or multiple cores, which include the computing units, the CPU caches, and the translation lookaside buffer (TLB). Where multiprocessing systems include multiple complete processing units in one or more cores, multithreading aims to increase utilization of a single core by using thread-level parallelism, as well as instruction-level parallelism. As the two techniques are complementary, they are combined in nearly all modern systems architectures with multiple multithreading CPUs and with CPUs with multiple multithreading cores.

Overview

The multithreading paradigm has become more popular as efforts to further exploit instruction-level p ...

CMOS

Complementary metal–oxide–semiconductor (CMOS, pronounced "sea-moss") is a type of metal–oxide–semiconductor field-effect transistor (MOSFET) fabrication process that uses complementary and symmetrical pairs of p-type and n-type MOSFETs for logic functions. CMOS technology is used for constructing integrated circuit (IC) chips, including microprocessors, microcontrollers, memory chips (including CMOS BIOS), and other digital logic circuits. CMOS technology is also used for analog circuits such as image sensors (CMOS sensors), data converters, RF circuits (RF CMOS), and highly integrated transceivers for many types of communication. The CMOS process was originally conceived by Frank Wanlass at Fairchild Semiconductor and presented by Wanlass and Chih-Tang Sah at the International Solid-State Circuits Conference in 1963. Wanlass later filed US patent 3,356,858 for CMOS circuitry, and it was granted in 1967. RCA commercialized the technology with the trademark "COS-MO ...

Heterogeneous Element Processor

The Heterogeneous Element Processor (HEP) was introduced by Denelcor, Inc. in 1982. The HEP's architect was Burton Smith. The machine was designed to solve fluid dynamics problems for the Ballistic Research Laboratory. A HEP system, as the name implies, was pieced together from many heterogeneous components: processors, data memory modules, and I/O modules. The components were connected via a switched network. A single processor in a HEP system, called a PEM (up to sixteen PEMs could be connected), was rather unconventional; via a "program status word (PSW) queue," up to fifty processes could be maintained in hardware at once. The largest system ever delivered had 4 PEMs. The eight-stage instruction pipeline allowed instructions from eight different processes to proceed at once. In fact, only one instruction from a given process was allowed to be present in the pipeline at any point in time. Therefore, the full processor throughput of 10 MIPS could only be achieved when ...

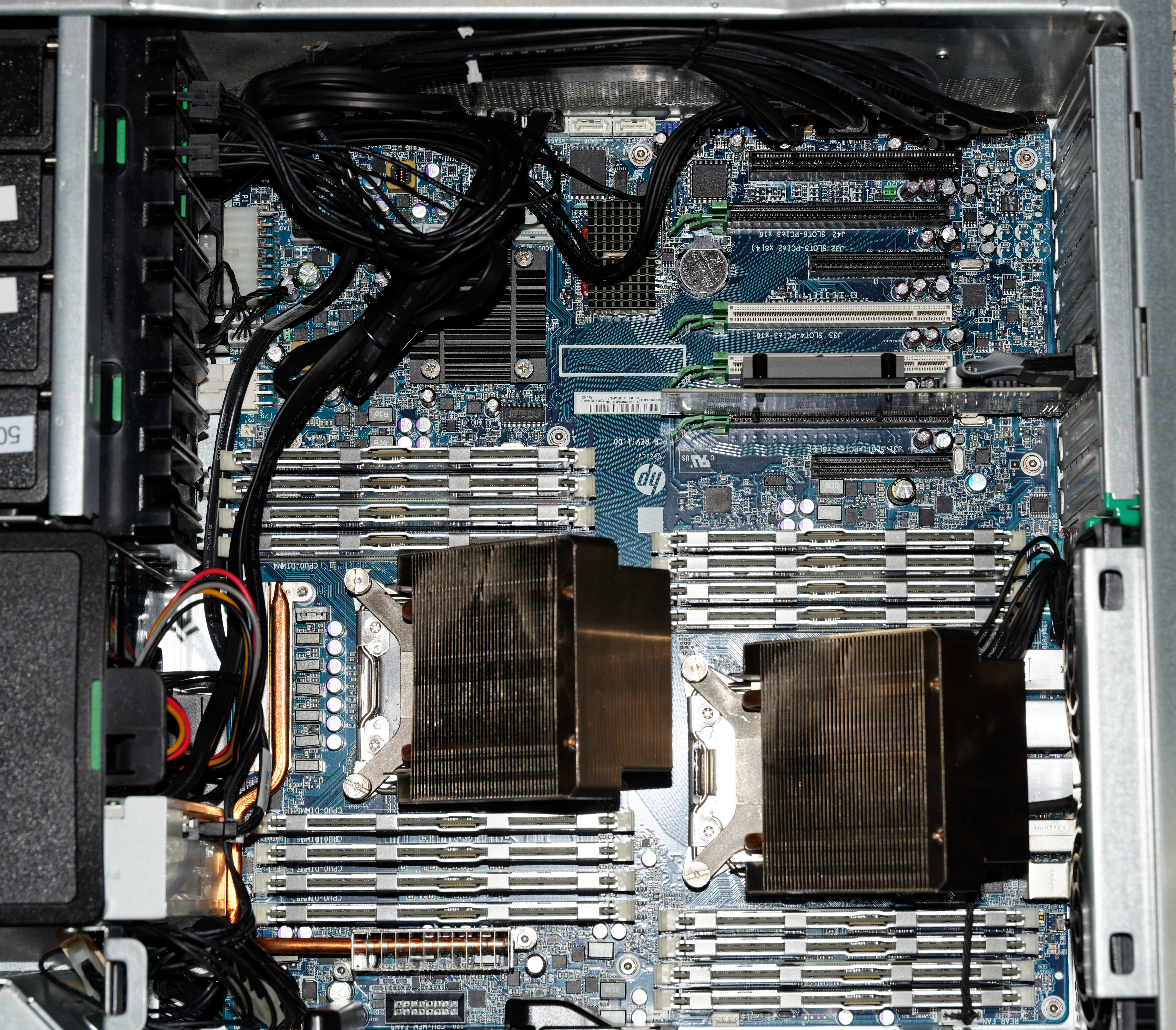

Non-Uniform Memory Access

Non-uniform memory access (NUMA) is a computer memory design used in multiprocessing, where the memory access time depends on the memory location relative to the processor. Under NUMA, a processor can access its own local memory faster than non-local memory (memory local to another processor or memory shared between processors). The benefits of NUMA are limited to particular workloads, notably on servers where the data is often associated strongly with certain tasks or users. NUMA architectures logically follow in scaling from symmetric multiprocessing (SMP) architectures. They were developed commercially during the 1990s by Unisys, Convex Computer (later Hewlett-Packard), Honeywell Information Systems Italy (HISI) (later Groupe Bull), Silicon Graphics (later Silicon Graphics International), Sequent Computer Systems (later IBM), Data General (later EMC, now Dell Technologies), and Digital (later Compaq, then HP, now HPE). Techniques developed by these companies later feature ...

Scheduling (computing)

In computing, scheduling is the action of assigning resources to perform tasks. The resources may be processors, network links or expansion cards. The tasks may be threads, processes or data flows. The scheduling activity is carried out by a process called a scheduler. Schedulers are often designed so as to keep all computer resources busy (as in load balancing), allow multiple users to share system resources effectively, or to achieve a target quality-of-service. Scheduling is fundamental to computation itself, and an intrinsic part of the execution model of a computer system; the concept of scheduling makes it possible to have computer multitasking with a single central processing unit (CPU).

Goals

A scheduler may aim at one or more goals, for example:

* maximizing throughput (the total amount of work completed per time unit);
* minimizing wait time (time from work becoming ready until the first point it begins execution);
* minimizing latency ...
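The throughput and wait-time goals can be made concrete with a small Python sketch. It assumes a first-come, first-served policy on a single CPU, which the text above does not prescribe; the function name `fcfs_metrics` and the job list are hypothetical.

```python
def fcfs_metrics(jobs):
    """Compute (throughput, average wait time) for one CPU under FCFS.

    jobs is a list of (arrival_time, burst_time) pairs. FCFS is an assumed
    policy chosen only to keep the example short.
    """
    clock = 0
    total_wait = 0
    for arrival, burst in sorted(jobs):
        start = max(clock, arrival)    # the CPU may idle until the job arrives
        total_wait += start - arrival  # time from becoming ready to first run
        clock = start + burst
    throughput = len(jobs) / clock     # jobs completed per time unit
    return throughput, total_wait / len(jobs)


jobs = [(0, 4), (1, 3), (2, 1)]        # hypothetical workload
throughput, avg_wait = fcfs_metrics(jobs)
print(f"throughput={throughput:.3f} jobs/unit, average wait={avg_wait:.3f} units")
```

Running the shortest job first would lower the average wait for this workload without changing throughput, which is why a scheduler has to decide which goal it is optimizing.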

Symmetric Multiprocessing

Symmetric multiprocessing or shared-memory multiprocessing (SMP) involves a multiprocessor computer hardware and software architecture where two or more identical processors are connected to a single, shared main memory, have full access to all input and output devices, and are controlled by a single operating system instance that treats all processors equally, reserving none for special purposes. Most multiprocessor systems today use an SMP architecture. In the case of multi-core processors, the SMP architecture applies to the cores, treating them as separate processors. Professor John D. Kubiatowicz considers traditional SMP systems to contain processors without caches. Culler and Pal-Singh, in their 1998 book "Parallel Computer Architecture: A Hardware/Software Approach", mention: "The term SMP is widely used but causes a bit of confusion. [...] The more precise description of what is intended by SMP is a shared memory multiprocessor where the cost of accessing a memory location ...

Data Dependency

A data dependency in computer science is a situation in which a program statement (instruction) refers to the data of a preceding statement. In compiler theory, the technique used to discover data dependencies among statements (or instructions) is called dependence analysis. There are three types of dependencies: data, name, and control.

Data dependencies

Assuming statements S_1 and S_2, S_2 depends on S_1 if:

:\left[I(S_1) \cap O(S_2)\right] \cup \left[O(S_1) \cap I(S_2)\right] \cup \left[O(S_1) \cap O(S_2)\right] \neq \varnothing

where:

* I(S_i) is the set of memory locations read by S_i,
* O(S_j) is the set of memory locations written by S_j, and
* there is a feasible run-time execution path from S_1 to S_2.

This condition is called the Bernstein condition, named after A. J. Bernstein. Three cases exist:

* Anti-dependence: I(S_1) \cap O(S_2) \neq \varnothing, S_1 \rightarrow S_2 and S_1 reads something before S_2 overwrites it
* Flow (data) dependence: O(S_1) \cap I(S_2) \neq \varnothing, S_1 \right ...
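The Bernstein condition translates almost directly into set operations. The following Python sketch represents each statement as a pair of sets (locations read, locations written) and assumes that a feasible execution path from the first statement to the second exists; the function names and the example statements are hypothetical.

```python
def dependences(s1, s2):
    """Return the three intersections of the Bernstein condition.

    Each statement is a (reads, writes) pair of sets of location names.
    A feasible execution path from s1 to s2 is assumed, not checked.
    """
    reads1, writes1 = s1
    reads2, writes2 = s2
    return {
        "anti": reads1 & writes2,     # I(S_1) ∩ O(S_2)
        "flow": writes1 & reads2,     # O(S_1) ∩ I(S_2)
        "output": writes1 & writes2,  # O(S_1) ∩ O(S_2)
    }


def depends(s1, s2):
    """True if s2 depends on s1, i.e. any of the intersections is non-empty."""
    return any(dependences(s1, s2).values())


# Hypothetical statements:
#   S1: a = b + c      S2: d = a * 2      S3: b = 7      S4: e = f + 1
S1 = ({"b", "c"}, {"a"})
S2 = ({"a"}, {"d"})
S3 = (set(), {"b"})
S4 = ({"f"}, {"e"})

print(dependences(S1, S2))  # flow dependence on a
print(dependences(S1, S3))  # anti-dependence on b
print(depends(S1, S4))      # no shared locations, so the two are independent
```

Statements for which `depends` returns `False` in both directions touch no common location in a conflicting way, so they can be reordered or run in parallel.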

Branch Misprediction

In computer architecture, a branch predictor is a digital circuit that tries to guess which way a branch (e.g., an if–then–else structure) will go before this is known definitively. The purpose of the branch predictor is to improve the flow in the instruction pipeline. Branch predictors play a critical role in achieving high performance in many modern pipelined microprocessor architectures such as x86. Two-way branching is usually implemented with a conditional jump instruction. A conditional jump can either be "taken" and jump to a different place in program memory, or it can be "not taken" and continue execution immediately after the conditional jump. It is not known for certain whether a conditional jump will be taken or not taken until the condition has been calculated and the conditional jump has passed the execution stage in the instruction pipeline (see fig. 1). Without branch prediction, the processor would have to wait until the conditional jump instruction has p ...
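As a rough illustration of how a predictor can guess "taken" or "not taken", the Python sketch below simulates a two-bit saturating counter. This is one classic textbook scheme, chosen here as an assumption; the text above does not name a specific predictor, and the function name and branch history are hypothetical.

```python
def simulate_two_bit(outcomes, state=0):
    """Count correct predictions made by a two-bit saturating counter.

    States 0 and 1 predict "not taken"; states 2 and 3 predict "taken".
    outcomes is a sequence of booleans (True means the branch was taken).
    """
    correct = 0
    for taken in outcomes:
        predicted_taken = state >= 2
        if predicted_taken == taken:
            correct += 1
        # Move one step toward the observed outcome, saturating at 0 and 3.
        state = min(state + 1, 3) if taken else max(state - 1, 0)
    return correct


# Hypothetical loop branch: taken nine times, then not taken on loop exit.
history = [True] * 9 + [False]
hits = simulate_two_bit(history)
print(f"{hits} of {len(history)} predictions correct")
```

Starting from state 0, the counter mispredicts the first two iterations while it warms up and mispredicts the loop exit, so a long-running loop is predicted correctly most of the time.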

CPU Cache

A CPU cache is a hardware cache used by the central processing unit (CPU) of a computer to reduce the average cost (time or energy) to access data from the main memory. A cache is a smaller, faster memory, located closer to a processor core, which stores copies of the data from frequently used main memory locations. Most CPUs have a hierarchy of multiple cache levels (L1, L2, often L3, and rarely even L4), with different instruction-specific and data-specific caches at level 1. The cache memory is typically implemented with static random-access memory (SRAM), which in modern CPUs accounts for by far the largest share of chip area. However, SRAM is not always used for all levels (of I- or D-cache), or even for any level; sometimes some later levels, or all levels, are implemented with eDRAM. Other types of caches exist (that are not counted towards the "cache size" of the most important caches mentioned above), such as the translation lookaside buffer (TLB) which is part of the memory management unit (MMU) w ...
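How a cache reduces the average access cost can be sketched with the usual textbook estimate: average time = hit time + miss rate × miss penalty. The Python function below applies that formula to a single cache level; the formula is an assumption brought in for illustration, and the latencies and miss rate are invented numbers, not measurements.

```python
def average_access_time(hit_time, miss_rate, miss_penalty):
    """Estimate the average memory access time for one cache level.

    hit_time:     time to serve an access from the cache
    miss_rate:    fraction of accesses that miss the cache (0.0 to 1.0)
    miss_penalty: extra time to fetch the data from main memory on a miss
    """
    if not 0.0 <= miss_rate <= 1.0:
        raise ValueError("miss_rate must be between 0 and 1")
    return hit_time + miss_rate * miss_penalty


# Invented figures: 1 ns cache hit, 100 ns main-memory penalty.
with_cache = average_access_time(hit_time=1.0, miss_rate=0.05, miss_penalty=100.0)
always_miss = average_access_time(hit_time=1.0, miss_rate=1.0, miss_penalty=100.0)
print(f"5% miss rate: {with_cache:.1f} ns, every access misses: {always_miss:.1f} ns")
```

Under these assumed numbers, serving 95% of accesses from the cache brings the average cost to a small fraction of the cost of going to main memory every time.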

Virtualization

In computing, virtualization or virtualisation (sometimes abbreviated v12n, a numeronym) is the act of creating a virtual (rather than actual) version of something at the same abstraction level, including virtual computer hardware platforms, storage devices, and computer network resources. Virtualization began in the 1960s, as a method of logically dividing the system resources provided by mainframe computers between different applications. An early and successful example is IBM CP/CMS. The control program CP provided each user with a simulated stand-alone System/360 computer. Since then, the meaning of the term has broadened.

Hardware virtualization

Hardware virtualization or platform virtualization refers to the creation of a virtual machine that acts like a real computer with an operating system. Software executed on these virtual machines is separated from the underlying hardware resources. For example, a computer that is running Arch Linux may host a virtual machi ...