
Horvitz–Thompson Estimator

In statistics, the Horvitz–Thompson estimator, named after Daniel G. Horvitz and Donovan J. Thompson, is a method for estimating the total and mean of a pseudo-population in a stratified sample. Inverse probability weighting is applied to account for different proportions of observations within strata in a target population. The Horvitz–Thompson estimator is frequently applied in survey analyses and can be used to account for missing data, as well as many sources of unequal selection probabilities.

The method

Formally, let Y_i, i = 1, 2, \ldots, n be an independent sample from n of N ≥ n distinct strata with a common mean μ. Suppose further that \pi_i is the inclusion probability that a randomly sampled individual in a superpopulation belongs to the ith stratum. The Hansen and Hurwitz (1943) estimator of the total is given by:

: \hat{Y}_{HT} = \sum_{i=1}^n \pi_i^{-1} Y_i,

and the Horvitz–Thompson estimate of the mean is given by:

: \hat{\mu}_{HT} = N^{-1}\hat{Y}_{HT} = N^{-1}\sum_{i=1}^n ...
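
The inverse-probability weighting described above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the function names `horvitz_thompson_total` and `horvitz_thompson_mean` and the sample values are hypothetical, and the inclusion probabilities are assumed to be known and strictly positive.

```python
from typing import Sequence


def horvitz_thompson_total(y: Sequence[float], pi: Sequence[float]) -> float:
    """Estimate a total by weighting each observation by 1 / pi_i."""
    if len(y) != len(pi):
        raise ValueError("y and pi must have the same length")
    if any(not (0.0 < p <= 1.0) for p in pi):
        raise ValueError("inclusion probabilities must lie in (0, 1]")
    return sum(y_i / p_i for y_i, p_i in zip(y, pi))


def horvitz_thompson_mean(y: Sequence[float], pi: Sequence[float], N: int) -> float:
    """Estimate the mean as the estimated total divided by N."""
    return horvitz_thompson_total(y, pi) / N


# Hypothetical sample: three observations with unequal inclusion probabilities.
y = [10.0, 20.0, 30.0]
pi = [0.5, 0.25, 0.5]
print(horvitz_thompson_total(y, pi))      # 160.0
print(horvitz_thompson_mean(y, pi, N=8))  # 20.0
```

Observations with a small inclusion probability receive a large weight, because each one stands in for many units that were not sampled.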

Statistics

Statistics (from German: Statistik, "description of a state, a country") is the discipline that concerns the collection, organization, analysis, interpretation, and presentation of data. In applying statistics to a scientific, industrial, or social problem, it is conventional to begin with a statistical population or a statistical model to be studied. Populations can be diverse groups of people or objects such as "all people living in a country" or "every atom composing a crystal". Statistics deals with every aspect of data, including the planning of data collection in terms of the design of surveys and experiments (Dodge, Y. (2006) The Oxford Dictionary of Statistical Terms, Oxford University Press). When census data cannot be collected, statisticians collect data by developing specific experiment designs and survey samples. Representative sampling as ...

Inclusion Probability

In statistics, in the theory relating to sampling from finite populations, the sampling probability (also known as inclusion probability) of an element or member of the population is its probability of becoming part of the sample during the drawing of a single sample. For example, in simple random sampling the probability that a particular unit i is selected into the sample is

: p_i = \frac{\binom{N-1}{n-1}}{\binom{N}{n}} = \frac{n}{N}

where n is the sample size and N is the population size. Each element of the population may have a different probability of being included in the sample. The inclusion probability is also termed the "first-order inclusion probability" to distinguish it from the "second-order inclusion probability", i.e. the probability of including a pair of elements. Generally, the first-order inclusion probability of the ith element of the population is denoted by the symbol π_i and the second-order inclusion probability that a pair consisting of the ith and jth element of the populat ...
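
Under simple random sampling without replacement, every unit's first-order inclusion probability works out to n/N. The sketch below checks this in two ways, using exact fractions: by counting the samples that contain a fixed unit with binomial coefficients, and by brute-force enumeration of all samples from a small population. The function names are illustrative only.

```python
from fractions import Fraction
from itertools import combinations
from math import comb


def srs_inclusion_probability(n: int, N: int) -> Fraction:
    """Share of size-n samples (out of all C(N, n)) that contain one fixed unit."""
    if not 1 <= n <= N:
        raise ValueError("need 1 <= n <= N")
    return Fraction(comb(N - 1, n - 1), comb(N, n))


def enumerated_inclusion_probability(unit: int, n: int, N: int) -> Fraction:
    """Same quantity obtained by listing every possible sample of size n."""
    samples = list(combinations(range(N), n))
    containing = sum(1 for s in samples if unit in s)
    return Fraction(containing, len(samples))


print(srs_inclusion_probability(3, 10))            # 3/10
print(enumerated_inclusion_probability(0, 2, 5))   # 2/5
```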

Sampling (statistics)

In statistics, quality assurance, and survey methodology, sampling is the selection of a subset (a statistical sample) of individuals from within a statistical population to estimate characteristics of the whole population. Statisticians attempt to collect samples that are representative of the population in question. Sampling has lower costs and faster data collection than measuring the entire population and can provide insights in cases where it is infeasible to measure an entire population. Each observation measures one or more properties (such as weight, location, colour or mass) of independent objects or individuals. In survey sampling, weights can be applied to the data to adjust for the sample design, particularly in stratified sampling. Results from probability theory and statistical theory are employed to guide the practice. In business and medical research, sampling is widely used for gathering information about a population. Acceptance sampling is used to determine if ...

R (programming language)

R is a programming language for statistical computing and graphics supported by the R Core Team and the R Foundation for Statistical Computing. Created by statisticians Ross Ihaka and Robert Gentleman, R is used among data miners, bioinformaticians and statisticians for data analysis and developing statistical software. Users have created packages to augment the functions of the R language. According to user surveys and studies of scholarly literature databases, R is one of the most commonly used programming languages in data mining. R ranks 12th in the TIOBE index, a measure of programming language popularity, in which the language peaked in 8th place in August 2020. The official R software environment is an open-source free software environment within the GNU package, available under the GNU General Public License. It is written primarily in C, Fortran, and R itself (partially self-hosting). Precompiled executables are provided for various operating systems. R ...

Statistical Benchmarking

In statistics, benchmarking is a method of using auxiliary information to adjust the sampling weights used in an estimation process, in order to yield more accurate estimates of totals. Suppose we have a population where each unit k has a "value" Y(k) associated with it. For example, Y(k) could be the wage of an employee k, or the cost of an item k. Suppose we want to estimate the sum Y of all the Y(k). So we take a sample of the k, get a sampling weight W(k) for all sampled k, and then sum up W(k) \cdot Y(k) for all sampled k. One property usually common to the weights W(k) described here is that if we sum them over all sampled k, then this sum is an estimate of the total number of units k in the population (for example, the total employment, or the total number of items). Because we have a sample, this estimate of the total number of units in the population will differ from the true population total. Similarly, the estimate of total Y (where we ...
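
The two sums described above, the weighted sum of W(k) · Y(k) and the plain sum of the weights, can be computed side by side. The following is a small sketch with made-up wages and weights; the function name `weighted_estimates` is hypothetical, and the benchmarking adjustment itself is not shown because the text breaks off before describing it.

```python
from typing import Sequence, Tuple


def weighted_estimates(values: Sequence[float],
                       weights: Sequence[float]) -> Tuple[float, float]:
    """Return (estimated total of Y, estimated number of population units)."""
    if len(values) != len(weights):
        raise ValueError("values and weights must have the same length")
    total_y = sum(w * y for y, w in zip(values, weights))  # sum of W(k) * Y(k)
    n_units = sum(weights)                                 # sum of W(k)
    return total_y, n_units


# Hypothetical sample of three employees: wage Y(k) and sampling weight W(k).
wages = [30000.0, 45000.0, 52000.0]
weights = [10.0, 20.0, 5.0]
total_wages, employment = weighted_estimates(wages, weights)
print(total_wages, employment)  # 1460000.0 35.0
```

If the true number of employees were known from an auxiliary source, comparing it with the estimated count (35 here) would show how far the weights are from reproducing the known total.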

Imputation (statistics)

In statistics, imputation is the process of replacing missing data with substituted values. When substituting for a data point, it is known as "unit imputation"; when substituting for a component of a data point, it is known as "item imputation". There are three main problems that missing data causes: missing data can introduce a substantial amount of bias, make the handling and analysis of the data more arduous, and create reductions in efficiency. Because missing data can create problems for analyzing data, imputation is seen as a way to avoid pitfalls involved with listwise deletion of cases that have missing values. That is to say, when one or more values are missing for a case, most statistical packages default to discarding any case that has a missing value, which may introduce bias or affect the representativeness of the results. Imputation preserves all cases by replacing missing data with an estimated value based on other available information. Once all missing values h ...
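
To make the contrast between listwise deletion and imputation concrete, here is a small sketch. It uses column-mean imputation, which is only one simple choice of "estimated value" and is an assumption of this example rather than something the text prescribes; missing values are represented by `None`, and the data are invented.

```python
from statistics import mean
from typing import List, Optional, Tuple

Row = Tuple[Optional[float], ...]


def listwise_delete(rows: List[Row]) -> List[Row]:
    """Drop every case that has at least one missing value."""
    return [r for r in rows if all(v is not None for v in r)]


def mean_impute(rows: List[Row]) -> List[Tuple[float, ...]]:
    """Replace each missing value with the mean of the observed values in its column.

    Raises statistics.StatisticsError if a column has no observed values.
    """
    n_cols = len(rows[0])
    col_means = [
        mean(r[j] for r in rows if r[j] is not None) for j in range(n_cols)
    ]
    return [
        tuple(col_means[j] if v is None else v for j, v in enumerate(r))
        for r in rows
    ]


rows = [(1.0, 10.0), (2.0, None), (None, 30.0), (3.0, 20.0)]
print(listwise_delete(rows))  # only the two complete cases remain
print(mean_impute(rows))      # all four cases are kept
```

Listwise deletion halves this toy data set, while imputation keeps every case at the cost of inserting values that were never observed.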

Resampling (statistics)

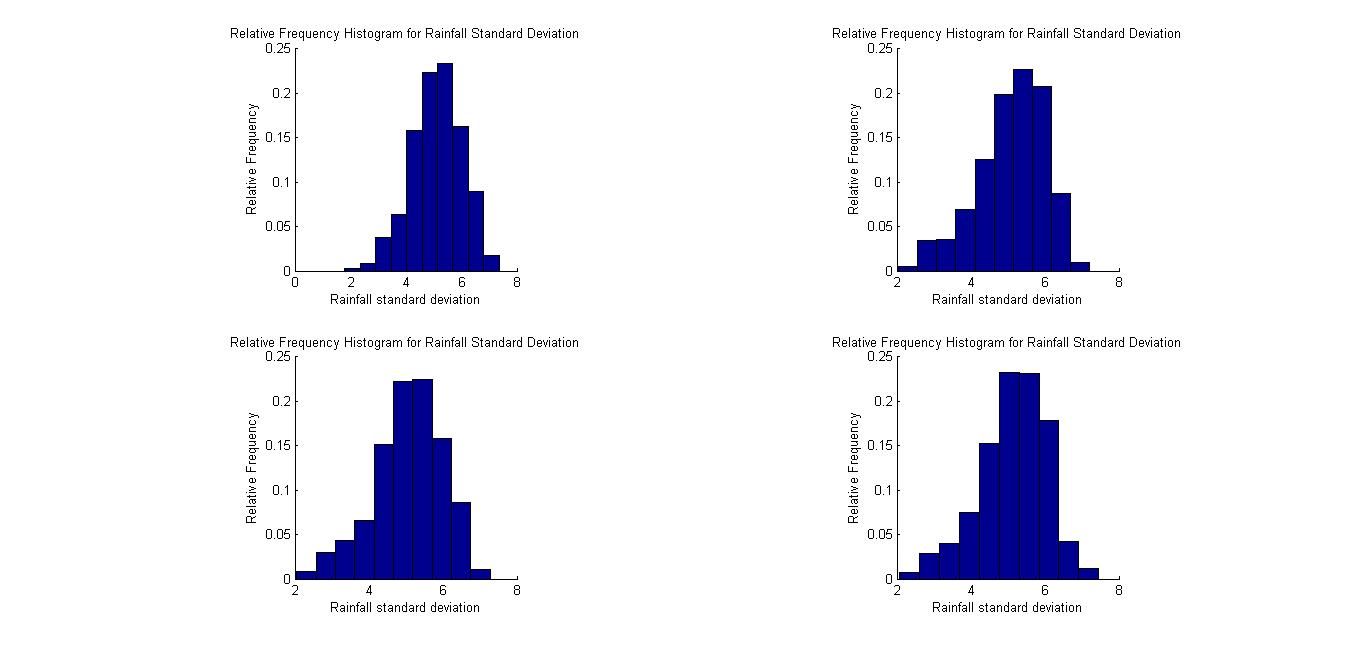

In statistics, resampling is the creation of new samples based on one observed sample. Resampling methods are:

# Permutation tests (also re-randomization tests)
# Bootstrapping
# Cross validation

Permutation tests

Permutation tests rely on resampling the original data assuming the null hypothesis. Based on the resampled data it can be concluded how likely the original data is to occur under the null hypothesis.

Bootstrap

Bootstrapping is a statistical method for estimating the sampling distribution of an estimator by sampling with replacement from the original sample, most often with the purpose of deriving robust estimates of standard errors and confidence intervals of a population parameter like a mean, median, proportion, odds ratio, correlation coefficient or regression coefficient. It has been called the plug-in principle; see Logan, J. David and Wolesensky, William R., Mathematical methods in biology, Pure and Applied Mathematics: a Wiley-interscience Series of Texts, Mon ...
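
As an illustration of a permutation test, the sketch below compares two small groups by the absolute difference in their means and enumerates every possible reassignment of the pooled values to the two groups. Exhaustive enumeration is only practical for very small samples; the test statistic, the two-sided p-value, and the data are choices made for this example.

```python
from itertools import combinations
from statistics import mean
from typing import Sequence


def permutation_test_mean_diff(x: Sequence[float], y: Sequence[float]) -> float:
    """Exact two-sided permutation p-value for the difference in group means."""
    pooled = list(x) + list(y)
    observed = abs(mean(x) - mean(y))
    as_extreme = 0
    total = 0
    # Under the null hypothesis the group labels are exchangeable, so every
    # way of choosing len(x) pooled values as "group x" is equally likely.
    for idx in combinations(range(len(pooled)), len(x)):
        chosen = set(idx)
        group_x = [pooled[i] for i in chosen]
        group_y = [pooled[i] for i in range(len(pooled)) if i not in chosen]
        # Small tolerance guards against floating-point ties.
        if abs(mean(group_x) - mean(group_y)) >= observed - 1e-12:
            as_extreme += 1
        total += 1
    return as_extreme / total


p_value = permutation_test_mean_diff([1.0, 2.0, 3.0], [10.0, 11.0, 12.0])
print(p_value)
```

With three values per group there are 20 possible splits, and only the observed split and its mirror image are as extreme as the data, so the p-value here is 2/20.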

Bootstrapping (statistics)

Bootstrapping is any test or metric that uses random sampling with replacement (e.g. mimicking the sampling process), and falls under the broader class of resampling methods. Bootstrapping assigns measures of accuracy (bias, variance, confidence intervals, prediction error, etc.) to sample estimates. This technique allows estimation of the sampling distribution of almost any statistic using random sampling methods. Bootstrapping estimates the properties of an estimand (such as its variance) by measuring those properties when sampling from an approximating distribution. One standard choice for an a ...
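
A minimal sketch of the idea: draw many resamples with replacement from the observed data, recompute the statistic on each, and use the spread of those replicates as a measure of accuracy. The function name, the default of 1000 resamples, the fixed seed, and the data are all assumptions made for illustration.

```python
import random
from statistics import mean, stdev
from typing import Callable, Sequence


def bootstrap_standard_error(
    data: Sequence[float],
    statistic: Callable[[Sequence[float]], float] = mean,
    n_resamples: int = 1000,
    seed: int = 0,
) -> float:
    """Estimate the standard error of `statistic` by resampling with replacement."""
    rng = random.Random(seed)  # local generator so results are reproducible
    n = len(data)
    replicates = []
    for _ in range(n_resamples):
        # Each resample has the same size as the original sample.
        resample = [data[rng.randrange(n)] for _ in range(n)]
        replicates.append(statistic(resample))
    # The standard deviation of the replicates approximates the standard error.
    return stdev(replicates)


observations = [2.0, 4.0, 4.0, 5.0, 7.0, 9.0]
print(bootstrap_standard_error(observations))
```

Passing a different `statistic`, such as `statistics.median`, reuses the same procedure for an estimator whose standard error has no simple closed form.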

Bayesian Probability

Bayesian probability is an interpretation of the concept of probability, in which, instead of frequency or propensity of some phenomenon, probability is interpreted as reasonable expectation representing a state of knowledge or as quantification of a personal belief. The Bayesian interpretation of probability can be seen as an extension of propositional logic that enables reasoning with hypotheses; that is, with propositions whose truth or falsity is unknown. In the Bayesian view, a probability is assigned to a hypothesis, whereas under frequentist inference, a hypothesis is typically tested without being assigned a probability. Bayesian probability belongs to the category of evidential probabilities; to evaluate the probability of a hypothesis, the Bayesian probabilist specifies a prior probability. This, in turn, is then updated to a posterior probability in the light of new, re ...

Independence (probability theory)

Independence is a fundamental notion in probability theory, as in statistics and the theory of stochastic processes. Two events are independent, statistically independent, or stochastically independent if, informally speaking, the occurrence of one does not affect the probability of occurrence of the other or, equivalently, does not affect the odds. Similarly, two random variables are independent if the realization of one does not affect the probability distribution of the other. When dealing with collections of more than two events, two notions of independence need to be distinguished. The events are called pairwise independent if any two events in the collection are independent of each other, while mutual independence (or collective independence) of events means, informally speaking, that each event is independent of any combination of other events in the collection. A similar notion exists for collections of random variables. Mutual independence implies pairwise independence ...
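
The difference between pairwise and mutual independence can be checked exactly on a small sample space. The sketch below uses a standard textbook example that is not taken from the text above: two fair coin flips, with events "first flip is heads", "second flip is heads", and "both flips agree". All names are illustrative.

```python
from fractions import Fraction
from itertools import combinations, product
from math import prod

# Four equally likely outcomes of two fair coin flips.
OUTCOMES = list(product("HT", repeat=2))


def prob(event) -> Fraction:
    """Exact probability of an event given as a predicate on outcomes."""
    return Fraction(sum(1 for o in OUTCOMES if event(o)), len(OUTCOMES))


def first_heads(o):
    return o[0] == "H"


def second_heads(o):
    return o[1] == "H"


def flips_agree(o):
    return o[0] == o[1]


def is_independent_family(events, sizes) -> bool:
    """Check P(intersection) == product of P(event) for all subsets of the given sizes."""
    for r in sizes:
        for subset in combinations(events, r):
            joint = prob(lambda o: all(e(o) for e in subset))
            if joint != prod(prob(e) for e in subset):
                return False
    return True


events = [first_heads, second_heads, flips_agree]
pairwise = is_independent_family(events, sizes=[2])
mutual = is_independent_family(events, sizes=[2, 3])
print(pairwise, mutual)  # True False
```

Each pair of events multiplies correctly (1/4 = 1/2 · 1/2), but all three occur together with probability 1/4 rather than 1/8, so the collection is pairwise but not mutually independent.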

Daniel G

Daniel is a masculine given name and a surname of Hebrew origin. It means "God is my judge" (Hanks, Hardcastle and Hodges, Oxford Dictionary of First Names, Oxford University Press, 2nd edition, p. 68) (cf. Gabriel, "God is my strength"), and derives from two early biblical figures, primary among them Daniel from the Book of Daniel. It is a common given name for males, and is also used as a surname. It is also the basis for various derived given names and surnames.

Background

The name evolved into over 100 different spellings in countries around the world. Nicknames (Dan, Danny) are common in both English and Hebrew; "Dan" may also be a complete given name rather than a nickname. The name "Daniil" (Даниил) is common in Russia. Feminine versions (Danielle, Danièle, Daniela, Daniella, Dani, Danitza) are prevalent as well. It has been particularly well-used in Ireland. The Dutch names "Daan" and "Daniël" are also variations of Daniel. A related surname develo ...

Design Effect

In survey methodology, the design effect (generally denoted as D_{eff} or D_{eft}^2) is a measure of the expected impact of a sampling design on the variance of an estimator for some parameter. It is calculated as the ratio of the variance of an estimator based on a sample from an (often) complex sampling design, to the variance of an alternative estimator based on a simple random sample (SRS) of the same number of elements. The Deff (be it estimated, or known a priori) can be used to adjust the variance of an estimator in cases where the sample is not drawn using simple random sampling. It may also be useful in sample size calculations and for quantifying the representativeness of a sample. The term "design effect" was coined by Leslie Kish in 1965. The design effect is a positive real number that indicates an inflation (D_{eff} > 1) or deflation (D_{eff} < 1) of the variance. A general formula for the (theoretical) design effect of estimating a total (not the mean), for some design, is given in Cochran 1977.

Deft

A r ...
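
The ratio definition and the variance adjustment can be written down directly. This is a small sketch with made-up variances; the function names are hypothetical, and taking Deft as the square root of Deff is an assumption based on the notation D_{eft}^2 used above.

```python
from math import sqrt


def design_effect(var_design: float, var_srs: float) -> float:
    """Deff: variance under the actual design divided by variance under SRS
    with the same number of elements."""
    if var_design < 0 or var_srs <= 0:
        raise ValueError("variances must be non-negative, and var_srs positive")
    return var_design / var_srs


def adjust_srs_variance(var_srs: float, deff: float) -> float:
    """Scale an SRS-based variance by Deff to reflect the actual design."""
    return deff * var_srs


def deft(deff: float) -> float:
    """Assumed relation Deft = sqrt(Deff), i.e. the ratio of standard errors."""
    return sqrt(deff)


deff = design_effect(var_design=3.0, var_srs=2.0)
print(deff)                            # 1.5, variance inflated relative to SRS
print(adjust_srs_variance(2.0, deff))  # 3.0
print(deft(4.0))                       # 2.0
```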