
Heteroskedasticity-consistent Standard Errors

The topic of heteroskedasticity-consistent (HC) standard errors arises in statistics and econometrics in the context of linear regression and time series analysis. These are also known as heteroskedasticity-robust standard errors (or simply robust standard errors) and as Eicker–Huber–White standard errors (also Huber–White standard errors or White standard errors), to recognize the contributions of Friedhelm Eicker, Peter J. Huber, and Halbert White. In regression and time-series modelling, basic forms of models assume that the errors or disturbances $u_i$ have the same variance across all observation points. When this is not the case, the errors are said to be heteroskedastic, or to have heteroskedasticity, and this behaviour will be reflected in the residuals $\widehat{u}_i$ estimated from a fitted model. Heteroskedasticity-consistent standard errors are used to allow the fitting of a model that does contain heteroskedastic residuals. The first such appro ...
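Take a single regressor with an intercept, $y_i = a + b x_i + u_i$. In that case the basic HC0 form of the estimator has a closed form for the variance of the slope: $\sum_i (x_i-\bar x)^2 \widehat{u}_i^2 \big/ \big(\sum_i (x_i-\bar x)^2\big)^2$. Each squared residual stands in for its own error variance. The following is a minimal plain-Python sketch that compares it with the classical standard error, which assumes one common variance. The function name and the restriction to one regressor are illustrative assumptions. A general version would use the matrix sandwich $(X'X)^{-1} X' \operatorname{diag}(\widehat{u}_i^2)\, X (X'X)^{-1}$, and refined variants (HC1 to HC3) rescale the residuals.

```python
import math


def slope_se_classical_and_hc0(x, y):
    """Fit y = a + b*x by OLS; return the fit plus classical and HC0 SEs of the slope b."""
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need at least 3 paired observations")
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    x_dev = [xi - x_bar for xi in x]
    s_xx = sum(d * d for d in x_dev)
    slope = sum(d * yi for d, yi in zip(x_dev, y)) / s_xx
    intercept = y_bar - slope * x_bar
    resid = [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]

    # Classical SE: one common error variance, estimated by RSS / (n - 2).
    s2 = sum(e * e for e in resid) / (n - 2)
    var_classical = s2 / s_xx

    # HC0 (Eicker-Huber-White) sandwich for the slope:
    # sum (x_i - x_bar)^2 * e_i^2 / Sxx^2, no common-variance assumption.
    var_hc0 = sum(d * d * e * e for d, e in zip(x_dev, resid)) / s_xx ** 2

    return {
        "intercept": intercept,
        "slope": slope,
        "se_classical": math.sqrt(var_classical),
        "se_hc0": math.sqrt(var_hc0),
    }


fit = slope_se_classical_and_hc0([0, 1, 2, 3], [0, 2, 1, 5])
print(fit)
```

In this toy data set the two variance estimates are 0.42 (classical) and 0.1076 (HC0). They differ because HC0 weights each squared residual by how far its $x_i$ lies from $\bar x$.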

Statistics

Statistics (from German: Statistik, "description of a state, a country") is the discipline that concerns the collection, organization, analysis, interpretation, and presentation of data. In applying statistics to a scientific, industrial, or social problem, it is conventional to begin with a statistical population or a statistical model to be studied. Populations can be diverse groups of people or objects such as "all people living in a country" or "every atom composing a crystal". Statistics deals with every aspect of data, including the planning of data collection in terms of the design of surveys and experiments (Dodge, Y. (2006), The Oxford Dictionary of Statistical Terms, Oxford University Press). When census data cannot be collected, statisticians collect data by developing specific experiment designs and survey samples. Representative sampling as ...

BLUE

Blue is one of the three primary colours in the RYB colour model (traditional colour theory), as well as in the RGB (additive) colour model. It lies between violet and cyan on the spectrum of visible light. The eye perceives blue when observing light with a dominant wavelength between approximately 450 and 495 nanometres. Most blues contain a slight mixture of other colours; azure contains some green, while ultramarine contains some violet. The clear daytime sky and the deep sea appear blue because of an optical effect known as Rayleigh scattering. An optical effect called the Tyndall effect explains blue eyes. Distant objects appear more blue because of another optical effect called aerial perspective. Blue has been an important colour in art and decoration since ancient times. The semi-precious stone lapis lazuli was used in ancient Egypt for jewellery and ornament and later, in the Renaissance, to make the pigment ultramarine, the most expensive of all pigments. In the ...

Weighted Least Squares

Weighted least squares (WLS), also known as weighted linear regression, is a generalization of ordinary least squares and linear regression in which knowledge of the variance of observations is incorporated into the regression. WLS is also a specialization of generalized least squares.

A special case of generalized least squares called weighted least squares can be used when all the off-diagonal entries of $\Omega$, the covariance matrix of the residuals, are null; the variances of the observations (along the covariance matrix diagonal) may still be unequal (heteroscedasticity). The fit of a model to a data point is measured by its residual, $r_i$, defined as the difference between a measured value of the dependent variable, $y_i$, and the value predicted by the model, $f(x_i, \boldsymbol\beta)$:

$$r_i(\boldsymbol\beta) = y_i - f(x_i, \boldsymbol\beta).$$

If the errors are uncorrelated and have equal variance, then the function S(\boldsymbol\beta) = \sum_i r_i(\boldsymbol\b ...
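Suppose the variances $\sigma_i^2$ of the observations are known. Then WLS minimises the weighted criterion $S(\boldsymbol\beta) = \sum_i w_i\, r_i(\boldsymbol\beta)^2$ with $w_i = 1/\sigma_i^2$, so that noisier observations count for less. This is a minimal sketch for the straight-line model $f(x, \boldsymbol\beta) = \beta_0 + \beta_1 x$, where the minimiser has a closed form in terms of weighted means. The function name `wls_line`, the straight-line model, and the example variances are assumptions for illustration.

```python
def wls_line(x, y, w):
    """Minimise S(beta) = sum_i w_i * r_i(beta)^2 for f(x, beta) = b0 + b1*x."""
    if not (len(x) == len(y) == len(w)):
        raise ValueError("x, y and w must have the same length")
    sw = sum(w)
    x_bar = sum(wi * xi for wi, xi in zip(w, x)) / sw  # weighted mean of x
    y_bar = sum(wi * yi for wi, yi in zip(w, y)) / sw  # weighted mean of y
    s_xx = sum(wi * (xi - x_bar) ** 2 for wi, xi in zip(w, x))
    s_xy = sum(wi * (xi - x_bar) * (yi - y_bar) for wi, xi, yi in zip(w, x, y))
    b1 = s_xy / s_xx
    b0 = y_bar - b1 * x_bar
    return b0, b1


# Assumed known error variances; the weights are their reciprocals.
variances = [1.0, 1.0, 0.5]
weights = [1.0 / v for v in variances]
b0, b1 = wls_line([0, 1, 2], [0, 1, 4], weights)
print(b0, b1)  # -4/11 and 23/11: the low-variance point (2, 4) pulls the line up
```

Multiplying every weight by the same constant leaves the estimates unchanged, so only the relative variances need to be known. With all weights equal, the fit reduces to ordinary least squares.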

Linear Probability Model

In statistics, a linear probability model (LPM) is a special case of a binary regression model. Here the dependent variable for each observation takes values which are either 0 or 1. The probability of observing a 0 or 1 in any one case is treated as depending on one or more explanatory variables. For the linear probability model, this relationship is a particularly simple one, and allows the model to be fitted by linear regression.

The model assumes that, for a binary outcome (Bernoulli trial) $Y$ and its associated vector of explanatory variables $X$,

$$\Pr(Y = 1 \mid X = x) = x'\beta.$$

For this model,

$$E[Y \mid X] = \Pr(Y = 1 \mid X) = x'\beta,$$

and hence the vector of parameters $\beta$ can be estimated using least squares. This method of fitting would be inefficient, and can be improved by adopting an iterative scheme based on weighted least squares, in which the model from the previous iteration is used to supply estimates of the conditional variances, $\operatorname{Var}(Y \mid X = x)$, which would vary betwee ...
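The iterative scheme for one explanatory variable might look like the sketch below. It starts from ordinary least squares. At each step it uses the previous fit to estimate $\operatorname{Var}(Y \mid X = x) = p(1-p)$, the variance of a 0/1 outcome with success probability $p = x'\beta$, and then refits by weighted least squares with weights $1/(p(1-p))$. The function names, the fixed iteration count, and the rule of clipping fitted probabilities into $(\varepsilon, 1-\varepsilon)$ are all assumptions made here. The text does not say how fitted values outside $[0, 1]$ should be handled.

```python
def wls_line(x, y, w):
    """Weighted least-squares fit of y = b0 + b1*x with weights w."""
    sw = sum(w)
    x_bar = sum(wi * xi for wi, xi in zip(w, x)) / sw
    y_bar = sum(wi * yi for wi, yi in zip(w, y)) / sw
    s_xx = sum(wi * (xi - x_bar) ** 2 for wi, xi in zip(w, x))
    s_xy = sum(wi * (xi - x_bar) * (yi - y_bar) for wi, xi, yi in zip(w, x, y))
    b1 = s_xy / s_xx
    return y_bar - b1 * x_bar, b1


def fit_lpm_iterated_wls(x, y, n_iter=25, eps=1e-3):
    """Linear probability model Pr(Y = 1 | X = x) = b0 + b1*x, refined by iterated WLS."""
    b0, b1 = wls_line(x, y, [1.0] * len(x))  # start from ordinary least squares
    for _ in range(n_iter):
        # Fitted probabilities from the previous iteration, clipped into (0, 1) because
        # a linear fit can stray outside that range (the clipping rule is an assumption).
        p = [min(max(b0 + b1 * xi, eps), 1.0 - eps) for xi in x]
        # For a 0/1 outcome, Var(Y | X = x) = p(1 - p); weight by its reciprocal.
        w = [1.0 / (pi * (1.0 - pi)) for pi in p]
        b0, b1 = wls_line(x, y, w)
    return b0, b1


x = [0, 0, 0, 0, 1, 1, 1, 1]
y = [0, 0, 0, 1, 0, 1, 1, 1]
print(fit_lpm_iterated_wls(x, y))  # close to (0.25, 0.5): the group proportions
```

With only two distinct values of $x$ the model is saturated, so every iteration reproduces the group proportions. The reweighting only changes the estimates when $x$ takes more values.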

Bootstrapping (statistics)

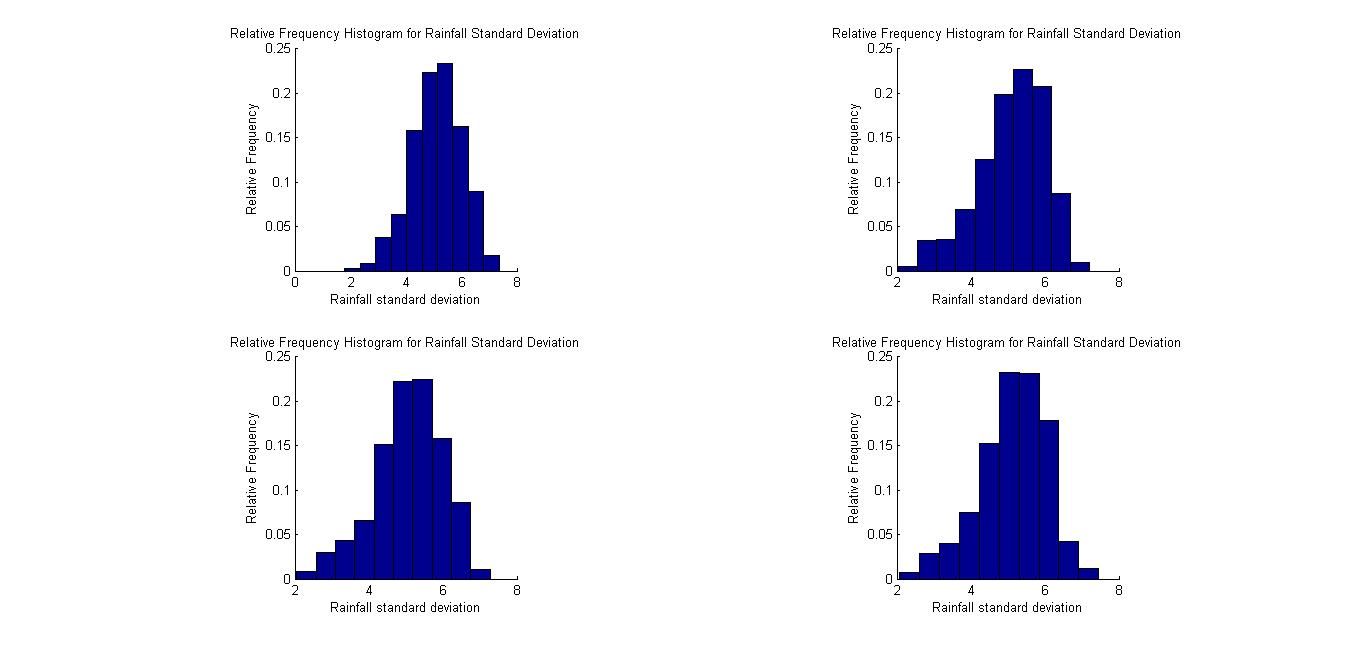

Bootstrapping is any test or metric that uses random sampling with replacement (i.e., mimicking the sampling process), and falls under the broader class of resampling methods. Bootstrapping assigns measures of accuracy (bias, variance, confidence intervals, prediction error, etc.) to sample estimates. This technique allows estimation of the sampling distribution of almost any statistic using random sampling methods. Bootstrapping estimates the properties of an estimator (such as its variance) by measuring those properties when sampling from an approximating distribution. One standard choice for an a ...
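This is a minimal sketch of the nonparametric bootstrap, assuming independent, identically distributed observations. It draws resamples of the same size with replacement, recomputes the statistic on each one, and takes the standard deviation of those replicates as the estimated standard error. The function name `bootstrap_se`, the default of 2000 resamples, and the fixed seed are illustrative choices, not prescribed by the text.

```python
import random
import statistics


def bootstrap_se(data, stat=statistics.mean, n_boot=2000, seed=0):
    """Bootstrap standard error of `stat`: resample with replacement, recompute, take the SD."""
    if len(data) < 2:
        raise ValueError("need at least two observations")
    rng = random.Random(seed)  # seeded so the result is reproducible
    n = len(data)
    # Each replicate applies the statistic to a resample of size n drawn with replacement.
    replicates = [stat(rng.choices(data, k=n)) for _ in range(n_boot)]
    return statistics.stdev(replicates)


sample = list(range(1, 21))
print(bootstrap_se(sample))                     # SE of the mean
print(bootstrap_se(sample, statistics.median))  # SE of the median, same loop
```

Because `stat` is a parameter, the same loop serves for the median or any other statistic, with no closed-form variance formula required. For the mean, the result should sit near the plug-in value $\hat\sigma/\sqrt{n}$.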

Resampling (statistics)

In statistics, resampling is the creation of new samples based on one observed sample. Resampling methods are:

1. Permutation tests (also re-randomization tests)
2. Bootstrapping
3. Cross-validation

Permutation tests rely on resampling the original data assuming the null hypothesis. From the resampled data, one can judge how likely the original data are to occur under the null hypothesis.

Bootstrapping is a statistical method for estimating the sampling distribution of an estimator by sampling with replacement from the original sample, most often with the purpose of deriving robust estimates of standard errors and confidence intervals of a population parameter like a mean, median, proportion, odds ratio, correlation coefficient or regression coefficient. It has been called the plug-in principle (Logan, J. David and Wolesensky, Willian R., Mathematical methods in biology, Pure and Applied Mathematics: a Wiley-interscience Series of Texts, Mon ...)
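The permutation-test idea can be sketched as an exact two-sided test for a difference in means. Under the null hypothesis the group labels are exchangeable, so every way of splitting the pooled data into groups of the original sizes is equally likely. The p-value is the share of splits whose mean difference is at least as extreme as the observed one. The function name is hypothetical. Full enumeration is only practical for small samples; for larger ones a random subset of relabellings is typically used instead.

```python
from itertools import combinations


def permutation_test_mean_diff(a, b):
    """Exact two-sided permutation p-value for mean(b) - mean(a)."""
    pooled = list(a) + list(b)
    n, n_a = len(pooled), len(a)
    n_b = n - n_a
    if n_a == 0 or n_b == 0:
        raise ValueError("both groups must be non-empty")
    total = sum(pooled)
    observed = sum(b) / n_b - sum(a) / n_a
    extreme = 0
    n_splits = 0
    # Every choice of which n_a pooled values form group "a" is one relabelling.
    for idx in combinations(range(n), n_a):
        s_a = sum(pooled[i] for i in idx)
        diff = (total - s_a) / n_b - s_a / n_a
        n_splits += 1
        # Small tolerance guards against float round-off when diff ties the observed value.
        if abs(diff) >= abs(observed) - 1e-12:
            extreme += 1
    return extreme / n_splits


print(permutation_test_mean_diff([1, 2, 3], [4, 5, 6]))  # 2 of 20 splits -> 0.1
```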

Statistical Hypothesis Testing

A statistical hypothesis test is a method of statistical inference used to decide whether the data at hand sufficiently support a particular hypothesis. Hypothesis testing allows us to make probabilistic statements about population parameters.

While hypothesis testing was popularized early in the 20th century, early forms were used in the 1700s. The first use is credited to John Arbuthnot (1710), followed by Pierre-Simon Laplace (1770s), in analyzing the human sex ratio at birth.

Modern significance testing is largely the product of Karl Pearson (p-value, Pearson's chi-squared test), William Sealy Gosset (Student's t-distribution), and Ronald Fisher ("null hypothesis", analysis of variance, "significance test"), while hypothesis testing was developed by Jerzy Neyman and Egon Pearson (son of Karl). Ronald Fisher began his life in statistics as a Bayesian (Zabell 1992), but Fisher soon grew disenchanted with t ...
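Arbuthnot's 1710 argument is commonly retold as an early one-sided sign test. In London christening records, male births outnumbered female births in each of 82 consecutive years. If each year were equally likely to go either way, the probability of that outcome is $(1/2)^{82}$. The count of 82 years comes from that standard retelling rather than from the text above, and the function name is hypothetical. The sketch computes the exact upper binomial tail with integer arithmetic.

```python
from math import comb


def sign_test_upper_p(n, k):
    """One-sided sign test: P(at least k 'successes' out of n) when each has probability 1/2."""
    if not 0 <= k <= n:
        raise ValueError("need 0 <= k <= n")
    # Exact binomial tail, summed as integers before a single division by 2**n.
    return sum(comb(n, j) for j in range(k, n + 1)) / 2 ** n


# Arbuthnot-style example: more male than female births in all 82 years.
print(sign_test_upper_p(82, 82))  # 2**-82, about 2.07e-25
```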

Journal of Econometrics

The Journal of Econometrics is a scholarly journal in econometrics. It was first published in 1973. Its current managing editors are Serena Ng and Elie Tamer; Torben Andersen and Xiaohong Chen serve as editors. The journal publishes work dealing with estimation and other methodological aspects of the application of statistical inference to economic data, as well as papers dealing with the application of econometric techniques to economics. The journal also publishes a supplement called the Annals of Econometrics. Each issue of the Annals includes a collection of papers on a single topic selected by the editor of the issue. See also: Econometrics Journal.

Econometrics is the application of statistical methods to economic data in order to give empirical content to economic relationships (M. Hashem P ...)

Leverage (statistics)

In statistics, and in particular in regression analysis, leverage is a measure of how far away the independent variable values of an observation are from those of the other observations. High-leverage points, if any, are outliers with respect to the independent variables. That is, high-leverage points have no neighboring points in $\mathbb{R}^{p}$ space, where $p$ is the number of independent variables in a regression model. This makes the fitted model likely to pass close to a high-leverage observation. Hence high-leverage points have the potential to cause large changes in the parameter estimates when they are deleted, i.e., to be influential points. Although an influential point will typically have high leverage, a high-leverage point is not necessarily an influential point. The leverage is typically defined as the diagonal elements of the hat matrix.

Consider the linear regression model $y_i = \boldsymbol{x}_i^{\top}\boldsymbol{\beta} + \varepsilon_i$, $i = 1, 2, \ldots, n$. That is ...
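In matrix form the hat matrix is $H = X(X^{\top}X)^{-1}X^{\top}$, and the leverage of observation $i$ is $h_{ii}$. For a single regressor plus an intercept this reduces to $h_{ii} = 1/n + (x_i-\bar x)^2 / \sum_j (x_j-\bar x)^2$. The sketch below computes it directly; the function name is hypothetical. With several regressors one would form $H$ (or a QR factorisation of $X$) instead.

```python
def leverages(x):
    """Diagonal of the hat matrix for y = b0 + b1*x (one regressor plus intercept)."""
    n = len(x)
    x_bar = sum(x) / n
    s_xx = sum((xi - x_bar) ** 2 for xi in x)
    if s_xx == 0:
        raise ValueError("x must not be constant")
    # h_ii = 1/n + (x_i - x_bar)^2 / sum_j (x_j - x_bar)^2
    return [1.0 / n + (xi - x_bar) ** 2 / s_xx for xi in x]


h = leverages([0, 1, 2, 3, 20])
print(h)       # the isolated x = 20 gets by far the largest leverage
print(sum(h))  # trace of H: 2, the number of columns of X (intercept and slope)
```

The leverages sum to the number of columns of the design matrix. A point far from the others in $x$ therefore takes a large share of that fixed total, whatever its $y$ value.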

William Greene (economist)

William H. Greene (born January 16, 1951) is an American economist. He is the Robert Stansky Professor of Economics and Statistics at the Stern School of Business at New York University. In 1972, Greene graduated with a Bachelor of Science in business administration from Ohio State University. He also earned a master's degree (1974) and a Ph.D. (1976) in econometrics from the University of Wisconsin–Madison. Before accepting his position at NYU, Greene worked as a consultant for the Civil Aeronautics Board in Washington, D.C. Greene is the author of a popular graduate-level econometrics textbook, Econometric Analysis, which has reached its 8th edition. He is the founding editor-in-chief of the journal Foundations and Trends in Econometrics.

See also: LIMDEP. LIMDEP is an econometric and statistical software package with a variety of estimation tools. In addition to the core econometric tools for analysis of cross sections and time series, LIMDEP ...

Journal of Econometric Methods

A journal, from the Old French journal (meaning "daily"), may refer to:
* Bullet journal, a method of personal organization
* Diary, a record of what happened over the course of a day or other period
* Daybook, also known as a general journal, a daily record of financial transactions
* Logbook, a record of events important to the operation of a vehicle, facility, or otherwise
* Record (other)
* Transaction log, a chronological record of data processing
* Travel journal

In publishing, journal can refer to various periodicals or serials:
* Academic journal, an academic or scholarly periodical
** Scientific journal, an academic journal focusing on science
** Medical journal, an academic journal focusing on medicine
** Law review, a professional journal focusing on legal interpretation
* Magazine, non-academic or scholarly periodicals in general
** Trade magazine, a magazine of interest to those of a particular profession or trade
** Literary magazine, a magazine devoted to literat ...