
HPSG

Head-driven phrase structure grammar (HPSG) is a highly lexicalized, constraint-based grammar developed by Carl Pollard and Ivan Sag. It is a type of phrase structure grammar, as opposed to a dependency grammar, and it is the immediate successor to generalized phrase structure grammar. HPSG draws from other fields such as computer science (data type theory and knowledge representation) and uses Ferdinand de Saussure's notion of the sign. It uses a uniform formalism and is organized in a modular way, which makes it attractive for natural language processing. An HPSG grammar includes principles and grammar rules, as well as lexicon entries, which are normally not considered to belong to a grammar. The formalism is based on lexicalism. This means that the lexicon is more than just a list of entries; it is in itself richly structured. Individual entries are marked with types, and the types form a hierarchy. Early versions of the grammar were very lexicalized, with few grammatical rules (schemata). More r ...

ID/LP Grammar

ID/LP grammars are a subset of phrase structure grammars, differentiated from other formal grammars by distinguishing between immediate dominance (ID) and linear precedence (LP) constraints. Whereas traditional phrase structure rules incorporate dominance and precedence into a single rule, ID/LP grammars maintain separate rule sets which need not be processed simultaneously. ID/LP grammars are used in computational linguistics. For example, a typical phrase structure rule such as S → NP VP indicates that an S-node dominates an NP-node and a VP-node, and that the NP precedes the VP in the surface string. In ID/LP grammars, this rule would only indicate dominance, and a linear precedence statement, such as NP ≺ VP, would also be given. The idea first came to prominence as part of generalized phrase structure grammar; the ID/LP approach is also used in head-driven phrase structure grammar, lexical functional grammar, and other unification grammars. Current work ...
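
The separation of dominance from precedence described above can be illustrated with a minimal Python sketch. It is not taken from any ID/LP implementation: the names `ID_RULES`, `LP_STATEMENTS`, `satisfies_lp`, and `linearizations` are hypothetical, and the sketch assumes each rule lists distinct daughter categories.

```python
from itertools import permutations

# Hypothetical toy grammar. An ID rule maps a mother category to an
# unordered collection of daughters; an LP statement is an
# (earlier, later) pair of categories.
ID_RULES = {"S": ("NP", "VP")}
LP_STATEMENTS = {("NP", "VP")}


def satisfies_lp(order, lp_statements):
    """Return True if the daughter order violates no LP statement."""
    for earlier, later in lp_statements:
        if earlier in order and later in order:
            if order.index(earlier) > order.index(later):
                return False
    return True


def linearizations(mother, id_rules, lp_statements):
    """List every ordering of the mother's daughters allowed by the LP statements."""
    daughters = id_rules[mother]
    return [
        order
        for order in permutations(daughters)
        if satisfies_lp(order, lp_statements)
    ]


# With the LP statement NP ≺ VP, only one order survives.
print(linearizations("S", ID_RULES, LP_STATEMENTS))
# Without any LP statement, the ID rule alone licenses both orders.
print(linearizations("S", ID_RULES, set()))
```

Keeping the two rule sets apart means that a freer word order can be modeled by dropping an LP statement, without touching the dominance rules.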

Ivan Sag

Ivan Andrew Sag (November 9, 1949 – September 10, 2013) was an American linguist and cognitive scientist. He did research in syntax and semantics as well as work in computational linguistics. Personal life: Born in Alliance, Ohio on November 9, 1949, Sag attended the Mercersburg Academy but was expelled shortly before graduation. He received a BA from the University of Rochester, an MA from the University of Pennsylvania (where he studied comparative Indo-European languages, Sanskrit, and sociolinguistics), and a PhD from MIT in 1976, writing his dissertation (advised by Noam Chomsky) on ellipsis. Sag received a Mellon Fellowship at Stanford University in 1978–79, and remained in California from that point on. He was appointed to a position in Linguistics at Stanford, and earned tenure there. He was married to sociolinguist Penelope Eckert. Academic work: Sag made notable contributions to the fields of syntax, semantics, pragmatics, and language processing. His early ...

Constraint-based Grammar

Model-theoretic grammars, also known as constraint-based grammars, contrast with generative grammars in the way they define sets of sentences: they state constraints on syntactic structure rather than providing operations for generating syntactic objects. A generative grammar provides a set of operations such as rewriting, insertion, deletion, movement, or combination, and is interpreted as a definition of the set of all and only the objects that these operations are capable of producing through iterative application. A model-theoretic grammar simply states a set of conditions that an object must meet, and can be regarded as defining the set of all and only the structures of a certain sort that satisfy all of the constraints. The approach applies the mathematical techniques of model theory to the task of syntactic description: a grammar is a theory in the logician's sense (a consistent set of statements) and the well-formed structures are the models that satisfy the theory. Exampl ...
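
The idea that a grammar is a set of conditions, and that the well-formed structures are exactly those satisfying all of them, can be sketched in Python. This is an illustration only: the dictionary representation of a clause and the two constraints (`subject_verb_agree`, `has_finite_verb`) are hypothetical and far simpler than any real grammar.

```python
# Each constraint is a predicate over a candidate structure.
# The clause representation (nested dicts) is an assumption of this sketch.
def subject_verb_agree(clause):
    """The subject and the verb must carry the same number value."""
    return clause["subject"]["number"] == clause["verb"]["number"]


def has_finite_verb(clause):
    """The verb must be marked as finite."""
    return clause["verb"]["finite"]


CONSTRAINTS = [subject_verb_agree, has_finite_verb]


def is_well_formed(clause, constraints=CONSTRAINTS):
    """A structure is well formed if and only if it satisfies every constraint."""
    return all(constraint(clause) for constraint in constraints)


good = {
    "subject": {"form": "she", "number": "singular"},
    "verb": {"form": "sleeps", "number": "singular", "finite": True},
}
bad = {
    "subject": {"form": "she", "number": "singular"},
    "verb": {"form": "sleep", "number": "plural", "finite": True},
}

print(is_well_formed(good))
print(is_well_formed(bad))
```

Nothing in the sketch generates structures; the grammar only checks candidates, which mirrors the contrast with generative grammars drawn above.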

Syntax

In linguistics, syntax is the study of how words and morphemes combine to form larger units such as phrases and sentences. Central concerns of syntax include word order, grammatical relations, hierarchical sentence structure (constituency), agreement, the nature of crosslinguistic variation, and the relationship between form and meaning (semantics). There are numerous approaches to syntax that differ in their central assumptions and goals. Etymology: The word ''syntax'' comes from an Ancient Greek word meaning "coordination", which consists of ''syn'', "together", and ''táxis'', "ordering". Topics: The field of syntax contains a number of topics that a syntactic theory is often designed to handle. The relation between the topics is treated differently in different theories, and some of them may not be considered distinct but instead derived from one another (e.g. word order can be seen as the result of movement rules derived from grammatical relations). Se ...

Feature Structure

In phrase structure grammars, such as generalised phrase structure grammar, head-driven phrase structure grammar and lexical functional grammar, a feature structure is essentially a set of attribute–value pairs. For example, the attribute named ''number'' might have the value ''singular''. The value of an attribute may be either atomic, e.g. the symbol ''singular'', or complex (most commonly a feature structure, but also a list or a set). A feature structure can be represented as a directed acyclic graph (DAG), with the nodes corresponding to the variable values and the paths to the variable names. Operations defined on feature structures, e.g. unification, are used extensively in phrase structure grammars. In most theories (e.g. HPSG), operations are strictly speaking defined over equations describing feature structures and not over feature structures themselves, though feature structures are usually used in informal exposition. Often, feature structures are written like this ...
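
Unification of attribute–value structures can be sketched in Python as follows. The sketch makes several simplifying assumptions: feature structures are nested dictionaries, atomic values are strings, and structure sharing (reentrancy), lists, sets, and types are left out. The function name `unify` and its `None`-on-failure convention are choices made for this illustration, not part of any particular grammar toolkit.

```python
def unify(fs1, fs2):
    """Unify two feature structures.

    Feature structures are nested dicts whose leaves are atomic strings.
    Returns a new merged structure, or None if the two are incompatible.
    """
    if isinstance(fs1, dict) and isinstance(fs2, dict):
        result = dict(fs1)  # shallow copy so fs1 is left unchanged
        for attribute, value in fs2.items():
            if attribute in result:
                merged = unify(result[attribute], value)
                if merged is None:
                    return None  # conflicting values somewhere below
                result[attribute] = merged
            else:
                result[attribute] = value
        return result
    # Atomic values (or an atom against a complex value) unify only if equal.
    return fs1 if fs1 == fs2 else None


noun = {"category": "noun", "agreement": {"number": "singular"}}
third_person = {"agreement": {"person": "third"}}
plural = {"agreement": {"number": "plural"}}

print(unify(noun, third_person))
print(unify(noun, plural))
```

In the first call the two structures carry compatible information, so the result combines the ''number'' and ''person'' values under ''agreement''. In the second call the values ''singular'' and ''plural'' clash and unification fails.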

Attribute Value Matrix

In phrase structure grammars, such as generalised phrase structure grammar, head-driven phrase structure grammar and lexical functional grammar, a feature structure is essentially a set of attribute–value pairs. For example, the attribute named ''number'' might have the value ''singular''. The value of an attribute may be either atomic, e.g. the symbol ''singular'', or complex (most commonly a feature structure, but also a list or a set). A feature structure can be represented as a directed acyclic graph (DAG), with the nodes corresponding to the variable values and the paths to the variable names. Operations defined on feature structures, e.g. unification, are used extensively in phrase structure grammars. In most theories (e.g. HPSG), operations are strictly speaking defined over equations describing feature structures and not over feature structures themselves, though feature structures are usually used in informal exposition. Often, feature structures are written like this ...

Natural Language Processing

Natural language processing (NLP) is an interdisciplinary subfield of linguistics, computer science, and artificial intelligence concerned with the interactions between computers and human language, in particular how to program computers to process and analyze large amounts of natural language data. The goal is a computer capable of "understanding" the contents of documents, including the contextual nuances of the language within them. The technology can then accurately extract information and insights contained in the documents as well as categorize and organize the documents themselves. Challenges in natural language processing frequently involve speech recognition, natural-language understanding, and natural-language generation. History: Natural language processing has its roots in the 1950s. Already in 1950, Alan Turing published an article titled "Computing Machinery and Intelligence" which proposed what is now called the Turing test as a criterion of intelligence, t ...

Carl Pollard

Carl Jesse Pollard (born June 28, 1947) is a Professor of Linguistics at the Ohio State University. He is the inventor of head grammar and higher-order grammar, as well as co-inventor of head-driven phrase structure grammar (HPSG). He is currently also working on convergent grammar (CVG). He has written numerous books and articles on formal syntax and semantics. He received his Ph.D. from Stanford University.

Parsing

Parsing, syntax analysis, or syntactic analysis is the process of analyzing a string of symbols, either in natural language, computer languages or data structures, conforming to the rules of a formal grammar. The term ''parsing'' comes from Latin ''pars'' (''orationis''), meaning part (of speech). The term has slightly different meanings in different branches of linguistics and computer science. Traditional sentence parsing is often performed as a method of understanding the exact meaning of a sentence or word, sometimes with the aid of devices such as sentence diagrams. It usually emphasizes the importance of grammatical divisions such as subject and predicate. Within computational linguistics the term is used to refer to the formal analysis by a computer of a sentence or other string of words into its constituents, resulting in a parse tree showing their syntactic relation to each other, which may also contain semantic and other information (p-values). Some parsing algor ...
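
As a rough illustration of analyzing a string of words into constituents and returning a parse tree, the following Python sketch uses a CYK-style chart over a toy grammar in Chomsky normal form. The grammar (`BINARY_RULES`), the word list (`LEXICON`), and the nested-tuple tree format are all hypothetical choices for this example. The chart keeps only one tree per category and span, so ambiguity is not represented.

```python
# Hypothetical toy grammar in Chomsky normal form.
# BINARY_RULES maps a pair of daughter categories to their mother category.
BINARY_RULES = {("NP", "VP"): "S", ("Det", "N"): "NP", ("V", "NP"): "VP"}
# LEXICON maps each known word to a single category.
LEXICON = {"the": "Det", "dog": "N", "cat": "N", "chased": "V"}


def parse(words):
    """Return a nested-tuple parse tree rooted in S, or None if there is none."""
    n = len(words)
    # chart[(i, j)] maps a category to one tree spanning words[i:j].
    chart = {}
    for i, word in enumerate(words):
        category = LEXICON.get(word)
        chart[(i, i + 1)] = {category: (category, word)} if category else {}
    # Build larger spans from pairs of adjacent smaller spans.
    for width in range(2, n + 1):
        for start in range(0, n - width + 1):
            end = start + width
            cell = {}
            for split in range(start + 1, end):
                for left_cat, left_tree in chart[(start, split)].items():
                    for right_cat, right_tree in chart[(split, end)].items():
                        mother = BINARY_RULES.get((left_cat, right_cat))
                        if mother and mother not in cell:
                            cell[mother] = (mother, left_tree, right_tree)
            chart[(start, end)] = cell
    return chart.get((0, n), {}).get("S")


print(parse("the dog chased the cat".split()))
print(parse("chased the dog the cat".split()))
```

The first call yields a tree in which S dominates an NP (''the dog'') and a VP (''chased the cat''); the second string has no analysis under this grammar, so the function returns `None`.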

German Language

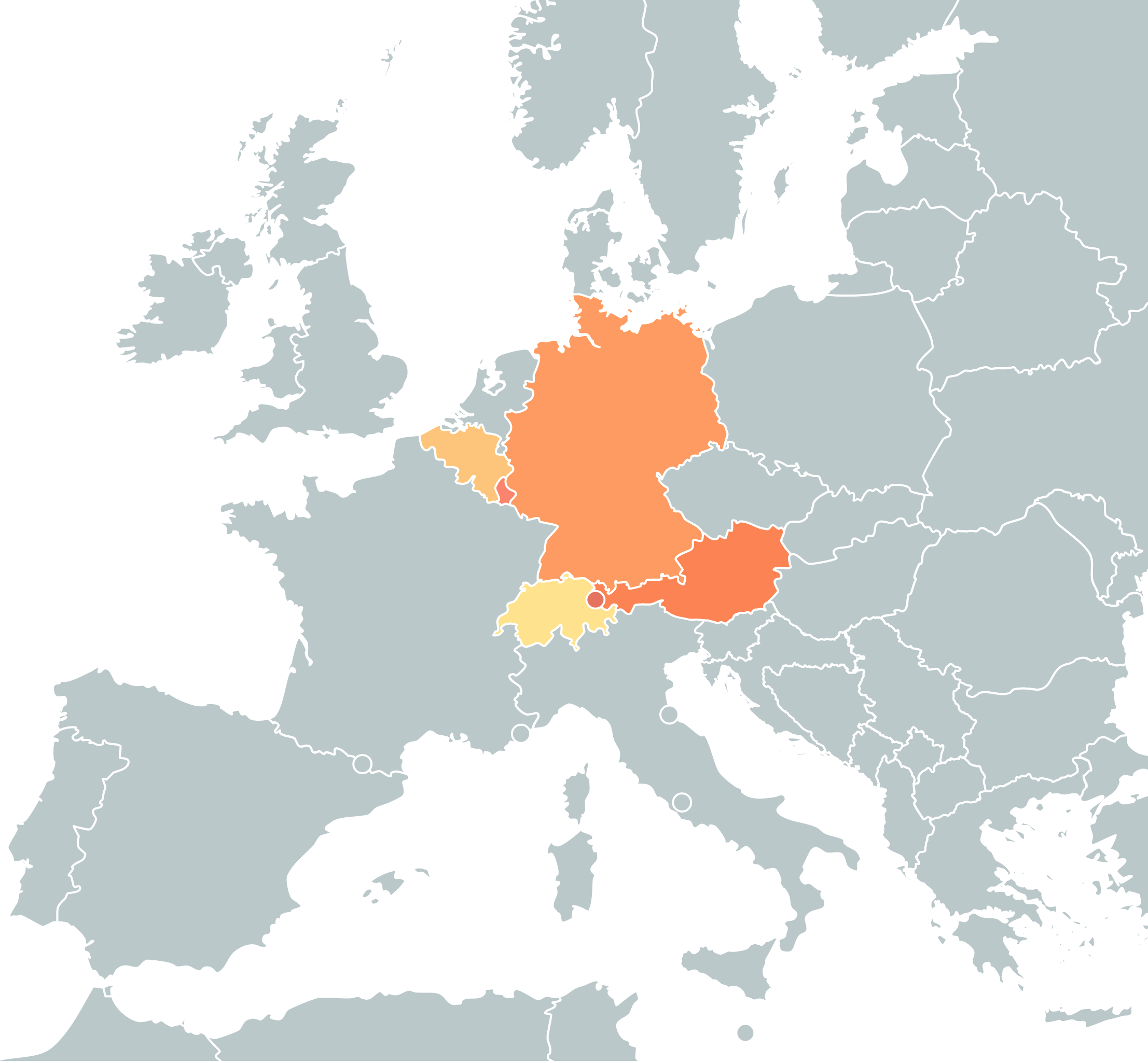

German is a West Germanic language mainly spoken in Central Europe. It is the most widely spoken and official or co-official language in Germany, Austria, Switzerland, Liechtenstein, and the Italian province of South Tyrol. It is also a co-official language of Luxembourg and Belgium, as well as a national language in Namibia. Outside Germany, it is also spoken by German communities in France (Bas-Rhin), Czech Republic (North Bohemia), Poland (Upper Silesia), Slovakia (Bratislava Region), and Hungary (Sopron). German is most similar to other languages within the West Germanic language branch, including Afrikaans, Dutch, English, the Frisian languages, Low German, Luxembourgish, Scots, and Yiddish. It also contains close similarities in vocabulary to some languages in the North Germanic group, such as Danish ...