|

Hyperparameter (machine Learning)

In machine learning, a hyperparameter is a parameter that can be set in order to define any configurable part of a model's learning process. Hyperparameters can be classified as either model hyperparameters (such as the topology and size of a neural network) or algorithm hyperparameters (such as the learning rate and the batch size of an optimizer). These are named ''hyper''parameters in contrast to parameters, which are characteristics that the model learns from the data. Hyperparameters are not required by every model or algorithm. Some simple algorithms such as ordinary least squares regression require none. However, the LASSO algorithm, for example, adds a regularization hyperparameter to ordinary least squares which must be set before training. Even models and algorithms without a strict requirement to define hyperparameters may not produce meaningful results if these are not carefully chosen. However, optimal values for hyperparameters are not always easy to predict. Some ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Machine Learning

Machine learning (ML) is a field of study in artificial intelligence concerned with the development and study of Computational statistics, statistical algorithms that can learn from data and generalise to unseen data, and thus perform Task (computing), tasks without explicit Machine code, instructions. Within a subdiscipline in machine learning, advances in the field of deep learning have allowed Neural network (machine learning), neural networks, a class of statistical algorithms, to surpass many previous machine learning approaches in performance. ML finds application in many fields, including natural language processing, computer vision, speech recognition, email filtering, agriculture, and medicine. The application of ML to business problems is known as predictive analytics. Statistics and mathematical optimisation (mathematical programming) methods comprise the foundations of machine learning. Data mining is a related field of study, focusing on exploratory data analysi ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Gradient Method

In optimization, a gradient method is an algorithm In mathematics and computer science, an algorithm () is a finite sequence of Rigour#Mathematics, mathematically rigorous instructions, typically used to solve a class of specific Computational problem, problems or to perform a computation. Algo ... to solve problems of the form :\min_\; f(x) with the search directions defined by the gradient of the function at the current point. Examples of gradient methods are the gradient descent and the conjugate gradient. See also * Gradient descent * Stochastic gradient descent * Coordinate descent * Frank–Wolfe algorithm * Landweber iteration * Random coordinate descent * Conjugate gradient method * Derivation of the conjugate gradient method * Nonlinear conjugate gradient method * Biconjugate gradient method * Biconjugate gradient stabilized method References * First order methods Optimization algorithms and methods Numerical linear algebra {{linear-al ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Replication Crisis

The replication crisis, also known as the reproducibility or replicability crisis, refers to the growing number of published scientific results that other researchers have been unable to reproduce or verify. Because the reproducibility of empirical results is an essential part of the scientific method, such failures undermine the credibility of theories that build on them and can call into question substantial parts of scientific knowledge. The replication crisis is frequently discussed in relation to psychology and medicine, wherein considerable efforts have been undertaken to reinvestigate the results of classic studies to determine whether they are reliable, and if they turn out not to be, the reasons for the failure. Data strongly indicate that other natural science, natural and social sciences are also affected. The phrase "replication crisis" was coined in the early 2010s as part of a growing awareness of the problem. Considerations of causes and remedies have given rise ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Hyper-heuristic

A hyper-heuristic is a heuristic search method that seeks to automate, often by the incorporation of machine learning techniques, the process of selecting, combining, generating or adapting several simpler heuristics (or components of such heuristics) to efficiently solve computational search problems. One of the motivations for studying hyper-heuristics is to build systems which can handle classes of problems rather than solving just one problem.P. Ross, Hyper-heuristics, Search Methodologies: Introductory Tutorials in Optimization and Decision Support Techniques (E. K. Burke and G. Kendall, eds.), Springer, 2005, pp. 529-556.E. Ozcan, B. Bilgin, E. E. KorkmazA Comprehensive Analysis of Hyper-heuristics Intelligent Data Analysis, 12:1, pp. 3-23, 2008. There might be multiple heuristics from which one can choose for solving a problem, and each heuristic has its own strength and weakness. The idea is to automatically devise algorithms by combining the strength and compensating for th ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Random Number Generation

Random number generation is a process by which, often by means of a random number generator (RNG), a sequence of numbers or symbols is generated that cannot be reasonably predicted better than by random chance. This means that the particular outcome sequence will contain some patterns detectable in hindsight but impossible to foresee. True random number generators can be ''Hardware random number generator, hardware random-number generators'' (HRNGs), wherein each generation is a function of the current value of a physical environment's attribute that is constantly changing in a manner that is practically impossible to model. This would be in contrast to so-called "random number generations" done by ''pseudorandom number generators'' (PRNGs), which generate numbers that only look random but are in fact predetermined—these generations can be reproduced simply by knowing the state of the PRNG. Various applications of randomness have led to the development of different methods for ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Deep Learning

Deep learning is a subset of machine learning that focuses on utilizing multilayered neural networks to perform tasks such as classification, regression, and representation learning. The field takes inspiration from biological neuroscience and is centered around stacking artificial neurons into layers and "training" them to process data. The adjective "deep" refers to the use of multiple layers (ranging from three to several hundred or thousands) in the network. Methods used can be either supervised, semi-supervised or unsupervised. Some common deep learning network architectures include fully connected networks, deep belief networks, recurrent neural networks, convolutional neural networks, generative adversarial networks, transformers, and neural radiance fields. These architectures have been applied to fields including computer vision, speech recognition, natural language processing, machine translation, bioinformatics, drug design, medical image analysis, c ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Derivative-free Optimization

Derivative-free optimization (sometimes referred to as blackbox optimization) is a discipline in mathematical optimization that does not use derivative information in the classical sense to find optimal solutions: Sometimes information about the derivative of the objective function ''f'' is unavailable, unreliable or impractical to obtain. For example, ''f'' might be non-smooth, or time-consuming to evaluate, or in some way noisy, so that methods that rely on derivatives or approximate them via finite differences are of little use. The problem to find optimal points in such situations is referred to as derivative-free optimization, algorithms that do not use derivatives or finite differences are called derivative-free algorithms. Introduction The problem to be solved is to numerically optimize an objective function f\colon A\to\mathbb for some set A (usually A\subset\mathbb^n), i.e. find x_0\in A such that without loss of generality f(x_0)\leq f(x) for all x\in A. When applicable, ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

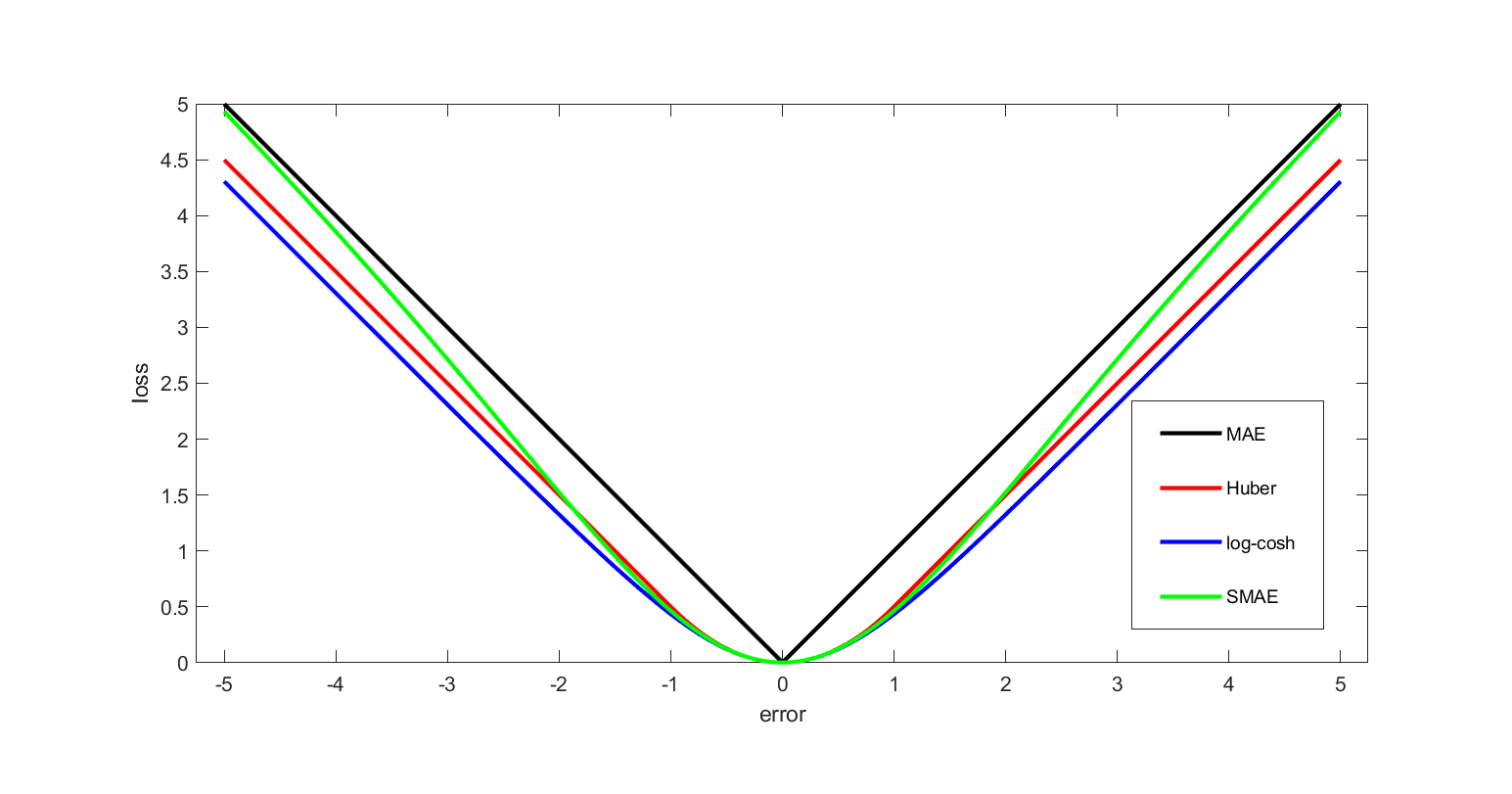

Loss Function

In mathematical optimization and decision theory, a loss function or cost function (sometimes also called an error function) is a function that maps an event or values of one or more variables onto a real number intuitively representing some "cost" associated with the event. An optimization problem seeks to minimize a loss function. An objective function is either a loss function or its opposite (in specific domains, variously called a reward function, a profit function, a utility function, a fitness function, etc.), in which case it is to be maximized. The loss function could include terms from several levels of the hierarchy. In statistics, typically a loss function is used for parameter estimation, and the event in question is some function of the difference between estimated and true values for an instance of data. The concept, as old as Pierre-Simon Laplace, Laplace, was reintroduced in statistics by Abraham Wald in the middle of the 20th century. In the context of economi ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Reinforcement Learning

Reinforcement learning (RL) is an interdisciplinary area of machine learning and optimal control concerned with how an intelligent agent should take actions in a dynamic environment in order to maximize a reward signal. Reinforcement learning is one of the three basic machine learning paradigms, alongside supervised learning and unsupervised learning. Reinforcement learning differs from supervised learning in not needing labelled input-output pairs to be presented, and in not needing sub-optimal actions to be explicitly corrected. Instead, the focus is on finding a balance between exploration (of uncharted territory) and exploitation (of current knowledge) with the goal of maximizing the cumulative reward (the feedback of which might be incomplete or delayed). The search for this balance is known as the exploration–exploitation dilemma. The environment is typically stated in the form of a Markov decision process (MDP), as many reinforcement learning algorithms use dyn ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Random Seed

A random seed (or seed state, or just seed) is a number (or vector) used to initialize a pseudorandom number generator. A pseudorandom number generator's number sequence is completely determined by the seed: thus, if a pseudorandom number generator is later reinitialized with the same seed, it will produce the same sequence of numbers. For a seed to be used in a pseudorandom number generator, it does not need to be random. Because of the nature of number generating algorithms, so long as the original seed is ignored, the rest of the values that the algorithm generates will follow probability distribution in a pseudorandom manner. However, a non-random seed will be cryptographically insecure, as it can allow an adversary to predict the pseudorandom numbers generated. The choice of a good random seed is crucial in the field of computer security. When a secret encryption key is pseudorandomly generated, having the seed will allow one to obtain the key. High entropy is importan ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Robustness (computer Science)

In computer science, robustness is the ability of a computer system to cope with errors during execution1990. IEEE Standard Glossary of Software Engineering Terminology, IEEE Std 610.12-1990 defines robustness as "The degree to which a system or component can function correctly in the presence of invalid inputs or stressful environmental conditions" and cope with erroneous input. Robustness can encompass many areas of computer science, such as robust programming, robust machine learning, and Robust Security Network. Formal techniques, such as fuzz testing, are essential to showing robustness since this type of testing involves invalid or unexpected inputs. Alternatively, fault injection can be used to test robustness. Various commercial products perform robustness testing of software analysis. Introduction In general, building robust systems that encompass every point of possible failure is difficult because of the vast quantity of possible inputs and input combinations. Si ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Long Short-term Memory

Long short-term memory (LSTM) is a type of recurrent neural network (RNN) aimed at mitigating the vanishing gradient problem commonly encountered by traditional RNNs. Its relative insensitivity to gap length is its advantage over other RNNs, hidden Markov models, and other sequence learning methods. It aims to provide a short-term memory for RNN that can last thousands of timesteps (thus "''long'' short-term memory"). The name is made in analogy with long-term memory and short-term memory and their relationship, studied by cognitive psychologists since the early 20th century. An LSTM unit is typically composed of a cell and three gates: an input gate, an output gate, and a forget gate. The cell remembers values over arbitrary time intervals, and the gates regulate the flow of information into and out of the cell. Forget gates decide what information to discard from the previous state, by mapping the previous state and the current input to a value between 0 and 1. A (rounded) ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |