
Holm–Bonferroni Method

In statistics, the Holm–Bonferroni method, also called the Holm method or Bonferroni–Holm method, is used to counteract the problem of multiple comparisons. It is intended to control the family-wise error rate (FWER) and offers a simple test that is uniformly more powerful than the Bonferroni correction. It is named after Sture Holm, who codified the method, and Carlo Emilio Bonferroni.

Motivation: When considering several hypotheses, the problem of multiplicity arises: the more hypotheses are checked, the higher the probability of obtaining Type I errors (false positives). The Holm–Bonferroni method is one of many approaches for controlling the FWER, i.e., the probability that one or more Type I errors will occur, by adjusting the rejection criteria for each of the individual hypotheses.

Formulation: The method is as follows:

* Suppose you have m p-values, sorted from lowest to highest as P_1,\ldots,P_m, and their corresponding null hypotheses H_1,\ldots,H_m. You ...
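The excerpt above is cut off before the procedure is spelled out, so the following is only a minimal Python sketch of the step-down rule as it is commonly stated, not a transcription of the truncated text: sort the p-values, compare the k-th smallest with alpha/(m - k + 1), and stop at the first p-value that exceeds its threshold. The function name `holm_bonferroni` and the sample p-values are assumptions made for illustration.

```python
from typing import List, Sequence


def holm_bonferroni(p_values: Sequence[float], alpha: float = 0.05) -> List[bool]:
    """Return one reject/retain decision per hypothesis, in input order.

    Hypothetical helper: a sketch of the usual step-down rule.
    """
    m = len(p_values)
    # Indices of the hypotheses, ordered from smallest to largest p-value.
    order = sorted(range(m), key=lambda i: p_values[i])
    reject = [False] * m
    for rank, idx in enumerate(order):  # rank 0 is the smallest p-value
        # Thresholds are alpha/m, alpha/(m-1), ..., alpha/1.
        if p_values[idx] <= alpha / (m - rank):
            reject[idx] = True
        else:
            # First failure: this and all larger p-values are retained.
            break
    return reject


# Illustrative p-values (assumed, not taken from the source).
decisions = holm_bonferroni([0.01, 0.04, 0.03, 0.005], alpha=0.05)
print(decisions)
```

With these four p-values and alpha = 0.05, the successive thresholds are 0.0125, about 0.0167, 0.025 and 0.05. The two smallest p-values (0.005 and 0.01) pass, 0.03 exceeds 0.025, and testing stops there.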

Statistics

Statistics (from German: ''Statistik'', "description of a state, a country") is the discipline that concerns the collection, organization, analysis, interpretation, and presentation of data. In applying statistics to a scientific, industrial, or social problem, it is conventional to begin with a statistical population or a statistical model to be studied. Populations can be diverse groups of people or objects such as "all people living in a country" or "every atom composing a crystal". Statistics deals with every aspect of data, including the planning of data collection in terms of the design of surveys and experiments (Dodge, Y. (2006) ''The Oxford Dictionary of Statistical Terms'', Oxford University Press). When census data cannot be collected, statisticians collect data by developing specific experiment designs and survey samples. Representative sampling as ...

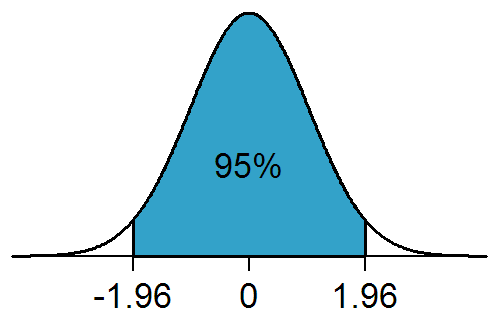

Significance Level

In statistical hypothesis testing, a result has statistical significance when it is very unlikely to have occurred given the null hypothesis (simply by chance alone). More precisely, a study's defined significance level, denoted by \alpha, is the probability of the study rejecting the null hypothesis, given that the null hypothesis is true; and the ''p''-value of a result, ''p'', is the probability of obtaining a result at least as extreme, given that the null hypothesis is true. The result is statistically significant, by the standards of the study, when p \le \alpha. The significance level for a study is chosen before data collection, and is typically set to 5% or much lower, depending on the field of study. In any experiment or observation that involves drawing a sample from a population, there is always the possibility that an observed effect would have occurred due to sampling error alone. But if the ''p''-value of an observed effect is less than (or equal to) the significa ...

Statistical Hypothesis Testing

A statistical hypothesis test is a method of statistical inference used to decide whether the data at hand sufficiently support a particular hypothesis. Hypothesis testing allows us to make probabilistic statements about population parameters.

History (early use): While hypothesis testing was popularized early in the 20th century, early forms were used in the 1700s. The first use is credited to John Arbuthnot (1710), followed by Pierre-Simon Laplace (1770s), in analyzing the human sex ratio at birth.

History (modern origins and early controversy): Modern significance testing is largely the product of Karl Pearson (''p''-value, Pearson's chi-squared test), William Sealy Gosset (Student's t-distribution), and Ronald Fisher ("null hypothesis", analysis of variance, "significance test"), while hypothesis testing was developed by Jerzy Neyman and Egon Pearson (son of Karl). Ronald Fisher began his life in statistics as a Bayesian (Zabell 1992), but Fisher soon grew disenchanted with t ...

Independence (Probability Theory)

Independence is a fundamental notion in probability theory, as in statistics and the theory of stochastic processes. Two events are independent, statistically independent, or stochastically independent if, informally speaking, the occurrence of one does not affect the probability of occurrence of the other or, equivalently, does not affect the odds. Similarly, two random variables are independent if the realization of one does not affect the probability distribution of the other. When dealing with collections of more than two events, two notions of independence need to be distinguished. The events are called pairwise independent if any two events in the collection are independent of each other, while mutual independence (or collective independence) of events means, informally speaking, that each event is independent of any combination of other events in the collection. A similar notion exists for collections of random variables. Mutual independence implies pairwise independence ...

Family-wise Error Rate

In statistics, family-wise error rate (FWER) is the probability of making one or more false discoveries, or type I errors, when performing multiple hypothesis tests.

Familywise and experimentwise error rates: Tukey (1953) developed the concept of a familywise error rate as the probability of making a Type I error among a specified group, or "family," of tests. Based on Tukey (1953), Ryan (1959) proposed the related concept of an ''experimentwise error rate'', which is the probability of making a Type I error in a given experiment. Hence, an experimentwise error rate is a familywise error rate for all of the tests that are conducted within an experiment. As Ryan (1959, Footnote 3) explained, an experiment may contain two or more families of multiple comparisons, each of which relates to a particular statistical inference and each of which has its own separate familywise error rate. Hence, familywise error rates are usually based on theoretically informative collections of multiple c ...
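To give a feel for how quickly the FWER can grow, the sketch below works through one special case that is assumed here rather than stated in the excerpt: m independent tests, every null hypothesis true, each test run at level alpha. In that setting the probability of at least one type I error is 1 - (1 - alpha)^m. The helper name `fwer_independent` is hypothetical.

```python
def fwer_independent(alpha: float, m: int) -> float:
    """FWER for m independent tests at level alpha, assuming all nulls are true.

    Illustrative special case only; dependence between tests changes the value.
    """
    return 1.0 - (1.0 - alpha) ** m


for m in (1, 5, 10, 20):
    uncorrected = fwer_independent(0.05, m)
    # Testing each hypothesis at alpha/m (Bonferroni) keeps the FWER at or below alpha.
    bonferroni = fwer_independent(0.05 / m, m)
    print(f"m={m:2d}  uncorrected={uncorrected:.4f}  bonferroni={bonferroni:.4f}")
```

Under these assumptions, ten uncorrected tests at the 5% level already give a family-wise error rate of roughly 0.40, while the per-test level of alpha/m keeps it just under 0.05.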

P-values

In null-hypothesis significance testing, the ''p''-value is the probability of obtaining test results at least as extreme as the result actually observed, under the assumption that the null hypothesis is correct. A very small ''p''-value means that such an extreme observed outcome would be very unlikely under the null hypothesis. Reporting ''p''-values of statistical tests is common practice in academic publications of many quantitative fields. Since the precise meaning of ''p''-value is hard to grasp, misuse is widespread and has been a major topic in metascience.

Basic concepts: In statistics, every conjecture concerning the unknown probability distribution of a collection of random variables representing the observed data X in some study is called a ''statistical hypothesis''. If we state one hypothesis only and the aim of the statistical test is to see whether this hypothesis is tenable, but not to investigate other specific hypotheses, then such a test is called a null ...
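The definition of "at least as extreme" can be made concrete with a small worked case. The sketch below assumes a one-sided exact binomial test of a fair coin, a scenario chosen for illustration and not taken from the excerpt: the p-value is the probability, under the null hypothesis, of seeing the observed number of heads or more. The function name `binomial_upper_tail_p_value` is hypothetical.

```python
from math import comb


def binomial_upper_tail_p_value(successes: int, trials: int, p_null: float = 0.5) -> float:
    """P(X >= successes) for X ~ Binomial(trials, p_null): a one-sided exact p-value."""
    return sum(
        comb(trials, k) * p_null ** k * (1.0 - p_null) ** (trials - k)
        for k in range(successes, trials + 1)
    )


# Assumed example: 9 heads in 10 tosses, null hypothesis "the coin is fair".
p_value = binomial_upper_tail_p_value(9, 10)
alpha = 0.05
significant = p_value <= alpha
print(f"p-value = {p_value:.4f}, significant at alpha={alpha}: {significant}")
```

Here the outcomes at least as extreme as 9 heads are "9 heads" and "10 heads", so the p-value is (10 + 1)/1024, about 0.0107, which falls below a 5% significance level.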

Biometrika

''Biometrika'' is a peer-reviewed scientific journal published by Oxford University Press for the Biometrika Trust. The editor-in-chief is Paul Fearnhead (Lancaster University). The principal focus of this journal is theoretical statistics. It was established in 1901 and originally appeared quarterly. It changed to three issues per year in 1977 but returned to quarterly publication in 1992.

History: ''Biometrika'' was established in 1901 by Francis Galton, Karl Pearson, and Raphael Weldon to promote the study of biometrics. The history of ''Biometrika'' is covered by Cox (2001). The name of the journal was chosen by Pearson, but Francis Edgeworth insisted that it be spelt with a "k" and not a "c". Since the 1930s, it has been a journal for statistical theory and methodology. Galton's role in the journal was essentially that of a patron; the journal was run by Pearson and Weldon and, after Weldon's death in 1906, by Pearson alone until he died in 1936. In the early days, the American ...

Closed Testing Procedure

In statistics, the closed testing procedure is a general method for performing more than one hypothesis test simultaneously.

The closed testing principle: Suppose there are ''k'' hypotheses H_1,\ldots,H_k to be tested and the overall type I error rate is α. The closed testing principle allows the rejection of any one of these elementary hypotheses, say H_i, if all possible intersection hypotheses involving H_i can be rejected by using valid local level α tests; the adjusted p-value is the largest among those hypotheses. It controls the family-wise error rate for all the ''k'' hypotheses at level α in the strong sense.

Example: Suppose there are three hypotheses H_1, H_2, and H_3 to be tested and the overall type I error rate is 0.05. Then H_1 can be rejected at level α if H_1 ∩ H_2 ∩ H_3, H_1 ∩ H_2, H_1 ∩ H_3 and H_1 can all be rejected using valid tests with level α.

Special cases: The Holm–Bonferroni method ...
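The principle can be sketched in Python by enumerating every intersection hypothesis that contains a given elementary hypothesis. The excerpt does not fix a local test, so this sketch assumes a Bonferroni test for each intersection (reject when the smallest p-value in the subset is at most alpha divided by the subset size); that choice, the function name `closed_testing_bonferroni`, and the sample p-values are all assumptions made for illustration.

```python
from itertools import combinations
from typing import List, Sequence


def closed_testing_bonferroni(p_values: Sequence[float], alpha: float = 0.05) -> List[bool]:
    """Reject H_i only if every intersection hypothesis containing H_i is rejected.

    Local test (an assumption of this sketch): Bonferroni on the subset.
    """
    k = len(p_values)

    def local_reject(subset) -> bool:
        # Bonferroni test of the intersection of the hypotheses in `subset`.
        return min(p_values[j] for j in subset) <= alpha / len(subset)

    decisions = []
    for i in range(k):
        others = [j for j in range(k) if j != i]
        # All subsets that contain i: {i} joined with any subset of the others.
        all_rejected = all(
            local_reject((i,) + extra)
            for size in range(len(others) + 1)
            for extra in combinations(others, size)
        )
        decisions.append(all_rejected)
    return decisions


# Three hypotheses at overall level 0.05, with assumed p-values.
print(closed_testing_bonferroni([0.01, 0.02, 0.04], alpha=0.05))
```

The enumeration visits 2^(k-1) subsets per hypothesis, so it is practical only for small ''k''. With Bonferroni local tests, the decisions coincide with those of the Holm step-down rule, which reaches the same result without enumerating subsets.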

Bonferroni Inequalities

In probability theory, Boole's inequality, also known as the union bound, says that for any finite or countable set of events, the probability that at least one of the events happens is no greater than the sum of the probabilities of the individual events. This inequality provides an upper bound on the probability of occurrence of at least one of a countable number of events in terms of the individual probabilities of the events. Boole's inequality is named for its discoverer George Boole. Formally, for a countable set of events A_1, A_2, A_3, \ldots, we have

\mathbb P\left(\bigcup_{i=1}^{\infty} A_i \right) \le \sum_{i=1}^{\infty} \mathbb P(A_i).

In measure-theoretic terms, Boole's inequality follows from the fact that a measure (and certainly any probability measure) is ''σ''-sub-additive.

Proof (using induction): Boole's inequality may be proved for finite collections of n events using the method of induction. For the n=1 case, it follows that

\mathbb P(A_1) \le \mathbb P(A_1).

For the case n, we ...
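A quick numerical check can make the bound tangible. The sketch below uses a fair six-sided die and three overlapping events, all chosen for illustration and not taken from the excerpt, and compares the probability of the union with the sum of the individual probabilities using exact fractions.

```python
from fractions import Fraction

# Sample space (assumed for this example): the six faces of a fair die.
OUTCOMES = range(1, 7)


def prob(event) -> Fraction:
    """Probability of an event (a set of faces) under the uniform measure."""
    return Fraction(len(set(event) & set(OUTCOMES)), len(OUTCOMES))


# Three events; the first two overlap on the face 2.
events = [{1, 2}, {2, 3}, {6}]

p_union = prob(set().union(*events))          # P(A_1 ∪ A_2 ∪ A_3)
sum_of_probs = sum(prob(a) for a in events)   # P(A_1) + P(A_2) + P(A_3)

print(p_union, "<=", sum_of_probs, ":", p_union <= sum_of_probs)
```

The union covers the faces 1, 2, 3 and 6, so its probability is 2/3, while the individual probabilities add up to 5/6. The gap of 1/6 is exactly the face that the first two events share, which the sum counts twice.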

False Positive

A false positive is an error in binary classification in which a test result incorrectly indicates the presence of a condition (such as a disease when the disease is not present), while a false negative is the opposite error, where the test result incorrectly indicates the absence of a condition when it is actually present. These are the two kinds of errors in a binary test, in contrast to the two kinds of correct result (a true positive and a true negative). They are also known in medicine as a false positive (or false negative) diagnosis, and in statistical classification as a false positive (or false negative) error. In statistical hypothesis testing the analogous concepts are known as type I and type II errors, where a positive result corresponds to rejecting the null hypothesis, and a negative result corresponds to not rejecting the null hypothesis. The terms are often used interchangeably, but there are differences in detail and interpretation due to the differences between medical testing and statist ...

Multiple Comparisons

In statistics, the multiple comparisons, multiplicity or multiple testing problem occurs when one considers a set of statistical inferences simultaneously or infers a subset of parameters selected based on the observed values. The more inferences are made, the more likely erroneous inferences become. Several statistical techniques have been developed to address that problem, typically by requiring a stricter significance threshold for individual comparisons, so as to compensate for the number of inferences being made.

History: The problem of multiple comparisons received increased attention in the 1950s with the work of statisticians such as Tukey and Scheffé. Over the ensuing decades, many procedures were developed to address the problem. In 1996, the first international conference on multiple comparison procedures took place in Israel.

Definition: Multiple comparisons arise when a statistical analysis involves multiple simultaneous statistical tests, each of which has a potent ...

Type I Error

In statistical hypothesis testing, a type I error is the mistaken rejection of an actually true null hypothesis (also known as a "false positive" finding or conclusion; example: "an innocent person is convicted"), while a type II error is the failure to reject a null hypothesis that is actually false (also known as a "false negative" finding or conclusion; example: "a guilty person is not convicted"). Much of statistical theory revolves around the minimization of one or both of these errors, though the complete elimination of either is a statistical impossibility if the outcome is not determined by a known, observable causal process. By selecting a low threshold (cut-off) value and modifying the alpha (α) level, the quality of the hypothesis test can be increased. The knowledge of type I errors and type II errors is widely used in medical science, biometrics and computer science. Intuitively, type I errors can be thought of as errors of ''commission'', i.e. the researcher unluck ...