Gesture Recognition

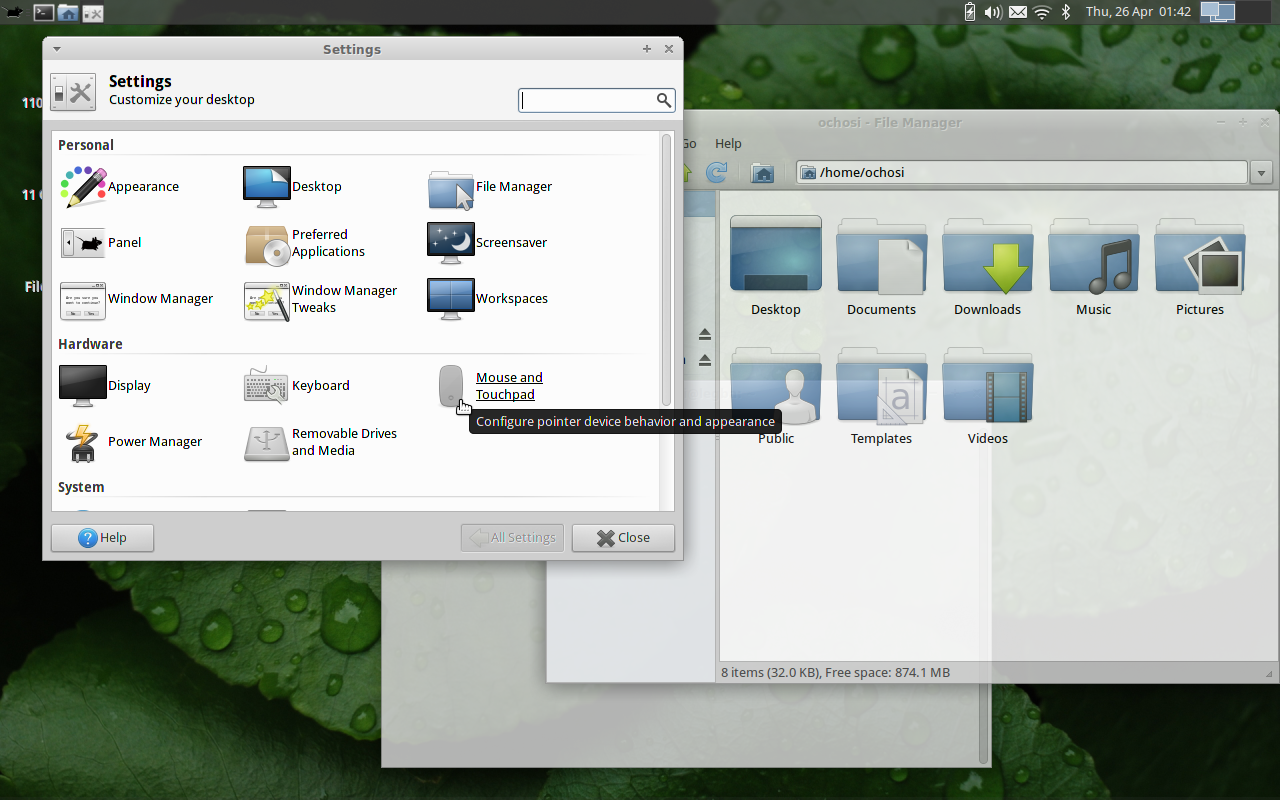

Gesture recognition is a topic in computer science and language technology with the goal of interpreting human gestures via mathematical algorithms. It is a subdiscipline of computer vision. Gestures can originate from any bodily motion or state, but commonly originate from the face or hand. Focuses in the field include emotion recognition from the face and hand gesture recognition, since both are forms of expression. Users can make simple gestures to control or interact with devices without physically touching them. Many approaches use cameras and computer vision algorithms to interpret sign language; however, the identification and recognition of posture, gait, proxemics, and human behaviors are also subjects of gesture recognition techniques. Gesture recognition can be seen as a way for computers to begin to understand human body language, thus building a better bridge between machines and humans than older text user interfaces or even GUIs (graphical user in ...

Pose (Computer Vision)

In the fields of computing and computer vision, pose (or spatial pose) represents the position and orientation of an object, usually in three dimensions. Poses are often stored internally as transformation matrices. The term "pose" is largely synonymous with the term "transform", but a transform may often include scale, whereas pose does not. In computer vision, the pose of an object is often estimated from camera input by the process of pose estimation. This information can then be used, for example, to allow a robot to manipulate an object or to avoid moving into the object based on its perceived position and orientation in the environment. Pose estimation: The specific task of determining the pose of an object in an image (or in stereo images or an image sequence) is referred to as pose estimation. The pose estimation problem can be solved in different ways depending on the image sensor configuration and the choice of methodology. Three classes of methodologies can b ...
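
As an illustration of storing a pose as a transformation matrix, the following minimal sketch builds a 4x4 homogeneous matrix from a rotation about the Z axis and a translation, then applies it to a point. The function names (`make_pose`, `transform_point`, `is_scale_free`) are hypothetical, and restricting the rotation to a single axis is a simplifying assumption made for brevity.

```python
import math


def make_pose(yaw_rad, tx, ty, tz):
    """Return a 4x4 homogeneous matrix: rotation about Z by yaw_rad, then translation."""
    c, s = math.cos(yaw_rad), math.sin(yaw_rad)
    return [
        [c, -s, 0.0, tx],
        [s, c, 0.0, ty],
        [0.0, 0.0, 1.0, tz],
        [0.0, 0.0, 0.0, 1.0],
    ]


def transform_point(pose, point):
    """Apply the pose to a 3D point given in the object's own frame."""
    v = (point[0], point[1], point[2], 1.0)
    return tuple(sum(pose[r][k] * v[k] for k in range(4)) for r in range(3))


def is_scale_free(pose, tol=1e-9):
    """Check that each column of the 3x3 block has unit length (no scale).

    This only tests column lengths; a full rotation check would also
    test that the columns are mutually orthogonal.
    """
    for col in range(3):
        norm = math.sqrt(sum(pose[r][col] ** 2 for r in range(3)))
        if abs(norm - 1.0) > tol:
            return False
    return True


pose = make_pose(math.pi / 2, 1.0, 2.0, 3.0)
moved = transform_point(pose, (1.0, 0.0, 0.0))
```

The unit-length check reflects the distinction drawn above: a general transform may scale, whereas a pose holds only position and orientation.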

Depth Map

In 3D computer graphics and computer vision, a depth map is an image or image channel that contains information relating to the distance of the surfaces of scene objects from a viewpoint. The term is related (and may be analogous) to depth buffer, Z-buffer, Z-buffering, and Z-depth (Computer Arts / 3D World Glossary, ftp://ftp.futurenet.co.uk/pub/arts/Glossary.pdf, retrieved 26 January 2011). The "Z" in these latter terms relates to a convention that the central axis of view of a camera is in the direction of the camera's Z axis, and not to the absolute Z axis of a scene. Examples: [Figures: "Cubic Structure"; "Depth Map: Nearer is darker"; "Depth Map: Nearer the Focal Plane is darker"] Two different depth maps can be seen here, together with the original model from which they are derived. The first depth map shows l ...
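
To make the idea of a depth map as an image channel concrete, here is a minimal sketch that converts per-pixel distances into 8-bit gray values using the "nearer is darker" convention from the example captions. The function name `depth_to_gray` and the `near`/`far` clipping range are assumptions chosen for illustration, not part of any particular graphics API.

```python
def depth_to_gray(depth, near, far):
    """Map distances to gray levels 0..255, where nearer surfaces are darker.

    depth is a list of rows of distances; values outside [near, far]
    are clamped to that range before scaling.
    """
    if far <= near:
        raise ValueError("far must be greater than near")
    span = far - near
    image = []
    for row in depth:
        gray_row = []
        for d in row:
            clamped = min(max(d, near), far)
            gray_row.append(round(255 * (clamped - near) / span))
        image.append(gray_row)
    return image


gray = depth_to_gray([[1.0, 2.0], [5.0, 9.0]], near=1.0, far=5.0)
```

Inverting the mapping (255 minus each value) would give the opposite convention, in which nearer surfaces are brighter.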

Time-of-flight Camera

A time-of-flight camera (ToF camera), also known as time-of-flight sensor (ToF sensor), is a range imaging camera system for measuring distances between the camera and the subject for each point of the image based on time-of-flight, the round trip time of an artificial light signal, as provided by a laser or an LED. Laser-based time-of-flight cameras are part of a broader class of scannerless LIDAR, in which the entire scene is captured with each laser pulse, as opposed to point-by-point with a laser beam such as in scanning LIDAR systems. Time-of-flight camera products for civil applications began to emerge around 2000, as the semiconductor processes allowed the production of components fast enough for such devices. The systems cover ranges of a few centimeters up to several kilometers. Types of devices: Several different technologies for time-of-flight cameras have been developed. RF-modulated light sources with phase detectors: Photonic Mixer Devices (PMD), the Swiss Ranger, ...
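
The relationship between round-trip time and distance can be sketched in a few lines. The first function halves the distance light travels during the round trip; the second uses the standard phase-shift relation for a continuously modulated source, d = c·φ / (4π·f). The function names and the 20 MHz modulation frequency are illustrative assumptions, not values taken from any specific device.

```python
import math

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def distance_from_round_trip(round_trip_s):
    """Distance to the subject: light covers the path twice, so halve it."""
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def distance_from_phase(phase_rad, modulation_hz):
    """Distance from the phase shift of an RF-modulated light signal.

    Only unambiguous while phase_rad stays below 2*pi, that is, for
    distances shorter than c / (2 * modulation_hz).
    """
    return SPEED_OF_LIGHT_M_PER_S * phase_rad / (4.0 * math.pi * modulation_hz)


# A 10 ns round trip corresponds to roughly 1.5 m.
d_pulse = distance_from_round_trip(10e-9)
# Half a cycle of phase shift at an assumed 20 MHz modulation frequency.
d_phase = distance_from_phase(math.pi, 20e6)
```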

Structured Light

[Figure: A structured light pattern designed for surface inspection.] [Figure: An Automatix Seamtracker arc welding robot equipped with a camera and structured laser light source, enabling the robot to follow a welding seam automatically.] Structured light is the process of projecting a known pattern (often grids or horizontal bars) onto a scene. The way that these patterns deform when striking surfaces allows vision systems to calculate the depth and surface information of the objects in the scene, as used in structured light 3D scanners. Invisible (or imperceptible) structured light uses structured light without interfering with other computer vision tasks for which the projected pattern would be confusing. Example methods include the use of infrared light or of extremely high frame rates alternating between two exact opposite patterns. Structured light is used by a number of police forces for the purpose of photographing fingerprints in a 3D scene. Where previously they would use tape to extract ...
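
One simplified way to see how pattern deformation yields depth is plain triangulation. The sketch below assumes an idealized, rectified projector-camera pair under a pinhole model, in which a pattern feature appears shifted horizontally by an amount inversely proportional to depth. This is a swapped-in textbook model rather than the method of any particular scanner, and the function name and numeric values are hypothetical.

```python
def depth_from_pattern_shift(focal_px, baseline_m, shift_px):
    """Triangulated depth in meters for one pattern feature.

    focal_px:   camera focal length in pixels (assumed known from calibration)
    baseline_m: distance between projector and camera in meters
    shift_px:   observed horizontal shift of the feature in pixels
    """
    if shift_px <= 0:
        raise ValueError("shift must be positive for a point in front of the rig")
    return focal_px * baseline_m / shift_px


# Assumed example values: 600 px focal length, 7.5 cm baseline, 30 px shift.
depth_m = depth_from_pattern_shift(600.0, 0.075, 30.0)
```

Under this model a larger shift means a closer surface, which is why the deformation of a projected grid or set of bars encodes the shape of the scene.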

Wired Glove

A wired glove (also called a dataglove or cyberglove) is an input device for human–computer interaction worn like a glove. Various sensor technologies are used to capture physical data such as bending of fingers. Often a motion tracker, such as a magnetic tracking device or inertial tracking device, is attached to capture the global position/rotation data of the glove. These movements are then interpreted by the software that accompanies the glove, so any one movement can mean any number of things. Gestures can then be categorized into useful information, such as to recognize sign language or other symbolic functions. Expensive high-end wired gloves can also provide haptic feedback, which is a simulation of the sense of touch. This allows a wired glove to also be used as an output device. Traditionally, wired gloves have only been available at a huge cost, with the finger bend sensors and the tracking device having to be bought separately. Wired gloves are often used in virt ...

Kinect

Kinect is a line of motion sensing input devices produced by Microsoft and first released in 2010. The devices generally contain RGB cameras as well as infrared projectors and detectors that map depth through either structured light or time-of-flight calculations, which can in turn be used to perform real-time gesture recognition and body skeletal detection, among other capabilities. They also contain microphones that can be used for speech recognition and voice control. Kinect was originally developed as a motion controller peripheral for Xbox video game consoles, distinguished from competitors (such as Nintendo's Wii Remote and Sony's PlayStation Move) by not requiring physical controllers. The first-generation Kinect was based on technology from Israeli company PrimeSense, and unveiled at E3 2009 as a peripheral for Xbox 360 codenamed "Project Natal". It was first released on November 4, 2010, and would go on to sell eight million units in its first 60 days of availabi ...

Tangible User Interface

A tangible user interface (TUI) is a user interface in which a person interacts with digital information through the physical environment. The initial name was Graspable User Interface, which is no longer used. The purpose of TUI development is to empower collaboration, learning, and design by giving physical forms to digital information, thus taking advantage of the human ability to grasp and manipulate physical objects and materials. One of the pioneers in tangible user interfaces is Hiroshi Ishii, a professor at MIT who heads the Tangible Media Group at the MIT Media Lab. His particular vision for tangible UIs, called Tangible Bits, is to give physical form to digital information, making bits directly manipulable and perceptible. Tangible Bits pursues the seamless coupling between physical objects and virtual data. Characteristics: (1) Physical representations are computationally coupled to underlying digital information. (2) Physical representations embody mechan ...

User Interfaces

In the industrial design field of human–computer interaction, a user interface (UI) is the space where interactions between humans and machines occur. The goal of this interaction is to allow effective operation and control of the machine from the human end, while the machine simultaneously feeds back information that aids the operators' decision-making process. Examples of this broad concept of user interfaces include the interactive aspects of computer operating systems, hand tools, heavy machinery operator controls and process controls. The design considerations applicable when creating user interfaces are related to, or involve, such disciplines as ergonomics and psychology. Generally, the goal of user interface design is to produce a user interface that makes it easy, efficient, and enjoyable (user-friendly) to operate a machine in a way that produces the desired result (i.e. maximum usability). This generally means that the operator needs to provide minimal input t ...

COVID-19

Coronavirus disease 2019 (COVID-19) is a contagious disease caused by a virus, the severe acute respiratory syndrome coronavirus 2 (SARS-CoV-2). The first known case was identified in Wuhan, China, in December 2019. The disease quickly spread worldwide, resulting in the COVID-19 pandemic. The symptoms of COVID‑19 are variable but often include fever, cough, headache, fatigue, breathing difficulties, loss of smell, and loss of taste. Symptoms may begin one to fourteen days after exposure to the virus. At least a third of people who are infected do not develop noticeable symptoms. Of those who develop symptoms noticeable enough to be classified as patients, most (81%) develop mild to moderate symptoms (up to mild pneumonia), while 14% develop severe symptoms (dyspnea, hypoxia, or more than 50% lung involvement on imaging), and 5% develop critical symptoms (respiratory failure, shock, or multiorgan dysfunction). Older people are at a higher risk of developing se ...

Context Menu

A context menu (also called contextual, shortcut, and pop up or pop-up menu) is a menu in a graphical user interface (GUI) that appears upon user interaction, such as a right-click mouse operation. A context menu offers a limited set of choices that are available in the current state, or context, of the operating system or application to which the menu belongs. Usually the available choices are actions related to the selected object. From a technical point of view, such a context menu is a graphical control element. History: Context menus first appeared in the Smalltalk environment on the Xerox Alto computer, where they were called pop-up menus; they were invented by Dan Ingalls in the mid-1970s. Microsoft Office v3.0 introduced the context menu for copy and paste functionality in 1990. Borland demonstrated extensive use of the context menu in 1991 at the Second Paradox Conference in Phoenix, Arizona. Lotus 1-2-3/G for OS/2 v1.0 added additional formatting options ...

Mouse Gesture

In computing, a pointing device gesture or mouse gesture (or simply gesture) is a way of combining pointing device or finger movements and clicks that the software recognizes as a specific computer event and responds to accordingly. They can be useful for people who have difficulties typing on a keyboard. For example, in a web browser, a user can navigate to the previously viewed page by pressing the right pointing device button, moving the pointing device briefly to the left, then releasing the button. History: The first pointing device gesture, the "drag", was introduced by Apple to replace a dedicated "move" button on mice shipped with its Macintosh and Lisa computers. Dragging involves holding down a pointing device button while moving the pointing device; the software interprets this as an action distinct from separate clicking and moving behaviors. Unlike most pointing device gestures, it does not involve the tracing of any particular shape. Although the "drag" behavio ...
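
The browser example above (hold the button, move briefly left, release) can be sketched as a small stroke classifier. This is a minimal illustration that assumes screen coordinates with y growing downward and looks only at the start and end points of the stroke. The names `classify_stroke` and `GESTURE_ACTIONS`, the action strings, and the 30-pixel threshold are all hypothetical.

```python
def classify_stroke(points, min_distance=30):
    """Classify a pointer stroke as 'left', 'right', 'up', 'down', or None.

    points is the list of (x, y) positions recorded between button press
    and release. Strokes shorter than min_distance pixels along their
    dominant axis are treated as ordinary clicks and return None.
    """
    if len(points) < 2:
        return None
    dx = points[-1][0] - points[0][0]
    dy = points[-1][1] - points[0][1]
    if max(abs(dx), abs(dy)) < min_distance:
        return None
    if abs(dx) >= abs(dy):
        return "left" if dx < 0 else "right"
    return "up" if dy < 0 else "down"


# Hypothetical mapping from recognized gestures to browser actions.
GESTURE_ACTIONS = {"left": "history_back", "right": "history_forward"}

stroke = [(200, 100), (150, 102), (90, 105)]
action = GESTURE_ACTIONS.get(classify_stroke(stroke))
```

Gestures that trace a particular shape would need the intermediate points as well, for example by classifying each segment and matching the resulting sequence of directions.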
