
Generic-case Complexity

Generic-case complexity is a subfield of computational complexity theory that studies the complexity of computational problems on "most inputs". It measures the complexity of a problem by neglecting a small set of unrepresentative inputs and considering worst-case complexity on the rest, where "small" is defined in terms of asymptotic density. The approach is effective because, for a wide variety of concrete computational problems, the most difficult instances seem to be rare while typical instances are relatively easy. This approach to complexity originated in combinatorial group theory, which has a computational tradition going back to the beginning of the last century. The notion of generic complexity was introduced in a 2003 paper (I. Kapovich, A. Myasnikov, P. Schupp and V. Shpilrain, "Generic-case complexity, decision problems in group theory and random walks", J. Algebra, vol. 264 (2003), 665–694), where the authors show ...

Computational Complexity Theory

In theoretical computer science and mathematics, computational complexity theory focuses on classifying computational problems according to their resource usage, and explores the relationships between these classifications. A computational problem is a task solved by a computer; it is solvable by the mechanical application of mathematical steps, such as an algorithm. A problem is regarded as inherently difficult if its solution requires significant resources, whatever the algorithm used. The theory formalizes this intuition by introducing mathematical models of computation to study these problems and quantifying their computational complexity, i.e., the amount of resources needed to solve them, such as time and storage. Other measures of complexity are also used, such as the amount of communication (used in communication complexity), the number of gates in a circuit (used in circuit complexity) and the number of processors (used in parallel computing). O ...

Time Complexity

In theoretical computer science, the time complexity is the computational complexity that describes the amount of computer time it takes to run an algorithm. Time complexity is commonly estimated by counting the number of elementary operations performed by the algorithm, supposing that each elementary operation takes a fixed amount of time to perform. Thus, the amount of time taken and the number of elementary operations performed by the algorithm are taken to be related by a constant factor. Since an algorithm's running time may vary among different inputs of the same size, one commonly considers the worst-case time complexity, which is the maximum amount of time required for inputs of a given size. Less common, and usually specified explicitly, is the average-case complexity, which is the average of the time taken on inputs of a given size (this makes sense because there are only a finite number of possible inputs of a given size). In both cases, the time complexity is gene ...
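The difference between worst-case and average-case counts can be illustrated with a small sketch. It assumes linear search as the algorithm and one comparison as the elementary operation; the function names `comparisons` and `worst_and_average` are hypothetical and chosen only for this illustration.

```python
def comparisons(items, target):
    """Count the comparisons linear search performs before finding target."""
    count = 0
    for item in items:
        count += 1  # one elementary operation: a single comparison
        if item == target:
            break
    return count


def worst_and_average(n):
    """Worst-case and average comparison counts over all n possible targets.

    Assumption: inputs of size n are a fixed list of n distinct items,
    with the target equally likely to sit at each position.
    """
    items = list(range(n))
    counts = [comparisons(items, t) for t in items]
    return max(counts), sum(counts) / len(counts)


print(worst_and_average(5))  # (5, 3.0)
```

Under these assumptions the worst case grows as n and the average as (n + 1) / 2, so both are linear and differ only by a constant factor.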

Satisfiability Problem

In mathematical logic, a formula is ''satisfiable'' if it is true under some assignment of values to its variables. For example, the formula x+3=y is satisfiable because it is true when x=3 and y=6, while the formula x+1=x is not satisfiable over the integers. The dual concept to satisfiability is validity; a formula is ''valid'' if every assignment of values to its variables makes the formula true. For example, x+3=3+x is valid over the integers, but x+3=y is not. Formally, satisfiability is studied with respect to a fixed logic defining the syntax of allowed symbols, such as first-order logic, second-order logic or propositional logic. Rather than being syntactic, however, satisfiability is a semantic property because it relates to the ''meaning'' of the symbols, for example, the meaning of + in a formula such as x+1=x. Formally, we define an interpretation (or model) to be an assignment of values to the variables and an assignment of meaning to all other non-logical symbols, ...

NP-complete Problems

In computational complexity theory, NP-complete problems are the hardest of the problems to which ''solutions'' can be verified ''quickly''. Somewhat more precisely, a problem is NP-complete when: (1) it is a decision problem, meaning that for any input to the problem, the output is either "yes" or "no"; (2) when the answer is "yes", this can be demonstrated through the existence of a short (polynomial length) ''solution''; (3) the correctness of each solution can be verified quickly (namely, in polynomial time) and a brute-force search algorithm can find a solution by trying all possible solutions; (4) the problem can be used to simulate every other problem for which we can verify quickly that a solution is correct. Hence, if we could find solutions of some NP-complete problem quickly, we could quickly find the solutions of every other problem to which a given solution can be easily verified. The name "NP-complete" is short for "nondeterministic polynomial-time complete". In this name, ...
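The contrast between quick verification and brute-force search can be sketched for Boolean satisfiability, a standard NP-complete problem. The encoding is an assumption made for this example: a formula is a list of clauses, each clause a list of nonzero integers, where `k` stands for variable k and `-k` for its negation. The names `verify` and `brute_force` are hypothetical.

```python
from itertools import product


def verify(clauses, assignment):
    """Check a proposed solution in time linear in the size of the formula.

    assignment maps each variable number to True or False.
    """
    return all(
        any(assignment[abs(lit)] == (lit > 0) for lit in clause)
        for clause in clauses
    )


def brute_force(clauses, num_vars):
    """Try all 2**num_vars assignments; return a satisfying one or None."""
    for bits in product([False, True], repeat=num_vars):
        assignment = {i + 1: bit for i, bit in enumerate(bits)}
        if verify(clauses, assignment):
            return assignment
    return None


# (x1 or x2) and (not x1 or x2) and (not x2 or x3)
formula = [[1, 2], [-1, 2], [-2, 3]]
print(brute_force(formula, 3))  # {1: False, 2: True, 3: True}
```

Each call to `verify` is cheap, but `brute_force` may call it exponentially many times; whether that gap can be closed is the open question behind NP-completeness.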

Presburger Arithmetic

Presburger arithmetic is the first-order theory of the natural numbers with addition, named in honor of Mojżesz Presburger, who introduced it in 1929. The signature of Presburger arithmetic contains only the addition operation and equality, omitting the multiplication operation entirely. The theory is computably axiomatizable; the axioms include a schema of induction. Presburger arithmetic is much weaker than Peano arithmetic, which includes both addition and multiplication operations. Unlike Peano arithmetic, Presburger arithmetic is a decidable theory. This means it is possible to algorithmically determine, for any sentence in the language of Presburger arithmetic, whether that sentence is provable from the axioms of Presburger arithmetic. The asymptotic running-time computational complexity of this algorithm is at least doubly exponential, however. Overview: The language of Presburger arithmetic contains constants 0 and 1 and a binary function +, interprete ...

Decision Problem

In computability theory and computational complexity theory, a decision problem is a computational problem that can be posed as a yes–no question on a set of input values. An example of a decision problem is deciding whether a given natural number is prime. Another example is the problem, "given two numbers ''x'' and ''y'', does ''x'' evenly divide ''y''?" A decision procedure for a decision problem is an algorithmic method that answers the yes–no question on all inputs, and a decision problem is called decidable if there is a decision procedure for it. For example, the decision problem "given two numbers ''x'' and ''y'', does ''x'' evenly divide ''y''?" is decidable since there is a decision procedure called long division that gives the steps for determining whether ''x'' evenly divides ''y'' and the correct answer, ''YES'' or ''NO'', accordingly. Some of the most important problems in mathematics are undecidable, e.g. the halting problem. The field of computational ...
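The divisibility example can be written as a decision procedure in a few lines. This is a minimal sketch that uses the remainder operator in place of long division by hand; the function name `divides` and the convention that 0 divides only 0 are assumptions made for the illustration.

```python
def divides(x: int, y: int) -> bool:
    """Decide whether x evenly divides y, for natural numbers x and y."""
    if x == 0:
        # Assumed convention: 0 divides only 0.
        return y == 0
    # The remainder is what long division leaves over; zero means YES.
    return y % x == 0


print(divides(3, 12))  # True
print(divides(5, 12))  # False
```

The procedure halts with a yes or no answer on every input, which is what makes the problem decidable.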

Post Correspondence Problem

The Post correspondence problem is an undecidable decision problem that was introduced by Emil Post in 1946. Because it is simpler than the halting problem and the ''Entscheidungsproblem'', it is often used in proofs of undecidability. Definition of the problem: Let A be an alphabet with at least two symbols. The input of the problem consists of two finite lists \alpha_1, \ldots, \alpha_N and \beta_1, \ldots, \beta_N of words over A. A solution to this problem is a sequence of indices (i_k)_{1 \le k \le K} with K \ge 1 and 1 \le i_k \le N for all k, such that : \alpha_{i_1} \ldots \alpha_{i_K} = \beta_{i_1} \ldots \beta_{i_K}. The decision problem then is to decide whether such a solution exists or not. Alternative definition :g: (i_1,\ldots,i_K) \mapsto \alpha ...
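Because the problem is undecidable, no program can answer it for all inputs, but a search over index sequences up to a fixed length shows what a solution looks like. The sketch below is only such a bounded search: the function name `pcp_bounded` and the cutoff `max_len` are assumptions introduced for this example, and a result of `None` means only that no solution of length at most `max_len` exists.

```python
from itertools import product


def pcp_bounded(alphas, betas, max_len):
    """Search index sequences of length 1..max_len for a PCP solution.

    Returns a list of 1-based indices i_1..i_K whose alpha and beta
    concatenations agree, or None if none is found within the bound.
    """
    n = len(alphas)
    for length in range(1, max_len + 1):
        for idx in product(range(n), repeat=length):
            top = "".join(alphas[i] for i in idx)
            bottom = "".join(betas[i] for i in idx)
            if top == bottom:
                return [i + 1 for i in idx]
    return None


alphas = ["a", "ab", "bba"]
betas = ["baa", "aa", "bb"]
print(pcp_bounded(alphas, betas, 4))  # [3, 2, 3, 1]
```

For this instance the sequence 3, 2, 3, 1 gives "bbaabbbaa" on both sides. The search is exponential in `max_len`, and raising the bound never turns it into a decision procedure.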

Joel David Hamkins

Joel David Hamkins is an American mathematician and philosopher who is the John Cardinal O'Hara Professor of Logic at the University of Notre Dame. He has made contributions in mathematical and philosophical logic, set theory and philosophy of set theory (particularly the idea of the set-theoretic multiverse), in computability theory, and in group theory. Biography: After earning a Bachelor of Science in mathematics at the California Institute of Technology, Hamkins earned his Ph.D. in mathematics in 1994 at the University of California, Berkeley under the supervision of W. Hugh Woodin, with a dissertation entitled ''Lifting and Extending Measures by Forcing; Fragile Measurability.'' He joined the faculty of the City University of New York in 1995, where he was a member of the doctoral faculties in Mathematics, in Philosophy and in Computer Science at the CUNY Graduate Center and professor of mathematics at the College ...

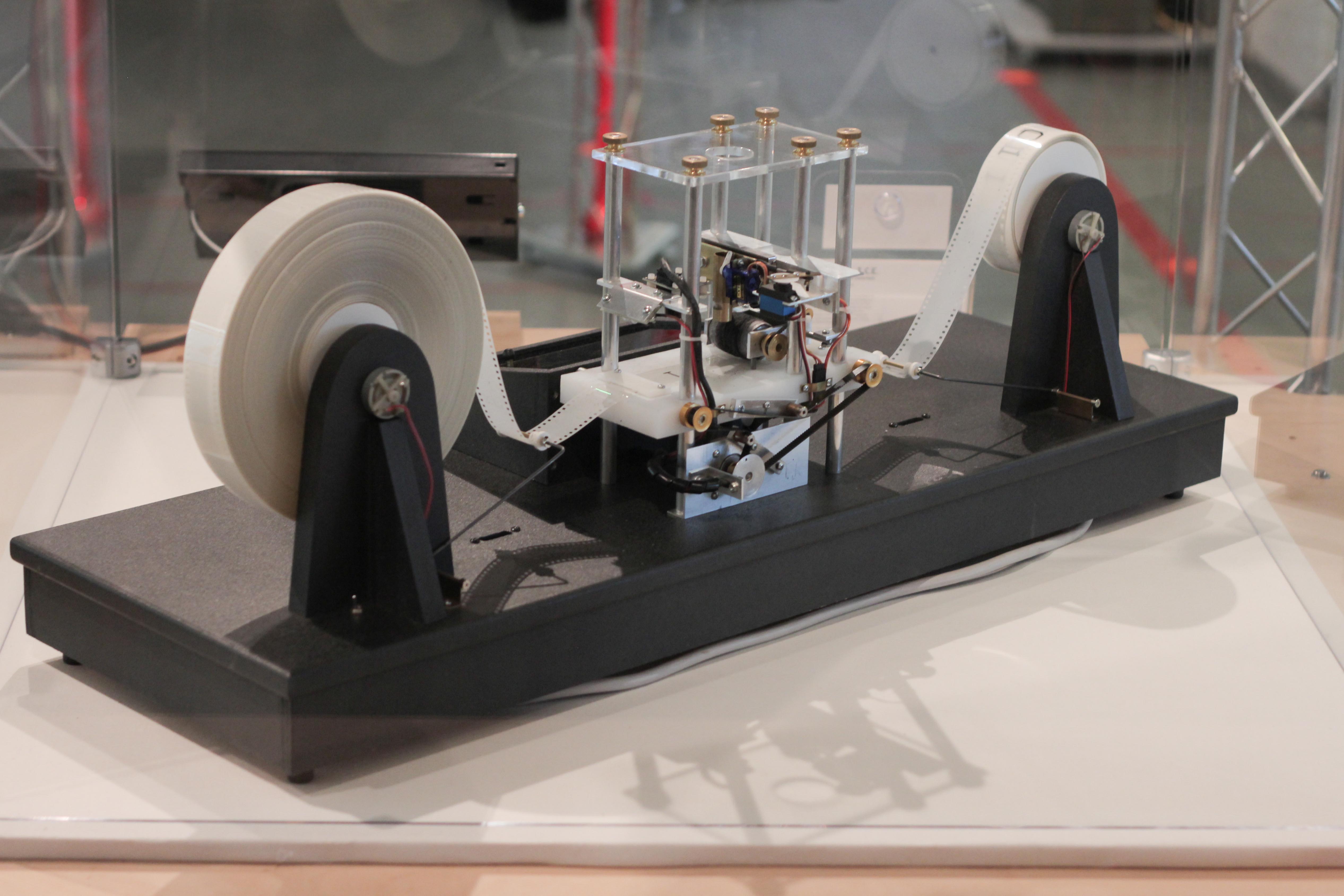

Turing Machine

A Turing machine is a mathematical model of computation describing an abstract machine that manipulates symbols on a strip of tape according to a table of rules. Despite the model's simplicity, it is capable of implementing any computer algorithm. The machine operates on an infinite memory tape divided into discrete cells, each of which can hold a single symbol drawn from a finite set of symbols called the alphabet of the machine. It has a "head" that, at any point in the machine's operation, is positioned over one of these cells, and a "state" selected from a finite set of states. At each step of its operation, the head reads the symbol in its cell. Then, based on the symbol and the machine's own present state, the machine writes a symbol into the same cell, and moves the head one step to the left or the right, or halts the computation. The choice of which replacement symbol to write, which direction to move the head, and whet ...
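The read, write, move cycle described above can be sketched as a short simulator. Several modelling choices here are assumptions made for the example rather than part of the definition: the rule table is a dictionary keyed by (state, symbol), halting is represented by a designated state named `halt`, the blank symbol is `_`, and a step budget `max_steps` guards against machines that never halt.

```python
def run_tm(rules, tape, state="q0", halt="halt", blank="_", max_steps=10_000):
    """Run a single-tape Turing machine and return the final tape contents.

    rules maps (state, symbol) to (symbol_to_write, move, next_state),
    where move is "L" or "R".
    """
    cells = dict(enumerate(tape))  # sparse tape: position -> symbol
    head = 0
    steps = 0
    while state != halt:
        if steps == max_steps:
            raise RuntimeError("step budget exhausted")
        symbol = cells.get(head, blank)              # read
        write, move, state = rules[(state, symbol)]  # look up the rule
        cells[head] = write                          # write
        head += 1 if move == "R" else -1             # move
        steps += 1
    if not cells:
        return ""
    lo, hi = min(cells), max(cells)
    return "".join(cells.get(i, blank) for i in range(lo, hi + 1)).strip(blank)


# A machine that flips every bit, then halts at the first blank cell.
flip = {
    ("q0", "0"): ("1", "R", "q0"),
    ("q0", "1"): ("0", "R", "q0"),
    ("q0", "_"): ("_", "R", "halt"),
}
print(run_tm(flip, "1011"))  # 0100
```

The dictionary-backed tape grows on demand in either direction, which stands in for the infinite tape of the model.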

Halting Problem

In computability theory, the halting problem is the problem of determining, from a description of an arbitrary computer program and an input, whether the program will finish running, or continue to run forever. The halting problem is ''undecidable'', meaning that no general algorithm exists that solves the halting problem for all possible program–input pairs. The problem comes up often in discussions of computability since it demonstrates that some functions are mathematically definable but not computable. A key part of the formal statement of the problem is a mathematical definition of a computer and program, usually via a Turing machine. The proof then shows, for any program that might determine whether programs halt, that a "pathological" program exists on which it makes an incorrect determination. Specifically, the pathological program is the one that, when called with some input, passes its own s ...

Free Group

In mathematics, the free group ''F<sub>S</sub>'' over a given set ''S'' consists of all words that can be built from members of ''S'', considering two words to be different unless their equality follows from the group axioms (e.g. ''st'' = ''suu''<sup>−1</sup>''t'' but ''s'' ≠ ''t''<sup>−1</sup> for ''s'',''t'',''u'' ∈ ''S''). The members of ''S'' are called generators of ''F<sub>S</sub>'', and the number of generators is the rank of the free group. An arbitrary group ''G'' is called free if it is isomorphic to ''F<sub>S</sub>'' for some subset ''S'' of ''G'', that is, if there is a subset ''S'' of ''G'' such that every element of ''G'' can be written in exactly one way as a product of finitely many elements of ''S'' and their inverses (disregarding trivial variations such as ''st'' = ''suu''<sup>−1</sup>''t''). A related but different notion is a free abelian group; both notions are particular instances of a free object from universal algebra. As such, free groups are defined by their universal property. History ...
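Equality of words in a free group can be tested by free reduction: cancel adjacent pairs of a generator and its inverse until none remain, then compare the results. The sketch below assumes a word is given as a list of (generator, exponent) pairs with exponent +1 or −1; the name `reduce_word` is hypothetical.

```python
def reduce_word(word):
    """Freely reduce a word given as a list of (generator, exponent) pairs.

    Exponents are +1 or -1. A stack makes one left-to-right pass enough:
    each new letter either cancels the letter on top or is pushed.
    """
    stack = []
    for gen, exp in word:
        if stack and stack[-1] == (gen, -exp):
            stack.pop()  # adjacent x x^-1 or x^-1 x cancels
        else:
            stack.append((gen, exp))
    return stack


# s u u^-1 t reduces to s t
print(reduce_word([("s", 1), ("u", 1), ("u", -1), ("t", 1)]))
# [('s', 1), ('t', 1)]
```

Two words represent the same element of the free group exactly when their reduced forms coincide, so `reduce_word(a) == reduce_word(b)` serves as the equality test under this encoding.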

HNN Extension

In mathematics, the HNN extension is an important construction of combinatorial group theory. Introduced in a 1949 paper ''Embedding Theorems for Groups'' by Graham Higman, Bernhard Neumann, and Hanna Neumann, it embeds a given group ''G'' into another group ''G′'', in such a way that two given isomorphic subgroups of ''G'' are conjugate (through a given isomorphism) in ''G′''. Construction: Let ''G'' be a group with presentation G = \langle S \mid R \rangle, and let \alpha\colon H \to K be an isomorphism between two subgroups of ''G''. Let ''t'' be a new symbol not in ''S'', and define : G*_{\alpha} = \left\langle S, t \mid R, tht^{-1} = \alpha(h), \forall h \in H \right\rangle. The group G*_{\alpha} is called the ''HNN extension of'' ''G'' ''relative to'' α. The original group ''G'' is called the ''base group'' for the construction, while the subgroups ''H'' and ''K'' are the ''associated subgroups''. The new generator ''t'' is called the ''stable letter''. Key properties: Since the presentation fo ...