
Generalized Processor Sharing

Generalized processor sharing (GPS) is an ideal scheduling algorithm for process schedulers and network schedulers. It is related to the fair-queuing principle, which groups packets into classes and shares the service capacity between them. GPS shares this capacity according to fixed weights. In process scheduling, GPS is "an idealized scheduling algorithm that achieves perfect fairness. All practical schedulers approximate GPS and use it as a reference to measure fairness." Generalized processor sharing assumes that traffic is fluid (infinitesimal packet sizes) and can be arbitrarily split. Several service disciplines track the performance of GPS quite closely, such as weighted fair queuing (WFQ), also known as packet-by-packet generalized processor sharing (PGPS).

Justification: In a network such as the Internet, different application types require different levels of performance. For example, email is a genuinely store and forward kind of application, ...
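The weighted sharing described above can be illustrated with a minimal sketch. It assumes the fluid model from the excerpt and computes the instantaneous service rate of each class at one moment in time; the function name `gps_rates` and its parameters are hypothetical, chosen only for this illustration.

```python
def gps_rates(weights, backlogged, capacity):
    """Split `capacity` among the backlogged classes in proportion to their weights.

    weights:    dict mapping class name -> positive weight (assumed fixed)
    backlogged: set of class names that currently have traffic waiting
    capacity:   total service capacity (for example, bits per second)
    """
    # Only backlogged classes compete for capacity; idle classes get nothing.
    active_weight = sum(weights[c] for c in backlogged)
    rates = {c: 0.0 for c in weights}
    for c in backlogged:
        rates[c] = capacity * weights[c] / active_weight
    return rates


if __name__ == "__main__":
    w = {"voice": 1, "bulk": 3}
    print(gps_rates(w, {"voice", "bulk"}, 100.0))
    print(gps_rates(w, {"voice"}, 100.0))
```

When a class goes idle, its share is redistributed among the remaining backlogged classes in proportion to their weights, which is why the second call gives the whole capacity to the only active class.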

Scheduling Algorithm

In computing, scheduling is the action of assigning resources to perform tasks. The resources may be processors, network links or expansion cards. The tasks may be threads, processes or data flows. The scheduling activity is carried out by a mechanism called a scheduler. Schedulers are often designed to keep all computer resources busy (as in load balancing), allow multiple users to share system resources effectively, or achieve a target quality of service. Scheduling is fundamental to computation itself, and an intrinsic part of the execution model of a computer system; the concept of scheduling makes it possible to have computer multitasking with a single central processing unit (CPU).

Goals: A scheduler may aim at one or more goals, for example:
* maximizing throughput (the total amount of work completed per time unit);
* minimizing wait time (time from work becoming ready until the first point it begins execution);
* minimizing latency or ...

First-come, First-served

Queueing theory is the mathematical study of waiting lines, or queues. A queueing model is constructed so that queue lengths and waiting time can be predicted. Queueing theory is generally considered a branch of operations research because the results are often used when making business decisions about the resources needed to provide a service. Queueing theory has its origins in research by Agner Krarup Erlang, who created models to describe the system of incoming calls at the Copenhagen Telephone Exchange Company. These ideas were seminal to the field of teletraffic engineering and have since seen applications in telecommunications, traffic engineering, computing, project management, and particularly industrial engineering, where they are applied in the design of factories, shops, offices, and hospitals.

Spelling: The spelling "queueing" over "queuing" is typically encountered in the academic research field. In fa ...

Weighted Round Robin

Weighted round robin (WRR) is a network scheduler for data flows that is also used to schedule processes. Weighted round robin is a generalisation of round-robin scheduling. It serves a set of queues or tasks. Whereas round-robin cycles over the queues or tasks and gives each one service opportunity per cycle, weighted round robin offers each a fixed number of opportunities, as specified by its configured weight, which influences the portion of capacity received by each queue or task. In computer networks, a service opportunity is the emission of one packet, if the selected queue is non-empty. If all packets have the same size, WRR is the simplest approximation of generalized processor sharing (GPS). Several variations of WRR exist. The main ones are the classical WRR and the interleaved WRR.

Algorithm principles: WRR is presented in the following as a network scheduler. It can also be used to schedule tasks in a similar way. A weighted round-robin network sc ...
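The cycle described above, in which each queue receives a fixed number of service opportunities per round, can be sketched as follows. This is a minimal illustration of the classical variant only, assuming positive integer weights and that a queue forfeits its remaining opportunities in a cycle once it is empty; the name `classical_wrr` is hypothetical.

```python
from collections import deque


def classical_wrr(queues, weights):
    """Return packets in emission order until every queue is empty.

    queues:  list of deques holding packets (any objects)
    weights: list of positive integers, one per queue (assumption: weight >= 1)
    """
    order = []
    while any(queues):  # an empty deque is falsy
        for q, w in zip(queues, weights):
            # Queue gets up to `w` service opportunities in this cycle.
            for _ in range(w):
                if not q:
                    break  # nothing to send: the opportunity is skipped
                order.append(q.popleft())
    return order


if __name__ == "__main__":
    qs = [deque(["a1", "a2", "a3"]), deque(["b1", "b2"])]
    print(classical_wrr(qs, [2, 1]))
```

Because opportunities are counted in packets, the capacity shares match the weights only when all packets have the same size, which is the condition the excerpt gives for WRR approximating GPS.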

Processor Sharing

Processor sharing or egalitarian processor sharing is a service policy where the customers, clients or jobs are all served simultaneously, each receiving an equal fraction of the service capacity available. In such a system all jobs start service immediately (there is no queueing). The processor sharing algorithm "emerged as an idealisation of round-robin scheduling algorithms in time-shared computer systems".

Queueing theory: A single-server queue operating subject to Poisson arrivals (such as an M/M/1 queue or M/G/1 queue) with a processor sharing discipline has a geometric stationary distribution. The sojourn time that jobs experience has no closed-form solution, even in an M/M/1 queue.

Generalized processor sharing: Generalized processor sharing is a multi-class adaptation of the policy which shares service capacity according to positive weight factors among all non-empty job classes at the node, irrespective of the number of jobs of each class present. Often it is assumed that ...
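The geometric stationary distribution mentioned above can be written out explicitly. The symbols are assumptions introduced here and do not appear in the excerpt: $\lambda$ is the Poisson arrival rate, $S$ is the service requirement of a job, $\rho$ is the resulting load, and $N$ is the number of jobs in the system in steady state.

```latex
\[
  \Pr(N = n) = (1 - \rho)\,\rho^{n}, \qquad n = 0, 1, 2, \dots,
  \qquad \rho = \lambda\,\mathbb{E}[S] < 1 .
\]
% Mean number of jobs in the system, obtained by summing n * Pr(N = n):
\[
  \mathbb{E}[N] = \sum_{n \ge 0} n\,(1 - \rho)\,\rho^{n} = \frac{\rho}{1 - \rho} .
\]
```

The distribution depends on the service requirement only through its mean, which is why the same form holds for both the M/M/1 and M/G/1 cases named in the excerpt.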

Network Scheduler

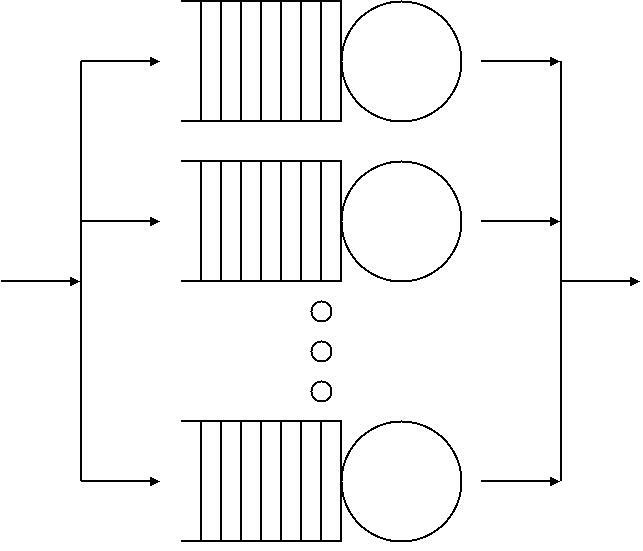

A network scheduler, also called packet scheduler, queueing discipline (qdisc) or queueing algorithm, is an arbiter on a node in a packet-switching communication network. It manages the sequence of network packets in the transmit and receive queues of the protocol stack and network interface controller. Several network schedulers are available for the different operating systems, implementing many of the existing network scheduling algorithms. The network scheduler logic decides which network packet to forward next. The network scheduler is associated with a queuing system, storing the network packets temporarily until they are transmitted. Systems may have a single queue or multiple queues, in which case each may hold the packets of one flow, classification, or priority. In some cases it may not be possible to schedule all transmissions within the constraints of the system. In these cases the network scheduler is responsible for deciding which traffic to forward and what ...

Fairness Measure

Fairness measures or metrics are used in network engineering to determine whether users or applications are receiving a fair share of system resources. There are several mathematical and conceptual definitions of fairness.

Transmission Control Protocol fairness: Congestion control mechanisms for new network transmission protocols or peer-to-peer applications must interact well with Transmission Control Protocol (TCP). TCP fairness requires that a new protocol receive no larger share of the network than a comparable TCP flow. This is important because TCP is the dominant transport protocol on the Internet, and if new protocols acquire unfair capacity they tend to cause problems such as congestion collapse. This was the case with the first versions of RealMedia's streaming protocol: it was based on UDP and was widely blocked at organizational firewalls until a TCP-based version was developed. TCP throughput unfairness over WiFi is a critical problem and needs further investigation. ...

Deficit Round Robin

Deficit Round Robin (DRR), also called Deficit Weighted Round Robin (DWRR), is a scheduling algorithm for the network scheduler. Like weighted fair queuing (WFQ), DRR is a packet-based implementation of the ideal Generalized Processor Sharing (GPS) policy. It was proposed by M. Shreedhar and G. Varghese in 1995 as an efficient (with O(1) complexity) and fair algorithm.

Details: In DRR, a scheduler handling $N$ flows is configured with one quantum $Q_i$ for each flow. The general idea is that, at each round, flow $i$ can send at most $Q_i$ bytes, and the unused remainder, if any, is carried over to the next round. In this way, the minimum rate that flow $i$ will achieve over the long term is $\frac{Q_i}{Q_1 + Q_2 + \dots + Q_N} R$, where $R$ is the link rate.

Algorithm: DRR scans all non-empty queues in sequence. When a non-empty queue $i$ is selected, its deficit counter is incremented by its quantum value. The value of the deficit counter is then the maximum number of bytes that can be sent in this turn: if the deficit count ...
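The scan over non-empty queues can be sketched as below. The excerpt is truncated before the full rule, so two details are assumptions taken from the usual description of DRR rather than from the text above: a packet is sent only when its size does not exceed the deficit counter, and the counter is reset to zero when a queue becomes empty. The name `drr` and the representation of packets as byte sizes are hypothetical.

```python
from collections import deque


def drr(queues, quanta):
    """Return (flow index, packet size) pairs in DRR emission order.

    queues: list of deques of packet sizes in bytes
    quanta: list of quantum values in bytes, one per flow; assumed large
            enough that every packet is eventually sendable
    """
    deficits = [0] * len(queues)
    sent = []
    while any(queues):
        for i, q in enumerate(queues):
            if not q:
                continue  # empty queues are skipped and accumulate nothing
            deficits[i] += quanta[i]
            # Send head packets while they fit within the deficit counter.
            while q and q[0] <= deficits[i]:
                size = q.popleft()
                deficits[i] -= size
                sent.append((i, size))
            if not q:
                deficits[i] = 0  # assumption: an emptied queue keeps no credit
    return sent


if __name__ == "__main__":
    print(drr([deque([300, 300]), deque([500])], [400, 400]))
```

In the usage example, flow 1 cannot send its 500-byte packet in the first round because its counter is only 400; the unused credit carries over, and the packet goes out in the second round.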

Weighted Fair Queuing

Weighted fair queueing (WFQ) is a network scheduling algorithm. WFQ is both a packet-based implementation of the generalized processor sharing (GPS) policy and a natural extension of fair queuing (FQ). Whereas FQ shares the link's capacity in equal subparts, WFQ allows schedulers to specify, for each flow, which fraction of the capacity will be given. Weighted fair queuing is also known as packet-by-packet GPS (PGPS or P-GPS) since it approximates generalized processor sharing "to within one packet transmission time, regardless of the arrival patterns."

Parametrization and fairness: As with other GPS-like scheduling algorithms, the choice of the weights is left to the network administrator. There is no unique definition of what is "fair" (see Fairness Measure for further discussion). By regulating the WFQ weights dynamically, WFQ can be utilized for controlling the quality of service. Quality of service (QoS) is the description or measurement of the overall performance of a service, such ...
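One way to see how a packet-based scheduler follows GPS is to tag each packet with the virtual time at which GPS would finish serving it and then send packets in increasing tag order. The sketch below is a simplified special case, not a full WFQ implementation: it assumes every packet is already queued at time zero, so no virtual clock needs to be tracked. The name `wfq_order` and the tie-breaking by flow index are choices made for this illustration.

```python
def wfq_order(flows, weights):
    """Return (flow index, packet size) pairs in increasing finish-tag order.

    flows:   list of lists of packet sizes, each in arrival order
    weights: list of positive weights, one per flow
    Assumption: all packets are present at time zero.
    """
    tagged = []
    for i, (sizes, w) in enumerate(zip(flows, weights)):
        finish = 0.0
        for k, size in enumerate(sizes):
            # Each packet finishes size / weight virtual time units
            # after the previous packet of the same flow.
            finish += size / w
            tagged.append((finish, i, k, size))
    tagged.sort()  # ties are broken by flow index, then packet index
    return [(i, size) for _, i, _, size in tagged]


if __name__ == "__main__":
    print(wfq_order([[100, 100], [100, 100]], [2, 1]))
```

With weights 2 and 1, the first flow's packets receive tags 50 and 100 while the second flow's receive 100 and 200, so the first flow is served twice before the second flow's first packet.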

Network Delay

Network delay is a design and performance characteristic of a telecommunications network. It specifies the latency for a bit of data to travel across the network from one communication endpoint to another. It is typically measured in multiples or fractions of a second. Delay may differ slightly, depending on the location of the specific pair of communicating endpoints. Engineers usually report both the maximum and average delay, and they divide the delay into several parts:
* Processing delay: time it takes a router to process the packet header
* Queuing delay: time the packet spends in routing queues
* Transmission delay: time it takes to push the packet's bits onto the link
* Propagation delay: time for a signal to propagate through the media

A certain minimum level of delay is experienced by signals due to the time it takes to transmit a packet serially through a link. This delay is extended by more variable levels of delay due to network congestion. IP network delays c ...
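The four components listed above add up to the delay across one hop. The following sketch shows the arithmetic under stated assumptions: transmission delay is taken as packet size divided by link rate, propagation delay as distance divided by signal speed, and the default speed of 2e8 m/s is an illustrative figure for a cable, not a value from the text. The function name and parameters are hypothetical.

```python
def one_hop_delay(packet_bits, link_bps, distance_m,
                  signal_mps=2e8, processing_s=0.0, queuing_s=0.0):
    """Return the total delay in seconds for one packet over one link."""
    transmission_s = packet_bits / link_bps   # time to push the bits onto the link
    propagation_s = distance_m / signal_mps   # time for the signal to cross the medium
    return processing_s + queuing_s + transmission_s + propagation_s


if __name__ == "__main__":
    # A 1500-byte packet on a 1 Mbit/s link that is 200 km long.
    print(one_hop_delay(1500 * 8, 1e6, 200_000))
```

In this example the transmission term (12 ms) dominates the propagation term (1 ms); on a faster link over the same distance the balance reverses.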

Scheduling (computing)

In computing, scheduling is the action of assigning resources to perform tasks. The resources may be processors, network links or expansion cards. The tasks may be threads, processes or data flows. The scheduling activity is carried out by a mechanism called a scheduler. Schedulers are often designed to keep all computer resources busy (as in load balancing), allow multiple users to share system resources effectively, or achieve a target quality of service. Scheduling is fundamental to computation itself, and an intrinsic part of the execution model of a computer system; the concept of scheduling makes it possible to have computer multitasking with a single central processing unit (CPU).

Goals: A scheduler may aim at one or more goals, for example:
* maximizing throughput (the total amount of work completed per time unit);
* minimizing wait time (time from work becoming ready until the first point it begins execution);
* minimizing latency o ...

Videoconferencing

Videotelephony (also known as videoconferencing or video calling) is the use of audio and video for simultaneous two-way communication. Today, videotelephony is widespread. There are many terms to refer to videotelephony. Videophones are standalone devices for video calling (compare Telephone). In the present day, devices like smartphones and computers are capable of video calling, reducing the demand for separate videophones. Videoconferencing implies group communication (Mulbach et al., 1995, p. 291). Videoconferencing is used in telepresence, whose goal is to create the illusion that remote participants are in the same room. The concept of videotelephony was conceived in the late 19th century, and versions were available to the public starting in the 1930s. Early demonstrations were installed at booths in post offices and shown at various world expositions. In 1970, AT&T launched the first commercial personal videotelephone system. In addition to videoph ...

Store And Forward

Store and forward is a telecommunications technique in which information is sent to an intermediate station where it is kept and sent at a later time to the final destination or to another intermediate station. The intermediate station, or node in a networking context, verifies the integrity of the message before forwarding it. In general, this technique is used in networks with intermittent connectivity, especially in the wilderness or environments requiring high mobility. It may also be preferable in situations when there are long delays in transmission and error rates are variable and high, or if a direct, end-to-end connection is not available.

Modern store and forward networking:
* Store and forward originates with delay-tolerant networks. No real-time services are available for these kinds of networks.
* Logistical Networking is a scalable form of store and forward networking that exposes network-embedded buffers on intermediate nodes and allows flexible creation of serv ...