
GPT-4

Generative Pre-trained Transformer 4 (GPT-4) is a multimodal large language model created by OpenAI and the fourth in its GPT series. It was released on March 14, 2023, and has been made publicly available in a limited form via ChatGPT Plus, with access to its commercial API provided via a waitlist. As a transformer, GPT-4 was pretrained to predict the next token (using both public data and "data licensed from third-party providers"), and was then fine-tuned with reinforcement learning from human and AI feedback for human alignment and policy compliance. Observers reported the GPT-4-based version of ChatGPT to be an improvement on the previous (GPT-3.5-based) ChatGPT, with the caveat that GPT-4 retains some of the same problems. Unlike its predecessors, GPT-4 can take images as well as text as input. OpenAI has declined to reveal technical information such as the size of the GPT-4 model.

Background

OpenAI published their first paper on GPT in 2018, called "Improv ...

Large Language Model

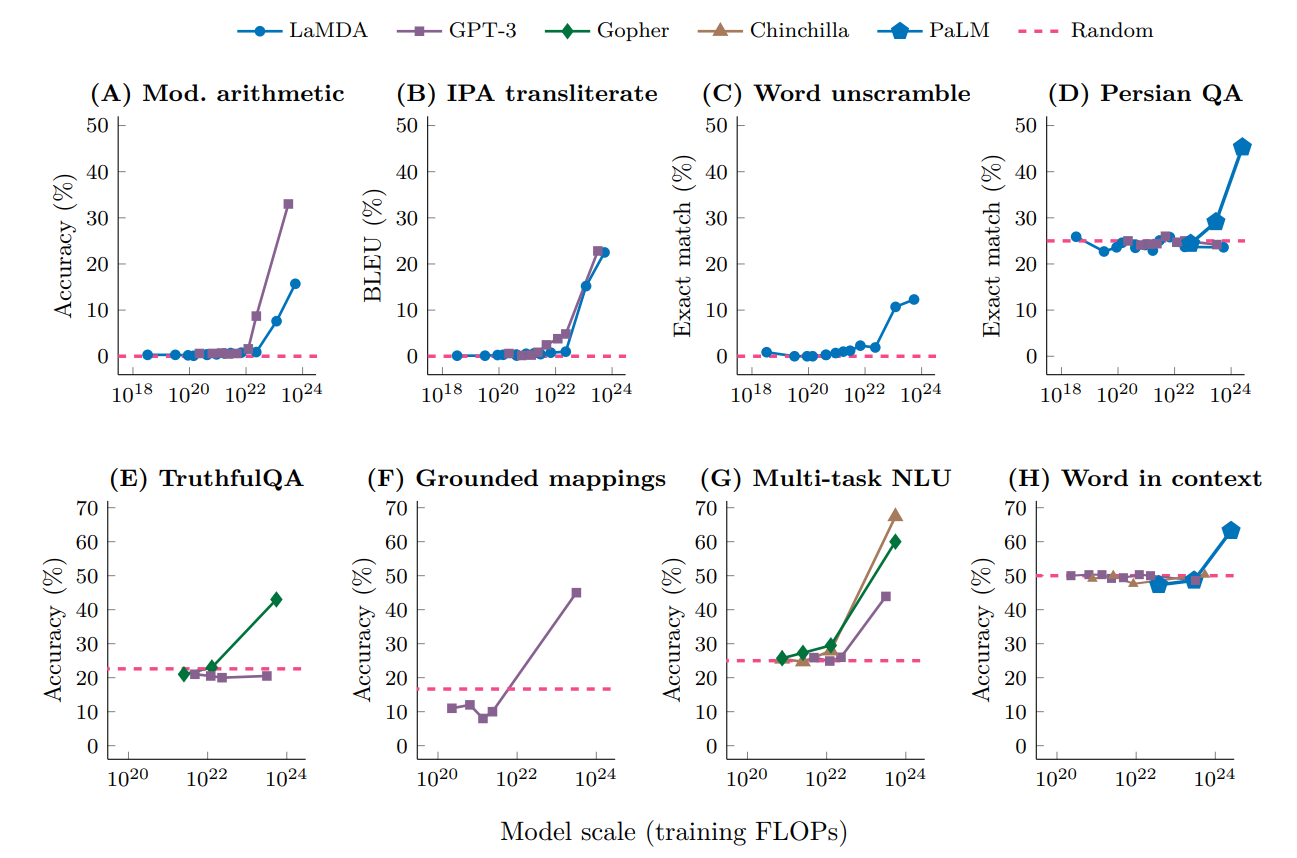

A large language model (LLM) is a language model consisting of a neural network with many parameters (typically billions of weights or more), trained on large quantities of unlabelled text using self-supervised learning. LLMs emerged around 2018 and perform well at a wide variety of tasks. This has shifted the focus of natural language processing research away from the previous paradigm of training specialized supervised models for specific tasks.

Properties

Though the term "large language model" has no formal definition, it often refers to deep learning models having a parameter count on the order of billions or more. LLMs are general-purpose models which excel at a wide range of tasks, as opposed to being trained for one specific task (such as sentiment analysis, named entity recognition, or mathematical reasoning). The skill with which they accomplish tasks, and the range of tasks at which they are capable, seem to be a function of the amount of resources (data, parameter-siz ...
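To make "self-supervised learning on unlabelled text" concrete, here is a minimal sketch in which the training targets come from the text itself: each token is the label for the token before it. It uses a simple bigram count table rather than a neural network, so it only illustrates the next-token objective and is not how an LLM is built. The function names `train_bigram` and `predict_next` and the whitespace tokenization are assumptions made for the example.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """Count which token follows which, using only the raw text as supervision."""
    tokens = text.split()  # assumption: naive whitespace tokenization
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts


def predict_next(counts, token):
    """Return the most frequently observed follower of `token`, or None if unseen."""
    followers = counts.get(token)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


counts = train_bigram("the cat sat on the mat the cat ran")
print(predict_next(counts, "the"))
```

No human-written labels are involved: the pairs (previous token, next token) are extracted mechanically from the corpus.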

ChatGPT

ChatGPT (Chat Generative Pre-trained Transformer) is a chatbot launched by OpenAI in November 2022. It is built on top of OpenAI's GPT-3 family of large language models, and is fine-tuned (an approach to transfer learning) with both supervised and reinforcement learning techniques. ChatGPT was launched as a prototype on November 30, 2022, and quickly garnered attention for its detailed responses and articulate answers across many domains of knowledge. Its uneven factual accuracy was identified as a significant drawback. Following the release of ChatGPT, OpenAI was valued at $29 billion.

Training

ChatGPT was fine-tuned on top of GPT-3.5 using supervised learning as well as reinforcement learning. Both approaches used human trainers to improve the model's performance. In the case of supervised learning, the model was provided with conversations in which the trainers played both sides: the user and the AI assistant. In the reinforcement step, human trainers first ranked responses ...

Reinforcement Learning From Human Feedback

In machine learning, reinforcement learning from human feedback (RLHF), or reinforcement learning from human preferences, is a technique that trains a "reward model" directly from human feedback and uses that model as a reward function to optimize an agent's policy using reinforcement learning (RL) through an optimization algorithm like Proximal Policy Optimization. The reward model is trained before the policy is optimized, and it predicts whether a given output is good (high reward) or bad (low reward). RLHF can improve the robustness and exploration of RL agents, especially when the reward function is sparse or noisy. Human feedback is collected by asking humans to rank instances of the agent's behavior. These rankings can then be used to score outputs, for example with the Elo rating system. RLHF has been applied to various domains of natural language processing, such as conversational agents, text summarization, and natural language understanding. Ordinary reinforcement learnin ...
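The step of turning human rankings into scores with the Elo rating system can be sketched as follows. This is only an illustration under stated assumptions: the K-factor of 32, the 400-point scale, and the starting rating of 1000 are conventional chess values rather than anything taken from an RLHF system, and the function names are hypothetical.

```python
def expected_score(rating_a, rating_b):
    """Probability that A is preferred over B under the Elo model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


def elo_update(rating_a, rating_b, a_wins, k=32.0):
    """Return updated (rating_a, rating_b) after one pairwise human judgment."""
    exp_a = expected_score(rating_a, rating_b)
    score_a = 1.0 if a_wins else 0.0
    new_a = rating_a + k * (score_a - exp_a)
    new_b = rating_b + k * ((1.0 - score_a) - (1.0 - exp_a))
    return new_a, new_b


def score_outputs(comparisons, initial=1000.0, k=32.0):
    """Turn a list of (preferred, rejected) output ids into Elo ratings."""
    ratings = {}
    for winner, loser in comparisons:
        r_w = ratings.get(winner, initial)
        r_l = ratings.get(loser, initial)
        ratings[winner], ratings[loser] = elo_update(r_w, r_l, True, k)
    return ratings


# Hypothetical judgments: "a" preferred to "b" and "c", "b" preferred to "c".
ratings = score_outputs([("a", "b"), ("a", "c"), ("b", "c")])
print(sorted(ratings, key=ratings.get, reverse=True))
```

The resulting ratings could serve as scalar targets when fitting a reward model, although practical systems may use other preference models instead.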

OpenAI

OpenAI is an artificial intelligence (AI) research laboratory consisting of the for-profit corporation OpenAI LP and its parent company, the non-profit OpenAI Inc. The company conducts research in the field of AI with the stated goal of promoting and developing friendly AI in a way that benefits humanity as a whole. The organization was founded in San Francisco in late 2015 by Sam Altman, Elon Musk, and others, who collectively pledged US$1 billion. Musk resigned from the board in February 2018 but remained a donor. In 2019, OpenAI LP received a US$1 billion investment from Microsoft. OpenAI is headquartered at the Pioneer Building in the Mission District, San Francisco.

History

In December 2015, Sam Altman, Elon Musk, Greg Brockman, Reid Hoffman, Jessica Livingston, Peter Thiel, Amazon Web Services (AWS), Infosys, and YC Research announced the formation of OpenAI and pledged over US$1 billion to the venture. The organization stated it would "freely collaborate" wi ...

Med-PaLM

Palm most commonly refers to:
* Palm of the hand, the central region of the front of the hand
* Palm plants, of family Arecaceae
** List of Arecaceae genera
* Several other plants known as "palm"

Palm or Palms may also refer to:

Music
* Palm (band), an American rock band
* Palms (band), an American rock band featuring members of Deftones and Isis
** Palms (Palms album), their 2013 album
* Palms (Thrice album), a 2018 album by American rock band Thrice

Businesses and organizations
* Palm, Inc., defunct American electronics manufacturer
* Palm Breweries, a Belgian company
* Palm Pictures, an American entertainment company
* Palm Records, a French jazz record label
* Palms Casino Resort, a hotel and casino in Las Vegas, U.S.
* The Palm (restaurant), New York City, U.S.
* Palm Cabaret and Bar, Puerto Vallarta, Jalisco, Mexico

Places

United States
* Midway, Lafayette County, Arkansas, also known as Palm
* Palm, Pennsylvania
* Palms, Los Angeles
** Palms station
* Palms, Minden ...

Inference (machine Learning)

Statistical inference is the process of using data analysis to infer properties of an underlying distribution of probability (Upton, G., Cook, I. (2008), Oxford Dictionary of Statistics, OUP). Inferential statistical analysis infers properties of a population, for example by testing hypotheses and deriving estimates. It is assumed that the observed data set is sampled from a larger population. Inferential statistics can be contrasted with descriptive statistics. Descriptive statistics is solely concerned with properties of the observed data, and it does not rest on the assumption that the data come from a larger population. In machine learning, the term "inference" is sometimes used instead to mean "make a prediction, by evaluating an already trained model"; in this context inferring properties of the model is referred to as "training" or "learning" (rather than "inference"), and using a model for prediction is referred to as "inference" (instead of "prediction" ...
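The machine learning usage of the two terms can be illustrated with a tiny sketch: `train` estimates the model's parameters from data (what a statistician would call inference), while `infer` evaluates the already trained model on a new input (what a statistician would call prediction). The one-variable least-squares line and both function names are assumptions chosen for brevity.

```python
def train(xs, ys):
    """Training / learning: estimate slope and intercept by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def infer(model, x):
    """Inference in the ML sense: evaluate the trained model on a new input."""
    slope, intercept = model
    return slope * x + intercept


model = train([0, 1, 2, 3], [1, 3, 5, 7])  # parameters are fitted once
print(infer(model, 10))                     # then reused for new inputs
```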

Supervised Learning

Supervised learning (SL) is a machine learning paradigm for problems where the available data consists of labelled examples, meaning that each data point contains features (covariates) and an associated label. The goal of supervised learning algorithms is learning a function that maps feature vectors (inputs) to labels (output), based on example input-output pairs. It infers a function from labelled training data consisting of a set of training examples. In supervised learning, each example is a pair consisting of an input object (typically a vector) and a desired output value (also called the supervisory signal). A supervised learning algorithm analyzes the training data and produces an inferred function, which can be used for mapping new examples. An optimal scenario will allow for the algorithm to correctly determine the class labels for unseen instances. This requires the learning algorithm to generalize from the training data to unseen situations in a "reasonable" way (see inductive b ...
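As a small illustration of inferring a function from labelled input-output pairs, the sketch below uses a 1-nearest-neighbour classifier, chosen here only because it is short; the passage does not prescribe any particular algorithm. The name `fit_nearest_neighbor` and the toy data are hypothetical.

```python
from math import dist


def fit_nearest_neighbor(examples):
    """Take (feature_vector, label) pairs and return an inferred function."""
    stored = list(examples)

    def predict(features):
        # Label a new input with the label of its closest training example.
        _, label = min(stored, key=lambda pair: dist(pair[0], features))
        return label

    return predict


training_examples = [
    ((0.0, 0.0), "low"),
    ((0.1, 0.2), "low"),
    ((1.0, 1.0), "high"),
    ((0.9, 1.1), "high"),
]
classify = fit_nearest_neighbor(training_examples)
print(classify((0.2, 0.1)), classify((0.8, 0.9)))
```

Neither query point appears in the training data, so the answers depend on how the method generalizes: here, on the assumption that nearby inputs share a label.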

Dataset (machine Learning)

In machine learning, a common task is the study and construction of algorithms that can learn from and make predictions on data. Such algorithms function by making data-driven predictions or decisions, through building a mathematical model from input data. The input data used to build the model are usually divided into multiple data sets. In particular, three data sets are commonly used in different stages of the creation of the model: training, validation and test sets. The model is initially fit on a training data set, which is a set of examples used to fit the parameters (e.g. weights of connections between neurons in artificial neural networks) of the model. The model (e.g. a naive Bayes classifier) is trained on the training data set using a supervised learning method, for example using optimization methods such as gradient descent or stochastic gradient descent. In practice, the training data set often consists of pairs of an input vector (or scalar) and the correspondi ...
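The division into training, validation and test sets might be sketched as below. The 80/10/10 proportions, the fixed shuffle seed, and the name `split_dataset` are assumptions for illustration; the passage does not specify how the data should be divided.

```python
import random


def split_dataset(examples, train_frac=0.8, val_frac=0.1, seed=0):
    """Shuffle once, then cut into disjoint train / validation / test lists."""
    if not 0 < train_frac < 1 or not 0 <= val_frac < 1:
        raise ValueError("fractions must lie between 0 and 1")
    if train_frac + val_frac >= 1:
        raise ValueError("train_frac + val_frac must leave room for a test set")
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)  # seeded for reproducibility
    n_train = int(len(shuffled) * train_frac)
    n_val = int(len(shuffled) * val_frac)
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]  # whatever remains
    return train, val, test


train, val, test = split_dataset(range(100))
print(len(train), len(val), len(test))
```

Keeping the three lists disjoint is the point of the exercise: parameters are fitted on the first, and the other two are held back for later stages.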

Hyperparameter (machine Learning)

In machine learning, a hyperparameter is a parameter whose value is used to control the learning process. By contrast, the values of other parameters (typically node weights) are derived via training. Hyperparameters can be classified as model hyperparameters, which cannot be inferred while fitting the machine to the training set because they refer to the model selection task, or algorithm hyperparameters, which in principle have no influence on the performance of the model but affect the speed and quality of the learning process. An example of a model hyperparameter is the topology and size of a neural network. Examples of algorithm hyperparameters are learning rate and batch size as well as mini-batch size. Batch size can refer to the full data sample, whereas mini-batch size refers to a smaller sample set. Different model training algorithms require different hyperparameters; some simple algorithms (such as ordinary least squares regression) require none. Given these hyperparameters ...
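The distinction between hyperparameters and learned parameters can be shown in a few lines. In this sketch the learning rate, mini-batch size and epoch count are fixed before training starts, while the single weight `w` is derived from the data. The `Hyperparameters` class, the default values, and the one-weight model y = w * x are all assumptions made for the example.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Hyperparameters:
    """Algorithm hyperparameters: chosen by the user, never updated by training."""
    learning_rate: float = 0.05
    batch_size: int = 2
    epochs: int = 200


def train_weight(data, hp, seed=0):
    """Fit w in y = w * x by mini-batch gradient descent on squared error."""
    rng = random.Random(seed)
    rows = list(data)
    w = 0.0  # the learned parameter
    for _ in range(hp.epochs):
        rng.shuffle(rows)
        for start in range(0, len(rows), hp.batch_size):
            batch = rows[start:start + hp.batch_size]
            grad = sum(2.0 * (w * x - y) * x for x, y in batch) / len(batch)
            w -= hp.learning_rate * grad
    return w


data = [(1.0, 3.0), (2.0, 6.0), (3.0, 9.0), (4.0, 12.0)]  # generated by y = 3x
print(train_weight(data, Hyperparameters()))
```

Changing a field of `Hyperparameters` changes how training proceeds, but the value of `w` is always an output of training rather than an input to it.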

Learning Rate

In machine learning and statistics, the learning rate is a tuning parameter in an optimization algorithm that determines the step size at each iteration while moving toward a minimum of a loss function. Since it influences to what extent newly acquired information overrides old information, it metaphorically represents the speed at which a machine learning model "learns". In the adaptive control literature, the learning rate is commonly referred to as gain. In setting a learning rate, there is a trade-off between the rate of convergence and overshooting. While the descent direction is usually determined from the gradient of the loss function, the learning rate determines how big a step is taken in that direction. A learning rate that is too high will make the learning jump over minima, but one that is too low will either take too long to converge or get stuck in an undesirable local minimum. In order to achieve faster convergence, prevent oscillations and getting stuck in undesirable ...
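The trade-off between slow convergence and overshooting can be seen on the simplest possible loss, f(x) = x^2, whose gradient is 2x and whose minimum is at 0. The sketch below is illustrative only: the loss function, the starting point, the step count, and the three learning rates are assumptions picked to show the three regimes.

```python
def gradient_descent(learning_rate, start=1.0, steps=50):
    """Minimize f(x) = x**2 with a fixed learning rate; return the final x."""
    x = start
    for _ in range(steps):
        grad = 2.0 * x             # derivative of x**2
        x -= learning_rate * grad  # step against the gradient
    return x


for lr in (0.001, 0.1, 1.1):
    print(lr, gradient_descent(lr))
```

Each step multiplies x by (1 - 2 * learning_rate). With 0.001 that factor is close to 1, so x barely moves in 50 steps; with 0.1 it shrinks x quickly toward the minimum; with 1.1 the factor has magnitude greater than 1, so every step overshoots by more than the last and the iterates diverge.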

Bar Examination In The United States

In the United States, those seeking to become lawyers must normally pass a bar examination before they can be admitted to the bar and become licensed to practice law. Bar exams are administered by states or territories, generally by agencies under the authority of state supreme courts. Almost all states use some examination components created by the National Conference of Bar Examiners (NCBE). Forty-one jurisdictions have adopted the Uniform Bar Examination (UBE), which is composed entirely of NCBE-created components. In every U.S. jurisdiction except Wisconsin, all those seeking admission to the bar must pass a state bar examination. In Wisconsin, graduates of the Juris Doctor degree programs of the state's two American Bar Association-accredited law schools (the University of Wisconsin Law School and Marquette University Law School) may be admitted to the Wisconsin bar by diploma privilege without taking a bar examination.

History

The fir ...
