|

Guillotine Cutting

Guillotine cutting is the process of producing small rectangular items of fixed dimensions from a given large rectangular sheet, using only guillotine-cuts. A guillotine-cut (also called an edge-to-edge cut) is a straight bisecting line going from one edge of an existing rectangle to the opposite edge, similarly to a paper guillotine. Guillotine cutting is particularly common in the glass industry. Glass sheets are scored along horizontal and vertical lines, and then broken along these lines to obtain smaller panels. It is also useful for cutting steel plates, cutting of wood sheets to make furniture, and cutting of cardboard into boxes. There are various optimization problems related to guillotine cutting, such as: maximize the total area of the produced pieces, or their total value; minimize the amount of waste (unused parts) of the large sheet, or the total number of sheets. They have been studied in combinatorial geometry, operations research and industrial engineering. A re ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

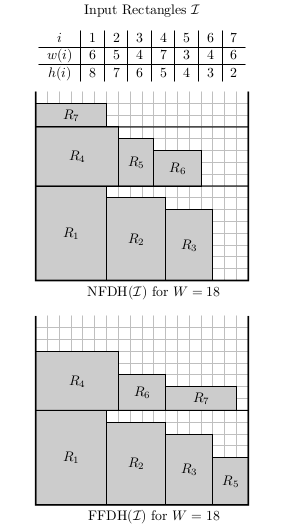

Strip Packing Problem

The strip packing problem is a 2-dimensional geometric minimization problem. Given a set of axis-aligned rectangles and a strip of bounded width and infinite height, determine an overlapping-free packing of the rectangles into the strip minimizing its height. This problem is a cutting and packing problem and is classified as an ''Open Dimension Problem'' according to Wäscher et al. This problem arises in the area of scheduling, where it models jobs, that require a contiguous portion of the memory over a given time period. Another example is the area of industrial manufacturing, where rectangular pieces need to be cut out of a sheet of material (e.g., cloth or paper) that has a fixed width but infinite length, and one wants to minimize the wasted material. This problem was first studied in 1980. It is strongly-NP hard and there exists no polynomial time approximation algorithm with a ratio smaller than 3/2 unless P = NP. However, the best approximation ratio achieved so far (by a ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Hamiltonian Circuit

In the mathematical field of graph theory, a Hamiltonian path (or traceable path) is a path in an undirected or directed graph that visits each vertex exactly once. A Hamiltonian cycle (or Hamiltonian circuit) is a cycle that visits each vertex exactly once. A Hamiltonian path that starts and ends at adjacent vertices can be completed by adding one more edge to form a Hamiltonian cycle, and removing any edge from a Hamiltonian cycle produces a Hamiltonian path. Determining whether such paths and cycles exist in graphs (the Hamiltonian path problem and Hamiltonian cycle problem) are NP-complete. Hamiltonian paths and cycles are named after William Rowan Hamilton who invented the icosian game, now also known as ''Hamilton's puzzle'', which involves finding a Hamiltonian cycle in the edge graph of the dodecahedron. Hamilton solved this problem using the icosian calculus, an algebraic structure based on roots of unity with many similarities to the quaternions (also invented by Hamilt ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Directed Graph

In mathematics, and more specifically in graph theory, a directed graph (or digraph) is a graph that is made up of a set of vertices connected by directed edges, often called arcs. Definition In formal terms, a directed graph is an ordered pair where * ''V'' is a set whose elements are called '' vertices'', ''nodes'', or ''points''; * ''A'' is a set of ordered pairs of vertices, called ''arcs'', ''directed edges'' (sometimes simply ''edges'' with the corresponding set named ''E'' instead of ''A''), ''arrows'', or ''directed lines''. It differs from an ordinary or undirected graph, in that the latter is defined in terms of unordered pairs of vertices, which are usually called ''edges'', ''links'' or ''lines''. The aforementioned definition does not allow a directed graph to have multiple arrows with the same source and target nodes, but some authors consider a broader definition that allows directed graphs to have such multiple arcs (namely, they allow the arc set to be a m ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Best-first Search

Best-first search is a class of search algorithms, which explore a graph by expanding the most promising node chosen according to a specified rule. Judea Pearl described the best-first search as estimating the promise of node ''n'' by a "heuristic evaluation function f(n) which, in general, may depend on the description of ''n'', the description of the goal, the information gathered by the search up to that point, and most importantly, on any extra knowledge about the problem domain.". pp. 94 and 95 (note 3). Some authors have used "best-first search" to refer specifically to a search with a heuristic that attempts to predict how close the end of a path is to a solution (or, goal), so that paths which are judged to be closer to a solution (or, goal) are extended first. This specific type of search is called '' greedy best-first search'' or ''pure heuristic search''. Efficient selection of the current best candidate for extension is typically implemented using a priority queue. Th ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Branch And Bound

Branch and bound (BB, B&B, or BnB) is an algorithm design paradigm for discrete and combinatorial optimization problems, as well as mathematical optimization. A branch-and-bound algorithm consists of a systematic enumeration of candidate solutions by means of state space search: the set of candidate solutions is thought of as forming a rooted tree with the full set at the root. The algorithm explores ''branches'' of this tree, which represent subsets of the solution set. Before enumerating the candidate solutions of a branch, the branch is checked against upper and lower estimated ''bounds'' on the optimal solution, and is discarded if it cannot produce a better solution than the best one found so far by the algorithm. The algorithm depends on efficient estimation of the lower and upper bounds of regions/branches of the search space. If no bounds are available, the algorithm degenerates to an exhaustive search. The method was first proposed by Ailsa Land and Alison Doig whilst ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Heuristic (computer Science)

In mathematical optimization and computer science, heuristic (from Greek εὑρίσκω "I find, discover") is a technique designed for solving a problem more quickly when classic methods are too slow for finding an approximate solution, or when classic methods fail to find any exact solution. This is achieved by trading optimality, completeness, accuracy, or precision for speed. In a way, it can be considered a shortcut. A heuristic function, also simply called a heuristic, is a function that ranks alternatives in search algorithms at each branching step based on available information to decide which branch to follow. For example, it may approximate the exact solution. Definition and motivation The objective of a heuristic is to produce a solution in a reasonable time frame that is good enough for solving the problem at hand. This solution may not be the best of all the solutions to this problem, or it may simply approximate the exact solution. But it is still valuable b ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Tree Search

In computer science, tree traversal (also known as tree search and walking the tree) is a form of graph traversal and refers to the process of visiting (e.g. retrieving, updating, or deleting) each node in a tree data structure, exactly once. Such traversals are classified by the order in which the nodes are visited. The following algorithms are described for a binary tree, but they may be generalized to other trees as well. Types Unlike linked lists, one-dimensional arrays and other linear data structures, which are canonically traversed in linear order, trees may be traversed in multiple ways. They may be traversed in depth-first or breadth-first order. There are three common ways to traverse them in depth-first order: in-order, pre-order and post-order. Beyond these basic traversals, various more complex or hybrid schemes are possible, such as depth-limited searches like iterative deepening depth-first search. The latter, as well as breadth-first search, can also be used t ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Recursion

Recursion (adjective: ''recursive'') occurs when a thing is defined in terms of itself or of its type. Recursion is used in a variety of disciplines ranging from linguistics to logic. The most common application of recursion is in mathematics and computer science, where a function being defined is applied within its own definition. While this apparently defines an infinite number of instances (function values), it is often done in such a way that no infinite loop or infinite chain of references ("crock recursion") can occur. Formal definitions In mathematics and computer science, a class of objects or methods exhibits recursive behavior when it can be defined by two properties: * A simple ''base case'' (or cases) — a terminating scenario that does not use recursion to produce an answer * A ''recursive step'' — a set of rules that reduces all successive cases toward the base case. For example, the following is a recursive definition of a person's ''ancestor''. One's ances ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Dynamic Programming

Dynamic programming is both a mathematical optimization method and a computer programming method. The method was developed by Richard Bellman in the 1950s and has found applications in numerous fields, from aerospace engineering to economics. In both contexts it refers to simplifying a complicated problem by breaking it down into simpler sub-problems in a recursive manner. While some decision problems cannot be taken apart this way, decisions that span several points in time do often break apart recursively. Likewise, in computer science, if a problem can be solved optimally by breaking it into sub-problems and then recursively finding the optimal solutions to the sub-problems, then it is said to have ''optimal substructure''. If sub-problems can be nested recursively inside larger problems, so that dynamic programming methods are applicable, then there is a relation between the value of the larger problem and the values of the sub-problems.Cormen, T. H.; Leiserson, C. E.; Rives ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Approximation Algorithm

In computer science and operations research, approximation algorithms are efficient algorithms that find approximate solutions to optimization problems (in particular NP-hard problems) with provable guarantees on the distance of the returned solution to the optimal one. Approximation algorithms naturally arise in the field of theoretical computer science as a consequence of the widely believed P ≠ NP conjecture. Under this conjecture, a wide class of optimization problems cannot be solved exactly in polynomial time. The field of approximation algorithms, therefore, tries to understand how closely it is possible to approximate optimal solutions to such problems in polynomial time. In an overwhelming majority of the cases, the guarantee of such algorithms is a multiplicative one expressed as an approximation ratio or approximation factor i.e., the optimal solution is always guaranteed to be within a (predetermined) multiplicative factor of the returned solution. However, there are ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Exact Algorithm

In computer science and operations research, exact algorithms are algorithms that always solve an optimization problem to optimality. Unless P = NP, an exact algorithm for an NP-hard optimization problem cannot run in worst-case polynomial time. There has been extensive research on finding exact algorithms whose running time is exponential with a low base. See also * Approximation-preserving reduction * APX is the class of problems with some constant-factor approximation algorithm * Heuristic algorithm In mathematical optimization and computer science, heuristic (from Greek εὑρίσκω "I find, discover") is a technique designed for solving a problem more quickly when classic methods are too slow for finding an approximate solution, or whe ... * PTAS - a type of approximation algorithm that takes the approximation ratio as a parameter References {{reflist Computational complexity theory Optimization algorithms and methods ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |