|

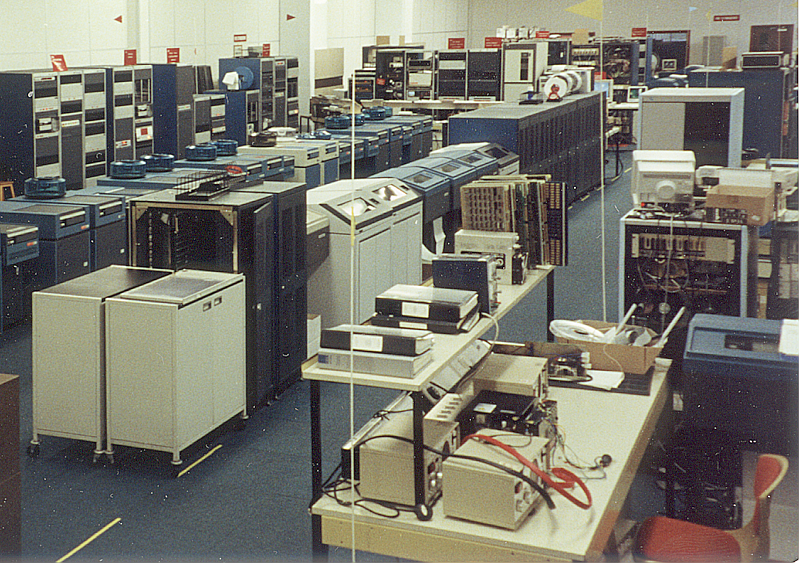

GEC 4000 Series

The GEC 4000 was a series of 16-bit, 16/32-bit minicomputers produced by GEC Computers, GEC Computers Ltd in the United Kingdom during the 1970s, 1980s and early 1990s. History GEC Computers was formed in 1968 as a business unit of the General Electric Company, GEC conglomerate. It inherited from Elliott Brothers (computer company), Elliott Automation the ageing Elliott 900 series, and needed to develop a new range of systems. Three ranges were identified, known internally as Alpha, Beta, and Gamma. Alpha appeared first and became the GEC 2050 8-bit minicomputer. Beta followed and became the GEC 4080. Gamma was never developed, so a few of its enhanced features were consequently pulled back into the 4080. The principal designer of the GEC 4080 was Dr. Michael Melliar-Smith and the principal designer of the 4060 and 4090 was Peter Mackley. The 4000 series systems were developed and manufactured in the UK at GEC Computers' Borehamwood offices in Elstree Way. Development and m ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Minicomputer

A minicomputer, or colloquially mini, is a type of general-purpose computer mostly developed from the mid-1960s, built significantly smaller and sold at a much lower price than mainframe computers . By 21st century-standards however, a mini is an exceptionally large machine. Minicomputers in the traditional technical sense covered here are only small relative to generally even earlier and much bigger machines. The class formed a distinct group with its own software architectures and operating systems. Minis were designed for control, instrumentation, human interaction, and communication switching, as distinct from calculation and record keeping. Many were sold indirectly to original equipment manufacturers (OEMs) for final end-use application. During the two-decade lifetime of the minicomputer class (1965–1985), almost 100 minicomputer vendor companies formed. Only a half-dozen remained by the mid-1980s. When single-chip CPU microprocessors appeared in the 1970s, the defi ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

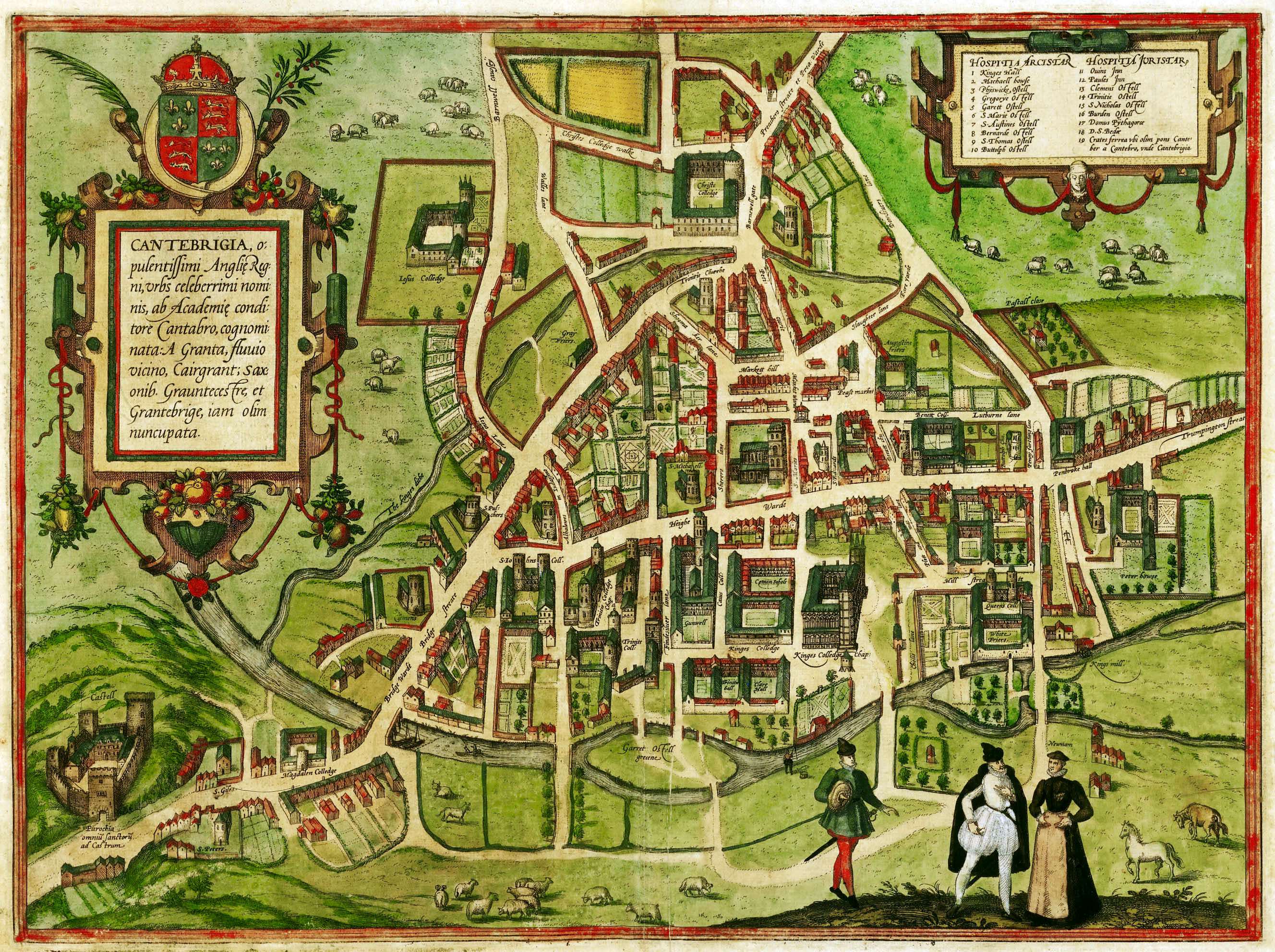

Cambridge

Cambridge ( ) is a List of cities in the United Kingdom, city and non-metropolitan district in the county of Cambridgeshire, England. It is the county town of Cambridgeshire and is located on the River Cam, north of London. As of the 2021 United Kingdom census, the population of the City of Cambridge was 145,700; the population of the wider built-up area (which extends outside the city council area) was 181,137. (2021 census) There is archaeological evidence of settlement in the area as early as the Bronze Age, and Cambridge became an important trading centre during the Roman Britain, Roman and Viking eras. The first Town charter#Municipal charters, town charters were granted in the 12th century, although modern city status was not officially conferred until 1951. The city is well known as the home of the University of Cambridge, which was founded in 1209 and consistently ranks among the best universities in the world. The buildings of the university include King's College Chap ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Instruction Set

In computer science, an instruction set architecture (ISA) is an abstract model that generally defines how software controls the CPU in a computer or a family of computers. A device or program that executes instructions described by that ISA, such as a central processing unit (CPU), is called an ''implementation'' of that ISA. In general, an ISA defines the supported Machine code, instructions, data types, Register (computer), registers, the hardware support for managing Computer memory, main memory, fundamental features (such as the memory consistency, addressing modes, virtual memory), and the input/output model of implementations of the ISA. An ISA specifies the behavior of machine code running on implementations of that ISA in a fashion that does not depend on the characteristics of that implementation, providing binary compatibility between implementations. This enables multiple implementations of an ISA that differ in characteristics such as Computer performance, performa ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Complex Instruction Set Computer

A complex instruction set computer (CISC ) is a computer architecture in which single instructions can execute several low-level operations (such as a load from memory, an arithmetic operation, and a memory store) or are capable of multi-step operations or addressing modes within single instructions. The term was retroactively coined in contrast to reduced instruction set computer (RISC) and has therefore become something of an umbrella term for everything that is not RISC, where the typical differentiating characteristic is that most RISC designs use uniform instruction length for almost all instructions, and employ strictly separate load and store instructions. Examples of CISC architectures include complex mainframe computers to simplistic microcontrollers where memory load and store operations are not separated from arithmetic instructions. Specific instruction set architectures that have been retroactively labeled CISC are System/360 through z/Architecture, the PDP-1 ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Device Drivers

In the context of an operating system, a device driver is a computer program that operates or controls a particular type of device that is attached to a computer or automaton. A driver provides a software interface to hardware devices, enabling operating systems and other computer programs to access hardware functions without needing to know precise details about the hardware being used. A driver communicates with the device through the computer bus or communications subsystem to which the hardware connects. When a calling program invokes a routine in the driver, the driver issues commands to the device (drives it). Once the device sends data back to the driver, the driver may invoke routines in the original calling program. Drivers are hardware dependent and operating-system-specific. They usually provide the interrupt handling required for any necessary asynchronous time-dependent hardware interface. Purpose The main purpose of device drivers is to provide abstraction b ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Interrupts

In digital computers, an interrupt (sometimes referred to as a trap) is a request for the processor to ''interrupt'' currently executing code (when permitted), so that the event can be processed in a timely manner. If the request is accepted, the processor will suspend its current activities, save its state, and execute a function called an ''interrupt handler'' (or an ''interrupt service routine'', ISR) to deal with the event. This interruption is often temporary, allowing the software to resume normal activities after the interrupt handler finishes, although the interrupt could instead indicate a fatal error. Interrupts are commonly used by hardware devices to indicate electronic or physical state changes that require time-sensitive attention. Interrupts are also commonly used to implement computer multitasking and system calls, especially in real-time computing. Systems that use interrupts in these ways are said to be interrupt-driven. History Hardware interrupts were int ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Message Passing

In computer science, message passing is a technique for invoking behavior (i.e., running a program) on a computer. The invoking program sends a message to a process (which may be an actor or object) and relies on that process and its supporting infrastructure to then select and run some appropriate code. Message passing differs from conventional programming where a process, subroutine, or function is directly invoked by name. Message passing is key to some models of concurrency and object-oriented programming. Message passing is ubiquitous in modern computer software. It is used as a way for the objects that make up a program to work with each other and as a means for objects and systems running on different computers (e.g., the Internet) to interact. Message passing may be implemented by various mechanisms, including channels. Overview Message passing is a technique for invoking behavior (i.e., running a program) on a computer. In contrast to the traditional technique of ca ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Semaphore (programming)

In computer science, a semaphore is a variable or abstract data type used to control access to a common resource by multiple threads and avoid critical section problems in a concurrent system such as a multitasking operating system. Semaphores are a type of synchronization primitive. A trivial semaphore is a plain variable that is changed (for example, incremented or decremented, or toggled) depending on programmer-defined conditions. A useful way to think of a semaphore as used in a real-world system is as a record of how many units of a particular resource are available, coupled with operations to adjust that record ''safely'' (i.e., to avoid race conditions) as units are acquired or become free, and, if necessary, wait until a unit of the resource becomes available. Though semaphores are useful for preventing race conditions, they do not guarantee their absence. Semaphores that allow an arbitrary resource count are called counting semaphores, while semaphores that are ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Context Switching

In computing, a context switch is the process of storing the state of a process or thread, so that it can be restored and resume execution at a later point, and then restoring a different, previously saved, state. This allows multiple processes to share a single central processing unit (CPU), and is an essential feature of a multiprogramming or multitasking operating system. In a traditional CPU, each process – a program in execution – uses the various CPU registers to store data and hold the current state of the running process. However, in a multitasking operating system, the operating system switches between processes or threads to allow the execution of multiple processes simultaneously. For every switch, the operating system must save the state of the currently running process, followed by loading the next process state, which will run on the CPU. This sequence of operations that stores the state of the running process and loads the following running process is called a ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Process (computing)

In computing, a process is the Instance (computer science), instance of a computer program that is being executed by one or many thread (computing), threads. There are many different process models, some of which are light weight, but almost all processes (even entire virtual machines) are rooted in an operating system (OS) process which comprises the program code, assigned system resources, physical and logical access permissions, and data structures to initiate, control and coordinate execution activity. Depending on the OS, a process may be made up of multiple threads of execution that execute instructions Concurrency (computer science), concurrently. While a computer program is a passive collection of Instruction set, instructions typically stored in a file on disk, a process is the execution of those instructions after being loaded from the disk into memory. Several processes may be associated with the same program; for example, opening up several instances of the same progra ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |