
Frege System

In proof complexity, a Frege system is a propositional proof system whose proofs are sequences of formulas derived using a finite set of sound and implicationally complete inference rules. Frege systems (more often known as Hilbert systems in general proof theory) are named after Gottlob Frege.

Formal definition. Let K be a finite functionally complete set of Boolean connectives, and consider propositional formulas built from variables p_0, p_1, p_2, ... using K-connectives. A Frege rule is an inference rule of the form r = \frac{B_1, \dots, B_n}{B}, where B_1, ..., B_n, B are formulas. If R is a finite set of Frege rules, then F = (K, R) defines a derivation system in the following way. If X is a set of formulas, and A is a formula, then an F-derivation of A from axioms X is a sequence of formulas A_1, ..., A_m such that A_m = A, and every A_k is a member of X, or it is derived from some of the formulas A_i, ...
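The notion of a derivation from axioms can be sketched in code. The following is a minimal illustration, not a full Frege system: it assumes the connectives are implication and negation, that the only rule is modus ponens, and that formulas are encoded as nested tuples. The function names and the encoding are hypothetical choices made for this example.

```python
# Formulas are nested tuples (an illustrative encoding, not from the source):
# a variable is a string, ("not", A) is negation, ("->", A, B) is implication.

def follows_by_modus_ponens(formula, earlier):
    """True if some A and ("->", A, formula) both occur among the earlier lines."""
    return any(("->", a, formula) in earlier for a in earlier)

def is_derivation(lines, axioms, goal):
    """Check that `lines` derives `goal` from `axioms` using only modus ponens."""
    if not lines or lines[-1] != goal:
        return False
    for k, formula in enumerate(lines):
        earlier = lines[:k]
        if formula in axioms:
            continue  # the line is a member of X
        if follows_by_modus_ponens(formula, earlier):
            continue  # the line is derived from earlier lines
        return False
    return True

X = {"p0", ("->", "p0", "p1"), ("->", "p1", "p2")}
proof = ["p0", ("->", "p0", "p1"), "p1", ("->", "p1", "p2"), "p2"]
print(is_derivation(proof, X, "p2"))  # True
```

Because the check compares whole formulas, it accepts any instance of modus ponens, whatever formulas stand in the places of the premise and conclusion.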

Proof Complexity

In logic and theoretical computer science, and specifically proof theory and computational complexity theory, proof complexity is the field aiming to understand and analyse the computational resources that are required to prove or refute statements. Research in proof complexity is predominantly concerned with proving proof-length lower and upper bounds in various propositional proof systems. For example, among the major challenges of proof complexity is showing that the Frege system, the usual propositional calculus, does not admit polynomial-size proofs of all tautologies. Here the size of the proof is simply the number of symbols in it, and a proof is said to be of polynomial size if it is polynomial in the size of the tautology it proves. Systematic study of proof complexity began with the work of Stephen Cook and Robert Reckhow (1979) who provided the basic definition of a propositional proof system from the perspective of computational complexity. Specifically Cook and Reckhow ...

Frege's Propositional Calculus

In mathematical logic, Frege's propositional calculus was the first axiomatization of propositional calculus. It was invented in 1879 by Gottlob Frege, who also invented predicate calculus, as part of his second-order predicate calculus (although Charles Peirce was the first to use the term "second-order" and developed his own version of the predicate calculus independently of Frege). It uses just two logical operators, implication and negation, and consists of six axioms and one inference rule, modus ponens. Frege's propositional calculus is equivalent to any other classical propositional calculus, such as the "standard PC" with 11 axioms. Frege's PC and standard PC share two common axioms: THEN-1 and THEN-2. Notice that axioms THEN-1 through THEN-3 only make use of (and define) the implication operator, whereas axioms FRG-1 through FRG-3 define the negation operator. The following theorems will aim to find the remaining nine axioms of standard PC within the ...
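The excerpt names the axioms THEN-1 and THEN-2 without stating them. Assuming their usual forms, A → (B → A) and (A → (B → C)) → ((A → B) → (A → C)), a brute-force truth-table check confirms that both are tautologies and that modus ponens preserves truth. The helper names below are hypothetical.

```python
from itertools import product

def implies(a, b):
    """Material implication on Python booleans."""
    return (not a) or b

def is_tautology(f, arity):
    """True if f is true under every assignment to its `arity` arguments."""
    return all(f(*values) for values in product([False, True], repeat=arity))

# Assumed statements of the two shared axioms (not quoted from the excerpt).
def then_1(a, b):
    return implies(a, implies(b, a))

def then_2(a, b, c):
    return implies(implies(a, implies(b, c)),
                   implies(implies(a, b), implies(a, c)))

# Soundness of modus ponens: whenever A and A -> B hold, B holds.
def modus_ponens_sound(a, b):
    return implies(a and implies(a, b), b)

print(is_tautology(then_1, 2), is_tautology(then_2, 3),
      is_tautology(modus_ponens_sound, 2))
```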

Boolean Circuit

In computational complexity theory and circuit complexity, a Boolean circuit is a mathematical model for combinational digital logic circuits. A formal language can be decided by a family of Boolean circuits, one circuit for each possible input length. Boolean circuits are defined in terms of the logic gates they contain. For example, a circuit might contain binary AND and OR gates and unary NOT gates, or be entirely described by binary NAND gates. Each gate corresponds to some Boolean function that takes a fixed number of bits as input and outputs a single bit. Boolean circuits provide a model for many digital components used in computer engineering, including multiplexers, adders, and arithmetic logic units, but they exclude sequential logic. They are an abstraction that omits many aspects relevant to designing real digital logic circuits, such as metastability, fanout, glitches, power consumption, and propagation delay variability.

Formal definition. In giving a forma ...
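As an illustration of a circuit built from a fixed gate set, the sketch below evaluates a circuit given as a list of gates in topological order. The representation (wire names, the `GATES` table, the `evaluate` function) is an assumption made for this example, not a standard format. The sample circuit computes XOR using only binary NAND gates.

```python
# Each gate maps a fixed number of input bits to one output bit.
GATES = {
    "AND": lambda a, b: a & b,
    "OR": lambda a, b: a | b,
    "NOT": lambda a: 1 - a,
    "NAND": lambda a, b: 1 - (a & b),
}

def evaluate(circuit, inputs, output):
    """Evaluate gates in the listed (topological) order and return one wire."""
    values = dict(inputs)
    for wire, gate, args in circuit:
        values[wire] = GATES[gate](*(values[a] for a in args))
    return values[output]

# XOR described entirely by NAND gates: (wire, gate, input wires).
XOR_FROM_NAND = [
    ("g1", "NAND", ("x", "y")),
    ("g2", "NAND", ("x", "g1")),
    ("g3", "NAND", ("y", "g1")),
    ("out", "NAND", ("g2", "g3")),
]

for x in (0, 1):
    for y in (0, 1):
        print(x, y, evaluate(XOR_FROM_NAND, {"x": x, "y": y}, "out"))
```

The circuit has no state and no feedback: every wire is computed once from earlier wires, which is what distinguishes combinational from sequential logic.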

Pigeonhole Principle

In mathematics, the pigeonhole principle states that if n items are put into m containers, with n > m, then at least one container must contain more than one item. For example, if one has three gloves (and none is ambidextrous/reversible), then there must be at least two right-handed gloves, or at least two left-handed gloves, because there are three objects, but only two categories of handedness to put them into. This seemingly obvious statement, a type of counting argument, can be used to demonstrate possibly unexpected results. For example, given that the population of London is greater than the maximum number of hairs that can be present on a human's head, then the pigeonhole principle requires that there must be at least two people in London who have the same number of hairs on their heads. Although the pigeonhole principle appears as early as 1624 in a book attributed to Jean Leurechon, it is commonly called Dirichlet's box principle or Dirichlet's drawer principle after an 1834 t ...
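For small cases the principle can be confirmed by exhaustive search. The sketch below, with hypothetical function names, enumerates every way of placing items into containers and checks that each placement puts two items in the same container. It is a sanity check on tiny instances, not a proof.

```python
from itertools import product

def has_collision(assignment):
    """True if two items share a container (assignment[i] is item i's container)."""
    return len(set(assignment)) < len(assignment)

def pigeonhole_holds(items, containers):
    """True if every placement of `items` items into `containers` containers collides."""
    return all(has_collision(a)
               for a in product(range(containers), repeat=items))

print(pigeonhole_holds(3, 2))  # three gloves, two kinds of handedness
print(pigeonhole_holds(2, 2))  # no guarantee when items do not outnumber containers
```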

Sequent Calculus

In mathematical logic, sequent calculus is a style of formal logical argumentation in which every line of a proof is a conditional tautology (called a sequent by Gerhard Gentzen) instead of an unconditional tautology. Each conditional tautology is inferred from other conditional tautologies on earlier lines in a formal argument according to rules and procedures of inference, giving a better approximation to the natural style of deduction used by mathematicians than to David Hilbert's earlier style of formal logic, in which every line was an unconditional tautology. More subtle distinctions may exist; for example, propositions may implicitly depend upon non-logical axioms. In that case, sequents signify conditional theorems in a first-order language rather than conditional tautologies. Sequent calculus is one of several extant styles of proof calculus for expressing line-by-line logical arguments.

* Hilbert style. Every line is an unconditional tautology (or theorem).
* Gentzen s ...

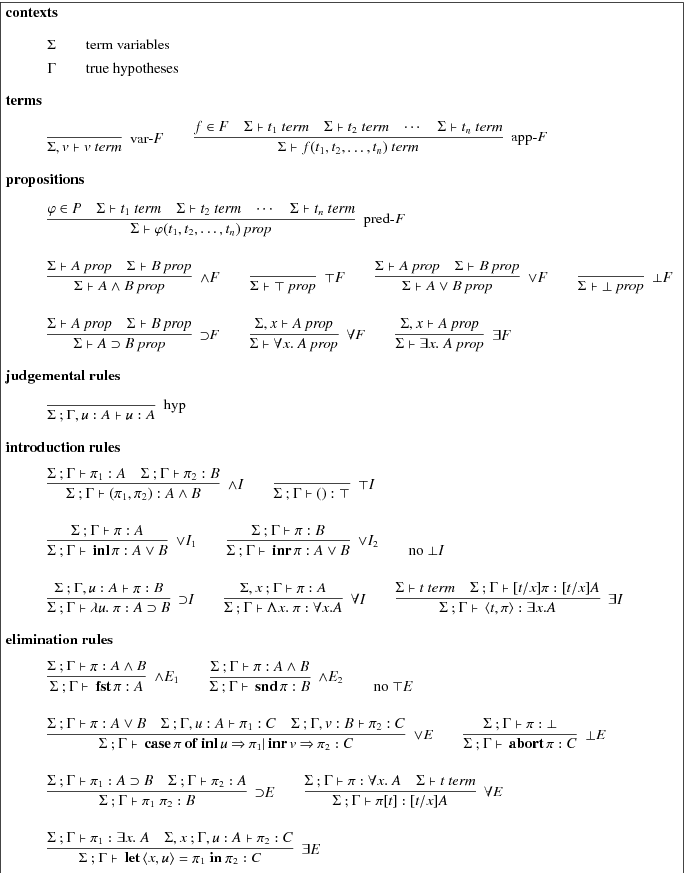

Natural Deduction

In logic and proof theory, natural deduction is a kind of proof calculus in which logical reasoning is expressed by inference rules closely related to the "natural" way of reasoning. This contrasts with Hilbert-style systems, which instead use axioms as much as possible to express the logical laws of deductive reasoning.

Motivation. Natural deduction grew out of a context of dissatisfaction with the axiomatizations of deductive reasoning common to the systems of Hilbert, Frege, and Russell (see, e.g., Hilbert system). Such axiomatizations were most famously used by Russell and Whitehead in their mathematical treatise Principia Mathematica. Spurred on by a series of seminars in Poland in 1926 by Łukasiewicz that advocated a more natural treatment of logic, Jaśkowski made the earliest attempts at defining a more natural deduction, first in 1929 using a diagrammatic notation, and later updating his proposal in a sequence of papers in 1934 and 1935. His proposals led to diffe ...

Clause (logic)

In logic, a clause is a propositional formula formed from a finite collection of literals (atoms or their negations) and logical connectives. A clause is true either whenever at least one of the literals that form it is true (a disjunctive clause, the most common use of the term), or when all of the literals that form it are true (a conjunctive clause, a less common use of the term). That is, it is a finite disjunction or conjunction of literals, depending on the context. Clauses are usually written as follows, where the symbols l_i are literals:

l_1 \vee \cdots \vee l_n

Empty clauses. A clause can be empty (defined from an empty set of literals). The empty clause is denoted by various symbols such as \empty, \bot, or \Box. The truth evaluation of an empty disjunctive clause is always false. This is justified by considering that false is the neutral element of the monoid (\{false, true\}, \vee). The truth evaluation of an empty conjunctive clause is always true. This is related to the concept ...
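The two readings of a clause, and the values of the empty clause under each, can be sketched as follows. The literal encoding as a (name, polarity) pair and the function names are assumptions made for this example. Python's `any` and `all` return the neutral elements of disjunction and conjunction on empty input, which matches the convention described above.

```python
def literal_value(literal, assignment):
    """A literal is (name, positive): an atom if positive, else its negation."""
    name, positive = literal
    return assignment[name] if positive else not assignment[name]

def eval_disjunctive(clause, assignment):
    """True when at least one literal is true; the empty clause is false."""
    return any(literal_value(l, assignment) for l in clause)

def eval_conjunctive(clause, assignment):
    """True when all literals are true; the empty clause is true."""
    return all(literal_value(l, assignment) for l in clause)

assignment = {"p": True, "q": False}
clause = [("p", False), ("q", False)]        # not p, not q
print(eval_disjunctive(clause, assignment))  # True: "not q" holds
print(eval_conjunctive(clause, assignment))  # False: "not p" fails
print(eval_disjunctive([], assignment), eval_conjunctive([], assignment))  # False True
```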

Resolution (logic)

In mathematical logic and automated theorem proving, resolution is a rule of inference leading to a refutation-complete theorem-proving technique for sentences in propositional logic and first-order logic. For propositional logic, systematically applying the resolution rule acts as a decision procedure for formula unsatisfiability, solving the (complement of the) Boolean satisfiability problem. For first-order logic, resolution can be used as the basis for a semi-algorithm for the unsatisfiability problem of first-order logic, providing a more practical method than one following from Gödel's completeness theorem. The resolution rule can be traced back to Davis and Putnam (1960); however, their algorithm required trying all ground instances of the given formula. This source of combinatorial explosion was eliminated in 1965 by John Alan Robinson's syntactical unification algorithm, which allowed one to instantiate the formula during the proof "on demand" just as far as needed to ...
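For the propositional case, the decision procedure can be sketched as naive saturation: keep resolving pairs of clauses until the empty clause appears or nothing new is produced. This is an illustrative sketch, not an efficient prover. It assumes clauses are sets of nonzero integers, where a negative integer denotes the negation of the corresponding variable; the function names are hypothetical.

```python
from itertools import combinations

def resolvents(c1, c2):
    """All clauses obtained by resolving c1 and c2 on one complementary literal."""
    return {(c1 - {lit}) | (c2 - {-lit}) for lit in c1 if -lit in c2}

def is_unsatisfiable(clauses):
    """Saturate under resolution; unsatisfiable iff the empty clause is derived."""
    known = {frozenset(c) for c in clauses}
    if frozenset() in known:
        return True
    while True:
        new = set()
        for c1, c2 in combinations(known, 2):
            for r in resolvents(c1, c2):
                if not r:
                    return True  # derived the empty clause
                new.add(r)
        if new <= known:
            return False  # saturated without a contradiction
        known |= new

# (p or q), (not p or q), (not q) is unsatisfiable.
print(is_unsatisfiable([[1, 2], [-1, 2], [-2]]))  # True
# (p or q), (not p) is satisfied by q = true, p = false.
print(is_unsatisfiable([[1, 2], [-1]]))           # False
```

The loop terminates because only finitely many clauses exist over a finite set of literals, though the number of derived clauses can grow exponentially.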

Propositional Calculus

Propositional calculus is a branch of logic. It is also called propositional logic, statement logic, sentential calculus, sentential logic, or sometimes zeroth-order logic. It deals with propositions (which can be true or false) and relations between propositions, including the construction of arguments based on them. Compound propositions are formed by connecting propositions by logical connectives. Propositions that contain no logical connectives are called atomic propositions. Unlike first-order logic, propositional logic does not deal with non-logical objects, predicates about them, or quantifiers. However, all the machinery of propositional logic is included in first-order logic and higher-order logics. In this sense, propositional logic is the foundation of first-order logic and higher-order logic.

Explanation. Logical connectives are found in natural languages. In English for example, some examples are "and" (conjunction), "or" (lo ...

Substitution (logic)

Substitution is a fundamental concept in logic. A substitution is a syntactic transformation on formal expressions. To apply a substitution to an expression means to consistently replace its variable, or placeholder, symbols by other expressions. The resulting expression is called a substitution instance, or instance for short, of the original expression.

Propositional logic: definition. Where ψ and φ represent formulas of propositional logic, ψ is a substitution instance of φ if and only if ψ may be obtained from φ by substituting formulas for symbols in φ, replacing each occurrence of the same symbol by an occurrence of the same formula. For example, (R → S) & (T → S) is a substitution instance of P & Q, and (A ↔ A) ↔ (A ↔ A) is a substitution instance of (A ↔ A). In some deduction systems for propositional logic, a new expression (a proposition) may be entered on a line of a derivation if it is a substitution instanc ...
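The two examples in the definition can be reproduced with a short recursive function. This is a sketch under an assumed encoding: formulas are nested tuples whose first element is the connective, and symbols are strings. The name `substitute` is hypothetical.

```python
def substitute(formula, mapping):
    """Replace every occurrence of each symbol by its assigned formula, simultaneously."""
    if isinstance(formula, str):
        return mapping.get(formula, formula)  # unmapped symbols stay as they are
    op, *args = formula
    return (op, *(substitute(a, mapping) for a in args))

# (R -> S) & (T -> S) is a substitution instance of P & Q.
instance_1 = substitute(("&", "P", "Q"),
                        {"P": ("->", "R", "S"), "Q": ("->", "T", "S")})
print(instance_1)

# (A <-> A) <-> (A <-> A) is a substitution instance of (A <-> A).
instance_2 = substitute(("<->", "A", "A"), {"A": ("<->", "A", "A")})
print(instance_2)
```

The replacement is simultaneous: the formula inserted for a symbol is not itself searched again, which is why substituting (A ↔ A) for A terminates.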

Propositional Proof System

In propositional calculus and proof complexity a propositional proof system (pps), also called a Cook–Reckhow propositional proof system, is a system for proving classical propositional tautologies.

Mathematical definition. Formally a pps is a polynomial-time function P whose range is the set of all propositional tautologies (denoted TAUT). If A is a formula, then any x such that P(x) = A is called a P-proof of A. The condition defining pps can be broken up as follows:

* Completeness: every propositional tautology has a P-proof,
* Soundness: if a propositional formula has a P-proof then it is a tautology,
* Efficiency: P runs in polynomial time.

In general, a proof system for a language L is a polynomial-time function whose range is L. Thus, a propositional proof system is a proof system for TAUT. Sometimes the following alternative definition is considered: a pps is given as a proof-verification algorithm P(A, x ...
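One simple system that fits this definition is the truth-table system, sketched here as an illustration. A proof of A is the pair (A, padding), where the padding has length 2^n for the n variables of A. The padding makes the exhaustive check run in time polynomial in the length of the proof, even though it is exponential in the length of A. The tuple encoding of formulas and all names below are assumptions made for this example.

```python
from itertools import product

def variables(f):
    """Set of variable names in a formula given as nested tuples."""
    if isinstance(f, str):
        return {f}
    return set().union(*(variables(a) for a in f[1:]))

def evaluate(f, v):
    """Truth value of formula f under assignment v."""
    if isinstance(f, str):
        return v[f]
    op, *args = f
    vals = [evaluate(a, v) for a in args]
    if op == "not":
        return not vals[0]
    if op == "or":
        return vals[0] or vals[1]
    if op == "->":
        return (not vals[0]) or vals[1]
    raise ValueError(f"unknown connective: {op}")

TRIVIAL = ("or", "p", ("not", "p"))  # fixed tautology output for invalid proofs

def P(x):
    """Truth-table proof system: map a proof x to the tautology it proves."""
    formula, padding = x
    names = sorted(variables(formula))
    if len(padding) != 2 ** len(names):
        return TRIVIAL  # malformed proof
    for values in product([False, True], repeat=len(names)):
        if not evaluate(formula, dict(zip(names, values))):
            return TRIVIAL  # formula is not a tautology
    return formula

peirce = ("->", ("->", ("->", "a", "b"), "a"), "a")
print(P((peirce, "0" * 4)) == peirce)
```

Every output of `P` is a tautology (soundness), and every tautology A is the output on the proof (A, padding) (completeness). The proofs are always exponentially long in the number of variables, which is why this system is of little use for proof-length upper bounds.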

Functional Completeness

In logic, a functionally complete set of logical connectives or Boolean operators is one which can be used to express all possible truth tables by combining members of the set into a Boolean expression. A well-known complete set of connectives is { AND, NOT }. Each of the singleton sets { NAND } and { NOR } is functionally complete. A gate or set of gates which is functionally complete can also be called a universal gate / gates. A functionally complete set of gates may utilise or generate 'garbage bits' as part of its computation which are either not part of the input or not part of the output to the system. In a context of propositional logic, functionally complete sets of connectives are also called (expressively) adequate. From the point of view of digital electronics, functional completeness means that ...
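The completeness of NAND on its own can be illustrated by building NOT, AND and OR from it and comparing truth tables. The sketch relies on the standard fact, assumed here, that NOT together with AND already expresses every truth table. The function names are hypothetical.

```python
from itertools import product

def nand(a, b):
    return not (a and b)

# NOT, AND and OR expressed using NAND only.
def not_(a):
    return nand(a, a)

def and_(a, b):
    return nand(nand(a, b), nand(a, b))

def or_(a, b):
    return nand(nand(a, a), nand(b, b))

# Compare each construction with Python's built-in operators on all inputs.
for a, b in product([False, True], repeat=2):
    assert not_(a) == (not a)
    assert and_(a, b) == (a and b)
    assert or_(a, b) == (a or b)
print("NAND constructions match NOT, AND and OR on all inputs")
```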