Feature Structure

In phrase structure grammars, such as generalised phrase structure grammar, head-driven phrase structure grammar and lexical functional grammar, a feature structure is essentially a set of attribute–value pairs. For example, the attribute named *number* might have the value *singular*. The value of an attribute may be either atomic, e.g. the symbol *singular*, or complex (most commonly a feature structure, but also a list or a set). A feature structure can be represented as a directed acyclic graph (DAG), with the nodes corresponding to the variable values and the paths to the variable names. Operations defined on feature structures, e.g. unification, are used extensively in phrase structure grammars. In most theories (e.g. HPSG), operations are, strictly speaking, defined over equations describing feature structures and not over feature structures themselves, though feature structures are usually used in informal exposition. Often, feature structures are written like this: ...
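The attribute–value view and the unification operation can be illustrated with a minimal Python sketch. It assumes a simplified encoding that is not taken from any particular grammar formalism: a feature structure is a nested `dict`, atomic values are strings, and structure sharing (reentrancy), lists and sets are ignored. The name `unify_features` is hypothetical.

```python
def unify_features(a, b):
    """Return the unification of two feature structures, or None on conflict.

    Assumption of this sketch: atomic values are strings and complex values
    are dicts mapping attribute names to values.
    """
    # If either side is atomic, they unify only when they are identical.
    if isinstance(a, str) or isinstance(b, str):
        return a if a == b else None
    result = dict(a)  # shallow copy, so neither input is modified
    for attr, b_value in b.items():
        if attr in result:
            merged = unify_features(result[attr], b_value)
            if merged is None:
                return None  # conflicting values under the same attribute
            result[attr] = merged
        else:
            result[attr] = b_value
    return result


noun = {"agreement": {"number": "singular"}}
verb = {"agreement": {"person": "third"}}
print(unify_features(noun, verb))
print(unify_features({"number": "singular"}, {"number": "plural"}))
```

Compatible structures are merged attribute by attribute, while a clash between two different atomic values makes unification fail, which the sketch signals by returning `None`.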

Phrase Structure Grammar

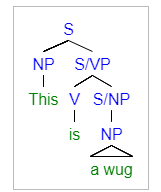

The term phrase structure grammar was originally introduced by Noam Chomsky as the term for grammar studied previously by Emil Post and Axel Thue (Post canonical systems). Some authors, however, reserve the term for more restricted grammars in the Chomsky hierarchy: context-sensitive grammars or context-free grammars. In a broader sense, phrase structure grammars are also known as *constituency grammars*. The defining character of phrase structure grammars is thus their adherence to the constituency relation, as opposed to the dependency relation of dependency grammars.

History: In 1956, Chomsky wrote, "A phrase-structure grammar is defined by a finite vocabulary (alphabet) Vp, and a finite set Σ of initial strings in Vp, and a finite set F of rules of the form: X → Y, where X and Y are strings in Vp."

Constituency relation: In linguistics, phrase structure grammars are all those grammars that are based on the constituency relation, as opposed to the dependency relation ...
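Rules of the form X → Y over strings of vocabulary symbols can be sketched as a simple string-rewriting step. The following Python fragment is only an illustration: the encoding of strings as tuples of symbols, the toy rules, and the function name `rewrite_once` are assumptions made here, not part of any cited definition.

```python
def rewrite_once(string, rules):
    """Return every string obtainable by applying a single rule X -> Y once.

    Assumption of this sketch: strings are tuples of symbols and each rule
    is a pair (X, Y) of non-empty tuples.
    """
    results = []
    for lhs, rhs in rules:
        n = len(lhs)
        for i in range(len(string) - n + 1):
            if string[i:i + n] == lhs:
                # Replace this occurrence of X by Y.
                results.append(string[:i] + rhs + string[i + n:])
    return results


# Hypothetical toy rule set with the single initial string ("S",).
rules = [
    (("S",), ("NP", "VP")),
    (("NP",), ("the", "dog")),
    (("VP",), ("runs",)),
]
print(rewrite_once(("S",), rules))
print(rewrite_once(("NP", "VP"), rules))
```

Repeatedly applying `rewrite_once`, starting from an initial string, enumerates the derivations licensed by the rule set.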

Generalised Phrase Structure Grammar

Generalized phrase structure grammar (GPSG) is a framework for describing the syntax and semantics of natural languages. It is a type of constraint-based phrase structure grammar. Constraint-based grammars are based around defining certain syntactic processes as ungrammatical for a given language and assuming everything not thus dismissed is grammatical within that language. Phrase structure grammars base their framework on constituency relationships, seeing the words in a sentence as ranked, with some words dominating the others. For example, in the sentence "The dog runs", "runs" is seen as dominating "dog" since it is the main focus of the sentence. This view stands in contrast to dependency grammars, which base their assumed structure on the relationship between a single word in a sentence (the sentence head) and its dependents.

Origins: GPSG was initially developed in the late 1970s by Gerald Gazdar. Other contrib ...

Head-driven Phrase Structure Grammar

Head-driven phrase structure grammar (HPSG) is a highly lexicalized, constraint-based grammar developed by Carl Pollard and Ivan Sag. It is a type of phrase structure grammar, as opposed to a dependency grammar, and it is the immediate successor to generalized phrase structure grammar. HPSG draws from other fields such as computer science (data type theory and knowledge representation) and uses Ferdinand de Saussure's notion of the sign. It uses a uniform formalism and is organized in a modular way, which makes it attractive for natural language processing. An HPSG includes principles and grammar rules and lexicon entries which are normally not considered to belong to a grammar. The formalism is based on lexicalism. This means that the lexicon is more than just a list of entries; it is in itself richly structured. Individual entries are marked with types. Types form a hierarchy. Early versions of the grammar were very lexicalized with few grammatica ...

Lexical Functional Grammar

Lexical functional grammar (LFG) is a constraint-based grammar framework in theoretical linguistics. It posits several parallel levels of syntactic structure, including a phrase structure grammar representation of word order and constituency, and a representation of grammatical functions such as subject and object, similar to dependency grammar. The development of the theory was initiated by Joan Bresnan and Ronald Kaplan in the 1970s, in reaction to the theory of transformational grammar which was current in the late 1970s. It mainly focuses on syntax, including its relation with morphology and semantics. There has been little LFG work on phonology (although ideas from optimality theory have recently been popular in LFG research). Some recent work combines LFG with Distributed Morphology in Lexical-Realizational Functional Grammar. [Ash Asudeh, Paul B. Melchin & Daniel Siddiqi (2021). *Constraints all the way down: DM in a representational model of grammar*. In *WCCFL 39 Proc ...

Lisp Atom

Lisp (historically LISP, an abbreviation of "list processing") is a family of programming languages with a long history and a distinctive, fully parenthesized prefix notation. Originally specified in the late 1950s, it is the second-oldest high-level programming language still in common use, after Fortran. Lisp has changed since its early days, and many dialects have existed over its history. Today, the best-known general-purpose Lisp dialects are Common Lisp, Scheme, Racket, and Clojure. Lisp was originally created as a practical mathematical notation for computer programs, influenced by (though not originally derived from) the notation of Alonzo Church's lambda calculus. It quickly became a favored programming language for artificial intelligence (AI) research. As one of the earliest programming languages, Lisp pioneered many ideas in computer science, including tree data structures, automatic storage management, dynamic typing, conditionals, higher-order functions, recur ...

Directed Acyclic Graph

In mathematics, particularly graph theory, and computer science, a directed acyclic graph (DAG) is a directed graph with no directed cycles. That is, it consists of vertices and edges (also called *arcs*), with each edge directed from one vertex to another, such that following those directions will never form a closed loop. A directed graph is a DAG if and only if it can be topologically ordered, by arranging the vertices as a linear ordering that is consistent with all edge directions. DAGs have numerous scientific and computational applications, ranging from biology (evolution, family trees, epidemiology) to information science (citation networks) to computation (scheduling). Directed acyclic graphs are also called acyclic directed graphs or acyclic digraphs.

Definitions: A graph is formed by vertices and by edges connecting pairs of vertices, where the vertices can be any kind of object that is connected in pairs by edges. In the case of a directed graph, each edg ...
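The equivalence between being acyclic and admitting a topological ordering can be made concrete with a short Python sketch. It uses Kahn's algorithm, chosen here purely for illustration; the function name `topological_order` and the edge-list encoding are assumptions of this example.

```python
from collections import deque


def topological_order(vertices, edges):
    """Return a topological order of the graph, or None if it has a directed cycle.

    Assumption of this sketch: `edges` is an iterable of (source, target)
    pairs whose endpoints all appear in `vertices`.
    """
    successors = {v: [] for v in vertices}
    indegree = {v: 0 for v in vertices}
    for src, dst in edges:
        successors[src].append(dst)
        indegree[dst] += 1

    # Vertices with no incoming edges can be placed first.
    ready = deque(v for v in vertices if indegree[v] == 0)
    order = []
    while ready:
        v = ready.popleft()
        order.append(v)
        for w in successors[v]:
            indegree[w] -= 1
            if indegree[w] == 0:
                ready.append(w)

    # If some vertex was never placed, it lies on or behind a directed cycle.
    return order if len(order) == len(vertices) else None


print(topological_order("abcd", [("a", "b"), ("a", "c"), ("b", "d"), ("c", "d")]))
print(topological_order("ab", [("a", "b"), ("b", "a")]))
```

The first call succeeds because the graph is a DAG; the second returns `None` because the two vertices form a directed cycle.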

Unification (computer Science)

In logic and computer science, specifically automated reasoning, unification is an algorithmic process of solving equations between symbolic expressions, each of the form *Left-hand side = Right-hand side*. For example, using *x*, *y*, *z* as variables, and taking *f* to be an uninterpreted function, the singleton equation set { *f*(1, *y*) = *f*(*x*, 2) } is a syntactic first-order unification problem that has the substitution { *x* ↦ 1, *y* ↦ 2 } as its only solution. Conventions differ on what values variables may assume and which expressions are considered equivalent. In first-order syntactic unification, variables range over first-order terms and equivalence is syntactic. This version of unification has a unique "best" answer and is used in logic programming and programming language type system implementation, especially in Hindley–Milner based type inference algorithms. In higher-order unification, possibly restricted to higher-order pattern unification, terms may ...
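A minimal Python sketch of first-order syntactic unification follows. The term encoding is an assumption made for this example only: variables are strings beginning with `?`, compound terms are tuples of the form `(functor, arg1, ...)`, and anything else is a constant. The helper names (`is_var`, `walk`, `occurs`, `unify_terms`) are hypothetical.

```python
def is_var(term):
    # Assumption of this sketch: variables are strings that start with "?".
    return isinstance(term, str) and term.startswith("?")


def walk(term, subst):
    """Follow variable bindings until an unbound variable or a non-variable."""
    while is_var(term) and term in subst:
        term = subst[term]
    return term


def occurs(var, term, subst):
    """Occurs check: does `var` appear inside `term` under `subst`?"""
    term = walk(term, subst)
    if term == var:
        return True
    if isinstance(term, tuple):
        return any(occurs(var, arg, subst) for arg in term[1:])
    return False


def unify_terms(s, t, subst=None):
    """Return a substitution (dict) unifying s and t, or None if none exists."""
    subst = {} if subst is None else subst
    s, t = walk(s, subst), walk(t, subst)
    if s == t:
        return subst
    if is_var(s):
        return None if occurs(s, t, subst) else {**subst, s: t}
    if is_var(t):
        return None if occurs(t, s, subst) else {**subst, t: s}
    if (isinstance(s, tuple) and isinstance(t, tuple)
            and s[0] == t[0] and len(s) == len(t)):
        for a, b in zip(s[1:], t[1:]):
            subst = unify_terms(a, b, subst)
            if subst is None:
                return None
        return subst
    return None  # clash between different constants or functors


# Unify f(1, y) with f(x, 2).
print(unify_terms(("f", 1, "?y"), ("f", "?x", 2)))
```

The returned dictionary may bind a variable to a term that itself contains bound variables, so a caller that needs fully resolved terms would apply `walk` recursively. The occurs check rejects problems such as unifying `?x` with `f(?x)`.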

HPSG

Head-driven phrase structure grammar (HPSG) is a highly lexicalized, constraint-based grammar developed by Carl Pollard and Ivan Sag. It is a type of phrase structure grammar, as opposed to a dependency grammar, and it is the immediate successor to generalized phrase structure grammar. HPSG draws from other fields such as computer science (data type theory and knowledge representation) and uses Ferdinand de Saussure's notion of the sign. It uses a uniform formalism and is organized in a modular way, which makes it attractive for natural language processing. An HPSG includes principles and grammar rules and lexicon entries which are normally not considered to belong to a grammar. The formalism is based on lexicalism. This means that the lexicon is more than just a list of entries; it is in itself richly structured. Individual entries are marked with types. Types form a hierarchy. Early versions of the grammar were very lexicalized with few grammatica ...

Matrix (mathematics)

In mathematics, a matrix (plural: matrices) is a rectangular array or table of numbers, symbols, or expressions, with elements or entries arranged in rows and columns, which is used to represent a mathematical object or property of such an object. For example, $\begin{bmatrix} 1 & 9 & -13 \\ 20 & 5 & -6 \end{bmatrix}$ is a matrix with two rows and three columns. This is often referred to as a "two-by-three matrix", a "$2 \times 3$ matrix", or a matrix of dimension $2 \times 3$. Matrices are commonly used in linear algebra, where they represent linear maps. In geometry, matrices are widely used for specifying and representing geometric transformations (for example rotations) and coordinate changes. In numerical analysis, many computational problems are solved by reducing them to a matrix computation, and this often involves computing with matrices of huge dimensions. Matrices are used in most areas of mathematics and scientific fields, either directly ...

Tree (data Structure)

In computer science, a tree is a widely used abstract data type that represents a hierarchical tree structure with a set of connected nodes. Each node in the tree can be connected to many children (depending on the type of tree), but must be connected to exactly one parent, except for the *root* node, which has no parent (i.e., the root node as the top-most node in the tree hierarchy). These constraints mean there are no cycles or "loops" (no node can be its own ancestor), and also that each child can be treated like the root node of its own subtree, making recursion a useful technique for tree traversal. In contrast to linear data structures, many trees cannot be represented by relationships between neighboring nodes (parent and children nodes of a node under consideration, if they exist) in a single straight line (called edge or link between two adjacent nodes). Binary trees are a commonly used type, which constrain the number of children for each parent to at most two. Whe ...
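Because every child can be treated as the root of its own subtree, traversal is naturally recursive. The following Python sketch shows a pre-order traversal; the `Node` class, its field names, and the sample labels are assumptions of this example.

```python
from dataclasses import dataclass, field
from typing import Iterator, List


@dataclass
class Node:
    # Hypothetical node type: a label plus an ordered list of child subtrees.
    label: str
    children: List["Node"] = field(default_factory=list)


def preorder(node: Node) -> Iterator[str]:
    """Yield labels in pre-order: the node itself, then each child subtree."""
    yield node.label
    for child in node.children:
        # Each child is the root of its own subtree, so recurse on it.
        yield from preorder(child)


tree = Node("S", [
    Node("NP", [Node("the"), Node("dog")]),
    Node("VP", [Node("runs")]),
])
print(list(preorder(tree)))
```

The recursion terminates because a tree has no cycles: every call descends to a strictly smaller subtree until it reaches a node with no children.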

PATR-II

PATR-II is a linguistic formalism used in computational linguistics, developed by Stuart M. Shieber. It uses context-free grammar rules and feature constraints on these rules. See also: Head-driven phrase structure grammar. External links: PC-PATR, an implementation of PATR-II for PC and Unix systems.