Factored Language Model

The factored language model (FLM) is an extension of a conventional language model introduced by Jeff Bilmes and Katrin Kirchhoff in 2003. In an FLM, each word is viewed as a vector of ''k'' factors: $w_i = \{f_i^1, \ldots, f_i^k\}$. An FLM provides the probabilistic model $P(f \mid f_1, \ldots, f_N)$, where the prediction of a factor $f$ is based on $N$ parents $\{f_1, \ldots, f_N\}$. For example, if $w$ represents a word token and $t$ represents a part-of-speech tag for English, the expression $P(w_i \mid w_{i-2}, w_{i-1}, t_{i-1})$ gives a model for predicting the current word token based on a traditional ''n''-gram model as well as the part-of-speech tag of the previous word. A major advantage of factored language models is that they allow users to specify linguistic knowledge such as the relationship between word tokens and parts of speech in English, or morphological information (stems, roots, etc.) in Arabic. Like N-gram ...
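The example model $P(w_i \mid w_{i-2}, w_{i-1}, t_{i-1})$ can be illustrated with a minimal Python sketch. It assumes plain maximum-likelihood estimation from counts, with no smoothing or back-off, so it is not how a practical FLM would be trained. The function names `train_flm` and `flm_prob`, the `<s>` padding symbol, and the toy corpus are hypothetical and chosen only for illustration.

```python
from collections import Counter


def train_flm(tagged_sentences):
    """Collect counts for P(w_i | w_{i-2}, w_{i-1}, t_{i-1}).

    Each sentence is a list of (word, tag) pairs. This is an unsmoothed
    maximum-likelihood sketch, not a full FLM implementation.
    """
    joint = Counter()    # counts of (w_{i-2}, w_{i-1}, t_{i-1}, w_i)
    context = Counter()  # counts of (w_{i-2}, w_{i-1}, t_{i-1})
    for sentence in tagged_sentences:
        # Pad with two start symbols so the first words have parents.
        padded = [("<s>", "<s>"), ("<s>", "<s>")] + list(sentence)
        for i in range(2, len(padded)):
            parents = (padded[i - 2][0], padded[i - 1][0], padded[i - 1][1])
            joint[parents + (padded[i][0],)] += 1
            context[parents] += 1
    return joint, context


def flm_prob(joint, context, word, w_prev2, w_prev1, t_prev1):
    """Relative-frequency estimate; returns 0.0 for unseen parent contexts."""
    parents = (w_prev2, w_prev1, t_prev1)
    if context[parents] == 0:
        return 0.0
    return joint[parents + (word,)] / context[parents]


# Toy corpus (made up for this example).
corpus = [
    [("the", "DET"), ("dog", "NOUN"), ("barks", "VERB")],
    [("the", "DET"), ("dog", "NOUN"), ("sleeps", "VERB")],
]
joint, context = train_flm(corpus)
p = flm_prob(joint, context, "barks", "the", "dog", "NOUN")
print(p)  # 0.5: "barks" follows ("the", "dog", NOUN) in one of two cases
```

Because the parent context here mixes word and tag factors, many contexts will be unseen in realistic data, so an estimator like this one would need smoothing before it could be used in practice.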

Language Model

A language model is a model of the human brain's ability to produce natural language. Language models are useful for a variety of tasks, including speech recognition, machine translation (Andreas, Jacob, Andreas Vlachos, and Stephen Clark (2013), "Semantic parsing as machine translation", Proceedings of the 51st Annual Meeting of the Association for Computational Linguistics (Volume 2: Short Papers)), natural language generation (generating more human-like text), optical character recognition, route optimization, handwriting recognition, grammar induction, and information retrieval. Large language models (LLMs), currently their most advanced form, are predominantly based on transformers trained on larger datasets (frequently using words scraped from the public internet). They have superseded recurrent neural network-based models, which had previously superseded purely statistical models such as the word ''n''-gram language model. History: Noam Chomsky did pioneering work on lan ...

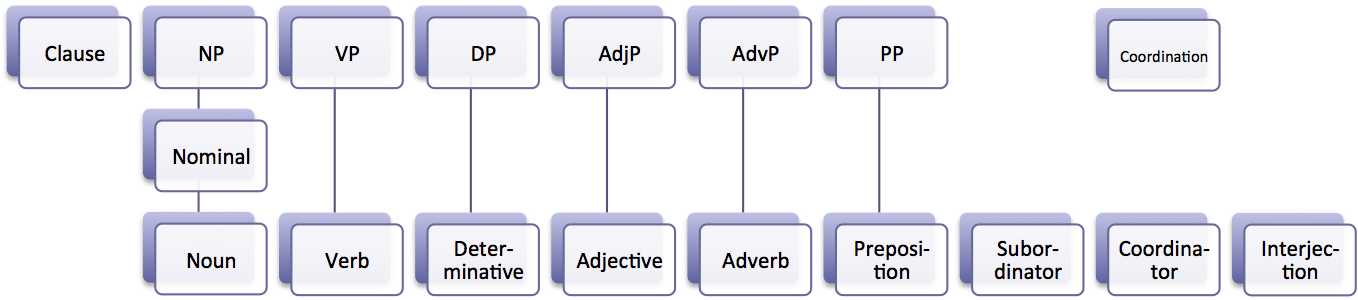

Part Of Speech

In grammar, a part of speech or part-of-speech (abbreviated as POS or PoS, also known as word class or grammatical category) is a category of words (or, more generally, of lexical items) that have similar grammatical properties. Words that are assigned to the same part of speech generally display similar syntactic behavior (they play similar roles within the grammatical structure of sentences), sometimes similar morphological behavior in that they undergo inflection for similar properties, and even similar semantic behavior. Commonly listed English parts of speech are noun, verb, adjective, adverb, pronoun, preposition, conjunction, interjection, numeral, article, and determiner. Other terms than ''part of speech'' (particularly in modern linguistic classifications, which often make more precise distinctions than the traditional scheme does) include word class, lexical class, and lexical category. Some authors restrict the term ''lexical category'' to refer only to a par ...

Ngram

An ''n''-gram is a sequence of ''n'' adjacent symbols in a particular order. The symbols may be ''n'' adjacent letters (including punctuation marks and blanks), syllables, or rarely whole words found in a language dataset; or adjacent phonemes extracted from a speech-recording dataset, or adjacent base pairs extracted from a genome. They are collected from a text corpus or speech corpus. If Latin numerical prefixes are used, then an ''n''-gram of size 1 is called a "unigram", size 2 a "bigram" (or, less commonly, a "digram"), etc. If English cardinal numbers are used instead of the Latin prefixes, then they are called "four-gram", "five-gram", etc. Similarly, terms with Greek numerical prefixes, such as "monomer", "dimer", "trimer", "tetramer", "pentamer", etc., or with English cardinal numbers, such as "one-mer", "two-mer", "three-mer", etc., are used in computational biology for polymers or oligomers of a known size, called ''k''-mers. When the items are words, ''n''-grams may also be ...
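The definition above can be sketched in a few lines of Python: slide a window of width ''n'' over a sequence of symbols and collect each window. The helper name `ngrams` and the sample inputs are hypothetical; the same function works for words, characters, or any other sliceable sequence of symbols.

```python
def ngrams(symbols, n):
    """Return all runs of n adjacent symbols, in order, as tuples."""
    if n < 1:
        raise ValueError("n must be at least 1")
    # A sequence shorter than n yields an empty list.
    return [tuple(symbols[i:i + n]) for i in range(len(symbols) - n + 1)]


# Word bigrams from a tokenized sentence.
bigrams = ngrams("to be or not to be".split(), 2)
print(bigrams)
# [('to', 'be'), ('be', 'or'), ('or', 'not'), ('not', 'to'), ('to', 'be')]

# Character trigrams from a string.
char_trigrams = ngrams("gram", 3)
print(char_trigrams)
# [('g', 'r', 'a'), ('r', 'a', 'm')]
```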

Statistical Natural Language Processing

Natural language processing (NLP) is a subfield of computer science and especially artificial intelligence. It is primarily concerned with providing computers with the ability to process data encoded in natural language and is thus closely related to information retrieval, knowledge representation and computational linguistics, a subfield of linguistics. Major tasks in natural language processing are speech recognition, text classification, natural language understanding, and natural language generation. History: Natural language processing has its roots in the 1950s. Already in 1950, Alan Turing published an article titled "Computing Machinery and Intelligence" which proposed what is now called the Turing test as a criterion of intelligence, though at the time that was not articulated as a problem separate from artificial intelligence. The proposed test includes a task that involves the automated interpretation and generation of natural language. Symbolic NLP (1950s – ea ...