F-test Of Equality Of Variances

In statistics, an ''F''-test of equality of variances is a test of the null hypothesis that two normal populations have the same variance. Notionally, any ''F''-test can be regarded as a comparison of two variances, but the specific case discussed in this article is that of two populations, where the test statistic is the ratio of two sample variances. This situation is important in mathematical statistics because it provides a basic exemplar case in which the ''F''-distribution can be derived. In applied statistics, there is concern that the test is so sensitive to the assumption of normality that it would be inadvisable to use it as a routine test for the equality of variances. In other words, this is a case where "approximate normality" (which in similar contexts would often be justified using the central limit theorem) is not good enough to make the test procedure approximately valid to an acceptable degree. The test: Let ''X' ...
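As a rough illustration of the test statistic described above, the following sketch computes the ratio of two sample variances together with the degrees of freedom of the reference ''F''-distribution. The function name `f_statistic` and the sample data are hypothetical, chosen only for this example; the p-value lookup is deliberately left out because it needs an ''F''-distribution implementation that the Python standard library does not provide.

```python
from statistics import variance


def f_statistic(x, y):
    """Return (F, df_num, df_den) for the ratio of two sample variances.

    Hypothetical helper for illustration; assumes both samples come from
    normal populations, as the F-test of equality of variances requires.
    """
    if len(x) < 2 or len(y) < 2:
        raise ValueError("each sample needs at least two observations")
    # statistics.variance uses the unbiased (n - 1) denominator.
    f = variance(x) / variance(y)
    return f, len(x) - 1, len(y) - 1


# Made-up data: the first sample is the second one scaled by 2,
# so its sample variance is 4 times larger.
sample_a = [2.0, 4.0, 6.0, 8.0]
sample_b = [1.0, 2.0, 3.0, 4.0]
f, df1, df2 = f_statistic(sample_a, sample_b)
print(f, df1, df2)
```

Under the null hypothesis of equal variances, the statistic would be compared against an ''F''-distribution with `df1` and `df2` degrees of freedom.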

Statistical Test

A statistical hypothesis test is a method of statistical inference used to decide whether the data at hand sufficiently support a particular hypothesis. Hypothesis testing allows us to make probabilistic statements about population parameters. History, early use: While hypothesis testing was popularized early in the 20th century, early forms were used in the 1700s. The first use is credited to John Arbuthnot (1710), followed by Pierre-Simon Laplace (1770s), in analyzing the human sex ratio at birth. Modern origins and early controversy: Modern significance testing is largely the product of Karl Pearson (''p''-value, Pearson's chi-squared test), William Sealy Gosset (Student's t-distribution), and Ronald Fisher ("null hypothesis", analysis of variance, "significance test"), while hypothesis testing was developed by Jerzy Neyman and Egon Pearson (son of Karl). Ronald Fisher began his life in statistics as a Bayesian (Zabell 1992), but Fisher soon grew disenchanted with the s ...

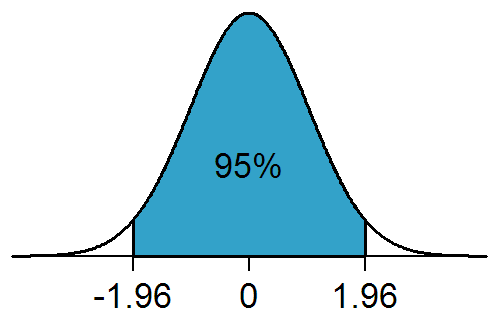

Statistical Significance

In statistical hypothesis testing, a result has statistical significance when it is very unlikely to have occurred given the null hypothesis (that is, by chance alone). More precisely, a study's defined significance level, denoted by $\alpha$, is the probability of the study rejecting the null hypothesis, given that the null hypothesis is true; and the ''p''-value of a result, ''p'', is the probability of obtaining a result at least as extreme, given that the null hypothesis is true. The result is statistically significant, by the standards of the study, when $p \le \alpha$. The significance level for a study is chosen before data collection, and is typically set to 5% or much lower, depending on the field of study. In any experiment or observation that involves drawing a sample from a population, there is always the possibility that an observed effect would have occurred due to sampling error alone. But if the ''p''-value of an observed effect is less than (or equal to) the significanc ...
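The decision rule above (declare significance when the ''p''-value is at most the pre-chosen significance level) can be sketched with an exact one-sided binomial test. The scenario (9 successes in 10 trials under a null success probability of 0.5) and the function names are assumptions made up for this illustration.

```python
from math import comb


def binomial_upper_p_value(successes, trials, p_null=0.5):
    """P(X >= successes) for X ~ Binomial(trials, p_null).

    This is the probability, under the null hypothesis, of a result
    at least as extreme (in the upper direction) as the one observed.
    """
    return sum(
        comb(trials, k) * p_null**k * (1 - p_null) ** (trials - k)
        for k in range(successes, trials + 1)
    )


def is_significant(p_value, alpha=0.05):
    """Apply the rule p <= alpha; alpha must be fixed before seeing the data."""
    return p_value <= alpha


# Hypothetical observation: 9 successes out of 10 trials.
p_value = binomial_upper_p_value(9, 10)
print(p_value, is_significant(p_value), is_significant(p_value, alpha=0.01))
```

Here the ''p''-value is 11/1024 (about 0.0107), so the same observation is significant at the 5% level but not at the 1% level, which is why the level has to be chosen in advance.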

Statistical Ratios

Statistics (from German: ''Statistik'', "description of a state, a country") is the discipline that concerns the collection, organization, analysis, interpretation, and presentation of data. In applying statistics to a scientific, industrial, or social problem, it is conventional to begin with a statistical population or a statistical model to be studied. Populations can be diverse groups of people or objects such as "all people living in a country" or "every atom composing a crystal". Statistics deals with every aspect of data, including the planning of data collection in terms of the design of surveys and experiments (Dodge, Y. (2006) ''The Oxford Dictionary of Statistical Terms'', Oxford University Press). When census data cannot be collected, statisticians collect data by developing specific experiment designs and survey samples. Representative sampling assures that inferences and conclusions can reasonably extend from the sample to the population as a whole. An experim ...

Goldfeld–Quandt Test

In statistics, the Goldfeld–Quandt test checks for homoscedasticity in regression analyses. It does this by dividing a dataset into two parts or groups, and hence the test is sometimes called a two-group test. The Goldfeld–Quandt test is one of two tests proposed in a 1965 paper by Stephen Goldfeld and Richard Quandt. Both a parametric and a nonparametric test are described in the paper, but the term "Goldfeld–Quandt test" is usually associated only with the former. Test: In the context of multiple regression (or univariate regression), the hypothesis to be tested is that the variances of the errors of the regression model are not constant, but instead are monotonically related to a pre-identified explanatory variable. For example, data on income and consumption may be gathered and consumption regressed against income. If the variance increases as levels of income increase, then income may be used as an explanatory variable. Otherwise some third variable (e.g. wealth or las ...

Hartley's Test

In statistics, Hartley's test, also known as the $F_{\max}$ test or Hartley's $F_{\max}$, is used in the analysis of variance to verify that different groups have a similar variance, an assumption needed for other statistical tests. It was developed by H. O. Hartley, who published it in 1950. The test involves computing the ratio of the largest group variance, $\max(s_j^2)$, to the smallest group variance, $\min(s_j^2)$. The resulting ratio, $F_{\max}$, is then compared to a critical value from a table of the sampling distribution of $F_{\max}$. If the computed ratio is less than the critical value, the groups are assumed to have similar or equal variances. Hartley's test assumes that data for each group are normally distributed, and that each group has an equal number of members. This test, although convenient, is quite sensitive to violations of the normality assumption (O'Brien 1981). Alternatives to Hartley's test that are robust to violations of normality are O'Brien's procedure (O'Brien 1981) and the B ...
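The ratio described above is simple enough to sketch directly. The helper name `hartley_f_max` and the data are hypothetical; the critical value still has to come from a published table of the sampling distribution, which this sketch does not attempt to reproduce.

```python
from statistics import variance


def hartley_f_max(groups):
    """Return the ratio of the largest to the smallest group sample variance.

    Illustrative helper: enforces the equal-group-size assumption stated
    for Hartley's test and uses the unbiased (n - 1) sample variance.
    """
    sizes = {len(g) for g in groups}
    if len(sizes) != 1:
        raise ValueError("Hartley's test assumes equal group sizes")
    variances = [variance(g) for g in groups]
    if min(variances) == 0:
        raise ValueError("smallest group variance is zero; ratio undefined")
    return max(variances) / min(variances)


# Made-up groups of five observations each.
groups = [
    [1.0, 2.0, 3.0, 4.0, 5.0],
    [2.0, 4.0, 6.0, 8.0, 10.0],
    [1.0, 3.0, 5.0, 7.0, 9.0],
]
f_max = hartley_f_max(groups)
print(f_max)
```

The computed ratio would then be compared with the tabulated critical value for the given number of groups and group size.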

Review Of Educational Research

The ''Review of Educational Research'' is a bimonthly peer-reviewed review journal published by SAGE Publications on behalf of the American Educational Research Association. It was established in 1931 and covers all aspects of education and educational research. The journal's editor-in-chief is P. Karen Murphy (Pennsylvania State University). Mission statement: The ''Review of Educational Research'' (''RER'') publishes critical, integrative reviews of research literature bearing on education. Such reviews should include conceptualizations, interpretations, and syntheses of literature and scholarly work in a field broadly relevant to education and educational research. ''RER'' encourages the submission of research relevant to education from any discipline, such as reviews of research in psychology, sociology, history, philosophy, political science, economics, computer science, statistics, anthropology, and biology, provided that the review bears on educational issues. ''RER'' does ...

Robust Statistics

Robust statistics are statistics with good performance for data drawn from a wide range of probability distributions, especially for distributions that are not normal. Robust statistical methods have been developed for many common problems, such as estimating location, scale, and regression parameters. One motivation is to produce statistical methods that are not unduly affected by outliers. Another motivation is to provide methods with good performance when there are small departures from a parametric distribution. For example, robust methods work well for mixtures of two normal distributions with different standard deviations; under this model, non-robust methods like a t-test work poorly. Introduction: Robust statistics seek to provide methods that emulate popular statistical methods, but which are not unduly affected by outliers or other small departures from model assumptions. In statistics, classical estimation methods rely heavily on assumpti ...

Hypothesis Test

A statistical hypothesis test is a method of statistical inference used to decide whether the data at hand sufficiently support a particular hypothesis. Hypothesis testing allows us to make probabilistic statements about population parameters. History, early use: While hypothesis testing was popularized early in the 20th century, early forms were used in the 1700s. The first use is credited to John Arbuthnot (1710), followed by Pierre-Simon Laplace (1770s), in analyzing the human sex ratio at birth. Modern origins and early controversy: Modern significance testing is largely the product of Karl Pearson (''p''-value, Pearson's chi-squared test), William Sealy Gosset (Student's t-distribution), and Ronald Fisher ("null hypothesis", analysis of variance, "significance test"), while hypothesis testing was developed by Jerzy Neyman and Egon Pearson (son of Karl). Ronald Fisher began his life in statistics as a Bayesian (Zabell 1992), but Fisher ...

Homoscedasticity

In statistics, a sequence (or a vector) of random variables is homoscedastic if all its random variables have the same finite variance. This is also known as homogeneity of variance. The complementary notion is called heteroscedasticity. The spellings ''homoskedasticity'' and ''heteroskedasticity'' are also frequently used. Assuming a variable is homoscedastic when in reality it is heteroscedastic results in unbiased but inefficient point estimates and in biased estimates of standard errors, and may result in overestimating the goodness of fit as measured by the Pearson coefficient. The existence of heteroscedasticity is a major concern in regression analysis and the analysis of variance, as it invalidates statistical tests of significance that assume that the modelling errors all have the same variance. While the ordinary least squares estimator is stil ...

Type I Error

In statistical hypothesis testing, a type I error is the mistaken rejection of an actually true null hypothesis (also known as a "false positive" finding or conclusion; example: "an innocent person is convicted"), while a type II error is the failure to reject a null hypothesis that is actually false (also known as a "false negative" finding or conclusion; example: "a guilty person is not convicted"). Much of statistical theory revolves around the minimization of one or both of these errors, though the complete elimination of either is a statistical impossibility if the outcome is not determined by a known, observable causal process. By selecting a low threshold (cut-off) value and modifying the alpha (α) level, the quality of the hypothesis test can be increased. The knowledge of type I errors and type II errors is widely used in medical science, biometrics and computer science. Intuitively, type I errors can be thought of as errors of ''commission'', i.e. the researcher unluck ...

Brown–Forsythe Test

The Brown–Forsythe test is a statistical test for the equality of group variances based on performing an analysis of variance (ANOVA) on a transformation of the response variable. When a one-way ANOVA is performed, samples are assumed to have been drawn from distributions with equal variance. If this assumption is not valid, the resulting ''F''-test is invalid. The Brown–Forsythe test statistic is the ''F'' statistic resulting from an ordinary one-way analysis of variance on the absolute deviations of the groups or treatments data from their individual medians. Transformation: The transformed response variable is constructed to measure the spread in each group. Let $z_{ij} = \left\vert y_{ij} - \tilde{y}_j \right\vert$, where $\tilde{y}_j$ is the median of group ''j''. The Brown–Forsythe test statistic is the model ''F'' statistic from a one-way ANOVA on $z_{ij}$: $F = \frac{(N-p)}{(p-1)} \frac{\sum_{j=1}^{p} n_j (\tilde{z}_{\cdot j} - \tilde{z}_{\cdot\cdot})^2}{\sum_{j=1}^{p} \sum_{i=1}^{n_j} (z_{ij} - \tilde{z}_{\cdot j})^2}$, where $\tilde{z}_{\cdot j}$ and $\tilde{z}_{\cdot\cdot}$ are the group means and the overall mean of the $z_{ij}$, ''p'' is the number of groups, $n_j$ is the number of observations in group ''j'', and ''N'' is the tota ...
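The transformation and the one-way ANOVA ''F'' statistic described above can be sketched in a few lines. The function name `brown_forsythe_f` and the data are hypothetical; the sketch only computes the statistic (absolute deviations from each group median, then the usual between-group over within-group ratio) and does not look up a p-value.

```python
from statistics import mean, median


def brown_forsythe_f(groups):
    """One-way ANOVA F statistic on absolute deviations from group medians."""
    p = len(groups)                          # number of groups
    n_total = sum(len(g) for g in groups)    # total number of observations
    if p < 2 or n_total <= p:
        raise ValueError("need at least two groups and more observations than groups")

    # Transformed response: z_ij = |y_ij - median of group j|.
    z = [[abs(y - median(g)) for y in g] for g in groups]

    group_means = [mean(zj) for zj in z]
    grand_mean = sum(sum(zj) for zj in z) / n_total

    # Between-group and within-group sums of squares of the z values.
    between = sum(len(zj) * (m - grand_mean) ** 2 for zj, m in zip(z, group_means))
    within = sum((v - m) ** 2 for zj, m in zip(z, group_means) for v in zj)
    if within == 0:
        raise ValueError("no within-group variation in the deviations")

    return (n_total - p) / (p - 1) * between / within


# Made-up data: the second group is twice as spread out as the first.
f_bf = brown_forsythe_f([[1, 2, 3, 4, 5], [2, 4, 6, 8, 10]])
print(f_bf)
```

Using medians rather than means for the centering is what distinguishes this statistic from an ANOVA on deviations from group means; shifting a group by a constant leaves the statistic unchanged.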

Bartlett's Test

In statistics, Bartlett's test, named after Maurice Stevenson Bartlett, is used to test homoscedasticity, that is, whether multiple samples are from populations with equal variances. Some statistical tests, such as the analysis of variance, assume that variances are equal across groups or samples, which can be verified with Bartlett's test. In a Bartlett test, we construct the null and alternative hypotheses. For this purpose several test procedures have been devised. The test procedure due to M. S. Bartlett is presented here. This test procedure is based on a statistic whose sampling distribution is approximately a chi-squared distribution with (''k'' − 1) degrees of freedom, where ''k'' is the number of random samples, which may vary in size and are each drawn from independent normal distributions. Bartlett's test is sensitive to departures from normality. That is, if the samples come from non-normal distributions, then Bartlett's test may simp ...
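The excerpt is cut off before the statistic itself is written out. The sketch below assumes the commonly cited textbook form of Bartlett's statistic (pooled-variance log term minus the per-group log terms, divided by a small-sample correction factor); that formula, the function name `bartlett_statistic`, and the data are assumptions of this example, not something stated in the text above.

```python
from math import log
from statistics import variance


def bartlett_statistic(groups):
    """Return (statistic, degrees_of_freedom) for Bartlett's test.

    Assumed textbook form: the statistic is referred to a chi-squared
    distribution with k - 1 degrees of freedom, k being the group count.
    """
    k = len(groups)
    sizes = [len(g) for g in groups]
    n_total = sum(sizes)
    if k < 2 or min(sizes) < 2:
        raise ValueError("need at least two groups with two observations each")

    variances = [variance(g) for g in groups]  # unbiased sample variances
    # Pooled variance: weighted by each group's degrees of freedom.
    pooled = sum((n - 1) * s2 for n, s2 in zip(sizes, variances)) / (n_total - k)

    numerator = (n_total - k) * log(pooled) - sum(
        (n - 1) * log(s2) for n, s2 in zip(sizes, variances)
    )
    correction = 1 + (sum(1 / (n - 1) for n in sizes) - 1 / (n_total - k)) / (3 * (k - 1))
    return numerator / correction, k - 1


# Made-up groups; sizes are equal here but are allowed to differ.
stat, dof = bartlett_statistic([[1.0, 2.0, 3.0, 4.0, 5.0], [2.0, 4.0, 6.0, 8.0, 10.0]])
print(stat, dof)
```

When all sample variances are equal the numerator is zero, so larger values of the statistic indicate stronger evidence against equal variances, subject to the normality caveat noted above.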