
Euler's Rotation Theorem

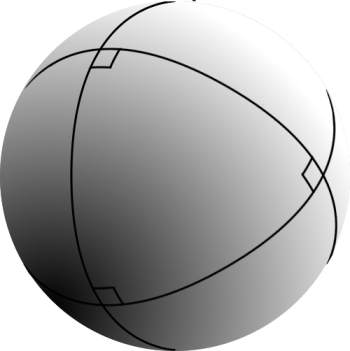

In geometry, Euler's rotation theorem states that, in three-dimensional space, any displacement of a rigid body that leaves one point of the body fixed is equivalent to a single rotation about some axis that runs through the fixed point. It also means that the composition of two rotations is itself a rotation. Therefore the set of rotations has a group structure, known as a *rotation group*. The theorem is named after Leonhard Euler, who proved it in 1775 by means of spherical geometry. The axis of rotation is known as an Euler axis, typically represented by a unit vector $\hat{\mathbf{e}}$. Its product with the rotation angle is known as an axis-angle vector. The extension of the theorem to kinematics yields the concept of an instant axis of rotation, a line of fixed points. In linear algebra terms, the theorem states that, in 3D space, any two Cartesian coordinate systems with a common origin are related by a rotation about some fixed axis. This also means that the product of two rota ...
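
As a rough illustration of the statement above, the following sketch composes two rotations and recovers a single axis and angle for the result. The helper names (`rot_x`, `rot_z`, `axis_angle`) are hypothetical, and the extraction formula used here assumes a rotation angle strictly between 0 and π.

```python
import math

def rot_x(t):
    """Rotation by t radians about the x-axis."""
    c, s = math.cos(t), math.sin(t)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]

def rot_z(t):
    """Rotation by t radians about the z-axis."""
    c, s = math.cos(t), math.sin(t)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]

def apply(R, v):
    return tuple(sum(R[i][k] * v[k] for k in range(3)) for i in range(3))

def axis_angle(R):
    """Unit axis and angle of a proper rotation matrix R.

    Assumption: 0 < angle < pi, so the antisymmetric part of R is nonzero.
    """
    cos_angle = (R[0][0] + R[1][1] + R[2][2] - 1.0) / 2.0
    angle = math.acos(max(-1.0, min(1.0, cos_angle)))
    # R - R^T = 2 sin(angle) [axis]_x, so these differences point along the axis.
    v = (R[2][1] - R[1][2], R[0][2] - R[2][0], R[1][0] - R[0][1])
    norm = math.sqrt(sum(x * x for x in v))
    return tuple(x / norm for x in v), angle

# The composition of two rotations is again a rotation about a single axis.
R = matmul(rot_z(0.7), rot_x(0.4))
axis, angle = axis_angle(R)
print("axis:", axis, "angle:", angle)
print("R applied to axis:", apply(R, axis))  # the axis is left fixed by R
```

The axis is an eigenvector of `R` with eigenvalue 1, which is why applying `R` to it returns the same vector up to rounding.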

Euler AxisAngle

Leonhard Euler (15 April 1707 – 18 September 1783) was a Swiss mathematician, physicist, astronomer, geographer, logician and engineer who founded the studies of graph theory and topology and made pioneering and influential discoveries in many other branches of mathematics such as analytic number theory, complex analysis, and infinitesimal calculus. He introduced much of modern mathematical terminology and notation, including the notion of a mathematical function. He is also known for his work in mechanics, fluid dynamics, optics, astronomy and music theory. Euler is held to be one of the greatest mathematicians in history and the greatest of the 18th century. A statement attributed to Pierre-Simon Laplace expresses Euler's influence on mathematics: "Read Euler, read Euler, he is the master of us all." Carl Friedrich Gauss remarked: "The study of Euler's works will remain the best school for the different fields of mathematics, and nothing else can replace it." Euler is al ...

Spherical Triangle

Spherical trigonometry is the branch of spherical geometry that deals with the metrical relationships between the sides and angles of spherical triangles, traditionally expressed using trigonometric functions. On the sphere, geodesics are great circles. Spherical trigonometry is of great importance for calculations in astronomy, geodesy, and navigation. The origins of spherical trigonometry in Greek mathematics and the major developments in Islamic mathematics are discussed fully in History of trigonometry and Mathematics in medieval Islam. The subject came to fruition in Early Modern times with important developments by John Napier, Delambre and others, and attained an essentially complete form by the end of the nineteenth century with the publication of Todhunter's textbook *Spherical trigonometry for the use of colleges and Schools*. Since then, significant developments have been the application of vector methods, quaternion methods, and the use of numerical methods. ...

Trace (mathematics)

In linear algebra, the trace of a square matrix $\mathbf{A}$, denoted $\operatorname{tr}(\mathbf{A})$, is defined to be the sum of the elements on the main diagonal (from the upper left to the lower right) of $\mathbf{A}$. The trace is only defined for a square matrix ($n \times n$). It can be proved that the trace of a matrix is the sum of its (complex) eigenvalues (counted with multiplicities). It can also be proved that $\operatorname{tr}(\mathbf{AB}) = \operatorname{tr}(\mathbf{BA})$ for any two matrices $\mathbf{A}$ and $\mathbf{B}$ of compatible sizes. This implies that similar matrices have the same trace. As a consequence one can define the trace of a linear operator mapping a finite-dimensional vector space into itself, since all matrices describing such an operator with respect to a basis are similar. The trace is related to the derivative of the determinant (see Jacobi's formula). Definition: The trace of an $n \times n$ square matrix $\mathbf{A}$ is defined as $\operatorname{tr}(\mathbf{A}) = \sum_{i=1}^n a_{ii} = a_{11} + a_{22} + \dots + a_{nn}$, where $a_{ii}$ denotes the entry on the $i$th row and $i$th column of $\mathbf{A}$. The entries of $\mathbf{A}$ can be real numbers or (more generally) complex numbers. The trace is not def ...
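
A minimal sketch of the definition and of the identity $\operatorname{tr}(\mathbf{AB}) = \operatorname{tr}(\mathbf{BA})$, using plain nested lists as matrices. The function names and the sample matrices are illustrative assumptions.

```python
def trace(A):
    """Sum of the main-diagonal entries of a square matrix (list of rows)."""
    if any(len(row) != len(A) for row in A):
        raise ValueError("trace is only defined for square matrices")
    return sum(A[i][i] for i in range(len(A)))

def matmul(A, B):
    """Matrix product of two nested-list matrices with compatible shapes."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)]
            for row in A]

A = [[1, 2], [3, 4]]
B = [[0, 1], [5, -2]]

print(trace(A))                                  # 1 + 4 = 5
print(trace(matmul(A, B)), trace(matmul(B, A)))  # both products have trace 5
```

Note that `matmul(A, B)` and `matmul(B, A)` are different matrices here; only their traces agree.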

Complex Conjugation

In mathematics, the complex conjugate of a complex number is the number with an equal real part and an imaginary part equal in magnitude but opposite in sign. That is, if $a$ and $b$ are real, then the complex conjugate of $a + bi$ is $a - bi$. The complex conjugate of $z$ is often denoted as $\overline{z}$ or $z^*$. In polar form, the conjugate of $r e^{i\varphi}$ is $r e^{-i\varphi}$. This can be shown using Euler's formula. The product of a complex number and its conjugate is a real number: $a^2 + b^2$ (or $r^2$ in polar coordinates). If a root of a univariate polynomial with real coefficients is complex, then its complex conjugate is also a root. Notation: The complex conjugate of a complex number $z$ is written as $\overline{z}$ or $z^*$. The first notation, a vinculum, avoids confusion with the notation for the conjugate transpose of a matrix, which can be thought of as a generalization of the complex conjugate. The second is preferred in physics, where dagger (†) is used for the conjugate t ...
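
The properties listed above can be checked with Python's built-in `complex` type and the standard `cmath` module. The specific numbers and the polynomial $x^2 - 2x + 5$ are arbitrary examples chosen for this sketch.

```python
import cmath

z = 3 + 4j
print(z.conjugate())        # same real part, imaginary part negated
print(z * z.conjugate())    # a^2 + b^2 = 25, with zero imaginary part

# Polar form: the conjugate of r*e^{i*phi} is r*e^{-i*phi}.
r, phi = 2.0, 0.75
w = cmath.rect(r, phi)
print(abs(w.conjugate() - cmath.rect(r, -phi)))  # approximately 0

# Real polynomial p(x) = x^2 - 2x + 5: if 1 + 2i is a root, so is 1 - 2i.
def p(x):
    return x * x - 2 * x + 5

root = 1 + 2j
print(p(root), p(root.conjugate()))
```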

Normal Matrix

In mathematics, a complex square matrix $A$ is normal if it commutes with its conjugate transpose $A^*$: $A^* A = A A^*$. The concept of normal matrices can be extended to normal operators on infinite-dimensional normed spaces and to normal elements in C*-algebras. As in the matrix case, normality means commutativity is preserved, to the extent possible, in the noncommutative setting. This makes normal operators, and normal elements of C*-algebras, more amenable to analysis. The spectral theorem states that a matrix is normal if and only if it is unitarily similar to a diagonal matrix, and therefore any matrix $A$ satisfying the equation $A^* A = A A^*$ is diagonalizable. The converse does not hold because diagonalizable matrices may have non-orthogonal eigenspaces. The left and right singular vectors in the singular value decomposition of a normal matrix $\mathbf{A} = \mathbf{U} \boldsymbol{\Sigma} \mathbf{V}^*$ differ only in complex phase from each other and from the corresponding eigenvectors, since the phase must be factored ...
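
The defining condition can be tested directly. The sketch below, with hypothetical helper names, checks $A^* A = A A^*$ for two sample matrices: one normal, and one that is diagonalizable (distinct eigenvalues 1 and 2) yet not normal, illustrating that the converse fails.

```python
def conj_transpose(A):
    """Conjugate transpose A* of a nested-list matrix."""
    return [[complex(A[i][j]).conjugate() for i in range(len(A))]
            for j in range(len(A[0]))]

def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)]
            for row in A]

def is_normal(A, tol=1e-12):
    """True if A commutes with its conjugate transpose (up to tol)."""
    Ah = conj_transpose(A)
    left, right = matmul(Ah, A), matmul(A, Ah)
    return all(abs(x - y) <= tol
               for row_l, row_r in zip(left, right)
               for x, y in zip(row_l, row_r))

N = [[1, 1j], [1j, 1]]   # normal: N*N = NN* = 2I
C = [[1, 1], [0, 2]]     # upper triangular, eigenvalues 1 and 2, not normal

print(is_normal(N), is_normal(C))
```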

Improper Rotation

In geometry, an improper rotation, also called rotation-reflection, rotoreflection, rotary reflection, or rotoinversion, is an isometry in Euclidean space that is a combination of a rotation about an axis and a reflection in a plane perpendicular to that axis. Reflection and inversion are each a special case of improper rotation. Any improper rotation is an affine transformation and, in cases that keep the coordinate origin fixed, a linear transformation. It is used as a symmetry operation in the context of geometric symmetry, molecular symmetry and crystallography, where an object that is unchanged by a combination of rotation and reflection is said to have *improper rotation symmetry*. Three dimensions: In 3 dimensions, improper rotation is equivalently defined as a combination of rotation about an axis and inversion in a point on the axis. For this reason it is also called a rotoinversion or rotary inversion. The two definitions are equivalent because rotation by an ...
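
To make the special cases concrete, here is a small sketch that builds a rotoreflection as a rotation about the z-axis followed by a reflection in the xy-plane. The choice of axis and the helper names are assumptions for illustration. A rotation angle of 0 gives a pure reflection, and an angle of π gives point inversion.

```python
import math

def rot_z(t):
    """Rotation by t radians about the z-axis."""
    c, s = math.cos(t), math.sin(t)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]

def det3(M):
    (a, b, c), (d, e, f), (g, h, i) = M
    return a * e * i + b * f * g + c * d * h - c * e * g - b * d * i - a * f * h

# Reflection in the plane perpendicular to the rotation axis (the xy-plane).
REFLECT_XY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, -1.0]]

def rotoreflection_z(t):
    """Rotate by t about z, then reflect in the xy-plane."""
    return matmul(REFLECT_XY, rot_z(t))

S = rotoreflection_z(math.pi / 3)
print(det3(S))                    # approximately -1: orientation is reversed
print(rotoreflection_z(math.pi))  # approximately -I: inversion in the origin
```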

Orthogonal Matrix

In linear algebra, an orthogonal matrix, or orthonormal matrix, is a real square matrix whose columns and rows are orthonormal vectors. One way to express this is $Q^\mathrm{T} Q = Q Q^\mathrm{T} = I$, where $Q^\mathrm{T}$ is the transpose of $Q$ and $I$ is the identity matrix. This leads to the equivalent characterization: a matrix $Q$ is orthogonal if its transpose is equal to its inverse: $Q^\mathrm{T} = Q^{-1}$, where $Q^{-1}$ is the inverse of $Q$. An orthogonal matrix $Q$ is necessarily invertible (with inverse $Q^{-1} = Q^\mathrm{T}$), unitary ($Q^{-1} = Q^*$), where $Q^*$ is the Hermitian adjoint (conjugate transpose) of $Q$, and therefore normal ($Q^* Q = Q Q^*$) over the real numbers. The determinant of any orthogonal matrix is either +1 or −1. As a linear transformation, an orthogonal matrix preserves the inner product of vectors, and therefore acts as an isometry of Euclidean space, such as a rotation, reflection or rotoreflection. In other words, it is a unitary transformation. The set of $n \times n$ orthogonal matrices, under multiplication, forms the group $\mathrm{O}(n)$, known as the o ...
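
The three properties named above (transpose equals inverse, determinant ±1, inner products preserved) can be checked numerically. This is a sketch for the 2 × 2 case with illustrative matrices and hypothetical helper names.

```python
import math

def transpose(A):
    return [list(col) for col in zip(*A)]

def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)]
            for row in A]

def matvec(A, x):
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def is_orthogonal(Q, tol=1e-12):
    """True if Q^T Q equals the identity up to tol."""
    P = matmul(transpose(Q), Q)
    n = len(Q)
    return all(abs(P[i][j] - (1.0 if i == j else 0.0)) <= tol
               for i in range(n) for j in range(n))

def det2(A):
    return A[0][0] * A[1][1] - A[0][1] * A[1][0]

t = 0.9
rotation = [[math.cos(t), -math.sin(t)], [math.sin(t), math.cos(t)]]
reflection = [[0.0, 1.0], [1.0, 0.0]]   # swaps the two coordinates

u, v = [1.0, 2.0], [-3.0, 0.5]
print(is_orthogonal(rotation), det2(rotation))      # True, approximately +1
print(is_orthogonal(reflection), det2(reflection))  # True, -1
print(dot(u, v), dot(matvec(rotation, u), matvec(rotation, v)))  # equal up to rounding
```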

Change Of Basis

In mathematics, an ordered basis of a vector space of finite dimension $n$ allows representing uniquely any element of the vector space by a coordinate vector, which is a sequence of $n$ scalars called coordinates. If two different bases are considered, the coordinate vector that represents a vector $v$ on one basis is, in general, different from the coordinate vector that represents $v$ on the other basis. A change of basis consists of converting every assertion expressed in terms of coordinates relative to one basis into an assertion expressed in terms of coordinates relative to the other basis. Such a conversion results from the *change-of-basis formula*, which expresses the coordinates relative to one basis in terms of coordinates relative to the other basis. Using matrices, this formula can be written $\mathbf x_\mathrm{old} = A \,\mathbf x_\mathrm{new}$, where "old" and "new" refer respectively to the first-defined basis and the other basis, $\mathbf x_\mathrm{old}$ and $\mathbf x_\mathrm{new}$ are the ...
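
A worked 2D instance of the formula $\mathbf x_\mathrm{old} = A\,\mathbf x_\mathrm{new}$ may help. The sketch assumes the usual convention that the columns of $A$ are the new basis vectors written in old coordinates; the basis and the helper names are made up for illustration.

```python
def matvec(A, x):
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]

def solve2(A, b):
    """Solve the 2x2 system A x = b by Cramer's rule (A must be invertible)."""
    det = A[0][0] * A[1][1] - A[0][1] * A[1][0]
    return [(b[0] * A[1][1] - A[0][1] * b[1]) / det,
            (A[0][0] * b[1] - b[0] * A[1][0]) / det]

# Hypothetical new basis, expressed in old coordinates: b1 = (1, 1), b2 = (-1, 1).
# Assumed convention: these vectors are the columns of A.
A = [[1, -1],
     [1,  1]]

x_new = [2, 3]              # coordinates relative to the new basis
x_old = matvec(A, x_new)    # 2*b1 + 3*b2 = (-1, 5) in old coordinates
print(x_old)

# Going the other way requires inverting A (here via a linear solve).
print(solve2(A, x_old))     # recovers [2.0, 3.0]
```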

Kernel (matrix)

In mathematics, the kernel of a linear map, also known as the null space or nullspace, is the linear subspace of the domain of the map which is mapped to the zero vector. That is, given a linear map $L : V \to W$ between two vector spaces $V$ and $W$, the kernel of $L$ is the vector space of all elements $\mathbf v$ of $V$ such that $L(\mathbf v) = \mathbf 0$, where $\mathbf 0$ denotes the zero vector in $W$, or more symbolically: $\ker(L) = \left\{\mathbf v \in V \mid L(\mathbf v) = \mathbf 0\right\}$. Properties: The kernel of $L$ is a linear subspace of the domain $V$. In the linear map $L : V \to W$, two elements of $V$ have the same image in $W$ if and only if their difference lies in the kernel of $L$, that is, $L\left(\mathbf v_1\right) = L\left(\mathbf v_2\right) \quad \text{if and only if} \quad L\left(\mathbf v_1-\mathbf v_2\right) = \mathbf 0$. From this, it follows that the image of $L$ is isomorphic to the quotient of $V$ by t ...
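
The "same image if and only if the difference lies in the kernel" property can be seen on a small example. The matrix below is an arbitrary rank-1 map from $\mathbb{R}^3$ to $\mathbb{R}^2$ chosen for this sketch, and the kernel vectors were found by hand.

```python
def matvec(A, x):
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]

def in_kernel(A, v):
    """True if the linear map x -> A x sends v to the zero vector."""
    return all(c == 0 for c in matvec(A, v))

# L : R^3 -> R^2 given by a rank-1 matrix, so its kernel is 2-dimensional.
A = [[1, 2, 3],
     [2, 4, 6]]

k1 = [-2, 1, 0]   # hand-picked kernel vectors
k2 = [-3, 0, 1]
print(in_kernel(A, k1), in_kernel(A, k2))

# Two vectors that differ by a kernel element have the same image.
v1 = [1, 1, 1]
v2 = [a + b for a, b in zip(v1, k1)]
print(matvec(A, v1), matvec(A, v2))

# Conversely, vectors with different images differ by a non-kernel vector.
v3 = [1, 0, 0]
diff = [a - b for a, b in zip(v1, v3)]
print(matvec(A, v3), in_kernel(A, diff))
```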

Characteristic Polynomial

In linear algebra, the characteristic polynomial of a square matrix is a polynomial which is invariant under matrix similarity and has the eigenvalues as roots. It has the determinant and the trace of the matrix among its coefficients. The characteristic polynomial of an endomorphism of a finite-dimensional vector space is the characteristic polynomial of the matrix of that endomorphism over any basis (that is, the characteristic polynomial does not depend on the choice of a basis). The characteristic equation, also known as the determinantal equation, is the equation obtained by equating the characteristic polynomial to zero. In spectral graph theory, the characteristic polynomial of a graph is the characteristic polynomial of its adjacency matrix. Motivation: In linear algebra, eigenvalues and eigenvectors play a fundamental role, since, given a linear transformation, an eigenvector is a vector whose direction is not changed by the transformation, and the corresponding ...
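
For a 2 × 2 matrix the characteristic polynomial is $\lambda^2 - \operatorname{tr}(A)\,\lambda + \det(A)$, which makes the claims above easy to check by hand. The following sketch uses that special case only; the matrices and function names are illustrative, and `similar` is $P^{-1} A P$ for $P = \begin{pmatrix}1 & 1\\ 0 & 1\end{pmatrix}$, computed by hand.

```python
import math

def char_poly_2x2(A):
    """Coefficients (1, -trace, det) of lambda^2 - tr(A)*lambda + det(A)."""
    tr = A[0][0] + A[1][1]
    det = A[0][0] * A[1][1] - A[0][1] * A[1][0]
    return (1, -tr, det)

def real_roots(coeffs):
    """Real roots of a monic quadratic, in increasing order."""
    _, b, c = coeffs
    disc = b * b - 4 * c
    if disc < 0:
        raise ValueError("no real roots")
    r = math.sqrt(disc)
    return ((-b - r) / 2, (-b + r) / 2)

A = [[2, 1], [1, 2]]
similar = [[1, 0], [1, 3]]   # P^{-1} A P for P = [[1, 1], [0, 1]]

print(char_poly_2x2(A))              # (1, -4, 3)
print(char_poly_2x2(similar))        # same coefficients: invariant under similarity
print(real_roots(char_poly_2x2(A)))  # eigenvalues 1 and 3
```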

Determinant

In mathematics, the determinant is a scalar value that is a function of the entries of a square matrix. It characterizes some properties of the matrix and the linear map represented by the matrix. In particular, the determinant is nonzero if and only if the matrix is invertible and the linear map represented by the matrix is an isomorphism. The determinant of a product of matrices is the product of their determinants (the preceding property is a corollary of this one). The determinant of a matrix $A$ is denoted $\det(A)$, $\det A$, or $|A|$. The determinant of a 2 × 2 matrix is $\begin{vmatrix} a & b\\c & d \end{vmatrix}=ad-bc$, and the determinant of a 3 × 3 matrix is $\begin{vmatrix} a & b & c \\ d & e & f \\ g & h & i \end{vmatrix}= aei + bfg + cdh - ceg - bdi - afh$. The determinant of an $n \times n$ matrix can be defined in several equivalent ways. The Leibniz formula expresses the determinant as a sum of signed products of matrix entries such that each summand is the product of $n$ different entries, and the number of these summands is $n!$, the factorial o ...
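
The explicit 3 × 3 expression and the Leibniz formula can be compared directly. The sketch below is a naive $O(n \cdot n!)$ implementation meant only to mirror the formula, not an efficient method; the function names and sample matrices are assumptions.

```python
from itertools import permutations
from math import prod

def sign(p):
    """Sign of a permutation: +1 for an even number of inversions, else -1."""
    inversions = sum(1 for i in range(len(p))
                     for j in range(i + 1, len(p)) if p[i] > p[j])
    return -1 if inversions % 2 else 1

def det_leibniz(A):
    """Leibniz formula: signed sum over all n! permutations of column indices."""
    n = len(A)
    return sum(sign(p) * prod(A[i][p[i]] for i in range(n))
               for p in permutations(range(n)))

def det3_explicit(M):
    """The six-term 3x3 formula aei + bfg + cdh - ceg - bdi - afh."""
    (a, b, c), (d, e, f), (g, h, i) = M
    return a * e * i + b * f * g + c * d * h - c * e * g - b * d * i - a * f * h

def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)]
            for row in A]

M = [[1, 2, 3], [4, 5, 6], [7, 8, 10]]
print(det3_explicit(M), det_leibniz(M))   # both give -3

# Multiplicativity: det(AB) = det(A) * det(B).
A = [[1, 2], [3, 4]]
B = [[0, 1], [5, -2]]
print(det_leibniz(matmul(A, B)), det_leibniz(A) * det_leibniz(B))  # both give 10
```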

Vector (mathematics And Physics)

In mathematics and physics, vector is a term that refers colloquially to some quantities that cannot be expressed by a single number (a scalar), or to elements of some vector spaces. Historically, vectors were introduced in geometry and physics (typically in mechanics) for quantities that have both a magnitude and a direction, such as displacements, forces and velocity. Such quantities are represented by geometric vectors in the same way as distances, masses and time are represented by real numbers. The term *vector* is also used, in some contexts, for tuples, which are finite sequences of numbers of a fixed length. Both geometric vectors and tuples can be added and scaled, and these vector operations led to the concept of a vector space, which is a set equipped with a vector addition and a scalar multiplication that satisfy some axioms generalizing the main properties of operations on the above sorts of vectors. A vector space formed by geometric vectors is called a ...