
Entropic Vector

The entropic vector or entropic function is a concept arising in information theory. It represents the possible values of Shannon's information entropy that subsets of one set of random variables may take. Understanding which vectors are entropic is a way to represent all possible inequalities between entropies of various subsets. For example, for any two random variables $X_1, X_2$, their joint entropy (the entropy of the random variable representing the pair $X_1, X_2$) is at most the sum of the entropies of $X_1$ and of $X_2$:

$$H(X_1,X_2) \leq H(X_1) + H(X_2)$$

Other information-theoretic measures such as conditional information, mutual information, or total correlation can be expressed in terms of joint entropy and are thus related by the corresponding inequalities. Many inequalities satisfied by entropic vectors can be derived as linear combinations of a few basic ones, called Shannon-type inequalities. However, it has been proven that already for $n=4$ variables, no finite set of li ...
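As a rough illustration of the idea, the sketch below computes the entropic vector of a small joint distribution (one joint entropy per non-empty subset of variables) and checks the subadditivity inequality quoted above. The function name `entropic_vector` and the example distribution are assumptions made for this sketch, not part of the source.

```python
from itertools import combinations
from math import log2


def entropic_vector(joint, n):
    """Map each non-empty subset of variable indices to its joint entropy in bits.

    `joint` maps outcome tuples (x_0, ..., x_{n-1}) to probabilities.
    """
    vector = {}
    for size in range(1, n + 1):
        for subset in combinations(range(n), size):
            # Marginalize the joint distribution onto the chosen coordinates.
            marginal = {}
            for outcome, p in joint.items():
                key = tuple(outcome[i] for i in subset)
                marginal[key] = marginal.get(key, 0.0) + p
            vector[subset] = -sum(p * log2(p) for p in marginal.values() if p > 0)
    return vector


# Hypothetical example: X_1 is a fair bit and X_2 is a noisy copy of X_1.
joint = {(0, 0): 0.4, (0, 1): 0.1, (1, 0): 0.1, (1, 1): 0.4}
h = entropic_vector(joint, 2)

# Subadditivity: H(X_1, X_2) <= H(X_1) + H(X_2).
assert h[(0, 1)] <= h[(0,)] + h[(1,)] + 1e-12
```

For $n$ variables the vector has $2^n - 1$ coordinates, so this brute-force enumeration is only practical for small $n$.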

Information Theory

Information theory is the scientific study of the quantification, storage, and communication of information. The field was originally established by the works of Harry Nyquist and Ralph Hartley in the 1920s, and Claude Shannon in the 1940s. The field is at the intersection of probability theory, statistics, computer science, statistical mechanics, information engineering, and electrical engineering. A key measure in information theory is entropy. Entropy quantifies the amount of uncertainty involved in the value of a random variable or the outcome of a random process. For example, identifying the outcome of a fair coin flip (with two equally likely outcomes) provides less information (lower entropy) than specifying the outcome from a roll of a die (with six equally likely outcomes). Some other important measures in information theory are mutual informat ...
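The coin-versus-die comparison can be checked numerically. This is a minimal sketch; the helper name `shannon_entropy` is an assumption of the example.

```python
from math import log2


def shannon_entropy(probs):
    """Shannon entropy in bits of a discrete distribution given as probabilities."""
    return -sum(p * log2(p) for p in probs if p > 0)


coin = [1 / 2] * 2  # fair coin: two equally likely outcomes
die = [1 / 6] * 6   # fair die: six equally likely outcomes

h_coin = shannon_entropy(coin)  # 1 bit
h_die = shannon_entropy(die)    # log2(6), about 2.585 bits

assert h_coin < h_die
```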

Conditional Mutual Information

In probability theory, particularly information theory, the conditional mutual information is, in its most basic form, the expected value of the mutual information of two random variables given the value of a third.

Definition

For random variables $X$, $Y$, and $Z$ with support sets $\mathcal{X}$, $\mathcal{Y}$ and $\mathcal{Z}$, the conditional mutual information may be written in terms of the expectation operator:

$$I(X;Y|Z) = \mathbb{E}_Z\left[D_{\mathrm{KL}}\left(P_{(X,Y)|Z} \,\|\, P_{X|Z} \otimes P_{Y|Z}\right)\right]$$

Thus $I(X;Y|Z)$ is the expected (with respect to $Z$) Kullback–Leibler divergence from the conditional joint distribution $P_{(X,Y)|Z}$ to the product of the conditional marginals $P_{X|Z}$ and $P_{Y|Z}$. Compare with the definition of mutual information.

In terms of PMFs for discrete distributions

For discrete random variables $X$, $Y$, and $Z$ with support sets $\mathcal{X}$, $\mathcal{Y}$ and $\mathcal{Z}$, the conditional mutual information $I(X;Y|Z)$ is as follows:

$$I(X;Y|Z) = \sum_{z\in\mathcal{Z}} p_Z(z) \sum_{y\in\mathcal{Y}} \sum_{x\in\mathcal{X}} p_{X,Y|Z}(x,y|z) \log \frac{p_{X,Y|Z}(x,y|z)}{p_{X|Z}(x|z)\,p_{Y|Z}(y|z)}$$

where the marginal, joint, ...
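The discrete formula can be evaluated directly from a joint probability mass function. The sketch below assumes the joint pmf is supplied as a dictionary keyed by `(x, y, z)`; the function name and the XOR example are illustrative choices, not taken from the source.

```python
from collections import defaultdict
from math import log2


def conditional_mutual_information(joint):
    """I(X;Y|Z) in bits for a joint pmf given as {(x, y, z): probability}."""
    p_z = defaultdict(float)
    p_xz = defaultdict(float)
    p_yz = defaultdict(float)
    for (x, y, z), p in joint.items():
        p_z[z] += p
        p_xz[(x, z)] += p
        p_yz[(y, z)] += p

    total = 0.0
    for (x, y, z), p in joint.items():
        if p > 0:
            # p(x,y|z) / (p(x|z) p(y|z)) equals p(x,y,z) p(z) / (p(x,z) p(y,z)).
            total += p * log2(p * p_z[z] / (p_xz[(x, z)] * p_yz[(y, z)]))
    return total


# Hypothetical example: X and Y are independent fair bits and Z = X XOR Y.
# X and Y are marginally independent, yet knowing Z makes each determine the other.
xor_joint = {(x, y, x ^ y): 0.25 for x in (0, 1) for y in (0, 1)}
cmi = conditional_mutual_information(xor_joint)  # 1 bit
```

Weighting each term by $p(x,y,z)$ is the same as the nested sum in the formula, since $p_Z(z)\,p_{X,Y|Z}(x,y|z) = p(x,y,z)$.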

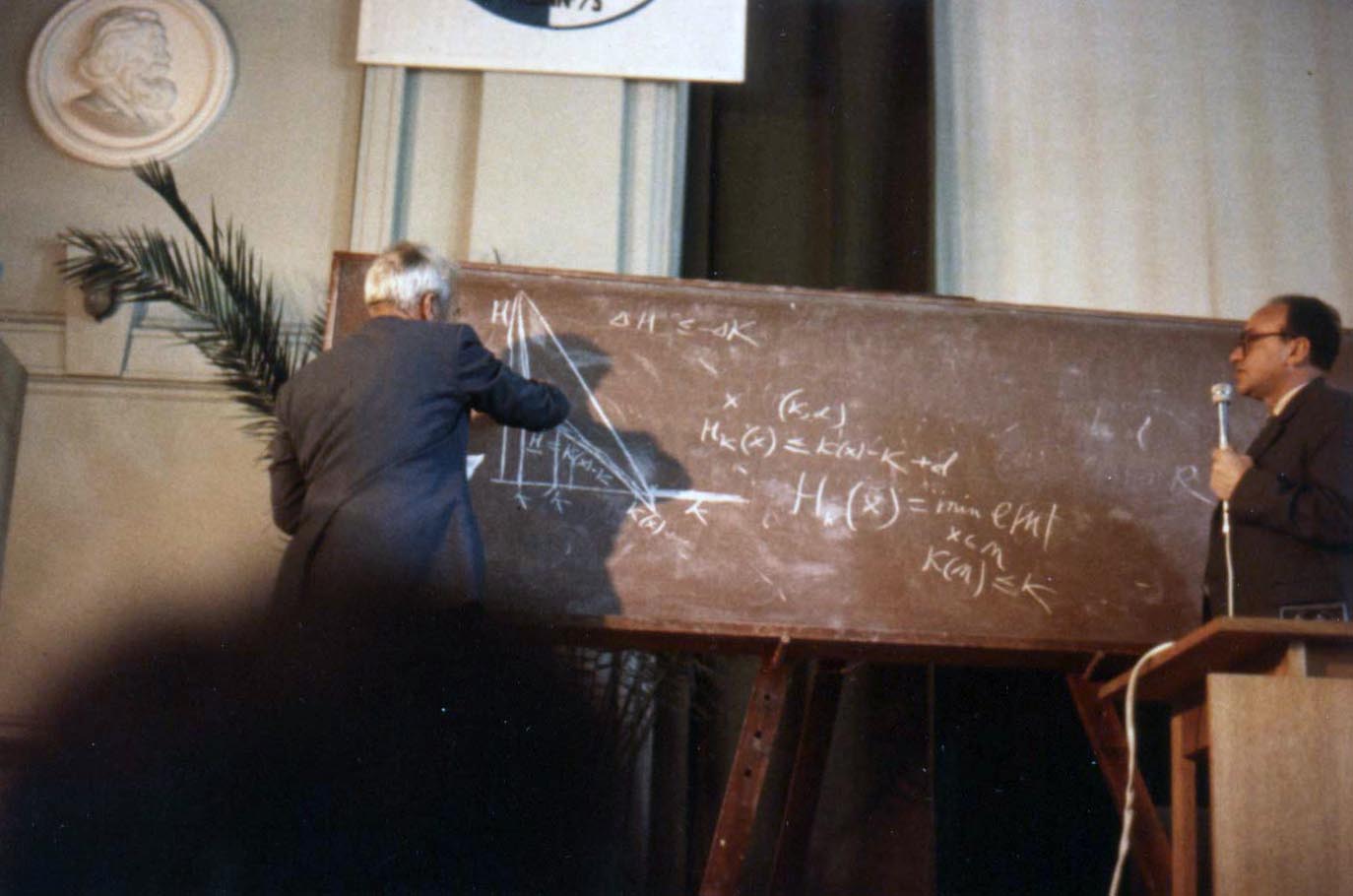

Andrey Kolmogorov

Andrey Nikolaevich Kolmogorov (Russian: Андре́й Никола́евич Колмого́ров, IPA: [ɐnˈdrʲej nʲɪkɐˈlajɪvʲɪtɕ kəlmɐˈɡorəf]; 25 April 1903 – 20 October 1987) was a Soviet mathematician who contributed to the mathematics of probability theory, topology, intuitionistic logic, turbulence, classical mechanics, algorithmic information theory and computational complexity.

Biography

Early life

Andrey Kolmogorov was born in Tambov, about 500 kilometers south-southeast of Moscow, in 1903. His unmarried mother, Maria Y. Kolmogorova, died giving birth to him. Andrey was raised by two of his aunts in Tunoshna (near Yaroslavl) at the estate of his grandfather, a well-to-do nobleman. Little is known about Andrey's father. He was supposedly named Nikolai Matveevich Kataev and had been an agronomist. Kataev had been exiled from St. Petersburg to the Yaroslavl province after his participation in the revolutionary movem ...

Kolmogorov Complexity

In algorithmic information theory (a subfield of computer science and mathematics), the Kolmogorov complexity of an object, such as a piece of text, is the length of a shortest computer program (in a predetermined programming language) that produces the object as output. It is a measure of the computational resources needed to specify the object, and is also known as algorithmic complexity, Solomonoff–Kolmogorov–Chaitin complexity, program-size complexity, descriptive complexity, or algorithmic entropy. It is named after Andrey Kolmogorov, who first published on the subject in 1963, and it is a generalization of classical information theory. The notion of Kolmogorov complexity can be used to state and prove impossibility results akin to Cantor's diagonal argument, Gödel's incompleteness theorem, and Turing's halting problem. In particular, no program P computing a lower bound for each text's Kolmogorov complexity can return a value essentially larger than P's own leng ...
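Kolmogorov complexity itself cannot be computed, but the length of a compressed encoding gives a crude upper-bound proxy (up to the fixed size of the decompressor). The sketch below uses `zlib` purely as a stand-in for "a short program that prints the object"; this substitution is an assumption of the example, not the definition above.

```python
import os
import zlib


def compressed_length(data: bytes) -> int:
    """Length in bytes of the zlib-compressed form, a rough upper-bound proxy."""
    return len(zlib.compress(data, 9))


regular = b"ab" * 5000          # highly regular: a short description exists
random_like = os.urandom(10000)  # random bytes: almost certainly incompressible

# The regular string compresses far better than random bytes of the same length.
assert len(regular) == len(random_like)
assert compressed_length(regular) < compressed_length(random_like)
```

A general-purpose compressor only detects certain kinds of regularity, so a large compressed length does not show that an object has high Kolmogorov complexity.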

Coset

In mathematics, specifically group theory, a subgroup H of a group G may be used to decompose the underlying set of G into disjoint, equal-size subsets called cosets. There are left cosets and right cosets. Cosets (both left and right) have the same number of elements (cardinality) as does H. Furthermore, H itself is both a left coset and a right coset. The number of left cosets of H in G is equal to the number of right cosets of H in G. This common value is called the index of H in G and is usually denoted by [G : H]. Cosets are a basic tool in the study of groups; for example, they play a central role in Lagrange's theorem, which states that for any finite group G, the number of elements of every subgroup H of G divides the number of elements of G. Cosets of a particular type of subgroup (a normal subgroup) can be used as the elements of another group called a quotient group or factor group. Cosets also appear in other areas of mathematics such as vector spaces and error-correcting codes ...
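The properties listed above can be observed on a small example. This sketch enumerates the left and right cosets of a two-element subgroup of the symmetric group on three points; the choice of group, subgroup, and the tuple encoding of permutations are assumptions made for illustration.

```python
from itertools import permutations


def compose(p, q):
    """Return the permutation p∘q (apply q first, then p)."""
    return tuple(p[i] for i in q)


G = list(permutations(range(3)))  # symmetric group S_3, six elements
H = [(0, 1, 2), (1, 0, 2)]        # subgroup: identity and the swap of 0 and 1

left_cosets = {frozenset(compose(g, h) for h in H) for g in G}
right_cosets = {frozenset(compose(h, g) for h in H) for g in G}

# Every coset has |H| elements, and the number of cosets is the index |G| / |H|.
index = len(G) // len(H)
assert len(left_cosets) == len(right_cosets) == index
assert all(len(c) == len(H) for c in left_cosets | right_cosets)
```

Here the left and right cosets are different partitions of the group, which reflects that this particular subgroup is not normal.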

Group (mathematics)

In mathematics, a group is a set and an operation that combines any two elements of the set to produce a third element of the set, in such a way that the operation is associative, an identity element exists and every element has an inverse. These three axioms hold for number systems and many other mathematical structures. For example, the integers together with the addition operation form a group. The concept of a group and the axioms that define it were elaborated for handling, in a unified way, essential structural properties of very different mathematical entities such as numbers, geometric shapes and polynomial roots. Because the concept of groups is ubiquitous in numerous areas both within and outside mathematics, some authors consider it as a central organizing principle of contemporary mathematics. In geometry groups arise naturally in the study of ...
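For a finite set, the axioms can be checked by brute force. The sketch below is an illustration under the assumption that the operation is given as a Python function; since the integers under addition are infinite, it uses addition modulo 5 as a finite stand-in.

```python
from itertools import product


def is_group(elements, op):
    """Brute-force check of closure, associativity, identity and inverses."""
    elements = list(elements)
    universe = set(elements)
    # Closure: combining two elements must stay inside the set.
    if any(op(a, b) not in universe for a, b in product(elements, repeat=2)):
        return False
    # Associativity: (a*b)*c == a*(b*c) for all triples.
    if any(op(op(a, b), c) != op(a, op(b, c))
           for a, b, c in product(elements, repeat=3)):
        return False
    # Identity: some e with e*a == a == a*e for all a.
    identities = [e for e in elements
                  if all(op(e, a) == a == op(a, e) for a in elements)]
    if not identities:
        return False
    e = identities[0]
    # Inverses: every a has some b with a*b == e == b*a.
    return all(any(op(a, b) == e == op(b, a) for b in elements) for a in elements)


add_mod5 = is_group(range(5), lambda a, b: (a + b) % 5)      # True
mul_mod5 = is_group(range(5), lambda a, b: (a * b) % 5)      # False: 0 has no inverse
units_mod5 = is_group(range(1, 5), lambda a, b: (a * b) % 5)  # True
```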

Ingleton's Inequality

In mathematics, Ingleton's inequality is an inequality that is satisfied by the rank function of any representable matroid. In this sense it is a necessary condition for representability of a matroid over a finite field. Let $M$ be a matroid and let $\rho$ be its rank function. Ingleton's inequality states that for any subsets $X_1$, $X_2$, $X_3$ and $X_4$ in the support of $M$, the inequality

$$\rho(X_1)+\rho(X_2)+\rho(X_1\cup X_2\cup X_3)+\rho(X_1\cup X_2\cup X_4)+\rho(X_3\cup X_4) \leq \rho(X_1\cup X_2)+\rho(X_1\cup X_3)+\rho(X_1\cup X_4)+\rho(X_2\cup X_3)+\rho(X_2\cup X_4)$$

is satisfied. Aubrey William Ingleton, an English mathematician, wrote an important paper in 1969 in which he surveyed the representability problem in matroids. Although the article is mainly expository, in this paper Ingleton stated and proved Ingleton's inequality, which has found interesting applications in information theory, matroid th ...
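As a sanity check of the statement, the sketch below builds the rank function of a small matroid represented by vectors over GF(2) and verifies the inequality for every choice of four subsets. The bitmask encoding, the helper names, and the example vectors are assumptions of this sketch.

```python
from itertools import product


def gf2_rank(vectors):
    """Rank over GF(2) of vectors encoded as integer bitmasks."""
    basis = []
    for v in vectors:
        for b in basis:
            # XOR with b exactly when that clears b's leading bit in v.
            v = min(v, v ^ b)
        if v:
            basis.append(v)
    return len(basis)


def ingleton_holds(vectors):
    """Check Ingleton's inequality for all 4-tuples of subsets of the ground set."""
    n = len(vectors)
    # rho[mask] is the rank of the subset whose positions are the set bits of mask.
    rho = [gf2_rank([vectors[i] for i in range(n) if (mask >> i) & 1])
           for mask in range(1 << n)]
    for a, b, c, d in product(range(1 << n), repeat=4):
        lhs = rho[a] + rho[b] + rho[a | b | c] + rho[a | b | d] + rho[c | d]
        rhs = rho[a | b] + rho[a | c] + rho[a | d] + rho[b | c] + rho[b | d]
        if lhs > rhs:
            return False
    return True


# Hypothetical ground set: four vectors in GF(2)^3.
vectors = [0b001, 0b010, 0b011, 0b100]
assert ingleton_holds(vectors)
```

The check is exhaustive, so its cost grows as $16^n$ in the size $n$ of the ground set and it is only meant for very small examples.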

Quantum Information Theory

Quantum information is the information of the state of a quantum system. It is the basic entity of study in quantum information theory, and can be manipulated using quantum information processing techniques. Quantum information refers to both the technical definition in terms of Von Neumann entropy and the general computational term. It is an interdisciplinary field that involves quantum mechanics, computer science, information theory, philosophy and cryptography among other fields. Its study is also relevant to disciplines such as cognitive science, psychology and neuroscience. Its main focus is in extracting information from matter at the microscopic scale. Observation in science is one of the most important ways of acquiring information and measurement is required in order to quantify the observation, making this crucial to the scientific method. In quantum mechanics, due to the uncertainty principle, non-commuting observables cannot be precisely mea ...

Von Neumann Entropy

In physics, the von Neumann entropy, named after John von Neumann, is an extension of the concept of Gibbs entropy from classical statistical mechanics to quantum statistical mechanics. For a quantum-mechanical system described by a density matrix $\rho$, the von Neumann entropy is

$$S = -\operatorname{tr}(\rho \ln \rho),$$

where $\operatorname{tr}$ denotes the trace and $\ln$ denotes the (natural) matrix logarithm. If $\rho$ is written in terms of its eigenvectors $|1\rangle, |2\rangle, |3\rangle, \dots$ as

$$\rho = \sum_j \eta_j |j\rangle\langle j|,$$

then the von Neumann entropy is merely

$$S = -\sum_j \eta_j \ln \eta_j.$$

In this form, $S$ can be seen as the information theoretic Shannon entropy. The von Neumann entropy is also used in different forms (conditional entropies, relative entropies, etc.) in the framework of quantum information theory to characterize the entropy of entanglement.

Background

John von Neumann established a rigorous mathematical framework for quantum me ...
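The eigenvalue form of the entropy is straightforward to evaluate. The sketch below restricts itself to a single qubit (a 2×2 density matrix) so that the eigenvalues have a closed form and no linear-algebra library is needed; the helper names and the two example states are assumptions of this sketch.

```python
from math import log, sqrt


def entropy_from_eigenvalues(etas):
    """S = -sum_j eta_j ln eta_j, with the convention 0 ln 0 = 0."""
    return -sum(eta * log(eta) for eta in etas if eta > 0)


def qubit_eigenvalues(rho):
    """Eigenvalues of a 2x2 Hermitian matrix [[a, b], [conj(b), d]]."""
    a, b, d = rho[0][0].real, rho[0][1], rho[1][1].real
    mean = (a + d) / 2
    radius = sqrt(((a - d) / 2) ** 2 + abs(b) ** 2)
    return [mean + radius, mean - radius]


maximally_mixed = [[0.5, 0.0], [0.0, 0.5]]  # eigenvalues 1/2, 1/2
pure_plus = [[0.5, 0.5], [0.5, 0.5]]        # projector onto (|0> + |1>)/sqrt(2)

s_mixed = entropy_from_eigenvalues(qubit_eigenvalues(maximally_mixed))  # ln 2
s_pure = entropy_from_eigenvalues(qubit_eigenvalues(pure_plus))         # 0
```

A pure state has a single eigenvalue equal to 1 and therefore zero entropy, while the maximally mixed qubit attains the largest value, $\ln 2$.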

Combinatorics, Probability And Computing

Combinatorics, Probability and Computing is a peer-reviewed scientific journal in mathematics published by Cambridge University Press. Its editor-in-chief is Béla Bollobás (DPMMS and University of Memphis).

History

The journal was established by Bollobás in 1992. Fields Medalist Timothy Gowers calls it "a personal favourite" among combinatorics journals and writes that it "maintains a high standard".

Content

The journal covers combinatorics, probability theory, and theoretical computer science. Currently, it publishes six issues annually. As with other journals from the same publisher, it follows a hybrid green/gold open access policy, in which authors may either place copies of their papers in an institutional repository after a six-month embargo period, or pay an open access charge to make their papers free to read on the journal's website.

Abstracting and indexing

The journal is abstracted and indexed in:

According to the Journal Citation Reports, the jou ...

Nicholas Pippenger

Nicholas John Pippenger is a researcher in computer science. He has produced a number of fundamental results, many of which are widely used in theoretical computer science, database processing and compiler optimization. He has also achieved the rank of IBM Fellow at Almaden IBM Research Center in San Jose, California. He has taught at the University of British Columbia in Vancouver, British Columbia, Canada and at Princeton University in the US. In the fall of 2006 Pippenger joined the faculty of Harvey Mudd College. Pippenger holds a B.S. in Natural Sciences from Shimer College and a PhD from the Massachusetts Institute of Technology. He is married to Maria Klawe, President of Harvey Mudd College. In 1997 he was inducted as a Fellow of the Association for Computing Machinery. In 2013 he became a fellow of the American Mathematical Society. The complexity class, Nick's Class (NC), of problems quickly solvable on a parallel computer, was named by Stephen Cook ...

Submodular

In mathematics, a submodular set function (also known as a submodular function) is a set function whose value, informally, has the property that the difference in the incremental value of the function that a single element makes when added to an input set decreases as the size of the input set increases. Submodular functions have a natural diminishing returns property which makes them suitable for many applications, including approximation algorithms, game theory (as functions modeling user preferences) and electrical networks. Recently, submodular functions have also found immense utility in several real world problems in machine learning and artificial intelligence, including automatic summarization, multi-document summarization, feature selection, active learning, sensor placement, image collection summarization and many other domains.

Definition

If $\Omega$ is a finite set, a submodular function is a set function $f: 2^{\Omega} \rightarrow \mathbb{R}$, where $2^\Omega$ denotes the power set ...
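The formal definition is cut off above, so the sketch below assumes one standard formulation of submodularity, $f(S) + f(T) \geq f(S \cup T) + f(S \cap T)$ for all subsets $S, T$, and checks it by brute force. The coverage function and the sensor data are hypothetical examples chosen to show diminishing returns.

```python
from itertools import combinations


def subsets(ground):
    """Yield every subset of `ground` as a frozenset."""
    items = list(ground)
    for r in range(len(items) + 1):
        for combo in combinations(items, r):
            yield frozenset(combo)


def is_submodular(f, ground):
    """Check f(S) + f(T) >= f(S | T) + f(S & T) for all subsets S, T."""
    all_subsets = list(subsets(ground))
    return all(f(s) + f(t) >= f(s | t) + f(s & t)
               for s in all_subsets for t in all_subsets)


# Hypothetical sensor-placement data: each sensor covers a set of locations.
areas = {"a": {1, 2}, "b": {2, 3}, "c": {3, 4}}


def coverage(chosen):
    """Number of distinct locations covered by the chosen sensors."""
    return len(set().union(*(areas[x] for x in chosen)))


assert is_submodular(coverage, areas)                    # diminishing returns
assert not is_submodular(lambda s: len(s) ** 2, areas)   # increasing returns
```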
