Expected Value Of Sample Information

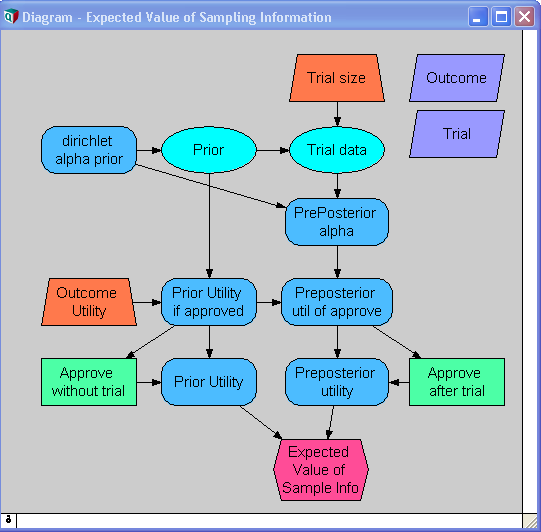

In decision theory, the expected value of sample information (EVSI) is the expected increase in utility that a decision-maker could obtain from gaining access to a sample of additional observations before making a decision. The additional information obtained from the sample may allow them to make a more informed, and thus better, decision, resulting in an increase in expected utility. EVSI attempts to estimate what this improvement would be before seeing actual sample data; hence, EVSI is a form of what is known as ''preposterior analysis''. The use of EVSI in decision theory was popularized by Robert Schlaifer and Howard Raiffa in the 1960s.

Formulation

Let:

\begin{array}{ll}
d \in D & \mbox{the decision being made, chosen from space } D \\
x \in X & \mbox{an uncertain state, with true value in space } X \\
z \in Z & \mbox{an observed sample composed of } n \mbox{ observations } \langle z_1, z_2, \ldots, z_n \rangle \\
U(d,x) & \mbox{the utility of selecting decision } d \mbox{ when the state is } x \\
p(x) & \mbox{the prior probability distribution on } x \\
p(z|x) & \mbox{the conditional probability of observing the sample } z
\end{array}

It is common (but not essential) in EVSI scenarios for Z_i=X, p(z|x)=\prod p(z_i|x) and \int z p(z|x) dz = x, which is to say that each ...
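The preposterior calculation described above can be sketched for a small discrete problem. This is a minimal illustration and is not taken from the excerpt: it assumes the standard definition EVSI = E_z[max_d E[U(d,x) | z]] - max_d E[U(d,x)], finite sets of states and samples, and a hypothetical coin whose bias is either 0.2 or 0.8. The function name `evsi`, its parameters, and all numbers are assumptions made for the example.

```python
def evsi(prior, likelihood, utility, decisions, samples):
    """Expected value of sample information for a finite problem.

    prior:      dict mapping each state x to p(x)
    likelihood: function (z, x) -> conditional probability of sample z given x
    utility:    function (d, x) -> U(d, x)
    decisions:  iterable of available decisions d
    samples:    iterable of every possible sample z
    """
    decisions = list(decisions)
    # Best expected utility when deciding on the prior alone.
    prior_value = max(
        sum(prior[x] * utility(d, x) for x in prior) for d in decisions
    )
    # Expected best utility when deciding after seeing z.
    # max_d sum_x p(x) p(z|x) U(d,x) equals p(z) * max_d E[U(d,x) | z],
    # so summing over z gives the preposterior expectation directly.
    preposterior_value = 0.0
    for z in samples:
        joint = {x: prior[x] * likelihood(z, x) for x in prior}
        preposterior_value += max(
            sum(joint[x] * utility(d, x) for x in prior) for d in decisions
        )
    return preposterior_value - prior_value


# Hypothetical example: the coin bias x is 0.2 or 0.8 with equal prior weight,
# the decision is a guess of the bias, and one flip (z = 1 for heads) is observed.
prior = {0.2: 0.5, 0.8: 0.5}


def likelihood(z, x):
    return x if z == 1 else 1 - x


def utility(d, x):
    return 1.0 if d == x else 0.0


value = evsi(prior, likelihood, utility, decisions=[0.2, 0.8], samples=[0, 1])
print(round(value, 6))
```

In this toy setting a single flip raises the probability of guessing the bias correctly from 0.5 to 0.8, so the sample is worth about 0.3 units of utility. A sample whose distribution does not depend on x would give an EVSI of zero.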

Decision Theory

Decision theory (or the theory of choice; not to be confused with choice theory) is a branch of applied probability theory concerned with making decisions by assigning probabilities to various factors and numerical consequences to the outcomes. There are three branches of decision theory:

1. Normative decision theory: Concerned with the identification of optimal decisions, where optimality is often determined by considering an ideal decision-maker who is able to calculate with perfect accuracy and is in some sense fully rational.
2. Prescriptive decision theory: Concerned with describing observed behaviors through the use of conceptual models, under the assumption that those making the decisions are behaving under some consistent rules.
3. Descriptive decision theory: Analyzes how individuals actually make the decisions that they do.

Decision theory is closely related to the field of game theory and is an interdisciplinary topic, studied by ec ...

Multinomial Distribution

In probability theory, the multinomial distribution is a generalization of the binomial distribution. For example, it models the probability of counts for each side of a ''k''-sided die rolled ''n'' times. For ''n'' independent trials each of which leads to a success for exactly one of ''k'' categories, with each category having a given fixed success probability, the multinomial distribution gives the probability of any particular combination of numbers of successes for the various categories. When ''k'' is 2 and ''n'' is 1, the multinomial distribution is the Bernoulli distribution. When ''k'' is 2 and ''n'' is greater than 1, it is the binomial distribution. When ''k'' is greater than 2 and ''n'' is 1, it is the categorical distribution. The term "multinoulli" is sometimes used for the categorical distribution to emphasize this four-way relationship (so ''n'' determines the prefix, and ''k'' the suffix). The Bernoulli distribution models the outcome of a single Bernoulli tri ...
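The probability of a particular combination of counts can be sketched directly from the standard multinomial mass function, n! / (c_1! ... c_k!) times the product of p_i^{c_i}. The formula is not written out in the excerpt, and the function name `multinomial_pmf` is an assumption made for illustration.

```python
from math import factorial, prod


def multinomial_pmf(counts, probs):
    """Probability of observing `counts` successes per category in
    sum(counts) independent trials with category probabilities `probs`."""
    n = sum(counts)
    # Multinomial coefficient n! / (c_1! * ... * c_k!), kept as an exact integer.
    coefficient = factorial(n)
    for c in counts:
        coefficient //= factorial(c)
    return coefficient * prod(p ** c for p, c in zip(probs, counts))


# k = 2, n = 3 reduces to the binomial case: two heads in three fair flips.
print(multinomial_pmf([2, 1], [0.5, 0.5]))

# A fair six-sided die rolled six times, each face appearing exactly once.
print(multinomial_pmf([1] * 6, [1 / 6] * 6))
```

With ''k'' = 2 the function reproduces binomial probabilities, and with ''n'' = 1 it returns the probability of the single category that occurred, matching the special cases listed above.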

Expected Utility

The expected utility hypothesis is a popular concept in economics that serves as a reference guide for decisions when the payoff is uncertain. The theory recommends which option rational individuals should choose in a complex situation, based on their risk appetite and preferences. The expected utility hypothesis states that an agent chooses between risky prospects by comparing expected utility values (i.e. the sum of the utility values of the payoffs, each weighted by its probability). The summarised formula for expected utility is U(p)=\sum u(x_k)p_k where p_k is the probability that the outcome indexed by k with payoff x_k is realized, and the function ''u'' expresses the utility of each respective payoff. On a graph, the curvature of ''u'' will explain the agent's risk attitude. For example, if an agent derives 0 utils from 0 apples, 2 utils from one apple, and 3 utils from two apples, their expected utility for a 50–50 gamble between zero apples and two is 0.5''u''( ...
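The formula U(p)=\sum u(x_k)p_k can be checked against the apple figures quoted above with a few lines of code. This is only a sketch: the function name `expected_utility` and the dictionary `apple_utils` are assumptions, and the utilities are the ones stated in the example (0, 2 and 3 utils for zero, one and two apples).

```python
def expected_utility(payoffs, probabilities, u):
    """Sum of u(x_k) * p_k over the outcomes of a risky prospect."""
    return sum(u(x) * p for x, p in zip(payoffs, probabilities))


# Utilities from the apple example: 0, 1 and 2 apples give 0, 2 and 3 utils.
apple_utils = {0: 0, 1: 2, 2: 3}

# A 50-50 gamble between zero apples and two apples.
gamble = expected_utility([0, 2], [0.5, 0.5], apple_utils.get)
# One apple received with certainty.
sure_apple = expected_utility([1], [1.0], apple_utils.get)

print(gamble, sure_apple)
```

The gamble has an expected utility of 1.5 utils, which is less than the 2 utils of one certain apple, so an agent with these utilities would prefer the sure apple even though both options yield one apple on average.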

Expected Value Of Including Uncertainty

In decision theory and quantitative policy analysis, the expected value of including uncertainty (EVIU) is the expected difference in the value of a decision based on a probabilistic analysis versus a decision based on an analysis that ignores uncertainty.

Background

Decisions must be made every day in the ubiquitous presence of uncertainty. For most day-to-day decisions, various heuristics are used to act reasonably in the presence of uncertainty, often with little thought about its presence. However, for larger high-stakes decisions or decisions in highly public situations, decision makers may often benefit from a more systematic treatment of their decision problem, such as through quantitative analysis or decision analysis. When building a quantitative decision model, a model builder identifies various relevant factors, and encodes these as ''input variables''. From these inputs, other quantities, called ''result variables'', can be computed; these provide information for t ...

Expected Value Of Perfect Information

In decision theory, the expected value of perfect information (EVPI) is the price that one would be willing to pay in order to gain access to perfect information. A common discipline that uses the EVPI concept is health economics. In that context and when looking at a decision of whether to adopt a new treatment technology, there is always some degree of uncertainty surrounding the decision, because there is always a chance that the decision turns out to be wrong. The expected value of perfect information analysis tries to measure the expected cost of that uncertainty, which “can be interpreted as the expected value of perfect information (EVPI), since perfect information can eliminate the possibility of making the wrong decision” at least from a theoretical perspective.

Equation

The problem is modeled with a payoff matrix R_{ij} in which the row index ''i'' describes a choice that must be made by the player, while the column index ''j'' describes a random variable that the ...
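A payoff-matrix calculation of this kind can be sketched as follows. The excerpt is cut off before the equation, so the code assumes the standard definition: EVPI is the expected payoff when the best row is chosen after the column is revealed, minus the best expected payoff when a row must be chosen beforehand. The function name `evpi`, the column probabilities `probs`, and the numbers are hypothetical.

```python
def evpi(payoff, probs):
    """Expected value of perfect information for a payoff matrix.

    payoff[i][j] is the payoff of choice i when the random state is j;
    probs[j] is the probability of state j.
    """
    # Best expected payoff when committing to one row before j is known.
    best_without_information = max(
        sum(p * r for p, r in zip(probs, row)) for row in payoff
    )
    # Expected payoff when the best row is picked after j is revealed.
    best_with_information = sum(
        p * max(row[j] for row in payoff) for j, p in enumerate(probs)
    )
    return best_with_information - best_without_information


# Hypothetical numbers: a risky choice (row 0) and a safe choice (row 1).
payoff = [[10.0, 0.0],
          [4.0, 4.0]]
probs = [0.5, 0.5]
print(evpi(payoff, probs))
```

Here the risky row is best without information (expected payoff 5), while knowing the state in advance yields 7 on average, so perfect information is worth 2. When one state is certain, the two terms coincide and the EVPI is zero.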

Bayes' Theorem

In probability theory and statistics, Bayes' theorem (alternatively Bayes' law or Bayes' rule), named after Thomas Bayes, describes the probability of an event, based on prior knowledge of conditions that might be related to the event. For example, if the risk of developing health problems is known to increase with age, Bayes' theorem allows the risk to an individual of a known age to be assessed more accurately (by conditioning it on their age) than simply assuming that the individual is typical of the population as a whole. One of the many applications of Bayes' theorem is Bayesian inference, a particular approach to statistical inference. When applied, the probabilities involved in the theorem may have different probability interpretations. With Bayesian probability interpretation, the theorem expresses how a degree of belief, expressed as a probability, should rationally change to account for the availability of related evidence. Bayesian inference is fundamental to Baye ...
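The way a degree of belief changes with evidence can be illustrated with the usual two-hypothesis form of the theorem, P(H|E) = P(E|H)P(H) / (P(E|H)P(H) + P(E|not H)P(not H)). The sketch below is not from the excerpt: the function name `bayes_posterior` and the numbers (a 1% prior, evidence seen in 90% of cases when the hypothesis holds and in 5% otherwise) are hypothetical.

```python
def bayes_posterior(prior, p_evidence_given_h, p_evidence_given_not_h):
    """Posterior probability of hypothesis H after observing evidence E."""
    # Total probability of the evidence over both hypotheses.
    evidence = (prior * p_evidence_given_h
                + (1 - prior) * p_evidence_given_not_h)
    return prior * p_evidence_given_h / evidence


# Hypothetical numbers: H has a 1% prior probability; the evidence appears
# in 90% of cases where H holds and in 5% of cases where it does not.
posterior = bayes_posterior(0.01, 0.9, 0.05)
print(round(posterior, 4))
```

Even with fairly reliable evidence, the posterior stays near 15% because the prior is small, which is the kind of correction that conditioning on known information provides.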

Monte Carlo Methods

Monte Carlo methods, or Monte Carlo experiments, are a broad class of computational algorithms that rely on repeated random sampling to obtain numerical results. The underlying concept is to use randomness to solve problems that might be deterministic in principle. They are often used in physical and mathematical problems and are most useful when it is difficult or impossible to use other approaches. Monte Carlo methods are mainly used in three problem classes: optimization, numerical integration, and generating draws from a probability distribution. In physics-related problems, Monte Carlo methods are useful for simulating systems with many coupled degrees of freedom, such as fluids, disordered materials, strongly coupled solids, and cellular structures (see cellular Potts model, interacting particle systems, McKean–Vlasov processes, kinetic models of gases). Other examples include modeling phenomena with significant uncertainty in inputs such as the calculation of r ...
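As a small illustration of the numerical integration use case, the area of a quarter circle can be estimated by repeated random sampling, which gives an approximation of pi for a problem that is deterministic in principle. This sketch is not from the excerpt; the function name `estimate_pi` and the fixed seed are assumptions made so that the run is reproducible.

```python
import random


def estimate_pi(num_samples, seed=0):
    """Estimate pi from the fraction of uniform random points in the unit
    square that fall inside the quarter circle of radius 1."""
    rng = random.Random(seed)  # private generator, seeded for repeatability
    inside = 0
    for _ in range(num_samples):
        x, y = rng.random(), rng.random()
        if x * x + y * y <= 1.0:
            inside += 1
    # The quarter circle has area pi / 4, so scale the hit fraction by 4.
    return 4.0 * inside / num_samples


print(estimate_pi(100_000))
```

The error of such an estimate shrinks roughly with the square root of the number of samples, so each extra digit of accuracy costs about a hundred times more draws.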
