
Durbin–Wu–Hausman Test

The Durbin–Wu–Hausman test (also called the Hausman specification test) is a statistical hypothesis test in econometrics named after James Durbin, De-Min Wu, and Jerry A. Hausman. The test evaluates the consistency of an estimator by comparing it with an alternative, less efficient estimator that is already known to be consistent. It helps one evaluate whether a statistical model corresponds to the data.

Details

Consider the linear model $y = Xb + e$, where $y$ is the dependent variable, $X$ is a vector of regressors, $b$ is a vector of coefficients, and $e$ is the error term. We have two estimators for $b$: $b_0$ and $b_1$. Under the null hypothesis, both of these estimators are consistent, but $b_1$ is efficient (has the smallest asymptotic variance), at least in the class of estimators containing $b_0$. Under the alternative hypothesis, $b_0$ is consistent, whereas $b_1$ is not. Then the Wu–Hausman statistic is: $H = (b_1 - b_0)'\big($ ...
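The snippet above is cut off before the statistic is fully stated. As a minimal sketch, the code below assumes the standard textbook form $H = (b_1 - b_0)'\,\big(\operatorname{Var}(b_0) - \operatorname{Var}(b_1)\big)^{\dagger}(b_1 - b_0)$, where $\dagger$ is the Moore–Penrose pseudoinverse. The function name `hausman_statistic` and all numeric inputs are hypothetical and chosen only for illustration.

```python
import numpy as np

def hausman_statistic(b0, b1, var_b0, var_b1):
    """Quadratic form d' pinv(Var(b0) - Var(b1)) d, with d = b1 - b0."""
    d = np.asarray(b1, dtype=float) - np.asarray(b0, dtype=float)
    var_diff = np.asarray(var_b0, dtype=float) - np.asarray(var_b1, dtype=float)
    # Pseudoinverse, because the variance difference may be singular
    return float(d @ np.linalg.pinv(var_diff) @ d)

# Hypothetical estimates and covariance matrices (not from any real data set)
b0 = np.array([1.10, 0.48])                      # consistent, less efficient
b1 = np.array([1.00, 0.50])                      # efficient under the null
var_b0 = np.array([[0.040, 0.000], [0.000, 0.010]])
var_b1 = np.array([[0.030, 0.000], [0.000, 0.006]])

H = hausman_statistic(b0, b1, var_b0, var_b1)
print(f"H = {H:.3f}")
```

Under the null hypothesis, a statistic of this form is usually compared with a chi-squared distribution whose degrees of freedom equal the rank of $\operatorname{Var}(b_0) - \operatorname{Var}(b_1)$; a large value suggests that $b_1$ is inconsistent.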

Statistical Hypothesis Test

A statistical hypothesis test is a method of statistical inference used to decide whether the data at hand sufficiently support a particular hypothesis. Hypothesis testing allows us to make probabilistic statements about population parameters.

History

Early use

While hypothesis testing was popularized early in the 20th century, early forms were used in the 1700s. The first use is credited to John Arbuthnot (1710), followed by Pierre-Simon Laplace (1770s), in analyzing the human sex ratio at birth.

Modern origins and early controversy

Modern significance testing is largely the product of Karl Pearson (*p*-value, Pearson's chi-squared test), William Sealy Gosset (Student's t-distribution), and Ronald Fisher ("null hypothesis", analysis of variance, "significance test"), while hypothesis testing was developed by Jerzy Neyman and Egon Pearson (son of Karl). Ronald Fisher began his life in statistics as a Bayesian (Zabell 1992), but Fisher soon grew disenchanted with the ...

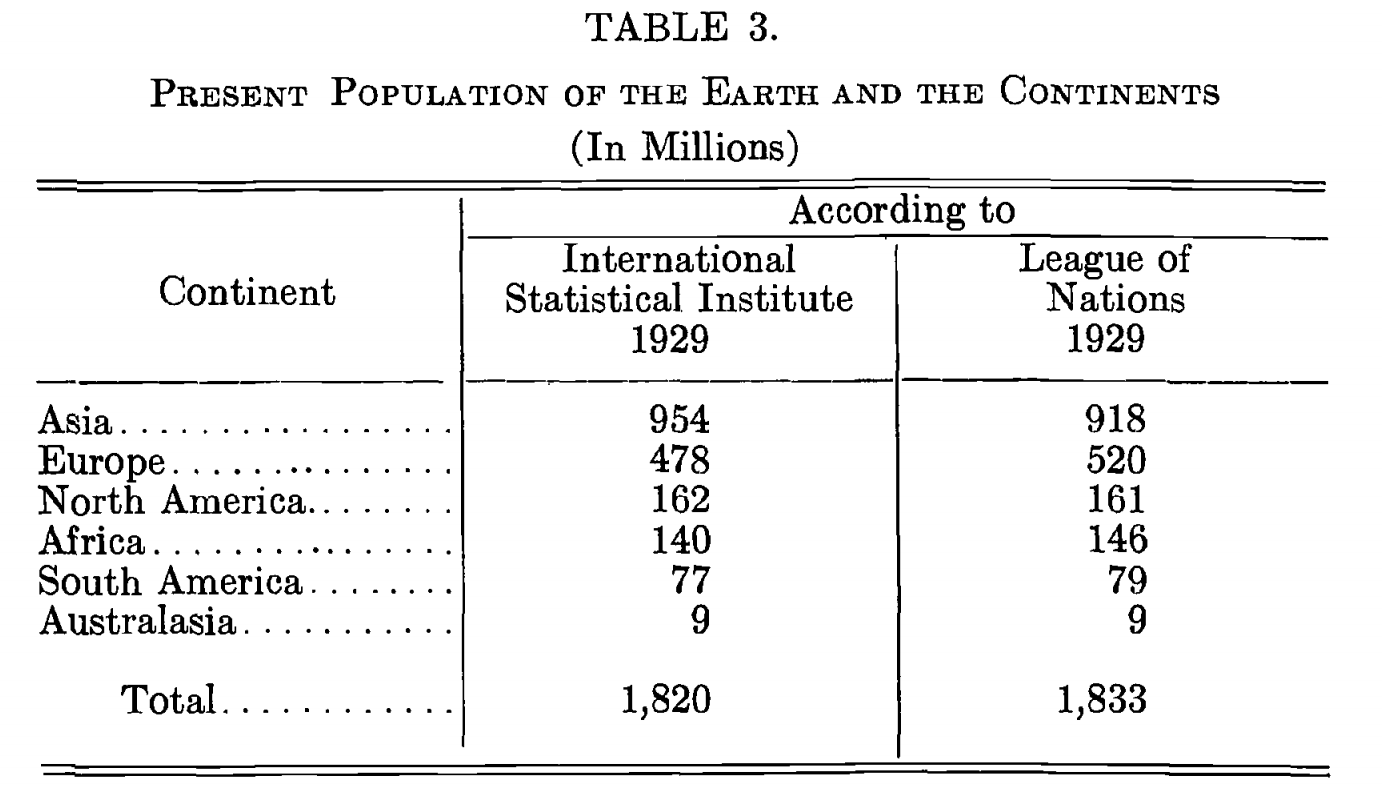

Review Of The International Statistical Institute

The International Statistical Institute (ISI) is a professional association of statisticians. It was founded in 1885, although there had been international statistical congresses since 1853. The institute has about 4,000 elected members from government, academia, and the private sector. The affiliated Associations have membership open to any professional statistician. The institute publishes a variety of books and journals, and holds an international conference every two years. The biennial convention was commonly known as the ISI Session; since 2011 it has been called the ISI World Statistics Congress. The permanent office of the institute is located in the Statistics Netherlands building in Leidschenveen (The Hague), in the Netherlands.

Specialized Associations

ISI serves as an umbrella for seven specialized Associations:

* Bernoulli Society for Mathematical Statistics and Probability (BS)
* International Association for Statistical Computing (IASC)
* Internation ...

Statistical Model Specification

In statistics, model specification is part of the process of building a statistical model: specification consists of selecting an appropriate functional form for the model and choosing which variables to include. For example, given personal income $y$ together with years of schooling $s$ and on-the-job experience $x$, we might specify a functional relationship $y = f(s,x)$ as follows:

$$\ln y = \ln y_0 + \rho s + \beta_1 x + \beta_2 x^2 + \varepsilon$$

where $\varepsilon$ is the unexplained error term that is supposed to comprise independent and identically distributed Gaussian variables. The statistician Sir David Cox has said, "How [the] translation from subject-matter problem to statistical model is done is often the most critical part of an analysis".

Specification error and bias

Specification error occurs when the functional form or the choice of independent variables poorly represents relevant aspects of the true data-generating process. In particular, bias (the expected value of the di ...
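To make the specified functional form concrete, here is a minimal sketch that simulates data from the log-income equation above and recovers its coefficients by least squares. The "true" parameter values, sample size, and variable ranges are hypothetical, chosen only so the simulation is well behaved.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 5000

# Hypothetical parameter values used only to generate synthetic data
ln_y0, rho, beta1, beta2 = 2.0, 0.08, 0.04, -0.0006

s = rng.uniform(8, 20, size=n)        # years of schooling
x = rng.uniform(0, 40, size=n)        # years of on-the-job experience
eps = rng.normal(0.0, 0.3, size=n)    # i.i.d. Gaussian error term
ln_y = ln_y0 + rho * s + beta1 * x + beta2 * x**2 + eps

# Design matrix matching the specified form: columns [1, s, x, x^2]
design = np.column_stack([np.ones(n), s, x, x**2])
coef, *_ = np.linalg.lstsq(design, ln_y, rcond=None)
ln_y0_hat, rho_hat, beta1_hat, beta2_hat = coef

print(f"rho_hat={rho_hat:.4f}  beta1_hat={beta1_hat:.4f}  beta2_hat={beta2_hat:.6f}")
```

Because the fitted model has the same form as the simulated process, the estimates land close to the values used to generate the data; dropping the $x^2$ column would be one simple way to introduce a specification error.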

Regression Model Validation

In statistics, regression validation is the process of deciding whether the numerical results quantifying hypothesized relationships between variables, obtained from regression analysis, are acceptable as descriptions of the data. The validation process can involve analyzing the goodness of fit of the regression, analyzing whether the regression residuals are random, and checking whether the model's predictive performance deteriorates substantially when applied to data that were not used in model estimation.

Goodness of fit

One measure of goodness of fit is the $R^2$ (coefficient of determination), which in ordinary least squares with an intercept ranges between 0 and 1. However, an $R^2$ close to 1 does not guarantee that the model fits the data well: as Anscombe's quartet shows, a high $R^2$ can occur in the presence of misspecification of the functional form of a relationship or in the presence of outliers that distort the true relationship. One problem with the $R^2$ ...
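The point that a high coefficient of determination can coexist with a misspecified functional form can be illustrated with a short sketch. It uses synthetic data (not Anscombe's quartet): the true relationship is quadratic, but a straight line is fitted. The data-generating choices are assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(1)
x = np.linspace(0.0, 10.0, 200)
y = x**2 + rng.normal(0.0, 1.0, size=x.size)   # true relationship is quadratic

# Fit a (deliberately misspecified) straight line by least squares
design = np.column_stack([np.ones_like(x), x])
coef, *_ = np.linalg.lstsq(design, y, rcond=None)
resid = y - design @ coef

# Coefficient of determination: 1 - SS_res / SS_tot
r2 = 1.0 - np.sum(resid**2) / np.sum((y - y.mean())**2)

# Residuals of an adequate model should show no systematic pattern
middle = (x > 10 / 3) & (x < 20 / 3)
print(f"R^2 = {r2:.3f}")
print(f"mean residual, middle third: {resid[middle].mean():.2f}")
print(f"mean residual, outer thirds: {resid[~middle].mean():.2f}")
```

The fit scores well on the coefficient of determination, yet the residuals are systematically negative in the middle of the range and positive at the ends, which is the kind of non-random pattern that residual analysis is meant to catch.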

Panel Analysis

Panel (data) analysis is a statistical method, widely used in social science, epidemiology, and econometrics to analyze two-dimensional (typically cross sectional and longitudinal) panel data. The data are usually collected over time and over the same individuals and then a regression is run over these two dimensions. Multidimensional analysis is an econometric method in which data are collected over more than two dimensions (typically, time, individuals, and some third dimension). A common panel data regression model looks like $y_{it} = a + b x_{it} + \varepsilon_{it}$, where $y$ is the dependent variable, $x$ is the independent variable, $a$ and $b$ are coefficients, and $i$ and $t$ are indices for individuals and time. The error $\varepsilon_{it}$ is very important in this analysis. Assumptions about the error term determine whether we speak of fixed effects or random effects. In a fixed effects model, $\varepsilon_{it}$ is assumed to vary non-stochastically over $i$ or $t$ making the fixed effects model analogous to a dummy var ...

Random Effects Model

In statistics, a random effects model, also called a variance components model, is a statistical model where the model parameters are random variables. It is a kind of hierarchical linear model, which assumes that the data being analysed are drawn from a hierarchy of different populations whose differences relate to that hierarchy. A random effects model is a special case of a mixed model. Contrast this to the biostatistics definitions, as biostatisticians use "fixed" and "random" effects to respectively refer to the population-average and subject-specific effects (and where the latter are generally assumed to be unknown, latent variables).

Qualitative description

Random effect models assist in controlling for unobserved heterogeneity when the heterogeneity is constant over time and not correlated with independent variables. This constant can be removed from longitudinal data through differencing, since taking a first difference will remove any time invariant components of the m ...
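The claim that first differencing removes time-invariant components can be checked directly. The sketch below assumes a simple hypothetical panel $y_{it} = a_i + b\,x_{it} + e_{it}$; the dimensions, slope, and variances are illustrative only.

```python
import numpy as np

rng = np.random.default_rng(2)
n_units, n_periods, b = 200, 6, 1.5     # hypothetical panel size and slope

a = rng.normal(0.0, 2.0, size=(n_units, 1))          # time-invariant unit effect a_i
x = rng.normal(0.0, 1.0, size=(n_units, n_periods))
e = rng.normal(0.0, 0.5, size=(n_units, n_periods))
y = a + b * x + e                                    # y_it = a_i + b x_it + e_it

# First difference along the time axis: value at t minus value at t-1
dy, dx, de = np.diff(y, axis=1), np.diff(x, axis=1), np.diff(e, axis=1)

# The unit effect cancels exactly, leaving dy = b * dx + de
assert np.allclose(dy, b * dx + de)

# Slope from a no-intercept regression of dy on dx
b_fd = np.sum(dx * dy) / np.sum(dx**2)
print(f"first-difference estimate of b: {b_fd:.3f}")
```

Since $a_i$ does not change over time, it drops out of every difference, so the slope can be estimated without knowing or modeling the unit effects.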

Fixed Effects Model

In statistics, a fixed effects model is a statistical model in which the model parameters are fixed or non-random quantities. This is in contrast to random effects models and mixed models in which all or some of the model parameters are random variables. In many applications including econometrics and biostatistics a fixed effects model refers to a regression model in which the group means are fixed (non-random) as opposed to a random effects model in which the group means are a random sample from a population. Generally, data can be grouped according to several observed factors. The group means could be modeled as fixed or random effects for each grouping. In a fixed effects model each group mean is a group-specific fixed quantity. In panel data where longitudinal observations exist for the same subject, fixed effects represent the subject-specific means. In panel data analysis the term fixed effects estimator (also known as the within estimator) is used to refer to an estimator ...
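As an illustration of the within estimator mentioned above, the following sketch subtracts each subject's own time average before running least squares. It assumes a hypothetical panel in which the subject-specific mean is correlated with the regressor, so that pooled least squares is biased; all numbers are illustrative.

```python
import numpy as np

rng = np.random.default_rng(3)
n_units, n_periods, b = 500, 5, 2.0     # hypothetical values

a = rng.normal(0.0, 1.0, size=(n_units, 1))               # subject-specific mean
x = a + rng.normal(0.0, 1.0, size=(n_units, n_periods))   # regressor correlated with a_i
y = a + b * x + rng.normal(0.0, 1.0, size=(n_units, n_periods))

def ols_slope(u, v):
    """Least-squares slope of v on u after removing the overall means."""
    u, v = u - u.mean(), v - v.mean()
    return np.sum(u * v) / np.sum(u**2)

# Pooled OLS ignores a_i and absorbs it into the error term
b_pooled = ols_slope(x.ravel(), y.ravel())

# Within transformation: subtract each subject's time average
x_within = x - x.mean(axis=1, keepdims=True)
y_within = y - y.mean(axis=1, keepdims=True)
b_within = ols_slope(x_within.ravel(), y_within.ravel())

print(f"pooled: {b_pooled:.3f}   within: {b_within:.3f}   true: {b}")
```

Demeaning removes the subject-specific means in the same way that including one dummy variable per subject would, which is why the within estimate stays close to the slope used to generate the data while the pooled estimate does not.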

Delta Method

In statistics, the delta method is a result concerning the approximate probability distribution for a function of an asymptotically normal statistical estimator from knowledge of the limiting variance of that estimator.

History

The delta method was derived from propagation of error, and the idea behind it was known in the early 19th century. Its statistical application can be traced as far back as 1928 by T. L. Kelley. A formal description of the method was presented by J. L. Doob in 1935. Robert Dorfman also described a version of it in 1938.

Univariate delta method

While the delta method generalizes easily to a multivariate setting, careful motivation of the technique is more easily demonstrated in univariate terms. Roughly, if there is a sequence of random variables $X_n$ satisfying

$$\sqrt{n}\,[X_n - \theta] \xrightarrow{D} \mathcal{N}(0, \sigma^2),$$

where $\theta$ and $\sigma^2$ are finite valued constants and $\xrightarrow{D}$ denotes convergence in distribution, then

$$\sqrt{n}\,[g(X_n) - g(\theta)] \xrightarrow{D} \mathcal{N}\big(0, \sigma^2\,[g'(\theta)]^2\big)$$

for any function $g$ satisfying the property that $g'(\theta)$ exists and is non-zero value ...
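A small simulation can make the univariate statement tangible. The sketch below assumes the standard form of the result, namely that $\sqrt{n}\,[g(X_n) - g(\theta)]$ has limiting variance $\sigma^2 [g'(\theta)]^2$, and checks it for a hypothetical case: $X_n$ is the mean of $n$ Exponential(1) draws (so $\theta = 1$, $\sigma^2 = 1$) and $g(t) = t^2$.

```python
import numpy as np

rng = np.random.default_rng(4)
n, reps = 400, 5000                     # sample size and number of replications

# X_n: mean of n Exponential(1) draws, so theta = 1 and sigma^2 = 1
x_bar = rng.exponential(1.0, size=(reps, n)).mean(axis=1)

def g(t):
    """Illustrative smooth transformation; g'(t) = 2 t."""
    return t**2

theta, sigma2 = 1.0, 1.0
g_prime = 2.0 * theta

# Empirical variance of sqrt(n) * (g(X_n) - g(theta)) across replications
scaled = np.sqrt(n) * (g(x_bar) - g(theta))
empirical_var = scaled.var()

# Variance predicted by the delta method
delta_var = sigma2 * g_prime**2

print(f"empirical: {empirical_var:.3f}   delta method: {delta_var:.3f}")
```

The two numbers agree only approximately, since the delta method is an asymptotic result and the simulation uses a finite sample size and a finite number of replications.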

Ordinary Least Squares

In statistics, ordinary least squares (OLS) is a type of linear least squares method for choosing the unknown parameters in a linear regression model (with fixed level-one effects of a linear function of a set of explanatory variables) by the principle of least squares: minimizing the sum of the squares of the differences between the observed dependent variable (values of the variable being observed) in the input dataset and the output of the (linear) function of the independent variable. Geometrically, this is seen as the sum of the squared distances, parallel to the axis of the dependent variable, between each data point in the set and the corresponding point on the regression surface; the smaller the differences, the better the model fits the data. The resulting estimator can be expressed by a simple formula, especially in the case of a simple linear regression, in which there is a single regressor on the right side of the regression equation. The OLS estimator is consiste ...
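For the single-regressor case, the "simple formula" is the familiar closed form: the slope is the sample covariance of the regressor and the dependent variable divided by the sample variance of the regressor, and the intercept follows from the means. The sketch below, on hypothetical synthetic data, checks that this closed form matches a general least-squares solve.

```python
import numpy as np

rng = np.random.default_rng(5)
x = rng.normal(0.0, 1.0, size=100)
y = 3.0 + 0.7 * x + rng.normal(0.0, 0.2, size=100)   # hypothetical data

# Closed form for simple linear regression
x_c, y_c = x - x.mean(), y - y.mean()
slope = np.sum(x_c * y_c) / np.sum(x_c**2)
intercept = y.mean() - slope * x.mean()

# General solve: minimize the sum of squared residuals over [intercept, slope]
design = np.column_stack([np.ones_like(x), x])
coef, *_ = np.linalg.lstsq(design, y, rcond=None)

print(f"closed form: intercept={intercept:.4f}, slope={slope:.4f}")
print(f"lstsq:       intercept={coef[0]:.4f}, slope={coef[1]:.4f}")
```

Both routes minimize the same sum of squared vertical distances, so they return the same coefficients up to floating-point error.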

Instrumental Variable

In statistics, econometrics, epidemiology and related disciplines, the method of instrumental variables (IV) is used to estimate causal relationships when controlled experiments are not feasible or when a treatment is not successfully delivered to every unit in a randomized experiment. Intuitively, IVs are used when an explanatory variable of interest is correlated with the error term, in which case ordinary least squares and ANOVA give biased results. A valid instrument induces changes in the explanatory variable but has no independent effect on the dependent variable, allowing a researcher to uncover the causal effect of the explanatory variable on the dependent variable. Instrumental variable methods allow for consistent estimation when the explanatory variables (covariates) are correlated with the error terms in a regression model. Such correlation may occur when:

1. changes in the dependent variable change the value of at least one of the covariates ("reverse" causation),
2. ...
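The contrast between ordinary least squares and an instrumental variable estimate can be sketched with a simulation. The setup is hypothetical: an unobserved confounder `u` affects both the explanatory variable `x` and the outcome `y`, while the instrument `z` affects `y` only through `x`. With one instrument and one regressor, the IV estimate reduces to a ratio of sample covariances.

```python
import numpy as np

rng = np.random.default_rng(6)
n, b = 20000, 1.5                       # hypothetical sample size and causal effect

z = rng.normal(size=n)                  # instrument: shifts x, unrelated to u
u = rng.normal(size=n)                  # unobserved confounder
x = z + u + rng.normal(size=n)          # explanatory variable, correlated with u
y = b * x + u + rng.normal(size=n)      # u ends up in the error term

def cov(p, q):
    """Sample covariance of two 1-D arrays."""
    return np.mean((p - p.mean()) * (q - q.mean()))

b_ols = cov(x, y) / cov(x, x)           # biased: x is correlated with the error
b_iv = cov(z, y) / cov(z, x)            # simple IV (Wald-type) estimator

print(f"OLS: {b_ols:.3f}   IV: {b_iv:.3f}   true effect: {b}")
```

In this design the OLS slope overstates the effect because it also picks up the confounder, whereas the IV ratio uses only the variation in `x` that is induced by `z`.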

Endogeneity (econometrics)

In econometrics, endogeneity broadly refers to situations in which an explanatory variable is correlated with the error term. The distinction between endogenous and exogenous variables originated in simultaneous equations models, where one separates variables whose values are determined by the model from variables which are predetermined; ignoring simultaneity in the estimation leads to biased estimates as it violates the exogeneity assumption of the Gauss–Markov theorem. The problem of endogeneity is often ignored by researchers conducting non-experimental research, and doing so precludes making policy recommendations. Instrumental variable techniques are commonly used to address this problem. Besides simultaneity, correlation between explanatory variables and the error term can arise when an unobserved or omitted variable is confounding both independent and dependent variables, or when independent variables are measured with error.

Exogeneity versus endogeneity

In a sto ...