
Double-precision

Double-precision floating-point format (sometimes called FP64 or float64) is a floating-point number format, usually occupying 64 bits in computer memory; it represents a wide range of numeric values by using a floating radix point. Double precision may be chosen when the range or precision of single precision would be insufficient. In the IEEE 754 standard, the 64-bit base-2 format is officially referred to as binary64; it was called double in IEEE 754-1985. IEEE 754 specifies additional floating-point formats, including 32-bit base-2 ''single precision'' and, more recently, base-10 representations (decimal floating point). One of the first programming languages to provide floating-point data types was Fortran. Before the widespread adoption of IEEE 754-1985, the representation and properties of floating-point data types depended on the computer manufacturer and computer model, and upon decisions made by programming-language implementers. E.g., GW-BASIC's double-precision ...
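As a rough illustration of the precision gap between the two formats, the sketch below rounds 0.1 to binary32 and compares it with the binary64 value. It assumes that a Python `float` is an IEEE 754 binary64 value, which holds on common platforms but is not guaranteed by the excerpt; the helper name `through_binary32` is made up for this example.

```python
import struct
import sys


def through_binary32(x: float) -> float:
    """Round x to the nearest binary32 value, then widen it back to a Python float."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


# Storage width of the standard-size "d" (double) format, in bits.
bits_per_double = struct.calcsize("<d") * 8
# Precision of the platform float in bits, counting the implicit leading bit.
significand_bits = sys.float_info.mant_dig

as_double = 0.1
as_single = through_binary32(0.1)

print(bits_per_double, significand_bits)
print(f"{as_double:.20f}")   # 0.1 as stored in double precision
print(f"{as_single:.20f}")   # 0.1 after rounding to single precision
```

The two printed decimals agree only in their leading digits, which is the kind of loss that motivates choosing double precision.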

Floating-point Arithmetic

In computing, floating-point arithmetic (FP) is arithmetic on subsets of real numbers formed by a ''significand'' (a signed sequence of a fixed number of digits in some base) multiplied by an integer power of that base. Numbers of this form are called floating-point numbers. For example, the number 2469/200 is a floating-point number in base ten with five digits: 2469/200 = 12.345 = \underbrace{12345}_\text{significand} \times \underbrace{10}_\text{base}\!\!\!\!\!\!\!\overbrace{{}^{-3}}^\text{exponent} However, 7716/625 = 12.3456 is not a floating-point number in base ten with five digits; it needs six digits. The nearest floating-point number with only five digits is 12.346. And 1/3 = 0.3333… is not a floating-point number in base ten with any finite number of digits. In practice, most floating-point systems use base two, though base ten (decimal floating point) is also common. Floating-point arithmetic operations, such as addition and division, approximate the correspond ...
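The five-digit base-ten examples above can be checked with a minimal sketch that uses Python's `decimal` module as a stand-in for a five-digit decimal floating-point system. The context name `five_digits` is chosen for this illustration, and the context's default round-half-even rounding is assumed.

```python
from decimal import Context, Decimal
from fractions import Fraction

# A decimal floating-point context limited to five significant digits.
five_digits = Context(prec=5)

# 2469/200 is exactly 12345 x 10^-3, so it fits in five digits.
exact = Fraction(2469, 200)
fits_in_five_digits = exact == Fraction(12345, 10**3)

# 12.3456 needs six digits; the context rounds it to the nearest five-digit value.
rounded = five_digits.create_decimal("12.3456")

# 1/3 has no finite decimal expansion, so the quotient is only an approximation.
third = five_digits.divide(Decimal(1), Decimal(3))
back = five_digits.multiply(third, Decimal(3))

print(fits_in_five_digits, rounded, third, back)
```

Multiplying the rounded third by 3 does not return 1, which shows the approximation error carried by the five-digit result.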

IEEE 754

The IEEE Standard for Floating-Point Arithmetic (IEEE 754) is a technical standard for floating-point arithmetic originally established in 1985 by the Institute of Electrical and Electronics Engineers (IEEE). The standard addressed many problems found in the diverse floating-point implementations that made them difficult to use reliably and portably. Many hardware floating-point units use the IEEE 754 standard. The standard defines:
* ''arithmetic formats:'' sets of binary and decimal floating-point data, which consist of finite numbers (including signed zeros and subnormal numbers), infinities, and special "not a number" values (NaNs)
* ''interchange formats:'' encodings (bit strings) that may be used to exchange floating-point data in an efficient and compact form
* ''rounding rules:'' properties to be satisfied when rounding numbers during arithmetic and conversions
* ''operations:'' arithmetic and other operatio ...
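The special values listed under arithmetic formats can be observed directly in Python, assuming its `float` type is an IEEE 754 binary64 value on the platform in use. This is only a sketch of their behaviour; the variable names are illustrative.

```python
import math
import sys

# Signed zeros compare equal, but the sign is still recoverable.
pos_zero, neg_zero = 0.0, -0.0
zeros_compare_equal = pos_zero == neg_zero
neg_zero_sign = math.copysign(1.0, neg_zero)

# Infinities exceed every finite value; a NaN is unequal even to itself.
inf = math.inf
nan = math.nan
nan_equals_itself = nan == nan

# Halving the smallest normal number yields a nonzero subnormal number.
smallest_normal = sys.float_info.min
subnormal = smallest_normal / 2

print(zeros_compare_equal, neg_zero_sign, nan_equals_itself, subnormal)
```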

Normal Number (computing)

In computing, a normal number is a non-zero number in a floating-point representation which is within the balanced range supported by a given floating-point format: it is a floating-point number that can be represented without leading zeros in its significand. The magnitude of the smallest normal number in a format is given by: b^{E_\text{min}} where ''b'' is the base (radix) of the format (commonly 2 or 10, for binary and decimal number systems), and E_\text{min} depends on the size and layout of the format. Similarly, the magnitude of the largest normal number in a format is given by: b^{E_\text{max}}\cdot\left(b - b^{1-p}\right) where ''p'' is the precision of the format in digits and E_\text{min} is related to E_\text{max} as: E_\text{min} \equiv 1 - E_\text{max} = \left(-E_\text{max}\right) + 1 In the IEEE 754 binary and decimal formats, ''b'', ''p'', E_\text{min}, and E_\text{max} have the following values: For example, in the smallest decimal format in the table (decimal32), the range of positive normal numbers is 10^−95 through ...
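The two magnitude formulas can be evaluated exactly with rational arithmetic. The sketch below is illustrative only: the function name `normal_range` is made up, and because the parameter table is not reproduced in the excerpt, the binary64 values (b = 2, p = 53, E_max = 1023) and decimal32 values (b = 10, p = 7, E_max = 96) are supplied here from the IEEE 754 format definitions.

```python
import sys
from fractions import Fraction
from typing import Tuple


def normal_range(b: int, p: int, e_max: int) -> Tuple[Fraction, Fraction]:
    """Return (smallest, largest) positive normal magnitudes for base b, precision p."""
    e_min = 1 - e_max                                   # E_min = 1 - E_max
    smallest = Fraction(b) ** e_min                     # b^E_min
    largest = Fraction(b) ** e_max * (b - Fraction(b) ** (1 - p))  # b^E_max * (b - b^(1-p))
    return smallest, largest


# binary64 parameters (assumed from the standard, not from the excerpt).
lo64, hi64 = normal_range(b=2, p=53, e_max=1023)
# decimal32 parameters (assumed from the standard, not from the excerpt).
lo_d32, hi_d32 = normal_range(b=10, p=7, e_max=96)

print(float(lo64) == sys.float_info.min, float(hi64) == sys.float_info.max)
print(lo_d32 == Fraction(1, 10**95))
```

Using `Fraction` keeps every intermediate value exact, so the comparison against `sys.float_info` is a genuine check and not a rounding coincidence.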

IEEE Floating Point

The IEEE Standard for Floating-Point Arithmetic (IEEE 754) is a technical standard for floating-point arithmetic originally established in 1985 by the Institute of Electrical and Electronics Engineers (IEEE). The standard addressed many problems found in the diverse floating-point implementations that made them difficult to use reliably and portably. Many hardware floating-point units use the IEEE 754 standard. The standard defines:
* ''arithmetic formats:'' sets of binary and decimal floating-point data, which consist of finite numbers (including signed zeros and subnormal numbers), infinities, and special "not a number" values (NaNs)
* ''interchange formats:'' encodings (bit strings) that may be used to exchange floating-point data in an efficient and compact form
* ''rounding rules:'' properties to be satisfied when rounding numbers during arithmetic and conversions
* ''operations:'' arithmetic and other operations (such as trigonometric functions) on arithmetic for ...

Single-precision Floating-point Format

Single-precision floating-point format (sometimes called FP32 or float32) is a computer number format, usually occupying 32 bits in computer memory; it represents a wide dynamic range of numeric values by using a floating radix point. A floating-point variable can represent a wider range of numbers than a fixed-point variable of the same bit width at the cost of precision. A signed 32-bit integer variable has a maximum value of 2^31 − 1 = 2,147,483,647, whereas an IEEE 754 32-bit base-2 floating-point variable has a maximum value of (2 − 2^−23) × 2^127 ≈ 3.4028235 × 10^38. All integers with seven or fewer decimal digits, and any 2^''n'' for a whole number −149 ≤ ''n'' ≤ 127, can be converted exactly into an IEEE 754 single-precision floating-point value. In the IEEE 754 standard, the 32-bit base-2 format is officially referred to as binary32; it was called single in IEEE 754-1985. IEEE 754 specifies additional floating-point types, such as 64-bit base-2 ''doubl ...
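The limits quoted above can be spot-checked by round-tripping values through a 4-byte encoding. This is a sketch under the assumption that `struct`'s `"<f"` format is IEEE 754 binary32, as documented for standard-size packing; `to_binary32` is a hypothetical helper name.

```python
import struct


def to_binary32(x: float) -> float:
    """Round x to the nearest binary32 value and return it as a Python float."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


# Largest finite binary32 value: (2 - 2^-23) * 2^127.
max_single = (2 - 2**-23) * 2.0**127

# Values the text says convert exactly.
seven_digit_integer = 9_999_999.0
smallest_power = 2.0**-149
largest_power = 2.0**127

# 2^24 + 1 has eight decimal digits and is not exactly representable.
too_precise = 2.0**24 + 1

print(max_single)
print(to_binary32(seven_digit_integer) == seven_digit_integer)
print(to_binary32(too_precise) == too_precise)
```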

IEEE 754-1985

IEEE 754-1985 is a historic industry standard for representing floating-point numbers in computers, officially adopted in 1985 and superseded in 2008 by IEEE 754-2008, and then again in 2019 by minor revision IEEE 754-2019. During its 23 years, it was the most widely used format for floating-point computation. It was implemented in software, in the form of floating-point libraries, and in hardware, in the instructions of many CPUs and FPUs. The first integrated circuit to implement the draft of what was to become IEEE 754-1985 was the Intel 8087. IEEE 754-1985 represents numbers in binary, providing definitions for four levels of precision, of which the two most commonly used are: The standard also defines representations for positive and negative infinity, a "negative zero", five exceptions to handle invalid results like division by zero, special values called NaNs for representing those exceptions, denormal numbers to represent numbers smaller than shown above, a ...

Programming Language

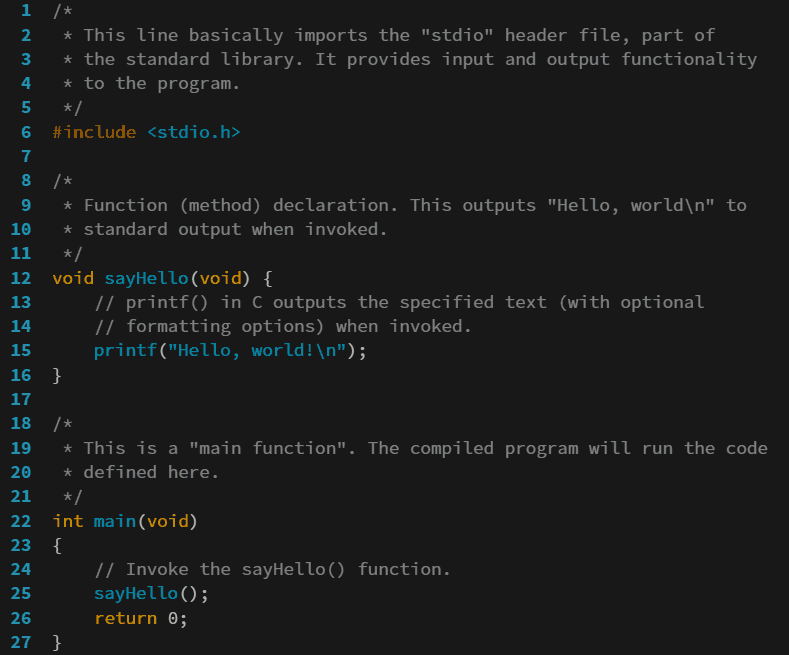

A programming language is a system of notation for writing computer programs. Programming languages are described in terms of their syntax (form) and semantics (meaning), usually defined by a formal language. Languages usually provide features such as a type system, variables, and mechanisms for error handling. An implementation of a programming language is required in order to execute programs, namely an interpreter or a compiler. An interpreter directly executes the source code, while a compiler produces an executable program. Computer architecture has strongly influenced the design of programming languages, with the most common type (imperative languages, which implement operations in a specified order) developed to perform well on the popular von Neumann architecture. ...

Exponent Bias

In IEEE 754 floating-point numbers, the exponent is biased in the engineering sense of the word – the value stored is offset from the actual value by the exponent bias, also called a biased exponent. Biasing is done because exponents have to be signed values in order to be able to represent both tiny and huge values, but two's complement, the usual representation for signed values, would make comparison harder. To solve this problem the exponent is stored as an unsigned value which is suitable for comparison, and when being interpreted it is converted into an exponent within a signed range by subtracting the bias. By arranging the fields such that the sign bit takes the most significant bit position, the biased exponent takes the middle position, then the significand will be the least significant bits and the resulting value will be ordered properly. This is the case whether or not it is interpreted as a floating-point or integer value. The purpose of this is to enable high sp ...
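The field layout and the ordering property described above can be sketched for binary64 as follows. The bias value 1023 and the 1/11/52-bit field widths are not stated in the excerpt and are supplied here as assumptions from the binary64 format; `fields` and `bits_of` are illustrative names.

```python
import struct

BIAS = 1023  # binary64 exponent bias (assumed; the excerpt gives no number)


def bits_of(x: float) -> int:
    """Reinterpret the 8 bytes of a binary64 value as an unsigned integer."""
    return struct.unpack("<Q", struct.pack("<d", x))[0]


def fields(x: float):
    """Split a binary64 value into (sign, biased exponent, fraction) bit fields."""
    bits = bits_of(x)
    sign = bits >> 63                       # most significant bit
    biased_exponent = (bits >> 52) & 0x7FF  # middle 11 bits, stored unsigned
    fraction = bits & ((1 << 52) - 1)       # least significant 52 bits
    return sign, biased_exponent, fraction


# Subtracting the bias recovers the signed exponent.
sign, biased, fraction = fields(0.5)
true_exponent = biased - BIAS

# For positive values, integer ordering of the bit patterns matches numeric ordering.
values = [2.0, 1e-300, 1.5, 1e300, 0.5, 1.0]
same_order = sorted(values, key=bits_of) == sorted(values)

print(fields(1.0), true_exponent, same_order)
```

The ordering check covers positive values only; negative values use a sign-magnitude layout, so their integer ordering is reversed.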

Single Precision

Single-precision floating-point format (sometimes called FP32 or float32) is a computer number format, usually occupying 32 bits in computer memory; it represents a wide dynamic range of numeric values by using a floating radix point. A floating-point variable can represent a wider range of numbers than a fixed-point variable of the same bit width at the cost of precision. A signed 32-bit integer variable has a maximum value of 2^31 − 1 = 2,147,483,647, whereas an IEEE 754 32-bit base-2 floating-point variable has a maximum value of (2 − 2^−23) × 2^127 ≈ 3.4028235 × 10^38. All integers with seven or fewer decimal digits, and any 2^''n'' for a whole number −149 ≤ ''n'' ≤ 127, can be converted exactly into an IEEE 754 single-precision floating-point value. In the IEEE 754 standard, the 32-bit base-2 format is officially referred to as binary32; it was called single in IEEE 754-1985. IEEE 754 specifies additional floating-point types, such as 64-bit base-2 ''doubl ...

Machine Epsilon

Machine epsilon or machine precision is an upper bound on the relative approximation error due to rounding in floating-point number systems. This value characterizes computer arithmetic in the field of numerical analysis, and by extension in the subject of computational science. The quantity is also called macheps and is denoted by the Greek letter epsilon, \varepsilon. There are two prevailing definitions, denoted here as ''rounding machine epsilon'' or the ''formal definition'' and ''interval machine epsilon'' or ''mainstream definition''. In the ''mainstream definition'', machine epsilon is independent of rounding method, and is defined simply as ''the difference between 1 and the next larger floating-point number''. In the ''formal definition'', machine epsilon is dependent on the type of rounding used and is also called unit roundoff, which has the symbol bold Roman u. The two terms can generally be considered to differ by simply a factor of two, with the ''formal definition'' ...
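The ''mainstream definition'' can be probed numerically with a minimal sketch. It assumes binary64 arithmetic with round-to-nearest, ties-to-even, which is the usual default for Python floats; under that assumption the unit roundoff is taken to be half the interval epsilon. The function name `interval_epsilon` is made up for this example.

```python
import sys


def interval_epsilon() -> float:
    """Halve a candidate gap until adding half of it no longer changes 1.0."""
    eps = 1.0
    while 1.0 + eps / 2 > 1.0:
        eps /= 2
    return eps


eps = interval_epsilon()   # gap between 1.0 and the next larger float
unit_roundoff = eps / 2    # assumed value under round-to-nearest

print(eps == sys.float_info.epsilon)
print(1.0 + eps > 1.0, 1.0 + unit_roundoff == 1.0)
```

The loop relies on ties rounding back to 1.0; under a different rounding mode it would stop at a different value, which is why the interval definition is usually read from `sys.float_info.epsilon` directly.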

Significand

The significand (also coefficient, sometimes argument, or more ambiguously mantissa, fraction, or characteristic) is the first (left) part of a number in scientific notation or related concepts in floating-point representation, consisting of its significant digits. For negative numbers, it does not include the initial minus sign. Depending on the interpretation of the exponent, the significand may represent an integer or a fractional number, which may cause the term "mantissa" to be misleading, since the ''mantissa'' of a logarithm is always its fractional part. Although the other names mentioned are common, ''significand'' is the word used by IEEE 754, an important technical standard for floating-point arithmetic. In mathematics, the term "argument" may also be ambiguous, since "the argument of a number" sometimes refers to the length of a circular arc from 1 to a number on the unit circle in the complex plane. Example The number 123.45 can be represented as a decimal floati ...
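Since the significand may be read as an integer or as a fraction depending on how the exponent is interpreted, the sketch below shows one possible decomposition of each kind for 123.45. It is not necessarily the decomposition the truncated example goes on to give: `Decimal.as_tuple` yields an integer significand in base ten, and `math.frexp` yields a fractional significand in base two.

```python
import math
from decimal import Decimal

# Base ten, integer significand: 123.45 = 12345 x 10^-2.
sign, digits, exponent = Decimal("123.45").as_tuple()
integer_significand = int("".join(map(str, digits)))

# Base two, fractional significand m with 0.5 <= m < 1: value = m x 2^e.
binary_significand, binary_exponent = math.frexp(123.45)

print(sign, integer_significand, exponent)
print(binary_significand, binary_exponent)
```

Neither significand carries the sign, which matches the statement that the initial minus sign is not included.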