|

Dekker's Algorithm

Dekker's algorithm is the first known correct solution to the mutual exclusion problem in concurrent programming where processes only communicate via shared memory. The solution was attributed to Dutch people, Dutch mathematician Theodorus Dekker, Th. J. Dekker by Edsger W. Dijkstra in an unpublished paper on sequential process descriptions and his manuscript on cooperating sequential processes. It allows two threads to share a single-use resource without conflict, using only Shared memory (interprocess communication), shared memory for communication. It avoids the strict alternation of a naïve turn-taking algorithm, and was one of the first mutual exclusion algorithms to be invented. Overview If two processes attempt to enter a critical section at the same time, the algorithm will allow only one process in, based on whose it is. If one process is already in the critical section, the other process will busy wait for the first process to exit. This is done by the use of two fl ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Mutual Exclusion

In computer science, mutual exclusion is a property of concurrency control, which is instituted for the purpose of preventing race conditions. It is the requirement that one thread of execution never enters a critical section while a concurrent thread of execution is already accessing said critical section, which refers to an interval of time during which a thread of execution accesses a shared resource or shared memory. The shared resource is a data object, which two or more concurrent threads are trying to modify (where two concurrent read operations are permitted but, no two concurrent write operations or one read and one write are permitted, since it leads to data inconsistency). Mutual exclusion algorithms ensure that if a process is already performing write operation on a data object ritical sectionno other process/thread is allowed to access/modify the same object until the first process has finished writing upon the data object ritical sectionand released the obj ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Memory Ordering

Memory ordering is the order of accesses to computer memory by a CPU. Memory ordering depends on both the order of the instructions generated by the compiler at compile time and the execution order of the CPU at runtime. However, memory order is of little concern outside of multithreading and memory-mapped I/O, because if the compiler or CPU changes the order of any operations, it must necessarily ensure that the reordering does not change the output of ordinary single-threaded code. The memory order is said to be ''strong'' or ''sequentially consistent'' when either the order of operations cannot change or when such changes have no visible effect on any thread. Conversely, the memory order is called ''weak'' or ''relaxed'' when one thread cannot predict the order of operations arising from another thread. Many naïvely written parallel algorithms fail when compiled or executed with a weak memory order. The problem is most often solved by inserting memory barrier instruction ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Semaphore (programming)

In computer science, a semaphore is a variable or abstract data type used to control access to a common resource by multiple threads and avoid critical section problems in a concurrent system such as a multitasking operating system. Semaphores are a type of synchronization primitive. A trivial semaphore is a plain variable that is changed (for example, incremented or decremented, or toggled) depending on programmer-defined conditions. A useful way to think of a semaphore as used in a real-world system is as a record of how many units of a particular resource are available, coupled with operations to adjust that record ''safely'' (i.e., to avoid race conditions) as units are acquired or become free, and, if necessary, wait until a unit of the resource becomes available. Though semaphores are useful for preventing race conditions, they do not guarantee their absence. Semaphores that allow an arbitrary resource count are called counting semaphores, while semaphores that are ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

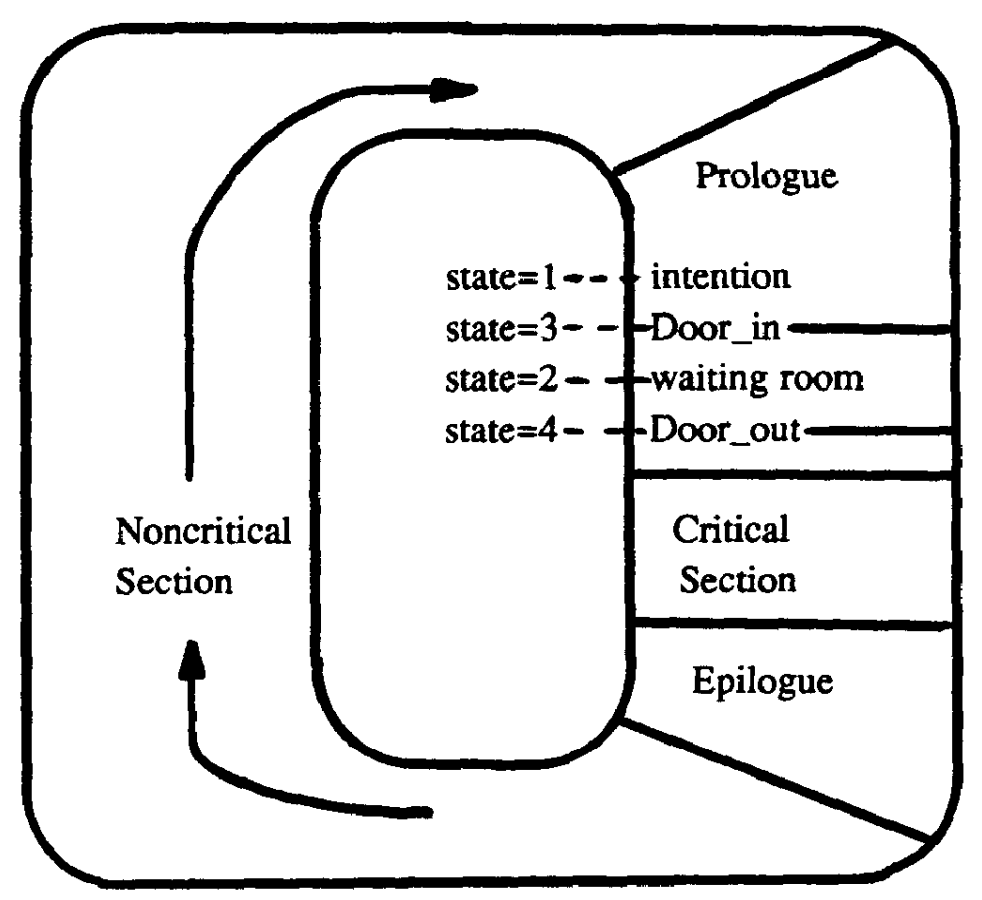

Szymański's Algorithm

Szymański's Mutual Exclusion Algorithm is a mutual exclusion algorithm devised by computer scientist Dr. Bolesław Szymański, which has many favorable properties including linear wait, and which extension solved the open problem posted by Leslie Lamport whether there is an algorithm with a constant number of communication bits per process that satisfies every reasonable fairness and failure-tolerance requirement that Lamport conceived of (Lamport's solution used ''n'' factorial communication variables vs. Szymański's 5). The algorithm The algorithm is modeled on a waiting room with an entry and exit doorway. Initially the entry door is open and the exit door is closed. All processes which request entry into the critical section at roughly the same time enter the waiting room; the last of them closes the entry door and opens the exit door. The processes then enter the critical section one by one (or in larger groups if the critical section permits this). The last process to l ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Lamport's Bakery Algorithm

Lamport's bakery algorithm is a computer algorithm devised by computer scientist Leslie Lamport, as part of his long study of the formal correctness of concurrent systems, which is intended to improve the safety in the usage of shared resources among multiple threads by means of mutual exclusion. In computer science, it is common for multiple threads to simultaneously access the same resources. Data corruption can occur if two or more threads try to write into the same memory location, or if one thread reads a memory location before another has finished writing into it. Lamport's bakery algorithm is one of many mutual exclusion algorithms designed to prevent concurrent threads entering critical sections of code concurrently to eliminate the risk of data corruption. Algorithm Analogy Lamport envisioned a bakery with a numbering machine at its entrance so each customer is given a unique number. Numbers increase by one as customers enter the store. A global counter display ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |