
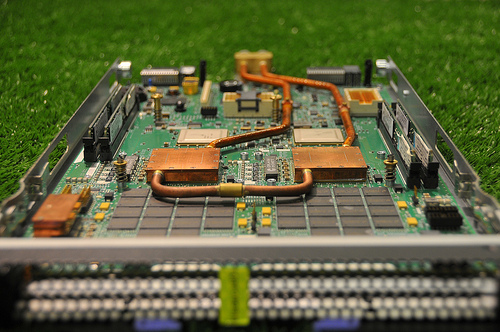

DOME Microserver

A microDataCenter contains compute, storage, power, cooling and networking in a very small volume and is sometimes called a "DataCenter-in-a-box". The term has been used to describe various incarnations of this idea over the past 20 years. In late 2017, a very tightly integrated version, the DOME microDataCenter, was shown at the 2017 SuperComputing conference. Its key features are hot-water cooling, a fully solid-state design, and construction from commodity components and standards only.

DOME is a Dutch government-funded project between IBM and ASTRON, in the form of a public–private partnership, to develop technology roadmaps targeting the Square Kilometre Array (SKA), the world's largest planned radio telescope. SKA will be built in Australia and South Africa during the late 2010s and early 2020s. One of the seven DOME projects is MicroDataCenter (previously called Microservers), which targets servers that are small, inexpensive and computationally efficient. The goal for the MicroDataCenter is the capability to b ...

DOME Project

DOME is a Dutch government-funded project between IBM and ASTRON, in the form of a public–private partnership, focusing on the Square Kilometre Array (SKA), the world's largest planned radio telescope. SKA will be built in Australia and South Africa. The objective of the DOME project was technology roadmap development that applies to both SKA and IBM. The five-year project started in 2012 and was co-funded by the Dutch government, IBM Research in Zürich, Switzerland, and ASTRON in the Netherlands. The project officially ended on 30 September 2017.

The DOME project focused on three areas of computing (green computing, data and streaming, and nanophotonics) and was partitioned into seven research projects:

* P1 Algorithms & Machines – As traditional computing scaling has essentially hit a wall, a new set of methodologies and principles is needed for the design of future large-scale computers. This is an umbrella project for the other six.
* P2 Access Patterns – When faced with stori ...

Supercomputer

A supercomputer is a computer with a high level of performance compared to a general-purpose computer. The performance of a supercomputer is commonly measured in floating-point operations per second (FLOPS) instead of million instructions per second (MIPS). Since 2017, there have been supercomputers that can perform over 10¹⁷ FLOPS (a hundred quadrillion FLOPS, 100 petaFLOPS or 100 PFLOPS). For comparison, a desktop computer has performance in the range of hundreds of gigaFLOPS (10¹¹) to tens of teraFLOPS (10¹³). Since November 2017, all of the world's 500 fastest supercomputers have run Linux-based operating systems. Additional research is being conducted in the United States, the European Union, Taiwan, Japan, and China to build faster, more powerful and technologically superior exascale supercomputers. Supercomputers play an important role in the field of computational science, and are used for a wide range of computationally intensive tasks in var ...
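The orders of magnitude above are easy to misread, so here is a small sketch that renders a raw FLOPS figure with SI prefixes and compares two systems. The helper names `format_flops` and `speedup` are hypothetical, chosen only for this illustration; the inputs are the 10¹⁷, 10¹¹ and 10¹³ figures quoted in the paragraph.

```python
# SI prefixes for FLOPS, from giga (10^9) up to exa (10^18), largest first.
PREFIXES = [(1e18, "EFLOPS"), (1e15, "PFLOPS"), (1e12, "TFLOPS"), (1e9, "GFLOPS")]


def format_flops(flops: float) -> str:
    """Render a FLOPS figure using the largest SI prefix that keeps the value >= 1."""
    for scale, unit in PREFIXES:
        if flops >= scale:
            return f"{flops / scale:g} {unit}"
    return f"{flops:g} FLOPS"


def speedup(fast: float, slow: float) -> float:
    """How many times faster `fast` is than `slow`."""
    return fast / slow


if __name__ == "__main__":
    top_system = 1e17    # the 10^17 FLOPS threshold quoted above
    desktop_low = 1e11   # low end of the quoted desktop range
    desktop_high = 1e13  # high end of the quoted desktop range
    print(format_flops(top_system))            # 100 PFLOPS
    print(format_flops(desktop_low))           # 100 GFLOPS
    print(format_flops(desktop_high))          # 10 TFLOPS
    print(speedup(top_system, desktop_high))   # 10000.0
```

Even against the fastest desktops in the quoted range, a 100 PFLOPS system is about four orders of magnitude faster.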

10 Gigabit Ethernet

10 Gigabit Ethernet (10GE, 10GbE, or 10 GigE) is a group of computer networking technologies for transmitting Ethernet frames at a rate of 10 gigabits per second. It was first defined by the IEEE 802.3ae-2002 standard. Unlike previous Ethernet standards, 10 Gigabit Ethernet defines only full-duplex point-to-point links, which are generally connected by network switches. Shared-medium CSMA/CD operation was not carried over from previous generations of Ethernet, so half-duplex operation and repeater hubs do not exist in 10GbE.

The 10 Gigabit Ethernet standard encompasses a number of different physical layer (PHY) standards. A networking device, such as a switch or a network interface controller, may support different PHY types through pluggable PHY modules, such as those based on SFP+. Like previous versions of Ethernet, 10GbE can use either copper or fiber cabling. Maximum distance over copper cable is 100 meters but because of its bandwidth requirements, higher ...
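A 10 Gbit/s line rate is an upper bound on bits on the wire, not on useful frames. The sketch below estimates the maximum frame rate for back-to-back frames. It assumes the standard Ethernet per-frame wire overhead of an 8-byte preamble plus start frame delimiter and a 12-byte minimum inter-packet gap, which are not stated in the excerpt above. The function name is hypothetical.

```python
PREAMBLE_SFD_BYTES = 8       # 7-byte preamble + 1-byte start frame delimiter
INTERPACKET_GAP_BYTES = 12   # minimum idle gap between consecutive frames


def max_frames_per_second(line_rate_bps: float, frame_bytes: int) -> float:
    """Upper bound on frames/s in one direction for back-to-back frames of one size."""
    wire_bytes = frame_bytes + PREAMBLE_SFD_BYTES + INTERPACKET_GAP_BYTES
    return line_rate_bps / (wire_bytes * 8)


if __name__ == "__main__":
    rate = 10e9  # 10 Gbit/s
    # Minimum-size (64-byte) frames: about 14.88 million frames per second.
    print(round(max_frames_per_second(rate, 64)))
    # Maximum-size untagged frames (1518 bytes) give far fewer frames per second.
    print(round(max_frames_per_second(rate, 1518)))
```

Because 10GbE links are full-duplex only, this bound applies independently to each direction of a link.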

Gigabit Ethernet

In computer networking, Gigabit Ethernet (GbE or 1 GigE) is the term applied to transmitting Ethernet frames at a rate of one gigabit per second. The most popular variant, 1000BASE-T, is defined by the IEEE 802.3ab standard. It came into use in 1999 and has replaced Fast Ethernet in wired local networks because of its considerable speed improvement over Fast Ethernet and its use of cables and equipment that are widely available, economical, and similar to previous standards.

History: Ethernet was the result of research conducted at Xerox PARC in the early 1970s, and later evolved into a widely implemented physical and link layer protocol. Fast Ethernet increased the speed from 10 to 100 megabits per second (Mbit/s). Gigabit Ethernet was the next iteration, increasing the speed to 1000 Mbit/s.

* The initial standard for Gigabit Ethernet was produced by the IEEE in June 1998 as IEEE 802.3z, and required optical fiber. 802.3z is commonly referred to as 1000BASE-X, whe ...
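Each generation named above multiplied the nominal rate by ten. As a rough illustration, this sketch computes the idealized time to move one decimal gigabyte at each nominal rate. It deliberately ignores framing, inter-frame gaps and protocol headers, so real transfers take longer; the names `RATES_BPS` and `transfer_seconds` are hypothetical.

```python
# Nominal line rates in bit/s for the three generations named above.
RATES_BPS = {
    "Ethernet (10 Mbit/s)": 10e6,
    "Fast Ethernet (100 Mbit/s)": 100e6,
    "Gigabit Ethernet (1000 Mbit/s)": 1000e6,
}


def transfer_seconds(payload_bytes: int, rate_bps: float) -> float:
    """Idealized transfer time: payload bits divided by the nominal line rate."""
    return payload_bytes * 8 / rate_bps


if __name__ == "__main__":
    one_gigabyte = 10**9  # decimal gigabyte (10^9 bytes)
    for name, rate in RATES_BPS.items():
        # Prints 800 s, 80 s and 8 s respectively.
        print(f"{name}: {transfer_seconds(one_gigabyte, rate):.0f} s")
```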

Serial ATA

SATA (Serial AT Attachment) is a computer bus interface that connects host bus adapters to mass storage devices such as hard disk drives, optical drives, and solid-state drives. Serial ATA succeeded the earlier Parallel ATA (PATA) standard to become the predominant interface for storage devices. Serial ATA industry compatibility specifications originate from the Serial ATA International Organization (SATA-IO) and are then promulgated by the INCITS Technical Committee T13, AT Attachment (INCITS T13).

History: SATA was announced in 2000 to provide several advantages over the earlier PATA interface, such as reduced cable size and cost (seven conductors instead of 40 or 80), native hot swapping, faster data transfer through higher signaling rates, and more efficient transfer through an (optional) I/O queuing protocol. Revision 1.0 of the specification was released in January 2003.
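"Higher signaling rates" do not translate one-to-one into payload bandwidth, because SATA sends each byte as a 10-bit symbol (8b/10b line coding). The sketch below converts the line rates of the three main SATA generations (1.5, 3 and 6 Gbit/s, which are not listed in the excerpt) into raw payload throughput. It ignores frame and command overhead, so real drives deliver somewhat less. The names are hypothetical.

```python
# Line rates of the three main SATA generations, in bit/s.
SATA_LINE_RATES = {
    "SATA 1.5 Gbit/s": 1.5e9,
    "SATA 3 Gbit/s": 3.0e9,
    "SATA 6 Gbit/s": 6.0e9,
}


def payload_mb_per_s(line_rate_bps: float) -> float:
    """8b/10b coding sends every 8-bit byte as 10 line bits, so bytes/s = line rate / 10."""
    return line_rate_bps / 10 / 1e6


if __name__ == "__main__":
    for name, rate in SATA_LINE_RATES.items():
        # Prints 150, 300 and 600 MB/s respectively.
        print(f"{name}: {payload_mb_per_s(rate):.0f} MB/s")
```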

Booting

In computing, booting is the process of starting a computer, initiated either via hardware such as a button or by a software command. After it is switched on, a computer's central processing unit (CPU) has no software in its main memory, so some process must load software into memory before it can be executed. This may be done by hardware or firmware in the CPU, or by a separate processor in the computer system. Restarting a computer is also called rebooting, which can be "hard", e.g. after electrical power to the CPU is switched from off to on, or "soft", where the power is not cut. On some systems, a soft boot may optionally clear RAM to zero. Both hard and soft booting can be initiated by hardware such as a button press or by a software command. Booting is complete when the operative runtime system, typically the operating system and some applications (including daemons), is attained. The process of returning a computer from a state of sleep (suspension) does not involve bo ...

Debugging

In computer programming and software development, debugging is the process of finding and resolving bugs (defects or problems that prevent correct operation) within computer programs, software, or systems. Debugging tactics can involve interactive debugging, control flow analysis, unit testing, integration testing, log file analysis, monitoring at the application or system level, memory dumps, and profiling. Many programming languages and software development tools also offer programs to aid in debugging, known as debuggers.

Etymology: The terms "bug" and "debugging" are popularly attributed to Admiral Grace Hopper in the 1940s. While she was working on a Mark II computer at Harvard University, her associates discovered a moth stuck in a relay that was impeding operation, whereupon she remarked that they were "debugging" the system. However, the term "bug", in the sense of "technical error", dates back at least to 1878 and Thomas Edison, who describes the "litt ...

Cypress Semiconductor

Cypress Semiconductor was an American semiconductor design and manufacturing company. It offered NOR flash memories, F-RAM and SRAM, Traveo microcontrollers, PSoC programmable system-on-chip solutions, analog ICs and power-management ICs (PMICs), CapSense capacitive touch-sensing controllers, and Bluetooth Low Energy (BLE) wireless and USB connectivity solutions. Its headquarters were in San Jose, California, with operations in the United States, Ireland, India and the Philippines. In April 2016, Cypress Semiconductor announced the acquisition of Broadcom's Wireless Internet of Things business; the deal closed in July 2016. In June 2019, Infineon Technologies announced it would acquire Cypress for $9.4 billion. The deal closed in April 2020, making Infineon one of the world's top 10 semiconductor manufacturers. Some of Cypress's main competitors included Microchip Technology, NXP Semiconductors, Renesas Electronics and Micron Technology.

History (founding and early years): It was ...

PSoC

PSoC (programmable system on a chip) is a family of microcontroller integrated circuits by Cypress Semiconductor. These chips include a CPU core and mixed-signal arrays of configurable integrated analog and digital peripherals.

History: In 2002, Cypress began shipping commercial quantities of the PSoC 1. To promote the PSoC, Cypress sponsored a "PSoC Design Challenge" in Circuit Cellar magazine in 2002 and 2004. In April 2013, Cypress released the fourth generation, PSoC 4. The PSoC 4 features a 32-bit ARM Cortex-M0 CPU, programmable analog blocks (operational amplifiers and comparators), programmable digital blocks (PLD-based UDBs), programmable routing and flexible GPIO (any function can be routed to any pin), a serial communication block (for SPI, UART, I²C), a timer/counter/PWM block and more. PSoC is used in devices as simple as Sonicare toothbrushes and Adidas sneakers, and as complex as the TiVo set-top box. One PSoC implements capacitive sensing for the to ...
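To make the timer/counter/PWM block less abstract, here is a sketch of the arithmetic behind configuring any up-counting PWM: the period count sets the output frequency and the compare value sets the duty cycle. This is a generic, idealized model, not the PSoC Creator or ModusToolbox API. Real PWM blocks differ in details (for example, some period registers hold N−1), and the function name and the clock and frequency values are hypothetical.

```python
def pwm_settings(clock_hz: int, pwm_hz: int, duty: float) -> tuple[int, int]:
    """Return (period_counts, compare_counts) for an idealized up-counting PWM.

    The counter advances once per clock tick and wraps every period_counts ticks;
    the output stays high while the count is below compare_counts.
    """
    if not 0.0 <= duty <= 1.0:
        raise ValueError("duty must be between 0 and 1")
    period_counts = clock_hz // pwm_hz          # ticks per PWM cycle
    compare_counts = round(duty * period_counts)  # ticks the output stays high
    return period_counts, compare_counts


if __name__ == "__main__":
    # Hypothetical: 24 MHz timer clock, 20 kHz PWM output, 25 % duty cycle.
    print(pwm_settings(24_000_000, 20_000, 0.25))  # (1200, 300)
```

The integer division means the achieved PWM frequency is `clock_hz / period_counts`, which only equals the requested frequency when the clock divides evenly.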

Dynamic Random-access Memory

Dynamic random-access memory (dynamic RAM or DRAM) is a type of random-access semiconductor memory that stores each bit of data in a memory cell, usually consisting of a tiny capacitor and a transistor, both typically based on metal-oxide-semiconductor (MOS) technology. While most DRAM memory cell designs use a capacitor and a transistor, some use only two transistors. In designs that use a capacitor, the capacitor can be either charged or discharged; these two states represent the two values of a bit, conventionally called 0 and 1. The electric charge on the capacitors gradually leaks away, so without intervention the data would soon be lost. To prevent this, DRAM requires an external memory refresh circuit that periodically rewrites the data in the capacitors, restoring them to their original charge. This refresh process is the defining characteristic of dynamic random-access memory, in contrast to static random-access memory (SRAM ...
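The cost of refresh can be estimated with simple arithmetic. This sketch assumes a 64 ms retention window covered by 8192 refresh commands, which is typical of DDR3/DDR4 at normal operating temperature but is not stated above. It also assumes a hypothetical 350 ns for how long each refresh command keeps the device busy (tRFC), since the real value varies with device density. The function names are hypothetical.

```python
def refresh_interval_s(retention_s: float, refresh_commands: int) -> float:
    """Average spacing (tREFI) so every row is refreshed once per retention window."""
    return retention_s / refresh_commands


def refresh_overhead(t_rfc_s: float, t_refi_s: float) -> float:
    """Fraction of time the device is busy refreshing instead of serving reads/writes."""
    return t_rfc_s / t_refi_s


if __name__ == "__main__":
    retention = 64e-3   # assumed 64 ms retention window
    commands = 8192     # assumed refresh commands per window
    t_refi = refresh_interval_s(retention, commands)
    print(f"tREFI = {t_refi * 1e6:.4f} us")                      # tREFI = 7.8125 us
    t_rfc = 350e-9      # hypothetical busy time per refresh command
    print(f"overhead = {refresh_overhead(t_rfc, t_refi):.2%}")   # overhead = 4.48%
```

Under these assumptions, a few percent of the memory's time goes to refresh. SRAM avoids this cost entirely because its cells do not leak their state.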

Aquasar

Aquasar is a supercomputer (high-performance computer) prototype created by IBM Labs in collaboration with ETH Zurich in Zürich, Switzerland, and ETH Lausanne in Lausanne, Switzerland. While most supercomputers use air as their coolant, Aquasar uses hot water to achieve high computing efficiency. Alongside the hot-water-cooled main section, an air-cooled section is included so that the cooling efficiency of the two coolants can be compared; the comparison could later be used to improve the performance of the hot-water cooling. The research program was originally titled "Direct use of waste heat from liquid-cooled supercomputers: the path to energy saving, emission-high performance computers and data centers." The waste heat captured by the cooling system can be recycled into the building's heating system, potentially saving money. Beginning in 2009, the three-year collaborative project was introduced and developed in the interest of saving energ ...
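Reusing waste heat depends on how much heat the water loop carries away. That amount follows the standard relation Q = ṁ · c_p · ΔT (mass flow × specific heat × temperature rise). The sketch below applies it with approximate properties of liquid water. The 30 L/min flow and 5 K temperature rise are hypothetical illustration values, not Aquasar's measured figures, and the function name is hypothetical.

```python
WATER_SPECIFIC_HEAT = 4186.0  # J/(kg*K), approximate value for liquid water
WATER_DENSITY = 1.0           # kg/L, approximate


def heat_removed_watts(flow_l_per_min: float, delta_t_k: float) -> float:
    """Q = m_dot * c_p * dT, with the mass flow derived from a volumetric flow rate."""
    mass_flow_kg_per_s = flow_l_per_min * WATER_DENSITY / 60.0
    return mass_flow_kg_per_s * WATER_SPECIFIC_HEAT * delta_t_k


if __name__ == "__main__":
    # Hypothetical loop: 30 L/min of water warming by 5 K across the processors.
    print(f"{heat_removed_watts(30.0, 5.0):.0f} W")  # 10465 W
```

Hot-water cooling returns this heat at a temperature high enough to be useful. That is what makes feeding it into a building's heating system practical, compared with the lukewarm exhaust of air cooling.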