
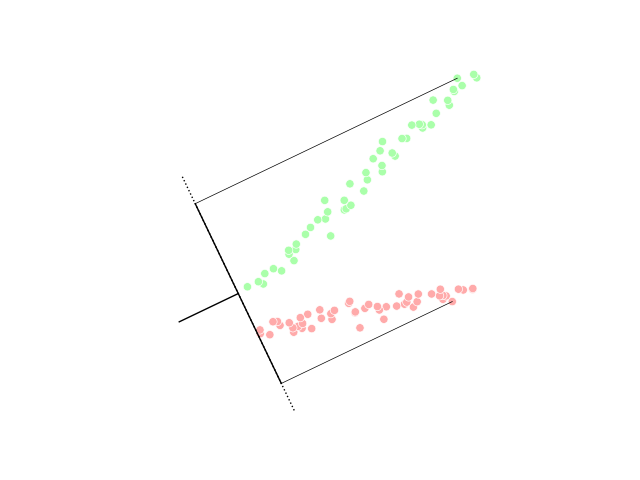

Decision Boundary

In a statistical-classification problem with two classes, a decision boundary or decision surface is a hypersurface that partitions the underlying vector space into two sets, one for each class. The classifier will classify all the points on one side of the decision boundary as belonging to one class and all those on the other side as belonging to the other class. A decision boundary is the region of a problem space in which the output label of a classifier is ambiguous. If the decision surface is a hyperplane, then the classification problem is linear, and the classes are linearly separable. Decision boundaries are not always clear-cut. That is, the transition from one class in the feature space to another is not discontinuous, but gradual. This effect is common in fuzzy-logic-based classification algorithms, where membership in one class or another is ambiguous. Decision boundaries can be approximations of optimal stopping boundaries. The decision boundary is the ...
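As a minimal sketch of the linear case described above, the following assumes a two-dimensional feature space and a hypothetical hyperplane given by weights `w` and offset `b` (neither comes from the source). Points with a positive score fall on one side of the boundary, and points that lie exactly on the boundary are assigned to class 0 by convention here.

```python
def decision_value(w, b, x):
    """Signed score w.x + b; the set where it equals 0 is the decision boundary."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def classify(w, b, x):
    """Return 1 for points strictly on the positive side, 0 otherwise."""
    return 1 if decision_value(w, b, x) > 0 else 0


# Hypothetical example: the boundary is the line x1 + x2 - 1 = 0.
w, b = [1.0, 1.0], -1.0
points = [(0.0, 0.0), (1.0, 1.0), (0.5, 0.5)]
labels = [classify(w, b, p) for p in points]
```

The third point lies exactly on the boundary, which is where the label is ambiguous; the tie-breaking rule is a design choice, not something the definition fixes.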

Statistical Classification

When classification is performed by a computer, statistical methods are normally used to develop the algorithm. Often, the individual observations are analyzed into a set of quantifiable properties, known variously as explanatory variables or ''features''. These properties may variously be categorical (e.g. "A", "B", "AB" or "O", for blood type), ordinal (e.g. "large", "medium" or "small"), integer-valued (e.g. the number of occurrences of a particular word in an email) or real-valued (e.g. a measurement of blood pressure). Other classifiers work by comparing observations to previous observations by means of a similarity or distance function. An algorithm that implements classification, especially in a concrete implementation, is known as a classifier. The term "classifier" sometimes also refers to the mathematical function, implemented by a classification algorithm, that maps input data to a category. Terminology across fields is quite varied. In statistics, where classi ...

Continuous Function

In mathematics, a continuous function is a function such that a small variation of the argument induces a small variation of the value of the function. This implies there are no abrupt changes in value, known as ''discontinuities''. More precisely, a function is continuous if arbitrarily small changes in its value can be assured by restricting to sufficiently small changes of its argument. A discontinuous function is a function that is not continuous. Until the 19th century, mathematicians largely relied on intuitive notions of continuity and considered only continuous functions. The epsilon–delta definition of a limit was introduced to formalize the definition of continuity. Continuity is one of the core concepts of calculus and mathematical analysis, where arguments and values of functions are real and complex numbers. The concept has been generalized to functions between metric spaces and between topological spaces. The latter are the most general continuous functions, and their d ...
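For reference, the epsilon–delta formulation mentioned above can be written as follows for a real-valued function. The symbols `f`, `D`, and `c` are notation chosen here for illustration, not taken from the source.

```latex
% f : D -> R is continuous at a point c in D when:
\[
\forall \varepsilon > 0 \;\; \exists \delta > 0 \;\; \forall x \in D :
\quad |x - c| < \delta \;\Longrightarrow\; |f(x) - f(c)| < \varepsilon .
\]
% f is continuous if this holds at every c in D.
```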

Classification Algorithms

Classification is the activity of assigning objects to some pre-existing classes or categories. This is distinct from the task of establishing the classes themselves (for example through cluster analysis). Examples include diagnostic tests, identifying spam emails and deciding whether to give someone a driving license. As well as 'category', synonyms or near-synonyms for 'class' include 'type', 'species', 'order', 'concept', 'taxon', 'group', 'identification' and 'division'. The meaning of the word 'classification' (and its synonyms) may take on one of several related meanings. It may encompass both classification and the creation of classes, as for example in 'the task of categorizing pages in Wikipedia'; this overall activity is listed under taxonomy. It may refer exclusively to the underlying scheme of classes (which otherwise may be called a taxonomy). Or it may refer to the label given to an object by the classifier. Classification is a part of many different kinds of activ ...

Hyperplane Separation Theorem

In geometry, the hyperplane separation theorem is a theorem about disjoint convex sets in ''n''-dimensional Euclidean space. There are several rather similar versions. In one version of the theorem, if both these sets are closed and at least one of them is compact, then there is a hyperplane in between them and even two parallel hyperplanes in between them separated by a gap. In another version, if both disjoint convex sets are open, then there is a hyperplane in between them, but not necessarily any gap. An axis which is orthogonal to a separating hyperplane is a separating axis, because the orthogonal projections of the convex bodies onto the axis are disjoint. The hyperplane separation theorem is due to Hermann Minkowski. The Hahn–Banach separation theorem generalizes the result to topological vector spaces. A related result is the supporting hyperplane theorem. In the context of support-vector machines, the ''optimally separating hyperplane'' or ''maximum-margin hy ...
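The separating-axis idea above can be illustrated with a small sketch. It assumes two convex polygons in the plane given by their vertices (the squares below are hypothetical data) and checks whether their orthogonal projections onto a candidate axis are disjoint intervals.

```python
def project(points, axis):
    """Project 2-D points onto an axis; return the (min, max) of the interval."""
    dots = [p[0] * axis[0] + p[1] * axis[1] for p in points]
    return min(dots), max(dots)


def is_separating_axis(axis, set_a, set_b):
    """True if the two projected intervals do not overlap."""
    a_min, a_max = project(set_a, axis)
    b_min, b_max = project(set_b, axis)
    return a_max < b_min or b_max < a_min


# Hypothetical example: two unit squares with a gap between x = 1 and x = 2.
square_a = [(0, 0), (1, 0), (1, 1), (0, 1)]
square_b = [(2, 0), (3, 0), (3, 1), (2, 1)]

x_axis_separates = is_separating_axis((1, 0), square_a, square_b)
y_axis_separates = is_separating_axis((0, 1), square_a, square_b)
```

For convex polygons it is enough to project the vertices, since the projection of the whole polygon is the interval spanned by the projected vertices. Here the x-axis separates the squares, and any vertical line in the gap is a separating hyperplane orthogonal to it.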

Discriminant Function

Linear discriminant analysis (LDA), normal discriminant analysis (NDA), canonical variates analysis (CVA), or discriminant function analysis is a generalization of Fisher's linear discriminant, a method used in statistics and other fields, to find a linear combination of features that characterizes or separates two or more classes of objects or events. The resulting combination may be used as a linear classifier, or, more commonly, for dimensionality reduction before later classification. LDA is closely related to analysis of variance (ANOVA) and regression analysis, which also attempt to express one dependent variable as a linear combination of other features or measurements. However, ANOVA uses categorical independent variables and a continuous dependent variable, whereas discriminant analysis has continuous independent variables and a categorical dependent variable (''i.e.'' the class label). Logistic regression and probit regression are more similar to LDA than ANOVA ...

Kernel Trick

In machine learning, kernel machines are a class of algorithms for pattern analysis, whose best known member is the support-vector machine (SVM). These methods involve using linear classifiers to solve nonlinear problems. The general task of pattern analysis is to find and study general types of relations (for example clusters, rankings, principal components, correlations, classifications) in datasets. For many algorithms that solve these tasks, the data in raw representation have to be explicitly transformed into feature vector representations via a user-specified ''feature map'': in contrast, kernel methods require only a user-specified ''kernel'', i.e., a similarity function over all pairs of data points computed using inner products. The feature map in kernel machines is infinite dimensional but only requires a finite dimensional matrix from user-input according to the representer theorem. Kernel machines are slow to compute for datasets larger than a couple of thousand ...
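The contrast between an explicit feature map and a kernel can be sketched with a deliberately small case. The example assumes the homogeneous degree-2 polynomial kernel on two-dimensional inputs, whose feature map is finite-dimensional (unlike the infinite-dimensional maps mentioned above); the function names are illustrative only.

```python
import math


def dot(u, v):
    """Ordinary inner product of two equal-length vectors."""
    return sum(ui * vi for ui, vi in zip(u, v))


def feature_map(x):
    """Explicit degree-2 feature map for a 2-D input (x1, x2)."""
    x1, x2 = x
    return (x1 * x1, math.sqrt(2) * x1 * x2, x2 * x2)


def poly_kernel(x, z):
    """Kernel k(x, z) = (x . z)^2, computed without building feature vectors."""
    return dot(x, z) ** 2


x, z = (1.0, 2.0), (3.0, 4.0)
via_kernel = poly_kernel(x, z)
via_features = dot(feature_map(x), feature_map(z))
```

Both routes give the same similarity value, but the kernel only needs the inner product in the original space. That is the property a kernel method relies on when the feature space is too large to construct explicitly.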

Maximum-margin Hyperplane

In geometry, the hyperplane separation theorem is a theorem about disjoint convex sets in ''n''-dimensional Euclidean space. There are several rather similar versions. In one version of the theorem, if both these sets are closed and at least one of them is compact, then there is a hyperplane in between them and even two parallel hyperplanes in between them separated by a gap. In another version, if both disjoint convex sets are open, then there is a hyperplane in between them, but not necessarily any gap. An axis which is orthogonal to a separating hyperplane is a separating axis, because the orthogonal projections of the convex bodies onto the axis are disjoint. The hyperplane separation theorem is due to Hermann Minkowski. The Hahn–Banach separation theorem generalizes the result to topological vector spaces. A related result is the supporting hyperplane t ...

Support Vector Machine

In machine learning, support vector machines (SVMs, also support vector networks) are supervised max-margin models with associated learning algorithms that analyze data for classification and regression analysis. Developed at AT&T Bell Laboratories, SVMs are one of the most studied models, being based on statistical learning frameworks of VC theory proposed by Vapnik (1982, 1995) and Chervonenkis (1974). In addition to performing linear classification, SVMs can efficiently perform non-linear classification using the ''kernel trick'', representing the data only through a set of pairwise similarity comparisons between the original data points using a kernel function, which transforms them into coordinates in a higher-dimensional feature space. Thus, SVMs use the kernel trick to implicitly map their inputs into high-dimensional feature spaces, where linear classification can be performed. Being max-margin models, SVMs are resilient to noisy data (e.g., misclassified examples). ...

Universal Approximation Theorem

In the mathematical theory of artificial neural networks, universal approximation theorems are theorems of the following form: Given a family of neural networks, for each function f from a certain function space, there exists a sequence of neural networks \phi_1, \phi_2, \dots from the family, such that \phi_n \to f according to some criterion. That is, the family of neural networks is dense in the function space. The most popular version states that feedforward networks with non-polynomial activation functions are dense in the space of continuous functions between two Euclidean spaces, with respect to the compact convergence topology. Universal approximation theorems are existence theorems: They simply state that there ''exists'' such a sequence \phi_1, \phi_2, \dots \to f, and do not provide any way to actually find such a sequence. They also do not guarantee any method, such as backpropagation, might actually find such a sequence. Any method for searching the space of neural ...

Euclidean Space

Euclidean space is the fundamental space of geometry, intended to represent physical space. Originally, in Euclid's ''Elements'', it was the three-dimensional space of Euclidean geometry, but in modern mathematics there are ''Euclidean spaces'' of any positive integer dimension ''n'', which are called Euclidean ''n''-spaces when one wants to specify their dimension. For ''n'' equal to one or two, they are commonly called respectively Euclidean lines and Euclidean planes. The qualifier "Euclidean" is used to distinguish Euclidean spaces from other spaces that were later considered in physics and modern mathematics. Ancient Greek geometers introduced Euclidean space for modeling the physical space. Their work was collected by the ancient Greek mathematician Euclid in his ''Elements'', with the great innovation of ''proving'' all properties of the space as theorems, by starting from a few fundamental properties, called ''postulates'', which either were considered as evid ...

Compact Space

In mathematics, specifically general topology, compactness is a property that seeks to generalize the notion of a closed and bounded subset of Euclidean space. The idea is that a compact space has no "punctures" or "missing endpoints", i.e., it includes all ''limiting values'' of points. For example, the open interval (0,1) would not be compact because it excludes the limiting values of 0 and 1, whereas the closed interval [0,1] would be compact. Similarly, the space of rational numbers \mathbb{Q} is not compact, because it has infinitely many "punctures" corresponding to the irrational numbers, and the space of real numbers \mathbb{R} is not compact either, because it excludes the two limiting values +\infty and -\infty. However, the ''extended'' real number line ''would'' be compact, since it contains both infinities. There are many ways to make this heuristic notion precise. These ways usually agree in a metric space, but may not be equivalent in other topological spaces. One suc ...

Perceptron

In machine learning, the perceptron is an algorithm for supervised learning of binary classifiers. A binary classifier is a function that can decide whether or not an input, represented by a vector of numbers, belongs to some specific class. It is a type of linear classifier, i.e. a classification algorithm that makes its predictions based on a linear predictor function combining a set of weights with the feature vector. History: The artificial neuron network was invented in 1943 by Warren McCulloch and Walter Pitts in ''A Logical Calculus of the Ideas Immanent in Nervous Activity''. In 1957, Frank Rosenblatt was at the Cornell Aeronautical Laboratory. He simulated the perceptron on an IBM 704. Later, he obtained funding from the Information Systems Branch of the United States Office of Naval Research and the Rome Air Development Center, to build a custom- ...
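A minimal sketch of a perceptron as a linear binary classifier follows. The excerpt above describes only the linear predictor, so the mistake-driven update rule, the learning rate, the epoch count, and the AND-gate training data are all assumptions made for illustration.

```python
def predict(weights, bias, x):
    """Linear predictor: output 1 if w.x + b is positive, else 0."""
    activation = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if activation > 0 else 0


def train_perceptron(samples, epochs=20, lr=1.0):
    """Classic perceptron rule (assumed here): adjust weights only on mistakes."""
    n_features = len(samples[0][0])
    weights = [0.0] * n_features
    bias = 0.0
    for _ in range(epochs):
        for x, target in samples:
            error = target - predict(weights, bias, x)  # -1, 0, or +1
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias


# Hypothetical, linearly separable training set: logical AND.
and_gate = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_perceptron(and_gate)
predictions = [predict(weights, bias, x) for x, _ in and_gate]
```

Because the AND data are linearly separable, the updates stop after a few passes and the learned weights define a line that separates the single positive example from the rest.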