|

Database Refactoring

A database refactoring is a simple change to a database schema that improves its design while retaining both its behavioral and informational semantics. Database refactoring does not change the way data is interpreted or used and does not fix bugs or add new functionality. Every refactoring to a database leaves the system in a working state, thus not causing maintenance lags, provided the meaningful data exists in the production environment. A database refactoring is conceptually more difficult than a code refactoring; code refactorings only need to maintain behavioral semantics while database refactorings also must maintain informational semantics. A database schema is typically refactored for one of several reasons: # To develop the schema in an evolutionary manner in parallel with the evolutionary design of the rest of the system. # To fix design problems with an existing legacy database schema. Database refactorings are often motivated by the desire for database normalizat ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Database Schema

The database schema is the structure of a database described in a formal language supported typically by a relational database management system (RDBMS). The term "wikt:schema, schema" refers to the organization of data as a blueprint of how the database is constructed (divided into database tables in the case of relational databases). The formal definition of a database schema is a set of formulas (sentences) called integrity constraints imposed on a database. These integrity constraints ensure compatibility between parts of the schema. All constraints are expressible in the same language. A database can be considered a structure in realization of the database language. The states of a created conceptual schema are transformed into an explicit mapping, the database schema. This describes how real-world entities are Data modeling, modeled in the database. "A database schema specifies, based on the database administrator's knowledge of possible applications, the facts that can ent ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Software Bug

A software bug is a design defect ( bug) in computer software. A computer program with many or serious bugs may be described as ''buggy''. The effects of a software bug range from minor (such as a misspelled word in the user interface) to severe (such as frequent crashing). In 2002, a study commissioned by the US Department of Commerce's National Institute of Standards and Technology concluded that "software bugs, or errors, are so prevalent and so detrimental that they cost the US economy an estimated $59 billion annually, or about 0.6 percent of the gross domestic product". Since the 1950s, some computer systems have been designed to detect or auto-correct various software errors during operations. History Terminology ''Mistake metamorphism'' (from Greek ''meta'' = "change", ''morph'' = "form") refers to the evolution of a defect in the final stage of software deployment. Transformation of a ''mistake'' committed by an analyst in the early stages of the softw ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Code Refactoring

In computer programming and software design, code refactoring is the process of restructuring existing source code—changing the '' factoring''—without changing its external behavior. Refactoring is intended to improve the design, structure, and/or implementation of the software (its '' non-functional'' attributes), while preserving its functionality. Potential advantages of refactoring may include improved code readability and reduced complexity; these can improve the source code's maintainability and create a simpler, cleaner, or more expressive internal architecture or object model to improve extensibility. Another potential goal for refactoring is improved performance; software engineers face an ongoing challenge to write programs that perform faster or use less memory. Typically, refactoring applies a series of standardized basic ''micro-refactorings'', each of which is (usually) a tiny change in a computer program's source code that either preserves the behavior of ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Database Normalization

Database normalization is the process of structuring a relational database in accordance with a series of so-called '' normal forms'' in order to reduce data redundancy and improve data integrity. It was first proposed by British computer scientist Edgar F. Codd as part of his relational model. Normalization entails organizing the columns (attributes) and tables (relations) of a database to ensure that their dependencies are properly enforced by database integrity constraints. It is accomplished by applying some formal rules either by a process of ''synthesis'' (creating a new database design) or ''decomposition'' (improving an existing database design). Objectives A basic objective of the first normal form defined by Codd in 1970 was to permit data to be queried and manipulated using a "universal data sub-language" grounded in first-order logic. An example of such a language is SQL, though it is one that Codd regarded as seriously flawed. The objectives of normalization ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Database Testing

Database testing usually consists of a layered process, including the user interface (UI) layer, the business layer, the data access layer and the database itself. The UI layer deals with the interface design of the database, while the business layer includes databases supporting business strategies. Purposes Databases, the collection of interconnected files on a server, storing information, may not deal with the same ''type'' of data, i.e. databases may be heterogeneous. As a result, many kinds of implementation and integration errors may occur in large database systems, which negatively affect the system's performance, reliability, consistency and security. Thus, it is important to test in order to obtain a database system which satisfies the ACID properties (Atomicity, Consistency, Isolation, and Durability) of a database management system. One of the most critical layers is the data access layer, which deals with databases directly during the communication process. Dat ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Data Migration

Data migration is the process of selecting, preparing, extracting, and transforming data and permanently transferring it from one computer storage system to another. Additionally, the validation of migrated data for completeness and the decommissioning of legacy data storage are considered part of the entire data migration process. Data migration is a key consideration for any system implementation, upgrade, or consolidation, and it is typically performed in such a way as to be as automated as possible, freeing up human resources from tedious tasks. Data migration occurs for a variety of reasons, including server or storage equipment replacements, maintenance or upgrades, application migration, website consolidation, disaster recovery, and data center relocation. The standard phases , "nearly 40 percent of data migration projects were over time, over budget, or failed entirely." Thus, proper planning is critical for an effective data migration. While the specifics of a data mi ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Denormalization

Denormalization is a strategy used on a previously- normalized database to increase performance. In computing, denormalization is the process of trying to improve the read performance of a database, at the expense of losing some write performance, by adding redundant copies of data or by grouping data.S. K. Shin and G. L. SandersDenormalization strategies for data retrieval from data warehouses Decision Support Systems, 42(1):267-282, October 2006. It is often motivated by performance or scalability in relational database software needing to carry out very large numbers of read operations. Denormalization differs from the unnormalized form in that denormalization benefits can only be fully realized on a data model that is otherwise normalized. Implementation A normalized design will often "store" different but related pieces of information in separate logical tables (called relations). If these relations are stored physically as separate disk files, completing a database que ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Refactoring

In computer programming and software design, code refactoring is the process of restructuring existing source code—changing the '' factoring''—without changing its external behavior. Refactoring is intended to improve the design, structure, and/or implementation of the software (its '' non-functional'' attributes), while preserving its functionality. Potential advantages of refactoring may include improved code readability and reduced complexity; these can improve the source code's maintainability and create a simpler, cleaner, or more expressive internal architecture or object model to improve extensibility. Another potential goal for refactoring is improved performance; software engineers face an ongoing challenge to write programs that perform faster or use less memory. Typically, refactoring applies a series of standardized basic ''micro-refactorings'', each of which is (usually) a tiny change in a computer program's source code that either preserves the behavior of ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

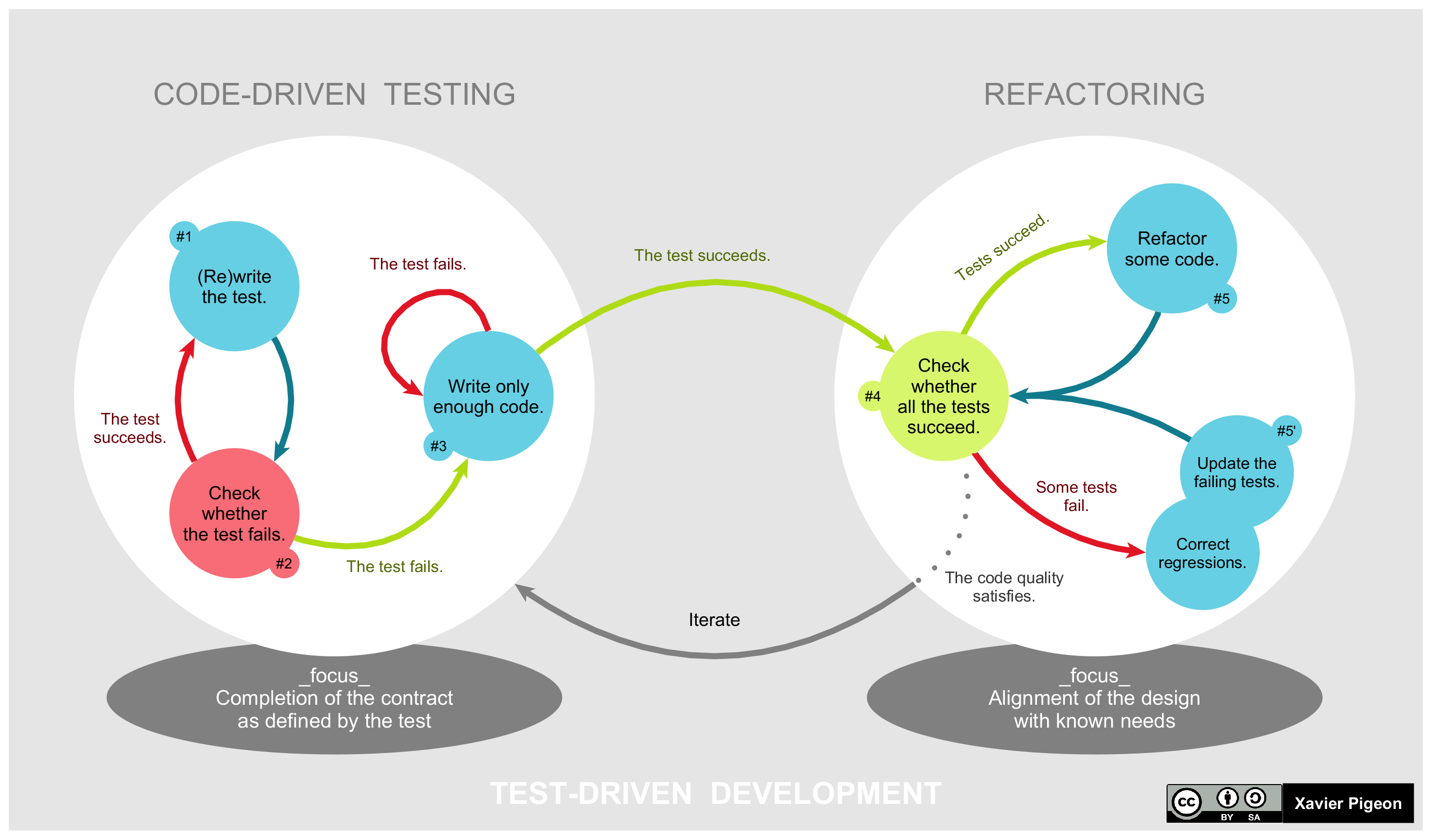

Test-driven Development

Test-driven development (TDD) is a way of writing source code, code that involves writing an test automation, automated unit testing, unit-level test case that fails, then writing just enough code to make the test pass, then refactoring both the test code and the production code, then repeating with another new test case. Alternative approaches to writing automated tests is to write all of the production code before starting on the test code or to write all of the test code before starting on the production code. With TsDD, both are written together, therefore shortening debugging time necessities. TDD is related to the test-first programming concepts of extreme programming, begun in 1999, but more recently has created more general interest in its own right.Newkirk, JW and Vorontsov, AA. ''Test-Driven Development in Microsoft .NET'', Microsoft Press, 2004. Programmers also apply the concept to improving and software bug, debugging legacy code developed with older techniques.Feat ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Unit Testing

Unit testing, component or module testing, is a form of software testing by which isolated source code is tested to validate expected behavior. Unit testing describes tests that are run at the unit-level to contrast testing at the Integration testing, integration or System testing, system level. History Unit testing, as a principle for testing separately smaller parts of large software systems, dates back to the early days of software engineering. In June 1956 at US Navy's Symposium on Advanced Programming Methods for Digital Computers, H.D. Benington presented the Semi-Automatic Ground Environment, SAGE project. It featured a specification-based approach where the coding phase was followed by "parameter testing" to validate component subprograms against their specification, followed then by an "assembly testing" for parts put together. In 1964, a similar approach is described for the software of the Project Mercury, Mercury project, where individual units developed by dif ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Extreme Programming

Extreme programming (XP) is a software development methodology intended to improve software quality and responsiveness to changing customer requirements. As a type of agile software development,"Human Centred Technology Workshop 2006 ", 2006, PDFHuman Centred Technology Workshop 2006 /ref> it advocates frequent releases in short development cycles, intended to improve productivity and introduce checkpoints at which new customer requirements can be adopted. Other elements of extreme programming include programming in pairs or doing extensive code review, unit testing of all code, not programming features until they are actually needed, a flat management structure, code simplicity and clarity, expecting changes in the customer's requirements as time passes and the problem is better understood, and frequent communication with the customer and among programmers. [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |